Abstract

In many coastal communities, the risks driven by storm surges are motivating substantial investments in flood risk management. The design of adaptive risk management strategies, however, hinges on the ability to detect future changes in storm surge statistics. Previous studies have used observations to identify changes in past storm surge statistics. Here, we focus on the simple and decision-relevant question: How fast can we learn from past and potential future storm surge observations about changes in future statistics? Using Observing System Simulation Experiments, we quantify the time required to detect changes in the probability of extreme storm surge events. We estimate low probabilities of detection when substantial but gradual changes to the 100-year storm surge occur. As a result, policy makers may underestimate considerable increases in storm surge risk over the typically long lifespans of major infrastructure projects.

1 Introduction

Hurricanes Katrina and Sandy recently caused thousands of deaths and hundreds of billions in property damages (Kunz et al. 2013). These storms highlight the difficulties in perceiving, estimating, and preparing for the potential danger of storm surges. Within major metropolitan regions, developers and planners are proposing, designing, and constructing costly and long-lived infrastructure to address storm surge risks (e.g., NYC Economic Development Corporation 2014). An improved understanding of future storm surge risks can inform decisions about risk management strategies. Previous studies using observations to identify changes in the frequency or magnitude of extreme storm surges and other climate-driven weather phenomena have noted the difficulties in detecting these changes (e.g., Menéndez and Woodworth 2010; Grinsted et al. 2012, 2013; Reich et al. 2014; Talke et al. 2014a; Stephenson et al. 2015; Huang et al. 2016; Vousdoukas et al. 2016). Here, we explore and quantify the time required to detect changes to extreme storm surges.

In the USA, tropical and extratropical cyclone storm surges cause most extreme weather damages (Harris 1963; Blake et al. 2007), and storm surge damages have been increasing (Blake et al. 2007; Smith and Katz 2013). Cyclone storm surges result from a combination of wind-driven transport over the ocean and rain over land. These, in turn, are related to sea level, storm frequency, intensity, size, and track (Harris 1963). The IPCC’s Climate Change 2014 Synthesis Report Summary for Policymakers (Pachauri et al. 2014) states: “Global mean sea level rise will continue during the 21st century, very likely at a faster rate than observed from 1971 to 2010.”

The impacts of other climate-related drivers of storm surge risk remain uncertain (Kunkel et al. 2013). Several factors complicate the detection of climate-driven changes in storm surges. For one, tide gauge data may not span the long time period that is needed for detection (Kunkel et al. 2013). In addition, current climate models face challenges in realistically resolving hurricanes (Knutson et al. 2008; Bender et al. 2010; Kunkel et al. 2013; Bacmeister et al. 2014). Nevertheless, many studies suggest that the global frequency of cyclones is likely to decrease (or stay the same), and the frequency and intensity of the most intense cyclones are likely to increase (Emanuel 1987; Beniston et al. 2007; Knutson et al. 2008; Bender et al. 2010; Reed et al. 2015; Little et al. 2015; Kossin 2017). Pachauri et al. (2014) state, “In urban areas climate change is projected to increase risks for people, assets, economies and ecosystems, including risks from heat stress, storms and extreme precipitation, inland and coastal flooding, landslides, air pollution, drought, water scarcity, sea level rise, and storm surges (very high confidence)”. Given these expected changes, researchers are analyzing how storm surge characteristics are changing over time and how they depend on climate variables (e.g., Fischbach 2010; Cheng et al. 2014; Vousdoukas et al. 2016).

Independent of these climate-related dependencies, researchers are separately using tide gauge observations to estimate trends in sea level and storm surge (Fischbach 2010; Hoffman et al. 2010; Maloney and Preston 2014; Kopp et al. 2015; Muis et al. 2016). Tide gauge observations provide a measurable characteristic of storms that correlates well with storm-related economic losses and can often provide a long-term consistent data source (Grinsted et al. 2012; Talke et al. 2014a, b). However, in the specific case of storm surges, it is unclear how quickly changes in storm surge statistics might be detected when including future observations.

Researchers have proposed (e.g., Lempert et al. 1996, 2012; Haasnoot et al. 2013), and policy makers are embracing, dynamic adaptive strategies to adjust risk management strategies as new information becomes available (e.g., NYC Economic Development Corporation 2014). The design of such adaptive strategies is complicated, for example, by the deep uncertainty surrounding potential changes in future storm surges, the time needed to plan and implement the often large required investments, and the often long lifetimes of surge protection infrastructure. The implementation of adaptive strategies often hinges on the detection of signposts to trigger a change in strategy (e.g., Lempert et al. 1996; Kwakkel et al. 2015).

In the USA, the 100-year flood level (also known as the “base flood” in FEMA 2015) is widely used as an indicator to inform the assessments of flooding risk and the need for risk mitigation (U.S. CFR 725 Executive Orders 11988 1988, 2015; Bellomo et al. 1999; FEMA 2015). The 100-year storm surge is defined as the surge level (also referred to as the return level) that will, on average in a statistically stationary system, be exceeded once every 100 years (the return period), with a 1% chance of being exceeded during a given year (the annual exceedance probability) (Gilleland and Katz 2011; Cooley 2013). The return level concept can be confusing in a nonstationary system (Cooley 2013). For the remainder of this article, we use 100-year storm surge to refer to the “effective return level” (as defined in Gilleland and Katz 2011). Specifically, this is the potentially time-dependent surge level expected to be exceeded with a 1% probability in a one-year period. In practice, “base flood” 100-year return levels are often expressed as a line (or threshold) with no uncertainty (e.g., U.S. CFR 725 Executive Orders 11988 1988, 2015; NYC Economic Development Corporation 2014; FEMA 2015; Kaplan et al. 2016). The Federal Emergency Management Agency periodically updates and publishes flood maps showing the base flood line based on historic data and computer models (FEMA 2015). Regions above this line may be interpreted as being safe, whereas regions below the line are considered at risk and subject to risk mitigation requirements (FEMA 2005). Furthermore, in the USA, risk mitigation strategies are often intended to withstand events up to the 100-year return level without consideration for more extreme, less probable events (FEMA 2005; Kaplan et al. 2016).
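To make the link between the return period and the annual exceedance probability concrete, the following minimal Python sketch computes the chance of at least one exceedance over a planning horizon. It assumes a stationary return level and statistically independent years; the function name is illustrative and not part of the original analysis.

```python
def prob_at_least_one_exceedance(annual_prob: float, years: int) -> float:
    """Chance of one or more exceedances in `years` independent years,
    assuming the annual exceedance probability does not change."""
    return 1.0 - (1.0 - annual_prob) ** years


# 1% annual exceedance probability (the 100-year level)
p_100yr = prob_at_least_one_exceedance(0.01, 100)
p_30yr = prob_at_least_one_exceedance(0.01, 30)
print(f"100-year horizon: {p_100yr:.3f}, 30-year horizon: {p_30yr:.3f}")
```

Under these assumptions, a stationary 100-year level has roughly a 63% chance of being exceeded at least once in 100 years, and roughly a 26% chance in 30 years.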

We adopt this familiar 100-year storm surge metric (without uncertainty) as a useful starting point. We then ask: how long would it take to detect a change in the 100-year storm surge? This pragmatic interpretation shifts uncertainty from the estimated 100-year surge levels to a probabilistic estimate of time required to detect changes in the 100-year storm surge reliably. By knowing the delay in detecting this signpost and the manner in which signpost metrics should behave over time, we can help inform the design of adaptive risk management strategies (e.g., Lempert et al. 2012; Kwakkel et al. 2015; Buchanan et al. 2016), such as those currently proposed for NYC (NYC Economic Development Corporation 2014). It is therefore important to know how long it will take to realize that risk levels have changed.

To quantify detection times, we use an Observing System Simulation Experiment (OSSE). Specifically, we generate and analyze simulated observations from OSSE nature states with prescribed changes to statistical parameters. Based on these observations, we calculate the frequency of detection over a range of detection intervals and explore how estimated 100-year storm surges change over those intervals.

Although the peak water level results from the combination of local sea level, celestial tide, and storm-driven surge, we focus here on the storm-driven surge. This focus is motivated by the deep uncertainty associated with estimates of future surges (Oddo et al. 2017) and because the uncertainties surrounding future surges can be the major driver of future flooding risks (Wong and Keller 2017).

2 Data, model, and methods

For illustrative and consistency purposes, we consider the tide gauge readings at The Battery in lower Manhattan (NOAA 2015). We remove celestial tides and local sea level rise and extract the annual block maximum reading from each consecutive one-year sequence of data (see supplementary materials). We next use extreme value theory (Fisher and Tippett 1928; Coles 2001) to statistically model the frequency and intensity of extreme, but rare, events. Specifically, we use a maximum likelihood estimate (Nelder and Mead 1965) for the parameters in the Generalized Extreme Value (GEV) distribution (Eq. 1). (See supplemental material for justification of using this approach.)
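The block-maximum step can be sketched as follows. This is a minimal illustration that assumes the tides and local sea level rise have already been removed and that blocks are calendar years; the record layout, function name, and sample values are hypothetical, and the actual processing is described in the supplementary materials.

```python
from collections import defaultdict


def annual_block_maxima(records):
    """Return {year: largest residual} from (year, residual_m) pairs.

    `records` is assumed to hold detided, detrended surge residuals in meters.
    """
    maxima = defaultdict(lambda: float("-inf"))
    for year, residual in records:
        maxima[year] = max(maxima[year], residual)
    return dict(sorted(maxima.items()))


# Made-up residuals, for illustration only
sample = [(2011, 0.4), (2011, 1.2), (2012, 0.9), (2012, 1.5), (2013, 0.7)]
abm = annual_block_maxima(sample)
print(abm)
```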

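The GEV probability density function (Eq. 1),

$$f(x) = \frac{1}{\sigma}\left[1 + \xi\left(\frac{x-\mu}{\sigma}\right)\right]^{-\frac{1}{\xi}-1} \exp\left\{-\left[1 + \xi\left(\frac{x-\mu}{\sigma}\right)\right]^{-\frac{1}{\xi}}\right\} \qquad (1)$$

for return level x (valid where 1 + ξ(x − μ)/σ > 0 and ξ ≠ 0), is defined by three parameters: location μ, scale σ, and shape ξ. The location parameter (μ) positions the distribution of block maxima storm surge heights along the surge axis. A change from μ to μ + ∆μ shifts every point in the distribution by ∆μ. Alternatively, the relationship between μ and f(x) can be expressed as:

$$f(x;\, \mu + \Delta\mu,\, \sigma,\, \xi) = f(x - \Delta\mu;\, \mu,\, \sigma,\, \xi) \qquad (2)$$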

The scale parameter (σ) describes the width of the distribution. The shape parameter (ξ) is associated with the skewness of the distribution. Changes in future cyclone frequency, storm size, intensity, tracks, or speeds may lead to changes in any of these parameters. For example, an increase in the frequency of storms generating all magnitudes of storm surges will shift μ (independent of the effect of local sea level, which has been removed) in accordance with Eq. (2). Similarly, nonuniform changes to storm characteristics could result in changes to σ or ξ.

We calculate the expected exceedance levels over any time period using the maximum likelihood parameter estimates. The parameters for The Battery tide gauge (μ₀ = 0.936 m, σ₀ = 0.206 m, and ξ₀ = 0.232) produce an estimated 100-year storm surge of 2.62 m above MHHW datum, approximately the storm surge observed during Hurricane Sandy (Hall and Sobel 2013). This 100-year surge (and the corresponding 95% confidence interval from 1.6 to 3.7 m) is consistent with other estimates of The Battery’s 100-year surge (n.b., they are not provided in the papers) (Talke et al. 2014b; Lopeman 2015). Our GEV parameters are broadly consistent with one pre-Sandy estimate (μ = 0.935 m, σ = 0.260 m, and ξ = 0.030) (Kirshen et al. 2007). Note that Sandy’s surge exceeded that study’s estimated 2.05 m 100-year surge, which, if included, would be expected to increase σ or ξ.
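For a GEV distribution with ξ ≠ 0, the level exceeded with annual probability p follows from inverting the cumulative distribution function: z_p = μ + (σ/ξ)[(−ln(1 − p))^(−ξ) − 1]. The short Python sketch below applies this standard formula to the rounded baseline parameters quoted above; the function name is illustrative.

```python
import math


def gev_return_level(mu, sigma, xi, annual_prob=0.01):
    """Level exceeded with probability `annual_prob` in one year (xi != 0)."""
    y = -math.log(1.0 - annual_prob)
    return mu + (sigma / xi) * (y ** (-xi) - 1.0)


# Rounded baseline parameters for The Battery, as quoted in the text
z100 = gev_return_level(mu=0.936, sigma=0.206, xi=0.232)
print(f"Estimated 100-year surge: {z100:.2f} m")
```

With the rounded parameters this gives about 2.63 m, close to the 2.62 m reported above; the small difference is presumably due to rounding of the published parameter values.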

GEV estimation methods often assume that time-invariant properties are generating the analyzed time series (Cooley 2013). This assumption, however, may be violated (van Dantzig 1956; Kunkel et al. 2013; Grinsted et al. 2013; Little et al. 2015; Huang et al. 2016). We hence explore the performance differences between stationary and nonstationary analyses.

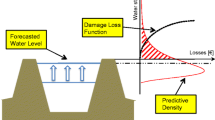

To impose changes in the 100-year surge, we can change μ, σ, or ξ (see Fig. 1). Changing σ, from 0.206 to 0.323, for example, results in a 1-m increase in the projected 100-year surge, increasing the probability for all annual maxima greater than ~ 1.5 m. Similarly, changing μ from 0.936 to 1.936 increases the projected 100-year surge by 1 m, increasing the probability for annual storm surge maxima greater than ~ 1.8 m. Visual inspection of Fig. 1 might suggest that the increase in μ is much worse (in terms of higher probabilities for surges greater than the current 100-year surge) than the increase in σ. Closer inspection, however, reveals that the reverse is true for very large storm surges. That is, the change in σ produces the higher percentage of surges greater than ~ 4.5 m, including surge levels that would exceed the 19 ft protection heights currently proposed for lower Manhattan (i.e., above the NAVD88 datum, corresponding to 5.6 m above MHHW datum) (NYC Economic Development Corporation 2014).

Fig. 1 The GEV distribution estimated using the historic data observed at The Battery from 1924 to 2014 (blue solid lines). The left panel shows the probability density (vertical axis) of highest annual block maximum surges from 0 to 8 m (horizontal axis). The right panel is an expanded view of the magenta region in the left panel, showing the surge range from 4 to 7 m. The green and red lines show the change to the pdf that results from changing the scale and location parameters, respectively, to achieve a 1-m increase in the 100-year storm surge. The large black tick at 2.6 m marks the height of the highest surge recorded at The Battery during super-storm Sandy
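The comparison between the scale-driven and location-driven changes can be checked numerically with the GEV exceedance probability. The sketch below is illustrative only: it uses the rounded parameter values quoted in the text, and the function and variable names are assumptions rather than part of the original analysis.

```python
import math


def gev_exceedance_prob(x, mu, sigma, xi):
    """P(annual maximum > x) for a GEV distribution with xi > 0."""
    t = 1.0 + xi * (x - mu) / sigma
    if t <= 0.0:  # x lies below the lower bound of the support
        return 1.0
    return 1.0 - math.exp(-(t ** (-1.0 / xi)))


XI = 0.232
scale_case = dict(mu=0.936, sigma=0.323, xi=XI)     # sigma increased
location_case = dict(mu=1.936, sigma=0.206, xi=XI)  # mu increased

for level in (3.0, 4.5, 5.6):
    p_scale = gev_exceedance_prob(level, **scale_case)
    p_loc = gev_exceedance_prob(level, **location_case)
    print(f"{level:.1f} m: scale change {p_scale:.5f}, location change {p_loc:.5f}")
```

At 3.0 m the location change gives the larger exceedance probability, whereas at 4.5 m and at the 5.6 m protection height the scale change does, in line with the discussion of Fig. 1.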

Changes to ξ imply that the behavior of the extreme tail is changing, but estimating ξ accurately is nontrivial (Coles 2001). Following the common practice in nonstationary GEV analysis (Coles 2001; Kharin and Zwiers 2005), we focus on the relatively simpler detection problem with a stationary ξ. A more detailed discussion of ξ is included in the supplement.

In this paper, we use “nature state” to mean a set of GEV parameters with prescribed changes over time, “nature run” to mean a simulation generated using a specific nature state, and “simulated observation” to mean an estimated 100-year surge calculated from a nature run. All nature states (see supplemental Table S1) use baseline parameters (μ₀, σ₀, and ξ₀). For each experiment, we generate 100,000 nature runs of a 200-year time series of annual block maximum surge levels.

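In experiment E0, all GEV parameters are held at the baseline values and the 100-year storm surge is stationary. In experiment E1, we generate simulated annual block maxima nature runs using a nature state with nonstationary σ and a stationary μ. For simplicity, we construct a linear time-dependent change in σ (σ(t) in Eq. 3) to create a linear increase in the 100-year surge, where:

$$\sigma(t) = \sigma_0 + a_\sigma t \qquad (3)$$

Here, t is the time in years since the start of the nature run and a_σ is the constant rate of change in σ that yields the prescribed increase in the 100-year surge.

Experiment E2 employs a nature state with a stationary σ and a linear increase in μ (μ(t) in Eq. 4) to create a linear change to the 100-year storm surge, where:

$$\mu(t) = \mu_0 + a_\mu t \qquad (4)$$

with a_μ the corresponding constant rate of change in μ.

The nature state for experiment E3 uses a nonstationary σ and μ, such that each parameter contributes half of the total increase in the 100-year storm surge (Eq. 5), to create a linear change to the 100-year storm surge, where:

$$\sigma(t) = \sigma_0 + \tfrac{1}{2} a_\sigma t, \qquad \mu(t) = \mu_0 + \tfrac{1}{2} a_\mu t \qquad (5)$$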

In experiment E4, we increase the 100-year surge by alternately increasing μ and σ in an 80-year cycle. For the first 40 years of the cycle, we increase μ while holding σ constant; then, for the next 40 years, we hold μ constant while increasing σ.
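A nature run for experiments E0–E3 can be sketched with inverse-CDF sampling from a GEV distribution whose parameters follow linear paths. This is a minimal sketch under stated assumptions: the linear parameterization is inferred from the description above, the slopes are derived from the rounded baseline parameters, and all function names are hypothetical. Experiment E4 is omitted because its rates are not specified here.

```python
import math
import random

MU0, SIGMA0, XI0 = 0.936, 0.206, 0.232  # rounded baseline values from the text


def return_level_factor(xi, annual_prob=0.01):
    """k such that z_p = mu + sigma * k for a GEV with shape xi != 0."""
    return ((-math.log(1.0 - annual_prob)) ** (-xi) - 1.0) / xi


def nature_state(experiment, year, rate_m_per_century=1.0):
    """(mu, sigma, xi) at `year`, assuming linear parameter changes."""
    dz = rate_m_per_century * year / 100.0  # target rise in the 100-year surge
    k = return_level_factor(XI0)
    if experiment == "E0":  # fully stationary
        return MU0, SIGMA0, XI0
    if experiment == "E1":  # sigma carries the whole change
        return MU0, SIGMA0 + dz / k, XI0
    if experiment == "E2":  # mu carries the whole change
        return MU0 + dz, SIGMA0, XI0
    if experiment == "E3":  # each parameter contributes half
        return MU0 + 0.5 * dz, SIGMA0 + 0.5 * dz / k, XI0
    raise ValueError(f"unsupported experiment: {experiment}")


def nature_run(experiment, n_years=200, rng=None):
    """Draw one series of annual block maxima by inverse-CDF sampling."""
    rng = rng or random.Random()
    series = []
    for year in range(1, n_years + 1):
        mu, sigma, xi = nature_state(experiment, year)
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)  # keep the log finite
        series.append(mu + (sigma / xi) * ((-math.log(u)) ** (-xi) - 1.0))
    return series


run = nature_run("E1", rng=random.Random(42))
print(len(run), nature_state("E1", 100))
```

Because the return level is linear in both μ and σ for a fixed ξ, linear parameter changes produce a linear change in the 100-year surge. With the rounded baseline values, the sketch gives σ ≈ 0.33 after a 1-m increase, close to the 0.323 quoted earlier.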

In all experiments, we use four analysis methods (A-D), each corresponding to a version of the GEV distribution, to generate simulated observations of estimated 100-year surge levels (Gilleland and Katz 2016). Method A assumes fully stationary parameters. Method B assumes a nonstationary σ with a stationary μ. Method C assumes a stationary σ and a nonstationary μ. Method D assumes that both σ and μ are nonstationary. For every nature run of every experiment, we use all four methods to estimate the effective 100-year return level for each multi-decadal interval (0–10, 0–20, 0–30 years, etc.). That is, we increase the length of the time series by adding an additional decade of nature run annual block maxima.
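The expanding-window procedure can be illustrated for the fully stationary case (an analogue of Method A). Note the substitution: instead of the maximum likelihood fit used in the paper, this sketch uses the closed-form probability-weighted-moment estimator of Hosking et al. (1985), chosen only because it needs no numerical optimizer. All function names are hypothetical, and the nonstationary methods B-D are not covered.

```python
import math
import random


def fit_gev_pwm(sample):
    """Stationary GEV fit by probability-weighted moments.

    Returns (mu, sigma, xi), where xi = -k in Hosking's notation.
    """
    x = sorted(sample)
    n = len(x)
    b0 = sum(x) / n
    b1 = sum(i * x[i] for i in range(n)) / (n * (n - 1))
    b2 = sum(i * (i - 1) * x[i] for i in range(n)) / (n * (n - 1) * (n - 2))
    c = (2 * b1 - b0) / (3 * b2 - b0) - math.log(2) / math.log(3)
    k = 7.8590 * c + 2.9554 * c * c  # Hosking's approximation for the shape
    if abs(k) < 1e-6:                # avoid 0/0 near the Gumbel limit
        k = 1e-6
    g = math.gamma(1 + k)
    sigma = (2 * b1 - b0) * k / (g * (1 - 2 ** (-k)))
    mu = b0 + sigma * (g - 1) / k
    return mu, sigma, -k


def return_level(mu, sigma, xi, annual_prob=0.01):
    """Level exceeded with probability `annual_prob` in one year (xi != 0)."""
    y = -math.log(1.0 - annual_prob)
    return mu + (sigma / xi) * (y ** (-xi) - 1.0)


def expanding_window_estimates(series, step=10):
    """Estimated 100-year level for the first 10, 20, ... values of `series`."""
    return [(n, return_level(*fit_gev_pwm(series[:n])))
            for n in range(step, len(series) + 1, step)]


def draw_gev(rng, mu, sigma, xi):
    """One GEV draw by inverse-CDF sampling."""
    u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
    return mu + (sigma / xi) * ((-math.log(u)) ** (-xi) - 1.0)


rng = random.Random(0)
stationary_series = [draw_gev(rng, 0.936, 0.206, 0.232) for _ in range(200)]
estimates = expanding_window_estimates(stationary_series)
for n_years, z in estimates[:3]:
    print(f"0-{n_years} years: estimated 100-year surge {z:.2f} m")
```

Estimates from the shortest windows are typically noisy, which is one reason a single early exceedance of a threshold need not persist as more decades are added.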

We use estimated 100-year storm surges from E0 to establish detection thresholds corresponding to the IPCC's conventional usage of likely, very likely, and extremely likely to characterize the likelihood of detecting a change in the estimated 100-year surge in simulated observations. For each detection analysis method (A-D), we calculate the 66, 90, and 95% quantile levels of the estimated 100-year surge across every simulation year, resulting in 12 threshold curves. Using the 95% threshold, for example, we can assign a 5% probability that a calculated 100-year surge is above the threshold by chance; thus, we are 95% confident that the observation reflects an actual increase in the 100-year storm surge. From each experiment’s nature runs, we use detection methods A-D to generate simulated observations for each multi-decadal interval. We declare a successful detection of an increased 100-year surge when the estimated 100-year surge for a multi-decadal interval, calculated with a given analysis method, exceeds the threshold for that same analysis method (see Fig. 2). We calculate the percentage of successful detections that occur for every case of each experiment (E1-E4), using each detection analysis method (A-D), at each multi-decadal interval and for each likelihood level. Here, we describe results from the 1 m per century case examined at the 95% (extremely likely) detection thresholds. Additional cases are shown in the supplement.

Fig. 2 Threshold estimates for 100-year storm surge events with detection examples. All four plots are based on one 200-year nature run from experiment E1 (increasing scale parameter) case 1 (1 m/century increase in the 100-year storm surge). Nature run annual block maximums (ABM) are shown by black dots. Each panel (a–d) displays thresholds, the estimated 100-year storm surge simulated observations, and detection times for each respective detection method (a, fully stationary; b, nonstationary scale, stationary location; c, stationary scale, nonstationary location; and d, nonstationary scale, nonstationary location). Detection thresholds for the respective detection methods are shown for extremely likely (cyan), very likely (green), and likely (magenta). Detection of a change to the 100-year storm surge at a given confidence occurs when the estimated 100-year storm surge simulated observation (calculated at each 0–10, 0–20, etc. decadal interval) exceeds the associated threshold. The decade of initial detection is indicated by arrows whose color corresponds to the associated confidence level
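The thresholding and detection-counting logic can be sketched as follows. This is a minimal illustration, not the original implementation: the data layout (one list of interval estimates per nature run), the linear-interpolation quantile rule, the function names, and the toy numbers are all assumptions made for the example.

```python
def empirical_quantile(values, q):
    """Linear-interpolation quantile of `values` for 0 <= q <= 1."""
    s = sorted(values)
    pos = q * (len(s) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (pos - lo) * (s[hi] - s[lo])


def detection_thresholds(e0_estimates, q):
    """Per-interval threshold: the q-quantile across stationary (E0) runs.

    `e0_estimates[r][i]` is the estimate from run r at interval i.
    """
    n_intervals = len(e0_estimates[0])
    return [empirical_quantile([run[i] for run in e0_estimates], q)
            for i in range(n_intervals)]


def detection_frequency(estimates, thresholds):
    """Fraction of runs whose estimate exceeds the threshold, per interval."""
    n_runs = len(estimates)
    return [sum(run[i] > thr for run in estimates) / n_runs
            for i, thr in enumerate(thresholds)]


# Toy numbers, made up for illustration: runs x intervals, in meters
e0 = [[2.0, 2.4], [2.2, 2.5], [2.4, 2.6], [2.6, 2.7], [2.8, 2.8]]
e1 = [[2.5, 3.0], [2.9, 3.1], [2.1, 2.6], [3.0, 3.3]]

thr = detection_thresholds(e0, 0.95)
freq = detection_frequency(e1, thr)
print(thr, freq)
```

In the full experiment, the same two steps would be repeated for each analysis method and each likelihood level, with thresholds always taken from the E0 estimates produced by the matching method.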

3 Results

We find, as expected, that longer observation periods typically increase the reliability of detection and reduce the frequency of false detections. Detection times are usually shorter and detections are more reliable when using nonstationary analysis methods (with key caveats discussed below). Estimated 100-year surge height simulated observations do not necessarily increase monotonically (Fig. 2). In many cases, early detections are reversed in subsequent decades. In the 1 m per century cases (Fig. 3), earliest median detections occur between 90 and 120 years. The bias and uncertainty associated with the 100-year storm surge estimated at the 100-year time frame is different for each combination of experiments (E1-E4) and detection method (A-D) (Fig. 4).

Fig. 3 Frequency of detection results (1 m increase per century case) for the four experiments comparing alternative detection analysis methods. The nonstationary scale parameter (E1), nonstationary location parameter (E2), nonstationary scale and location parameter (E3), and nonlinear nonstationary scale and location parameter (E4) experiment results are shown in panels a–d, respectively. The percentage of successful detections achieved for fully stationary analysis (method A, solid red), nonstationary scale parameter, σ, analysis (method B, dashed blue), nonstationary location, μ, analysis (method C, dashed green), and nonstationary σ and μ analysis (method D, dotted black) is plotted for each multi-decadal interval. The gray line shows the frequency of detection for fully stationary parameters

Fig. 4 Probability density of simulated 100-year surge observations for each detection method (A-D) for each experiment (E1-E4). The stationary (a), nonstationary σ (b), nonstationary location, μ (c), and nonstationary σ and μ (d) detection methods are displayed in panels a–d, respectively. For each method, the probability density of 100-year surge simulated observations for nonstationary σ (E1, blue), nonstationary location, μ (E2, cyan), nonstationary σ and μ (E3, magenta), and nonlinear nonstationary σ and μ (E4, orange) is plotted for 0–50 and 0–100 decadal intervals. Initial, 50-year, and 100-year nature state 100-year surge levels are indicated by gray dashed, dark gray dotted, and solid black vertical lines, respectively

In experiment E0, where we test our observation systems against a static nature state with a constant 100-year surge and fully stationary GEV parameters, all detection methods recover a detection rate equal to one minus the likelihood level, consistent with the null hypothesis of stationary storm surges.

We next consider experiment E1, where the generating function uses a nonstationary σ and a stationary μ (Fig. 3a). Using the stationary analysis method (A), detection of change to the 100-year surge is quite slow. After 100 years of additional observations, method A only detects (at the 95% likelihood level) that the 100-year surge has increased about 25% of the time. Adding a nonstationary μ (C) to our detection model results in minor improvements to detection times. As perhaps expected, switching to a nonstationary σ with either a stationary (B) or a nonstationary (D) μ detection model substantially decreases detection times.

In experiment E2, with a stationary σ and a nonstationary μ (Fig. 3b), method A produces a counterintuitive result. Detection frequencies are initially lower (less than 5% for the very likely scenario at 50 years) than detection frequencies associated with a static nature state (indicating fewer detections than that of our fully stationary case used for testing the null hypothesis). This occurs when the best fit of the GEV equation to the nature runs results in a lower σ or ξ, which reduces the 100-year storm surge. This effect is even more pronounced for method B. At the likely level (see supplemental for additional figures and discussion) and after 50 years, detection of the increasing 100-year surge occurs about 20% of the time using method B, but false detection of a decrease in the 100-year surge occurs approximately 40% of the time, an example of negative learning (O’Neill et al. 2006; Oppenheimer et al. 2008). The earliest and most reliable detections are achieved when using a stationary σ and nonstationary μ (C). Using a fully nonstationary analysis (D) slightly degrades the reliability of detection.

In experiments E3 and E4, method D provides the best approach to detect changes in the 100-year events in these particular nature states, where the linear increase in 100-year surge is driven by linear or nonlinear changes to σ and μ (Fig. 3c, d). In all experiments, we calculate how frequently we predict a statistically significant increase in the risk of the 100-year surge and are able to identify the appropriate method for detection based on the original generating function.

The supplement contains additional figures for 66, 90, and 95% likelihood and 0.5, 1.5, and 2.0 m/century rates. We have not considered the uncertainty of our initial 100-year surge estimate.

Long detection times may pose problems for decision-makers who would assume the availability of clear signposts to inform adaptive risk management strategies. As an alternative to analyzing detection times, we can consider how estimated 100-year surge levels evolve over time (Fig. 4). A desired characteristic of detection methods is that additional observations will cause estimates to converge towards the actual 100-year surge level. With method A, this does not occur. Longer observation timeframes increase the tendency to underestimate the 100-year storm surge. By using a fully stationary analysis for a nonstationary case, we identify an increasing bias with more observations for all our experiments. Nonstationary methods B-D converge towards the 100-year storm surge for experiments E1–E3, though at different rates. Additionally, the distribution of estimated storm surges differs for each combination of experiment and method. In experiment E4 (generated from nonlinear changes to σ and μ), none of the detection methods converge to the actual 100-year surge over the 200-year simulated timespans. This evolution in bias is discussed in the supplement and illustrated in Fig. S4.

4 Discussion

The risks of extreme events in the USA are often summarized using a single 100-year return level (FEMA 2005, 2015). This metric is often communicated by drawing a line on a map, without explicit estimates of the uncertainties (FEMA 2005, 2015). Other studies, while not directly addressing potential changes to the 100-year storm surge, suggest that large increases in the 100-year surge, of the magnitudes considered in this study, may be possible at The Battery (Coch 1994; Scileppi and Donnelly 2007; Emanuel and Ravela 2013; Talke et al. 2014b; Reed et al. 2015). When realized through a change to the location, scale, or shape parameter (or combinations of the three), changes to the 100-year surge can drive considerable changes in flooding risks.

Our analysis shows that for the considered OSSE nature states, when changes in the 100-year surge are caused by a changing μ, detection occurs earlier (on average) compared to the same shift in the 100-year surge caused by a changing σ. Moreover, the detectability of changes to the 100-year surge depends on the detection analysis method used, where the best results are obtained when the detection method matches what is actually occurring (i.e., as used in the nature state parameter change mechanisms). In considering experiment E2–E4 results, we find that differences between the nature state and detection method can result in fewer detections than what is expected when the 100-year surge is not increasing (our null hypothesis cases). Additionally, we find that the distribution of estimated 100-year surge heights resulting from a prescribed change in the nature state depends upon both the analysis method and how the nature state statistical parameters are changing.

In our OSSE experiments E1–E3, we consider only nature states with linear changes to the 100-year surge driven by linear changes to σ or μ, excluding the possibility of a nonstationary ξ; in E4, we consider one simple set of nonlinear changes to σ and μ. In the real world, actual physical phenomena may drive any combination of nonstationary GEV parameter forcings, introducing further sources of uncertainty into both the time required for detection of any change to the 100-year surge and our future estimates of the 100-year surge.

In stepping from our OSSE to the real world, expected changes to extreme weather events, including tropical and extratropical cyclones, could lead to alterations in storm frequency, intensity, size, or track (Emanuel 1987; Beniston et al. 2007; Knutson et al. 2008; Bender et al. 2010; Reed et al. 2015; Little et al. 2015; Kossin 2017). These may, in turn, drive nonlinear changes to any of the GEV parameters. As discussed above, a mismatch between the underlying phenomena and the analysis method can impact the accuracy of analysis or result in negative learning. In analyzing real world observations, analysts rarely have the luxury of knowing how reality, in terms of these underlying parameters, is changing. Therefore, it is easy to imagine scenarios where long-term nonlinear trends in storm characteristics drive such mismatches between reality and the chosen statistical model for the detection. This can lead to overconfident or biased projections of future storm surge risk that can result in poor decisions.

Various strategies have been proposed to select and manage policies and infrastructure investments required to manage storm surge risk (e.g., van Dantzig 1956; Kok de and Hoekstra 2008; Linquiti and Vonortas 2012). In addition, it has long been known that storm surge exceedance probabilities and associated risk may not be constant over time (e.g., van Dantzig 1956; Lempert et al. 2012). Hence, it is important to learn from experience and adapt flood prevention strategies. An often used strategy is to set dike heights to the return level for a prescribed annual exceedance probability (e.g., one in 100 years in the USA, or longer return periods in the Netherlands) as estimated at the time of design with an additional fixed safety margin (e.g., FEMA 2005; Kok de and Hoekstra 2008; Ligtvoet et al. 2012; Zevenbergen et al. 2013). Older strategies rely on resetting maximum dike heights based on new maximum observations at some time interval (Kok de and Hoekstra 2008). These strategies can result in similar overtopping and overall economic performance (Kok de and Hoekstra 2008; Linquiti and Vonortas 2012), but strategy performance can depend on the ability to learn from observations and to adapt strategies over time (Linquiti and Vonortas 2012). The longer it takes to learn about increasing risk, the more delayed adaptation responses may be, which could result in unanticipated risk increases.

When changes to risk mitigation strategies (such as NYC proposals to implement adaptive risk management strategies) are considered, the estimated 100-year storm surge is often used as a line-in-the-sand demarcation of risk, sometimes with a freeboard allowance to incorporate additional uncertainty (FEMA 2005, 2015; NYC Economic Development Corporation 2014; Kaplan et al. 2016). For a given set of GEV parameters, the statistical uncertainty surrounding return level estimates can also be estimated and can be quite large (e.g., Coles 2001). This estimate of uncertainty, however, often does not account for the increased uncertainty described here, introduced by both statistical mechanisms driving changes to the 100-year storm surge and mismatches between the detection model and reality. Stated another way, neglecting mismatches between reality and our detection model may lead us to underestimate the uncertainty in both the actual level of our 100-year storm surge estimates and the time required to notice a change to that level.

Developing public consensus on the need for reducing storm surge risk can be a slow process. Even after consensus is reached, evaluating options, designing strategies, establishing public financing, planning, and implementing major construction projects add delays between identification of the need for defenses and their implementation. Adopting an adaptive risk management or dynamic adaptive policy pathway can substantially reduce initial investment cost, potentially reducing the lag time associated with subsequently implementing defensive strategies within a previously agreed framework (Lempert et al. 1996; Kwakkel et al. 2015). The success of these strategies, however, depends on the detection of signposts signaling the need to adapt or switch policies (Kwakkel et al. 2015). When considering storm surge risk management strategies, failing to account for long detection time frames and the potential for contra-indicating results and negative learning can degrade the performance of adaptive strategies.

At the start of this paper, we posed the simple question: How fast can we learn from past and potential future storm surge observations about potential changes? The answer is simple. It can take a long time. Quantitatively, however, the answer is more complicated. We show the answer (in Figs. 2, 3, and 4) for a very limited set of changing parameters, at a few likelihood levels and for a nature state where we prescribe statistical parameters that we selected for generating the changes. The additional uncertainty associated with any particular combination of these circumstances may not be captured in traditional extreme value analyses, thus potentially leading to underestimated uncertainty. The additional uncertainty for any single set of changing parameters can be probabilistically estimated using the methods outlined in this paper and detailed in the supplementary materials. In the real world, analysts and decision-makers often do not have this luxury, and the problem can be more difficult. Nevertheless, decision analyses may be biased if they neglect the possibility of overly confident estimates of future risk that could occur if the additional structural uncertainties outlined here are not considered. Failing to do so may lead to poor adaptive management strategies and, when new protective strategies are implemented, a failure to meet desired protection levels.

References

Bacmeister JT, Wehner MF, Neale RB et al (2014) Exploratory high-resolution climate simulations using the community atmosphere model (CAM). J Clim 27:3073–3099. https://doi.org/10.1175/JCLI-D-13-00387.1

Bellomo D, Pajak, Sparks J (1999) Coastal flood hazards and the national flood insurance program. Journal of Coastal Research, Special Issue No. 28, Coastal Erosion Mapping and Management: 21–26. http://www.jstor.org/stable/25736181

Bender MA, Knutson TR, Tuleya RE et al (2010) Modeled impact of anthropogenic warming on the frequency of intense Atlantic hurricanes. Science 327:454–458. https://doi.org/10.1126/science.1180568

Beniston M, Stephenson DB, Christensen OB et al (2007) Future extreme events in European climate: an exploration of regional climate model projections. Clim Chang 81:71–95. https://doi.org/10.1007/s10584-006-9226-z

Blake ES, Landsea C, Gibney EJ (2007) The deadliest, costliest, and most intense United States tropical cyclones from 1851 to 2010 (and other frequently requested hurricane facts). NOAA/National Weather Service, National Centers for Environmental Prediction, National Hurricane Center. http://www.nhc.noaa.gov/pdf/NWS-TPC-5.pdf

Buchanan MK, Kopp RE, Oppenheimer M, Tebaldi C (2016) Allowances for evolving coastal flood risk under uncertain local sea-level rise. Clim Chang 137:347–362. https://doi.org/10.1007/s10584-016-1664-7

Cheng L, AghaKouchak A, Gilleland E, Katz RW (2014) Non-stationary extreme value analysis in a changing climate. Clim Chang 127:353–369. https://doi.org/10.1007/s10584-014-1254-5

Coch NK (1994) Geologic effects of hurricanes. Geomorphology 10:37–63. https://doi.org/10.1016/0169-555X(94)90007-8

Coles S (2001) An introduction to statistical modeling of extreme values. Springer series in statistics, Springer-Verlag, London

Cooley D (2013) Return periods and return levels under climate change. In: AghaKouchak A, Easterling D, Hsu K, Schubert S, Sorooshian S (eds) Extremes in a changing climate. Water science and technology library, vol 65. Springer, Dordrecht, 97–114. https://link.springer.com/chapter/10.1007%2F978-94-007-4479-0_4

Emanuel KA (1987) The dependence of hurricane intensity on climate. Nature 326:483–485

Emanuel KA, Ravela S (2013) Synthetic storm simulation for wind risk assessment. Prot N Y CITY 15

FEMA (2005) FEMA 480 National Flood Insurance Program Floodplain Management Requirements—a study guide and desk reference for local officials. Federal Emergency Management Agency. https://www.fema.gov/media-library-data/1481032638839-48ec3cc10cf62a791ab44ecc0d49006e/FEMA_480_Complete_reduced_v7.pdf

FEMA (2015) Guidelines for Implementing Executive Order 11988, Floodplain Management, and Executive Order 13690, Establishing a federal flood risk management standard and a process for further soliciting and considering stakeholder input. Federal Emergency Management Agency. https://www.fema.gov/media-library-data/1444319451483-f7096df2da6db2adfb37a1595a9a5d36/FINAL-Implementing-Guidelines-for-EO11988-13690_08Oct15_508.pdf

Fischbach JR (2010) Managing New Orleans flood risk in an uncertain future using non-structural risk mitigation, Pardee RAND Graduate School, doctoral thesis. The RAND Corporation. https://www.rand.org/content/dam/rand/pubs/rgs_dissertations/RGSD300/RGSD315/Rand_RGSD315.pdf

Fisher RA, Tippett LHC (1928) Limiting forms of the frequency distribution of the largest or smallest member of a sample. Math Proc Camb Philos Soc 24:180. https://doi.org/10.1017/S0305004100015681

Gilleland E, Katz RW (2011) New software to analyze how extremes change over time. EOS Trans Am Geophys Union 92:13–14. https://doi.org/10.1029/2011EO020001

Gilleland E, Katz RW (2016) extRemes 2.0: an extreme value analysis package in R. J Stat Softw 72:1–39. https://doi.org/10.18637/jss.v072.i08

Grinsted A, Moore JC, Jevrejeva S (2012) Homogeneous record of Atlantic hurricane surge threat since 1923. Proc Natl Acad Sci 109:19601–19605. https://doi.org/10.1073/pnas.1209542109

Grinsted A, Moore JC, Jevrejeva S (2013) Projected Atlantic hurricane surge threat from rising temperatures. Proc Natl Acad Sci 110:5369–5373. https://doi.org/10.1073/pnas.1209980110

Haasnoot M, Kwakkel JH, Walker WE, ter Maat J (2013) Dynamic adaptive policy pathways: a method for crafting robust decisions for a deeply uncertain world. Glob Environ Change 23:485–498. https://doi.org/10.1016/j.gloenvcha.2012.12.006

Hall TM, Sobel AH (2013) On the impact angle of Hurricane Sandy’s New Jersey landfall. Geophys Res Lett 40:2312–2315. https://doi.org/10.1002/grl.50395

Harris DL (1963) Characteristics of the Hurricane Storm Surge, Technical Paper No. 48, US Department of Commerce, Weather Bureau. www.csc.noaa.gov/hes/images/pdf/Characteristics_Storm_Surge.pdf

Hoffman RN, Dailey P, Hopsch S et al (2010) An estimate of increases in storm surge risk to property from sea level rise in the first half of the twenty-first century. Weather Clim Soc 2:271–293. https://doi.org/10.1175/2010WCAS1050.1

Huang WK, Stein ML, McInerney DJ et al (2016) Estimating changes in temperature extremes from millennial-scale climate simulations using generalized extreme value (GEV) distributions. Adv Stat Climatol Meteorol Oceanogr 2:79–103. https://doi.org/10.5194/ascmo-2-79-2016

Kaplan MB, Campo M, Auermuller L, Herb J (2016) Assessing New Jersey’s exposure to sea-level rise and coastal storms: a companion report to the New Jersey climate adaptation alliance science and technical advisory panel report, Rutgers University. https://doi.org/10.7282/T3765HK2

Kharin VV, Zwiers FW (2005) Estimating extremes in transient climate change simulations. J Clim 18:1156–1173

Kirshen P, Watson C, Douglas E et al (2007) Coastal flooding in the Northeastern United States due to climate change. Mitig Adapt Strateg Glob Change 13:437–451. https://doi.org/10.1007/s11027-007-9130-5

Knutson TR, Sirutis JJ, Garner ST et al (2008) Simulated reduction in Atlantic hurricane frequency under twenty-first-century warming conditions. Nat Geosci 1:359–364. https://doi.org/10.1038/ngeo202

Kok de J, Hoekstra AY (2008) Living with peak discharge uncertainty: the self-learning dike, Proceedings international Congress on Environmental Modeling and Software. http://doc.utwente.nl/61135/1/Kok08living.pdf

Kopp RE, Horton BP, Kemp AC, Tebaldi C (2015) Past and future sea-level rise along the coast of North Carolina. Clim Chang. https://doi.org/10.1007/s10584-015-1451-x

Kossin JP (2017) Hurricane intensification along United States coast suppressed during active hurricane periods. Nature 541:390–393

Kunkel KE, Karl TR, Brooks H et al (2013) Monitoring and understanding trends in extreme storms: state of knowledge. Bull Am Meteorol Soc 94:499–514. https://doi.org/10.1175/BAMS-D-11-00262.1

Kunz M, Mühr B, Kunz-Plapp T et al (2013) Investigation of superstorm Sandy 2012 in a multi-disciplinary approach. Nat Hazards Earth Syst Sci 13:2579–2598. https://doi.org/10.5194/nhess-13-2579-2013

Kwakkel JH, Haasnoot M, Walker WE (2015) Developing dynamic adaptive policy pathways: a computer-assisted approach for developing adaptive strategies for a deeply uncertain world. Clim Chang 132:373–386. https://doi.org/10.1007/s10584-014-1210-4

Lempert RJ, Schlesinger ME, Bankes SC (1996) When we don’t know the costs or the benefits: adaptive strategies for abating climate change. Clim Chang 33:235–274

Lempert RJ, Sriver RL, Keller K (2012) Characterizing uncertain sea level rise projections to support infrastructure investment decisions

Ligtvoet W, Franken R, Pieterse N et al (2012) Climate adaptation in the Dutch delta, strategic options for a climate-proof development of the Netherlands. PBL Netherlands Environmental Assessment Agency. http://www.pbl.nl/sites/default/files/cms/publicaties/PBL-2012-Climate-Adaptation-in-the-Dutch-Delt-500193002.pdf

Linquiti P, Vonortas N (2012) The value of flexibility in adapting to climate change: a real options analysis of investments in coastal defense. Clim Change Econ 3:1250008. https://doi.org/10.1142/S201000781250008X

Little CM, Horton RM, Kopp RE et al (2015) Joint projections of US East Coast sea level and storm surge. Nat Clim Chang 5:1114–1120

Lopeman M (2015) Extreme storm surge hazard estimation and windstorm vulnerability assessment for quantitative risk analysis. Columbia University. http://hdl.handle.net/10590/3511

Maloney MC, Preston BL (2014) A geospatial dataset for U.S. hurricane storm surge and sea-level rise vulnerability: development and case study applications. Clim Risk Manag 2:26–41. https://doi.org/10.1016/j.crm.2014.02.004

Menéndez M, Woodworth PL (2010) Changes in extreme high water levels based on a quasi-global tide-gauge data set. J Geophys Res. https://doi.org/10.1029/2009JC005997

Muis S, Verlaan M, Winsemius HC et al (2016) A global reanalysis of storm surges and extreme sea levels. Nat Commun 7:11969. https://doi.org/10.1038/ncomms11969

Nelder JA, Mead R (1965) A simplex method for function minimization. Comput J 7:308–313

NOAA (2015) Tides and Currents station The Battery, NY - Station ID: 8518750. NOAA. https://tidesandcurrents.noaa.gov/stationhome.html?id=8518750. Accessed 9 Oct 2014

NYC Economic Development Corporation (2014) Southern Manhattan coastal protection study: evaluating the feasibility of a multi-purpose levee (MPL). NYC Economic Development Corporation. https://www.nycedc.com/sites/default/files/filemanager/Projects/Seaport_City/Southern_Manhattan_Coastal_Protection_Study_-_Evaluating_the_Feasibility_of_a_Multi-Purpose_Levee.pdf

O’Neill BC, Crutzen P, Grübler A et al (2006) Learning and climate change. Clim Policy 6:585–589. https://doi.org/10.1080/14693062.2006.9685623

Oddo PC et al (2017) Deep uncertainties in sea-level rise and storm surge projections: implications for coastal flood risk management. Accepted for publication in Risk Analysis. Preprint at: https://arxiv.org/pdf/1705.10585.pdf

Oppenheimer M, O’Neill BC, Webster M (2008) Negative learning. Clim Chang 89:155–172. https://doi.org/10.1007/s10584-008-9405-1

Pachauri RK, Allen MR, Barros VR et al (2014) Climate change 2014: synthesis report. Contribution of Working Groups I, II and III to the fifth assessment report of the Intergovernmental Panel on Climate Change. IPCC, Geneva

Reed AJ, Mann ME, Emanuel KA et al (2015) Increased threat of tropical cyclones and coastal flooding to New York City during the anthropogenic era. Proc Natl Acad Sci 112:12610–12615. https://doi.org/10.1073/pnas.1513127112

Reich BJ, Shaby BA, Cooley D (2014) A hierarchical model for serially-dependent extremes: a study of heat waves in the western US. J Agric Biol Environ Stat 19:119–135. https://doi.org/10.1007/s13253-013-0161-y

Scileppi E, Donnelly JP (2007) Sedimentary evidence of hurricane strikes in western Long Island, New York. Geochem Geophys Geosystems 8. https://doi.org/10.1029/2006GC001463

Smith AB, Katz RW (2013) US billion-dollar weather and climate disasters: data sources, trends, accuracy and biases. Nat Hazards 67:387–410. https://doi.org/10.1007/s11069-013-0566-5

Stephenson AG, Shaby BA, Reich BJ, Sullivan AL (2015) Estimating spatially varying severity thresholds of a forest fire danger rating system using max-stable extreme-event modeling. J Appl Meteorol Climatol 54:395–407

Talke SA, Orton P, Jay DA (2014a) Increasing storm tides in New York Harbor, 1844-2013. Geophys Res Lett 41:3149–3155. https://doi.org/10.1002/2014GL059574

Talke SA, Orton P, Jay DA (2014b) Increasing storm tides in New York Harbor, 1844-2013, auxiliary material. Geophys Res Lett 41:3149. https://seaandskyny.files.wordpress.com/2014/05/talke_etal_grlinpress_auxiliarymaterial.pdf

U.S. CFR 725 Executive Orders 11988 (1988) 18 CFR 725—implementation of executive orders 11988, floodplain management and 11990, protection of wetlands. Reprinted in title number 42 FR 26951, 3 CFR, 1977 Comp., p 117. https://www.archives.gov/federal-register/codification/executive-order/11988.html

U.S. CFR 725 Executive Orders 11988 (2015) 18 CFR 725—executive orders 11988, establishing a federal flood risk management standard and a process for further soliciting and considering stakeholder input 42 FR 26951, 18 CFR 725, 2015 Comp., p 92. https://www.gpo.gov/fdsys/pkg/CFR-2015-title18-vol2/pdf/CFR-2015-title18-vol2-sec725-0.pdf

van Dantzig D (1956) Economic decision problems for flood prevention. Econometrica 24:276–287

Vousdoukas MI, Voukouvalas E, Annunziato A et al (2016) Projections of extreme storm surge levels along Europe. Clim Dyn 47:3171–3190. https://doi.org/10.1007/s00382-016-3019-5

Wong TE, Keller K (2017) Deep uncertainty surrounding coastal flood risk projections: a case study for New Orleans. Accepted for publication in Earth’s Future. Preprint at: https://arxiv.org/abs/1705.07722

Zevenbergen C, van Herk S, Rijke J et al (2013) Taming global flood disasters. Lessons learned from Dutch experience. Nat Hazards 65:1217–1225. https://doi.org/10.1007/s11069-012-0439-3

Acknowledgements

We thank M. Tingley, M. Haran, B. Lee, G. Garner, and R. Lempert for useful discussions. This research was partially supported by the National Science Foundation (NSF) through the Network for Sustainable Climate Risk Management (SCRiM) under NSF cooperative agreement GEO-1240507 and the Penn State Center for Climate Risk Management. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the NSF.

Code and data availability

The data and code are available at www.datacommons.psu.edu.

Electronic supplementary material

ESM 1

(DOCX 1254 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Ceres, R.L., Forest, C.E. & Keller, K. Understanding the detectability of potential changes to the 100-year peak storm surge. Climatic Change 145, 221–235 (2017). https://doi.org/10.1007/s10584-017-2075-0

DOI: https://doi.org/10.1007/s10584-017-2075-0