Abstract

The International Academy of Manual/Musculoskeletal Medicine (IAMMM) has completely revised the protocol for reproducibility studies of diagnostic procedures in Manual/Musculoskeletal Medicine (M/M Medicine). The protocol was and is aimed at practitioners in the field of M/M Medicine. This IAMMM protocol can be used in a very practical way and makes it feasible to perform reproducibility studies in private practices and clinics for M/M Medicine with two or more physicians, as well as by the educational boards of the societies of M/M Medicine. The protocol provides practical solutions for sample size calculations in reproducibility studies using kappa statistics. Step by step, many different statistical aspects of reproducibility studies are explained, resulting in a very structured protocol format for performing a reproducibility study with the overall agreement and the kappa value as statistical outcomes.

Summary (translated from the German)

The International Academy of Manual/Musculoskeletal Medicine (IAMMM) has completely revised the protocol for the reproducibility of diagnostic procedures in Manual Medicine. The IAMMM protocol was and is intended in particular for practitioners in the field of Manual Medicine. It can be used in a very application-oriented way and makes it possible to carry out reproducibility studies equally well in private practices and clinics with two or more physicians and by the boards of a society for Manual Medicine. The protocol offers practical solutions for calculating the sample size in reproducibility studies with kappa statistics. Step by step, a large number of statistical aspects of reproducibility studies are explained, resulting in a very structured protocol format for carrying out a reproducibility study using the overall agreement and the kappa value as statistical outcomes.

Preface to the 2019 edition of the reproducibility protocol

The last completely revised edition of this protocol was published by the International Academy of Manual/Musculoskeletal Medicine (IAMMM) in 2012. In the meantime, much experience has been gained with the daily use of this protocol. It has stimulated scientists and practitioners in our field of Manual/Musculoskeletal Medicine (M/M Medicine) to perform reproducibility studies according to the format of this protocol.

Based on these experiences and the emergence of new data from research in this field, the IAMMM thought it necessary to completely rewrite the last edition of 2012.

In this new IAMMM protocol, essential changes have been made to the different periods of the protocol format. The direct reason for these changes is that sample size calculations based on statistical power are nowadays advised for estimating study sample sizes. Sample size calculations for studies using kappa coefficients face several difficulties. The kappa coefficient of a diagnostic procedure is not an absolute measure as such; its value depends on many other factors. As a consequence, the same kappa coefficient can correspond to different levels of overall agreement and different prevalences of the index condition. Furthermore, in a reproducibility study, the kappa coefficient relates only to diagnostic procedures judged positive. In contrast, the overall agreement is an absolute measure and concerns diagnostic procedures judged positive as well as negative, which better reflects the daily reality of a clinician. Therefore, in the new protocol we focus in the first instance on calculating a sample size for the overall agreement.

As a consequence, the training period has been extended with a pilot study to evaluate the standardisation of the diagnostic procedure and the overall agreement period has been removed from the protocol.

Since the overall agreement is an absolute measure, better reflects the daily practice of a clinician dealing with diagnostic procedures and is most informative for clinicians, the protocol now takes the overall agreement as its starting point and emphasises the kappa coefficient in the second instance. Attention has been paid to the comprehensibility and readability of the text compared to the previous edition. Tables and figures have been renewed. In this way the protocol is accessible to those practitioners in M/M Medicine who are less familiar with statistics and, in particular, with the format of performing reproducibility studies.

The reproducibility protocol has been elaborated in such a way that it can be used as a kind of “cookbook format” to perform reproducibility studies with kappa statistics.

The protocol format can be used in a very practical way and it makes it feasible to perform reproducibility studies in private M/M Medicine clinics with two or more physicians and by educational boards of the M/M Medicine Societies.

The protocol is used as the syllabus for the International Instructional Course for Reproducibility Studies organised by the IAMMM, and we sincerely hope that the protocol will also be acknowledged in university education.

As in previous editions of the protocol, Edition 2019 strongly emphasises the need to perform reproducibility studies in M/M Medicine.

Therefore, in the introduction the original reasons to develop this protocol are again mentioned because they are still very relevant for present day M/M Medicine.

In this edition, a list of reproducibility studies (exclusively using kappa statistics) of the different regions of the locomotion system is published.

The IAMMM is aware that it is a continuous process to keep a protocol like this one updated. We do hope that scientists and educationalists who use this protocol will send their comments to the present second Scientific Director of the Academy. In this way, we can continuously improve and update the protocol.

The IAMMM would also like to encourage scientists and/or educationalists who receive and use this latest edition of the protocol to distribute it among their colleagues and students. In this way the protocol becomes accessible to a larger audience of practitioners in the field of M/M Medicine.

Table of contents

Preface to the 2019 edition of the reproducibility protocol

I. Introduction

The IAMMM International Instructional Course for Reproducibility Studies

The Academy Conference

II. Reproducibility and validity

Definitions

Reliability

Reproducibility

Definitions intra-observer

Definition inter-observer agreement

Definition diagnosis versus diagnostic procedure

Validity

III. Reproducibility studies: data

Nature of data in reproducibility studies

Qualitative diagnostic procedures

Quantitative diagnostic procedures

Inappropriate statistics of qualitative data in reproducibility studies

Percentage of agreement/overall agreement (Po)/observed agreement (Po)

Correlation coefficients

Appropriate statistics of qualitative data in reproducibility studies

Kappa statistics

Appropriate statistics in quantitative data reproducibility studies

Choice of statistics and clinical consequences

IV. Reproducibility studies: kappa statistics

Definition of the kappa coefficient

Overall agreement

Prevalence and prevalence of the index condition

Index condition definition

Prevalence of the index condition definition

Prevalence

Expected agreement by chance

Calculation of the kappa coefficient

Interpretation of kappa coefficient: general

Interpretation of kappa coefficient: dependency of the overall agreement

Interpretation of kappa coefficient: dependency of prevalence of the index condition Pindex

Interpretation of kappa coefficient: bias

V. Developing reproducibility studies: general aspects

Nature of the diagnostic procedure to be evaluated in a study

Diagnosis

Syndrome

Diagnostic procedure

Number of diagnostic procedures evaluated in reproducibility studies

Too many diagnostic procedures in reproducibility studies

Combinations of a few different diagnostic procedures: mutual dependency

Combinations of a few different diagnostic procedures: mutual dependency of diagnostic procedure and final “syndrome diagnosis”

Large number of different diagnostic procedures with a “diagnostic protocol”

Hypothesis of the diagnostic procedure in a reproducibility study

Characteristics and number of observers to be involved in a study

Number of observers in reproducibility studies

Characteristics of observers in reproducibility studies

Number of subjects in reproducibility studies

VI. The problem of the relation between the kappa coefficient and the prevalence of the index condition P index

Defining the Pindex problem

Influencing the Pindex in advance: the 0.5‑Pindex method

VII. Protocol format reproducibility study

Logistic period

Participating members and logbook

Transparency of responsibility

Logistics of reproducibility studies

Finance in reproducibility studies

Approval by the local ethical committee

Training period

Observer and subjects recruitment

Selection and number of diagnostic procedures

Mutual agreement about performance diagnostic procedure

Agreement about hypothesis of diagnostic procedure

Agreement about judgement of diagnostic procedure

Agreement about the blinding procedure

Study evaluation form

Study period with 0.50‑P index method

Observers and subjects recruitment

Blinding procedures

Study period

Statistics period

Publication period

Introduction section

Material and methods section

Results section

Discussion section

VIII. Golden rules for reproducibility studies

References

I. Introduction

The IAMMM developed this protocol in a standardised format for reproducibility studies.

In particular, this protocol provides scientists and daily practitioners in our field of M/M Medicine with a practical format, in a more or less cookbook form, to perform reproducibility studies. The primary reason for the Academy to develop this kind of protocol is still relevant:

There are many different approaches (schools) in M/M Medicine in many countries of the M/M Medicine world, frequently with many different diagnostic procedures and many different therapies for the same clinical picture.

The predecessor of the IAMMM, the Scientific Committee of FIMM, formulated the problem with respect to diagnostic procedures in Manual/Musculoskeletal Medicine (M/M Medicine); it is summarised in Fig. 1.

Summary of the problem and its consequences for Manual/Musculoskeletal Medicine (M/M M) as defined by the previous Scientific Committee of FIMM (International Federation for Manual Medicine) and adapted by its successor, the present International Academy of Manual/Musculoskeletal Medicine (IAMMM). EBM evidence-based medicine

The consequences of this statement are five-fold:

1. Most existing different approaches within M/M Medicine have no diagnostic procedures with proven reproducibility in the various regions of the locomotion system. As a consequence, the reproducibility, validity, sensitivity and specificity of these diagnostic procedures are largely lacking.

2. Because of this lack of good reproducibility, validity, sensitivity and specificity studies of the diagnostic procedures of the different schools in M/M Medicine, mutual comparison of diagnostic procedures of the different approaches in M/M Medicine is impossible. In the present situation, scientific information exchange and fundamental discussions, based on solid scientific results and methods, between these different M/M Medicine approaches are frequently impossible.

3. Each of the different approaches in M/M Medicine has its own education system. Most of the diagnostic procedures taught by these education systems lack good reproducibility. This makes the transferability of the taught diagnostic procedures between the various M/M Medicine education systems questionable. Furthermore, mutual exchange of diagnostic procedures between education systems is hampered. Since we are living in the age of evidence-based medicine, medical educational systems in general and M/M Medicine in particular have to be based as far as possible on evidence-based teaching material. Most importantly, diagnostic procedures with proven reproducibility are mutually exchangeable and can stimulate discussions between the various approaches in M/M Medicine.

4. In the absence of reproducible diagnostic procedures in M/M Medicine, only heterogeneously defined study populations can be used for efficacy trials. Therefore, comparison of the results of efficacy trials with the same therapeutic approach (for instance manipulation) is hardly possible.

If the present situation continues, it will slow down the badly needed process of professionalisation of M/M Medicine.

5. Non-reproducible diagnostic procedures of different schools, ill-defined therapeutic approaches and low-quality study designs are the main causes of the weak evidence for a proven therapeutic effect of M/M Medicine.

At present, it is still the aim of the IAMMM to create the best possible conditions for the exchange of scientific information between the various schools in M/M Medicine. This information exchange must be based on the results of solid scientific work. By comparing the results of good reproducibility studies performed by different schools, a fundamental discussion can start. The main aim of this discussion is not to conclude which school has the best diagnostic procedure in a particular area of the locomotion system, but to define a set of validated diagnostic procedures which can be adopted by the different schools and become transferable to regular medicine. The Academy wants to provide the Societies for M/M Medicine with standardised scientific protocols for future studies.

The IAMMM International Instructional Course for Reproducibility Studies

To provide practitioners in the field of Manual/Musculoskeletal Medicine with the right tools to perform high-quality reproducibility studies, the IAMMM can organise a 1-day instructional course in cooperation with national societies for Manual/Musculoskeletal Medicine or university departments. Beyond the theoretical explanation of statistics in reproducibility studies, practical training is an essential aspect of the course.

Previous IAMMM International Instructional Courses have been organised in many countries, such as the Czech Republic, Denmark, France, Germany, India, Netherlands, Russia and the United Kingdom.

Detailed information about these courses is available from the IAMMM logistic officer (sjerutte@gmail.com).

The Academy Conference

The best forum to create a discussion platform for the different schools in M/M Medicine is the Academy Conference organised by the IAMMM every year. This 2‑day conference is organised every second year. At this Academy Conference, preliminary results of studies, proposals for research protocols, newly developed therapeutic and/or diagnostic algorithms and other new scientific work are presented. In a fruitful discussion between the audience and presenters many ideas can be exchanged based on solid scientific work, without interference of “school politics”.

The Scientific Director of the IAMMM emphasises that good reproducibility of diagnostic procedures in M/M Medicine still has the first priority. These kinds of studies are easy and inexpensive to perform and form the best basis for mutual discussion between schools in M/M Medicine.

Co-operation with universities and active involvement of the Societies for M/M Medicine is indispensable and crucial for the future work of the IAMMM.

II. Reliability: reproducibility and validity

Definitions.

Before performing a reproducibility study, it is essential to become familiar with the nomenclature used in this kind of study. Furthermore, it is of utmost importance that the difference between reproducibility and validity is well understood. One of the major problems in medicine, and also in medical research, is that different names are used for the same condition. Therefore, we think it important first to provide the reader with an overview of the definitions used in this protocol. Clarifying and illustrating the definitions in greater detail makes the rest of the protocol much easier to read. In particular, those definitions that are used in reproducibility studies are elaborated in greater detail, based on experience from previous reproducibility studies.

1. Reliability

In the scientific medical literature, the term reliability is frequently (mis)-used in relation to the evaluation of diagnostic procedures. Reliability reflects the overall consistency of a measure [1, 2]. A diagnostic procedure is said to have a high reliability if it produces similar results under consistent conditions. Reliability comprises how well two persons use and interpret the same diagnostic procedure. However, reliability does not automatically imply validity.

Therefore, reliability must be subdivided into precision and accuracy. Precision is synonymous with reproducibility and accuracy is synonymous with validity. The terms reproducibility and validity are the ones generally used, and they are also used in this protocol [3].

1.1 Reproducibility

Definitions.

Reproducibility of a diagnostic procedure reflects the extent of agreement of a single person (observer) or different observers using the same diagnostic procedure in the same subject. In the case of a single observer we are dealing with intra-observer agreement or intra-observer variability. The same observer uses the same diagnostic procedure in the same subject but on two different occasions (Fig. 2).

In the case of (two) different observers, we are dealing with inter-observer agreement or inter-observer variability. The (two) different observers use the same diagnostic procedure in the same subject at one occasion (Fig. 3).

Definition diagnosis versus diagnostic procedure.

In essence, the reproducibility of a diagnostic procedure has nothing to do with a diagnosis as such. In medicine, diagnostic procedures are the constituent parts of the whole diagnostic arsenal that finally can lead to a particular diagnosis. Reproducibility of a diagnostic procedure reflects how well observers have standardised the whole diagnostic procedure as such and its final judgement. In our protocol, dichotomous outcomes such as Yes or No are used for the final outcome of a diagnostic procedure. To illustrate this in greater detail we take as an example the Patrick Test (Fig. 4). In M/M Medicine the Patrick Test is frequently used to evaluate the mobility of the sacroiliac joint (SI-joint). However, in reproducibility studies, one has to separate the hypothesis of the Patrick Test (meant to test the mobility of the SI-joint) from the Patrick Test as a diagnostic procedure as such. For instance, observers subjectively estimate the distance between the knee and the couch on both sides (see double arrow in Fig. 4). Next, the left and right distances are compared. The observers have agreed in advance that the side with the largest distance has a positive Patrick Test. Whether a positive Patrick Test reflects a decreased mobility of the SI-joint on the same side is not yet proven. This concerns the validity of the Patrick Test. Validity will be explained later.

In this example the Patrick Test can be one of the constituent diagnostic procedures of a whole diagnostic arsenal for instance in subjects with low back and leg pain. Based on a whole diagnostic arsenal, medical history and neurological examination included, the final diagnosis can be a lumbar radicular compression of the L5 root left. This is a genuine diagnosis in the sense that aetiology and prognosis are known. The Patrick Test as such in this example of a diagnostic arsenal does not provide you with the final diagnosis of a lumbar radicular compression of the L5 root left. The Patrick Test in this case is one of the many performed diagnostic procedures.

Therefore, the reproducibility of a particular diagnostic procedure only has to do with standardisation of the various constituent components of the procedure and the use of the same defined final judgement (in our protocol dichotomous outcome, Yes/No). Reproducibility has nothing to do with a genuine diagnosis. This is very important to realise because it explains why no selection procedure for the study population in a reproducibility study is needed. In a reproducibility study with a dichotomous outcome, the diagnostic procedure as such is independent of a final diagnosis because the latter is based on a whole diagnostic arsenal.

In summary, reproducibility is about a full, detailed and standardised description of how to perform the various components of a diagnostic procedure, and a detailed and standardised description of how to measure and interpret its final outcome, so that observers can ultimately reproduce each other's findings.

1.2 Validity

Definition.

Validity measures the extent to which the diagnostic procedure actually tests what it is supposed to test. More precisely, validity is determined by measuring how a diagnostic procedure performs against the gold or criterion standard or a reference test. In a separate protocol we will give a more detailed introduction to various forms of validity, among which criterion validity is probably the best known.

To explain this form of validity in greater detail we use again the example of the Patrick Test. As mentioned in “Definition diagnosis versus diagnostic procedure”, the hypothesis of the Patrick Test was the testing of the mobility of the SI-joint [4]. Suppose we have already a good reproducibly proven Patrick Test. The hypothesis of the Patrick Test was the evaluation of the presence or absence of mobility of the SI-joint. To evaluate the validity of the Patrick Test, we need a gold standard or a reference test that can quantify the mobility of a SI-joint during the Patrick Test manoeuvre.

Suppose we can use the stereophotogrammetry method with two iron rods (A and B, Fig. 5) placed on both sides of the SI-joints [5] during a Patrick Test manoeuvre.

A hypothetical x‑ray method is used to evaluate the mobility of the SI-joint. Two (blue) iron rods are placed on both sides of the SI-joint (A and B). X‑rays are taken before and after a Patrick manoeuvre in the subject. The distance between A and B is measured before and after a Patrick manoeuvre. The difference between the distances (before and after the Patrick Test) is a measure for mobility

The distance between these two iron rods is measured on X‑rays taken before and at the end stage of a Patrick Test manoeuvre. Suppose that movement in the SI-joint is elicited, so that we find a difference in the distance between rods A and B before and at the end stage of the Patrick manoeuvre, and suppose that we have duplicated the results of this method (i.e. it has proven to be reproducible). In that case we have a good gold standard to evaluate the validity of the Patrick Test. Subsequently, we have to perform a validity study in which the Patrick Test and X‑ray SI-joint stereophotogrammetry (as gold standard) are simultaneously performed in the same group of subjects. By comparing the results of the Patrick Test (Fig. 6) and the X‑ray SI-joint stereophotogrammetry (Fig. 5), the validity of the Patrick Test can be estimated. In M/M Medicine, many diagnostic procedures have been developed, each with its own hypothesis. However, we have to realise that gold standards or reference tests are lacking for the vast majority of these diagnostic procedures.

In all above-mentioned examples we have used the Patrick Test. As stated earlier, observers judge the Patrick Test by simply and subjectively estimating the distance between the knee and the couch on the left and right side (see double arrow in Fig. 6). In this case one has to develop a quantitative method that measures this distance between the knee and the couch. This quantitative method can be used as the so-called reference test to estimate the validity of the Patrick Test with respect to range of motion.

III. Reproducibility studies: nature of diagnostic procedures

1. Nature of data in reproducibility studies

Before starting a reproducibility study, first we have to realise what kind of diagnostic procedure we are dealing with. The nature of the data of a reproducibility study dictates the kind of statistics we have to apply.

In general, we have two kinds of diagnostic procedures: qualitative and quantitative.

1.2 Qualitative diagnostic procedures

Qualitative diagnostic procedures are the most used diagnostic procedures in M/M Medicine and are characterised by subjective interpretation of the observer with respect to the result of a performed diagnostic procedure (end feel, motion restriction, resistance etc.). In qualitative diagnostic procedures, the outcome of the diagnostic procedure can be divided into two kinds of data: nominal data and ordinal data.

Outcomes of diagnostic procedures with nominal data refer to the presence or absence of a particular feature and have a dichotomous or binary character reflecting a contrast. The contrast between male and female in studies with gender as outcome is also a good example of such nominal data. Typical diagnostic procedures in M/M Medicine in which the outcomes are nominal data are, for example, diagnostic procedures that evaluate “end feel” (abnormal Yes or No), pain provocation (pain Yes or No) under different conditions (provoked by the observer or provoked by movements of the subject) and range of motion (restricted Yes or No). For reproducibility studies evaluating these kinds of data, kappa statistics are indicated (see “IV. Reproducibility studies: kappa statistics”).

If the outcome of a diagnostic procedure has different categories with a natural order we are dealing with ordinal data (good, better, best). An example is the outcome of such a diagnostic procedure which evaluates the measure of range of motion and is divided into minimal, moderate and severe restriction. Other examples are the character of end feel subdivided into normal end feel, soft end feel, and hard end feel. In this case weighted kappa statistics are indicated. This kind of ordinal data is also used in standard x‑rays of the cervical spine in which the severity of the degenerative changes of the cervical spine are subdivided into categories [6, 7].

However, we have to question whether this kind of clinical subdivision into ordinal outcome data, both in M/M Medicine and radiology, has any sense at all. We have to consider whether subjective subdivisions of a diagnostic procedure outcome (for instance normal, moderate, severe) have consequences for the diagnostic and therapeutic indications of the subject. In M/M Medicine, in most of the cases there are no solid arguments to use such a subjective subdivision of outcome in diagnostic procedures. Only in circumstances in which one wants to use a diagnostic procedure to evaluate its outcome during a period of time can subjective subdivision be indicated. However, outcomes of diagnostic procedures, with subjective subdivision, are quite difficult to make reproducible. In particular, the problem is how to standardise this subjective subdivision of a diagnostic procedure. Besides, a gold standard or reference test is necessary to estimate the validity of the subdivision. In this case, it is advisable to use a quantitative method with a device, which measures in detail the outcome of the diagnostic procedure.

1.3 Quantitative diagnostic procedures

In quantitative diagnostic procedures, mostly measured with a certain kind of device, findings are quantified in degrees, millimetres, kg etc. and are recorded as interval or continuous data. A good example is measurement of joint motion of the finger in degrees by goniometry.

First of all, one has to evaluate the reproducibility of the device (test/retest). In this test/retest procedure, the systematic measurement error can be estimated based on the dispersion of the data values.

For interval or continuous data, the appropriate statistics are the intraclass correlation and the paired t‑test (two-tailed). When several different sets of interval data are compared, analysis of variance (ANOVA) is indicated.

Secondly, a gold standard is needed to measure the validity of the method.

Thirdly, for these kinds of quantitative procedures with devices, normative values are needed. A study of the method in normal subjects is needed to estimate the effect of gender and age. Quantitative diagnostic procedures can serve as gold standards for qualitative diagnostic procedures.

2. Inappropriate statistics of qualitative data in reproducibility studies

Frequently, inappropriate statistics are applied to measure the reproducibility of a diagnostic procedure. The main flaw is that agreement is often confused with trend or association, which is the assessment of the predictability of one variable from another. The flaws of several statistical methods in reproducibility studies are listed below.

2.1 Percentage of agreement/overall agreement (Po)/observed agreement (Po)

In reproducibility studies using dichotomous outcome data, merely reporting the percentage of agreement (also called overall agreement or observed agreement; the terms are synonymous) does not provide complete information about the reproducibility of a particular diagnostic procedure. In our protocol we use the term observed agreement (Po). Po in a reproducibility study is the ratio of the number of subjects on which the observers agree to the total number of observations. The main problem is that, in reproducibility studies using a dichotomous outcome, Po does not take into account the agreement that is expected to occur by chance alone. This will be further elaborated in “IV. Reproducibility studies: kappa statistics”.
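As a minimal sketch of the definition of Po given above, the ratio can be computed directly from two lists of Yes/No judgements. The function name and the ten judgements are hypothetical and serve only as an illustration; they are not taken from any study in this protocol.

```python
def observed_agreement(ratings_a, ratings_b):
    """Return Po: the share of subjects on which both observers gave the same judgement."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("both observers must judge the same, non-empty set of subjects")
    agreements = sum(a == b for a, b in zip(ratings_a, ratings_b))
    return agreements / len(ratings_a)


# Hypothetical judgements of the same 10 subjects by two observers
observer_a = ["Yes", "Yes", "No", "No", "Yes", "No", "No", "Yes", "No", "No"]
observer_b = ["Yes", "No", "No", "No", "Yes", "No", "Yes", "Yes", "No", "No"]

po = observed_agreement(observer_a, observer_b)
print(f"Po = {po:.2f}")  # the observers agree on 8 of the 10 subjects
```

Note that this figure says nothing about how much of the agreement would have occurred by chance, which is exactly the limitation described in this subsection.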

2.2 Correlation coefficients

In many reproducibility studies correlation and association measures are used to evaluate the reproducibility of clinical data. The problem is that some do not have the ability to distinguish a trend towards agreement from disagreement (Chi-Square [χ2] and Phi) or do not account for systematic observer bias (Pearson’s product moment correlation, rank order correlation) [8, 9].
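The point about systematic observer bias can be illustrated with a small sketch. It assumes a hypothetical ordinal score (0 to 3) and a second observer who always scores exactly one category higher than the first; the data and function name are invented for illustration only.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson's product moment correlation of two equally long score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


# Hypothetical scores: observer B is systematically one category above observer A
observer_a = [0, 1, 2, 1, 0, 2, 1, 0]
observer_b = [score + 1 for score in observer_a]

r = pearson_r(observer_a, observer_b)
agreement = sum(a == b for a, b in zip(observer_a, observer_b)) / len(observer_a)

print(f"Pearson r = {r:.2f}, share of identical scores = {agreement:.2f}")
```

In this constructed case the correlation is perfect although the two observers never give the same score, which is why a correlation coefficient cannot stand in for a measure of agreement.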

3. Appropriate statistics of qualitative data in reproducibility studies

3.1 Kappa statistics

Kappa statistics are the statistics of choice for evaluating intra- and/or inter-observer reproducibility for ordinal and nominal data. This statistical method will be extensively explained in “IV. Reproducibility studies: kappa statistics”.

3.2 Appropriate statistics in quantitative data reproducibility studies

To evaluate the reproducibility of repeated measurements with quantitative/continuous data (which may be interval or ratio data), the paired samples t‑test and/or the intraclass correlation coefficient (ICC) are indicated. These statistics are used in test/retest procedures when a device is used to quantify a clinical finding (for instance range of motion).

The ICC is the statistical method of choice for the reproducibility of observers for continuous data (cm, mm, etc.). The calculated factor R in this statistical procedure is 1 if the ratings are identical for all pairs, but less than or equal to 0 in the absence of reproducibility.

3.3 Choice of statistics and clinical consequences

In reproducibility studies, the choice of statistics is not only dependent on the measurement level of the collected data (nominal, ordinal, interval, ratio). It also depends on the type of clinical decision concluded from the findings of the reproducibility study.

Suppose the reproducibility of leg length inequality has to be evaluated. The results of this reproducibility study have to be used to decide whether or not a heel lift is indicated to correct leg length inequality. In this case reproducibility can be quantified by ICC statistics for interval data. In contrast, if results of this reproducibility study have to be used to decide which side (left or right) has to be adjusted, the kappa coefficient is indicated for nominal data.

In summary, in reproducibility studies of any kind, the nature of the collected data (nominal, ordinal, interval or ratio) and the final clinical purpose of the reproducibility study are decisive for the statistical method applied.

IV. Reproducibility studies: kappa statistics

As already mentioned in “III. Reproducibility studies: nature of diagnostic procedures”, most diagnostic procedures in the daily practice of M/M Medicine produce a nominal outcome, often even a dichotomous one (Yes/No, Present/Absent, Normal/Abnormal).

For these kinds of dichotomous data, kappa statistics are appropriate. In this section, the different kappa coefficients are explained and illustrated with the results of previous reproducibility studies to highlight different aspects, problems and flaws of this statistical method. Frequently used terms in kappa statistics are defined and explained. This section is essential to understand the reproducibility protocol elaborated in “V. Developing reproducibility studies: general aspects”. Although many formulas will be shown in this section for illustration, all these formulas will be integrated in a spreadsheet (see “VII. Protocol format reproducibility study”, section “Statistics period”) for automatic calculation of the kappa coefficient and related data of a reproducibility study. You do not have to remember these formulas.

1. Definition of the kappa coefficient

The kappa coefficient is a measure of inter- or intra-observer agreement (see “II. Reliability: Reproducibility and validity”, section “Reproducibility”) corrected for agreement occurring by chance.

Why do kappa statistics correct for chance? If you perform a diagnostic procedure with a dichotomous outcome (Yes or No) on a subject, you can judge the procedure positive just by chance (50%). In kappa statistics this chance agreement can be calculated with a formula. The result of this calculation is integrated in the final formula to estimate the kappa coefficient (see “IV. Reproducibility studies: kappa statistics”, section “Calculation of the kappa coefficient”).

To illustrate kappa statistics in detail, we use the example of a hypothetical reproducibility study in which two observers, A and B, perform the Patrick Test in 40 subjects. The possible outcomes of the diagnostic procedure were Positive (Yes) or Negative (No). Both observers examined the 40 subjects with the Patrick Test and recorded their findings. When the results of both observers are combined per subject at the end of the study, four categories are possible: 1. Observer A and Observer B both judge the Patrick Test positive in the same subject (Yes/Yes), 2. Observer A and Observer B both judge the Patrick Test negative in the same subject (No/No), 3. Observer A judges the Patrick Test positive, while Observer B judges it negative in the same subject (Yes/No), 4. Observer A judges the Patrick Test negative, while Observer B judges it positive in the same subject (No/Yes). The results of these four categories can be depicted in a so-called 2 × 2 contingency table (Fig. 7). The row and column totals give the numbers of subjects in which Observer A and Observer B judged the Patrick Test positive or negative.

Observer A judged 17 Patrick Tests as positive and 23 as negative. Observer B judged 18 Patrick Tests as positive and 22 as negative. For each observer, the two figures add up to 40.

Based on the data from this 2 × 2 contingency table, different important aspects of the kappa statistics can be calculated. As shown later some of these aspects will influence the final kappa coefficient.

2. Overall agreement

2.1 Definition

Under “III. Reproducibility studies: nature of diagnostic procedures”, section “Percentage agreement/overall agreement (Po)/observed agreement (Po)”, the synonymous terms percentage of agreement/overall agreement (Po)/observed agreement (Pobs) were introduced. In reproducibility studies, the overall agreement reflects the percentage of subjects in which the observers agree about the outcome or judgement of the diagnostic procedure. In kappa statistics and in our protocol, overall agreement is also named the observed agreement (Po). The overall agreement Po is calculated as the number of subjects in which both observers judge the diagnostic procedure positive plus the number in which both judge it negative, divided by the total number of subjects in the study. Fig. 8 shows a 2 × 2 contingency table similar to that in Fig. 7, but now based on a theoretical reproducibility study.

The formula for the overall agreement or observed agreement Po based on the data of Fig. 8 is:
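Written out from the verbal definition above, and assuming the four cells of the table are labelled a (Yes/Yes), b (Yes/No), c (No/Yes) and d (No/No) with N the total number of subjects (the symbols d and N are labels chosen here for illustration; the text itself only names a, b and c), the observed agreement can be expressed as:

```latex
\[
P_o = \frac{a + d}{N}, \qquad N = a + b + c + d
\]
```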

Based on the data of the 2 × 2 contingency table of the reproducibility study shown in Fig. 7, the observed agreement Po is calculated as follows:
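Using the cell counts quoted elsewhere in this section for the 40-subject example (15 subjects judged Yes/Yes and 20 judged No/No), the calculation can be sketched as:

```latex
\[
P_o = \frac{15 + 20}{40} = 0.875 \approx 0.88
\]
```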

This Po will later be inserted in the final formula to calculate the final kappa coefficient (see “IV. Reproducibility studies: kappa statistics”, section “Calculation of the kappa coefficient”).

As will be explained later, the overall agreement is very important in a reproducibility study, because it strongly influences the magnitude of a kappa coefficient (see “IV. Reproducibility studies: kappa statistics”, section “Interpretation of kappa coefficient: dependency of the prevalence of the index condition Pindex”).

2.2 Prevalence and prevalence of the index condition

Three new statistical concepts, used in reproducibility studies, are introduced: index condition, prevalence and prevalence of the index condition.

2.3 Index condition definition

The index condition is synonymous with a positively judged diagnostic procedure in a subject participating in a reproducibility study. In Figs. 7 and 8 the index condition is illustrated by the “Yes”. In reproducibility studies of a diagnostic procedure with a dichotomous outcome (final judgement), a diagnostic procedure judged positive by the observers is referred to as the index condition.

2.4 Prevalence of the index condition definition

The prevalence of the index condition in reproducibility studies reflects the frequency of positive judged diagnostic procedures in the study population by both observers. In the 2 × 2 contingency table we have three boxes with a number of subjects with a positive diagnostic procedure: the box with Yes/Yes, the box with Yes/No, the box with No/Yes. In the example of Fig. 8, these boxes are filled out with a, b and c and in Fig. 9 with 15, 2 and 3. To calculate the prevalence of the index condition Pindex we need a special formula. Based on a theoretical 2 × 2 contingency table shown in Fig. 8, the formula for the prevalence of the index condition Pindex is:
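A form that reproduces the value of 0.44 quoted for the worked example is given below. It counts the Yes/Yes cell in full and half of the discordant cells, and is offered as a reconstruction under that assumption, with N (a label introduced here) again denoting the total number of subjects:

```latex
\[
P_{\text{index}} = \frac{a + \dfrac{b + c}{2}}{N}
\]
```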

Based on the 2 × 2 contingency table of the reproducibility study shown in Fig. 9, the prevalence of the index condition Pindex is calculated as:
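With the cell counts 15 (Yes/Yes), 2 (Yes/No) and 3 (No/Yes) given above for the 40-subject example, and assuming the half-weighting of the discordant cells, the arithmetic would be:

```latex
\[
P_{\text{index}} = \frac{15 + \dfrac{2 + 3}{2}}{40} = \frac{17.5}{40} = 0.4375 \approx 0.44
\]
```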

Relation between kappa coefficient and prevalence of the index condition. The dotted/dashed horizontal line is the cut-off level of 0.60. a The kappa/prevalence index curve with an overall agreement Po of 0.97. The part of the curve above the horizontal line represents the 98% of kappa coefficients that are larger than 0.60. b The kappa/prevalence index curve with an overall agreement Po of 0.77. The curve does not cross the horizontal line, illustrating that 0% of the kappa coefficients exceed 0.60

Both observed agreement (Po) and prevalence of the index condition (Pindex) are important in a reproducibility study. Their values are decisive for the magnitude of the final kappa coefficient in a reproducibility study (see “VI. The relation between the kappa coefficient and the prevalence the index condition Po”, section “Defining the Pindex problem”).

2.5 Prevalence

Prevalence is a statistical concept referring to the number of subjects with a positive diagnostic procedure that are present in a study sample. Because two observers examine the same subject in a reproducibility study, each examined subject can have both a positively and a negatively judged diagnostic procedure. Therefore, a prevalence in the above sense cannot be determined in reproducibility studies with a dichotomous outcome of the diagnostic procedure. Only the prevalence of the index condition (Pindex) can be calculated.

However, it is possible to calculate the prevalence of positively judged diagnostic procedures per observer. In Fig. 9, the prevalence of the positively judged diagnostic procedure for observer A is (15 + 2) / 40 = 0.43 and for observer B (15 + 3) / 40 = 0.45. The prevalence of the index condition (Pindex) in the example of Fig. 9 is 0.44.

3. Expected agreement by chance

As stated before, the kappa coefficient is a measure of inter-observer or intra-observer agreement corrected for agreement occurring by chance. If you perform a diagnostic procedure with a dichotomous outcome (Yes or No) in a subject, you can judge the diagnostic procedure positive or negative just by chance.

Therefore, we have to calculate the expected agreement by chance Pc. This expected agreement by chance Pc will be integrated in the final formula to estimate a kappa coefficient (see “IV. Reproducibility studies: kappa statistics”, section “Calculation of the kappa coefficient”).

The formula for the expected agreement by chance Pc [10] based on the theoretical reproducibility study shown in Fig. 8 is:
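In the usual formulation for two observers, the chance agreement is the product of the two observers' proportions of positive judgements plus the product of their proportions of negative judgements. With the cell labels a (Yes/Yes), b (Yes/No), c (No/Yes), d (No/No) and total N (d and N being labels assumed here for illustration), this reads:

```latex
\[
P_c = \frac{a + b}{N}\cdot\frac{a + c}{N} \;+\; \frac{c + d}{N}\cdot\frac{b + d}{N}
\]
```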

The expected agreement by chance Pc will be used for the final formula to estimate a kappa coefficient (see “IV. Reproducibility studies: kappa statistics”, section “Calculation of the kappa coefficient”).

Based on the 2 × 2 contingency table of the reproducibility study shown in Fig. 9, the expected agreement Pc can be calculated as:
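With the marginal totals given earlier for the 40-subject example (observer A: 17 positive, 23 negative; observer B: 18 positive, 22 negative), the calculation can be sketched as:

```latex
\[
P_c = \frac{17}{40}\cdot\frac{18}{40} + \frac{23}{40}\cdot\frac{22}{40}
    = 0.19125 + 0.31625 = 0.5075 \approx 0.51
\]
```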

4. Calculation of the kappa coefficient

To calculate the kappa coefficient, the observed agreement Po (see section “Overall agreement” of this section) and the expected agreement by chance Pc (see section “Expected agreement by chance” of this section) are inserted in the formula for the kappa coefficient κ:
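In its standard form for two observers, the kappa coefficient expresses the agreement achieved beyond chance as a fraction of the maximum agreement achievable beyond chance:

```latex
\[
\kappa = \frac{P_o - P_c}{1 - P_c}
\]
```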

When we apply the kappa formula to the data of the reproducibility study shown in Fig. 9, the expected agreement by chance Pc is 0.51 and the observed agreement Po is 0.88. Inserting these figures in the kappa formula leads to:
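Inserting the rounded values quoted above gives the first line below. The second line repeats the calculation with the unrounded intermediate values (0.875 and 0.5075, derived here from the cell counts 15, 2, 3 and 20), which shows that rounding the inputs shifts the second decimal slightly:

```latex
\[
\kappa = \frac{0.88 - 0.51}{1 - 0.51} = \frac{0.37}{0.49} \approx 0.76
\]
\[
\kappa = \frac{0.875 - 0.5075}{1 - 0.5075} = \frac{0.3675}{0.4925} \approx 0.75
\]
```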

The prevalence of the index condition Pindex in this study is 0.44 (see above) with an observed agreement Po of 0.88 (see above).
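The whole chain of calculations in this section can be sketched in a few lines of Python. This is an illustrative sketch, not the spreadsheet of the protocol: the function name `kappa_2x2`, the `KappaResult` container and the cell labels `d` and `n` are assumptions introduced here, and the Pindex expression assumes that half of the discordant judgements are counted as positive.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KappaResult:
    po: float      # observed (overall) agreement
    pc: float      # expected agreement by chance
    pindex: float  # prevalence of the index condition
    kappa: float   # chance-corrected agreement


def kappa_2x2(a: int, b: int, c: int, d: int) -> KappaResult:
    """Summarise a 2 x 2 table of two observers.

    a = Yes/Yes, b = Yes/No, c = No/Yes, d = No/No
    (observer A's judgement first, observer B's second).
    """
    n = a + b + c + d
    if n == 0:
        raise ValueError("the table contains no subjects")
    po = (a + d) / n
    # Product of the positive marginals plus product of the negative marginals.
    pc = ((a + b) * (a + c) + (c + d) * (b + d)) / n**2
    # Assumption: discordant cells count for one half each.
    pindex = (a + (b + c) / 2) / n
    if pc == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return KappaResult(po, pc, pindex, (po - pc) / (1 - pc))


# Cell counts of the 40-subject Patrick Test example in the text.
result = kappa_2x2(a=15, b=2, c=3, d=20)
print(result)
```

Working from integer cell counts rather than from rounded proportions avoids the small rounding differences that arise when Po and Pc are first rounded to two decimals.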

5. Interpretation of kappa coefficient: general

The kappa coefficient, as a measure for intra-observer or inter-observer agreement, can be either negative or positive. It can range between −1 and +1. Several schemes are available to interpret the kappa coefficient of a reproducibility study. The most widely used is the scheme of Landis and Koch [11]. They stated that kappa coefficients above 0.60 represent good to almost perfect agreement beyond chance between two observers. In contrast, kappa coefficients of 0.40 or less represent absence to fair agreement beyond chance. Kappa coefficients between 0.40 and 0.60 reflect a fair to good agreement beyond chance (Table 1).
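The three bands described in this paragraph can be written as a small lookup. The sketch below follows the wording of the paragraph only (Table 1 itself is not reproduced here); the function name `interpret_kappa` and the handling of the exact boundary values are assumptions.

```python
def interpret_kappa(kappa: float) -> str:
    """Map a kappa coefficient to the three bands described in the text.

    Boundary handling is an assumption: 0.40 falls in the lowest band
    ("0.40 or less") and exactly 0.60 in the middle band ("above 0.60"
    is required for the highest band).
    """
    if not -1.0 <= kappa <= 1.0:
        raise ValueError("kappa must lie between -1 and +1")
    if kappa > 0.60:
        return "good to almost perfect agreement beyond chance"
    if kappa > 0.40:
        return "fair to good agreement beyond chance"
    return "absent to fair agreement beyond chance"


for value in (-0.10, 0.40, 0.55, 0.75):
    print(f"{value:+.2f}: {interpret_kappa(value)}")
```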

However, the standards for strength of agreement provided by Landis and Koch are merely a convention for interpreting kappa.

The same kappa coefficient can be based on different values of the overall agreement (Po). A very low or negative kappa coefficient can be the result of a very high or low Pindex and does not necessarily reflect the quality of the agreement between two observers about a diagnostic procedure.

This will be further explained below in sections “Interpretation of kappa coefficient: dependency of the overall agreement” and “Interpretation of kappa coefficient: dependency of the prevalence of the index condition Pindex” of this section.

6. Interpretation of kappa coefficient: dependency of the overall agreement

As already mentioned in section “Definition of the kappa coefficient” of this section, the overall agreement is a very important factor to interpret the kappa coefficient of a reproducibility study. In reproducibility studies, the overall agreement Po reflects the proportion of subjects in which the observers agree about the outcome or judgement of the diagnostic procedure. More precisely, it reflects the total proportion in which both observers agree about positive and negative found diagnostic procedure in the same subjects.

In the example of Fig. 14, the overall agreement Po is calculated by the sum of the number of subjects in which both observers judge the diagnostic procedure positive and in which both observers judge the diagnostic procedure negative, divided by the total number of subjects of the study. In our example the overall agreement Po = (15 + 20) / 40 = 0.88.

Many published reproducibility studies in M/M Medicine show low kappa coefficients without mentioning the overall agreement data.

In Fig. 10 the relation between the kappa/Pindex curve and the overall agreement is illustrated, with curves for two different overall agreements Po.

In Fig. 10a, with a very high overall agreement of 0.99, almost the whole kappa/Pindex curve is located above the 0.60 kappa cut-off level: in 98% the kappa coefficient is ≥0.6. In case of a low overall agreement, the kappa/Pindex curve ultimately drops under the 0.60 kappa cut-off line and the kappa coefficient is <0.6 (Fig. 10b). Because a part of the kappa/Pindex curve drops below the zero line, negative kappa coefficients can occur.

For instance, if the overall agreement Po decreases from 0.98 to 0.79, the kappa/Pindex curve slowly shifts downwards until it is finally located entirely under the 0.60 cut-off line. The percentage of kappa coefficients ≥0.6 decreases from 99 to 0%. In Fig. 11, all kappa/Pindex curves of the Po interval 0.98–0.79 are depicted.
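The downward shift of the curve can be reproduced numerically under one simplifying assumption that is not stated in the text: the two kinds of disagreement are equally frequent (b = c), so that both observers have the same proportion of positive judgements, equal to Pindex. The function name `kappa_on_curve` is hypothetical.

```python
def kappa_on_curve(po: float, pindex: float) -> float:
    """Kappa for a given Po and Pindex, ASSUMING symmetric disagreement (b == c).

    With b == c both observers judge a proportion `pindex` of the subjects
    positive, so the chance agreement is pindex**2 + (1 - pindex)**2.
    """
    half_disagreement = (1 - po) / 2
    # Outside this range the Yes/Yes or No/No cell would have to be negative.
    if not half_disagreement <= pindex <= 1 - half_disagreement:
        raise ValueError("this Pindex cannot occur with this Po")
    pc = pindex**2 + (1 - pindex) ** 2
    if pc == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (po - pc) / (1 - pc)


# Peak of each curve (at Pindex = 0.5) for a decreasing overall agreement.
for po in (0.98, 0.90, 0.79):
    print(f"Po = {po:.2f}: peak kappa = {kappa_on_curve(po, 0.5):.2f}")
```

Under this assumption the top of each curve lies at 2 × Po − 1, so for Po = 0.79 the highest attainable kappa is about 0.58, just below the 0.60 cut-off level, while for extreme Pindex values the same Po gives much lower kappa coefficients.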

7. Interpretation of kappa coefficient: dependency of the prevalence of the index condition Pindex

As already mentioned in section “Prevalence and the prevalence of the index condition” of this section, the prevalence of the index condition Pindex is a very important factor. It matters not only for the interpretation of a kappa coefficient of a reproducibility study; the Pindex can also substantially influence the level of the kappa coefficient.

In reproducibility studies, the prevalence of the index condition Pindex is synonymous with the frequency of all positively judged diagnostic procedures (the index condition) by the observers. The Pindex has to be calculated using a formula (see section “Prevalence of the index condition definition”). The relation between the kappa coefficient and the Pindex is illustrated in Fig. 12.

The kappa/Pindex curve in Fig. 12 illustrates that if the Pindex is low (0.2) or high (0.9), the matching kappa coefficients will be under the kappa cut-off line of 0.6. This means that in this study sample, there are too few (low Pindex) or too many (high Pindex) positively judged diagnostic procedures. As stated in the section “Interpretation of the kappa coefficient”, the standards for strength of agreement provided by Landis and Koch [11] (Table 1) are merely a convention for interpreting kappa, without a scientific basis. The same kappa coefficient can be based on different values of the Po and Pindex. A very low or negative kappa coefficient can be the result of a very high or low Pindex and does not necessarily reflect the quality of the agreement between two observers about a diagnostic procedure.

Relation between kappa coefficient and prevalence of the index condition Pindex. The horizontal line is the cut-off level of 0.60. The part of the curve above this line represents the 51% of kappa coefficients that are ≥0.60. The blue dots indicate low kappa coefficients (horizontal arrows) in case of a low Pindex (left blue dot) or a high Pindex (right blue dot)

The relation between the kappa coefficient and Po and Pindex has consequences for the interpretation of kappa coefficients in published reproducibility studies. As can be seen in Fig. 13, the same kappa coefficient can be located on the left or right side of different kappa/Pindex curves. Each kappa/Pindex curve has its own Po value, ranging from an overall agreement of 0.97 to 0.79. The kappa coefficient 0.4 can be due to a low overall agreement Po, for which the top of the kappa/Pindex curve is on or below the cut-off line of 0.6 (inner curves near the left red arrow). Alternatively, the kappa coefficient 0.4 can be due to a too high or too low Pindex on curves whose top is above the cut-off line of 0.6 (outer curves near the right red arrow), because these kappa/Pindex curves have a high Po value.

Relation between kappa coefficient and prevalence of the index condition. The horizontal dotted/dashed line is the cut-off level of 0.60. Kappa/prevalence index curves are shown for overall agreements Po between 0.97 and 0.79. Red dots represent kappa coefficients with the same value of 0.4, but located on different kappa/prevalence index curves (see text)
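That one and the same kappa coefficient of 0.4 can arise from quite different combinations of Po and Pindex can be made concrete with a short calculation. The sketch assumes symmetric disagreement between the observers (b = c), which is a simplification introduced here and not part of the protocol; the function name `pindex_for_kappa` is hypothetical.

```python
import math
from typing import Optional, Tuple


def pindex_for_kappa(po: float, kappa: float) -> Optional[Tuple[float, float]]:
    """Low and high Pindex at which a curve with overall agreement `po`
    reaches `kappa`, ASSUMING symmetric disagreement (b == c).

    Returns None if the curve never reaches that kappa value.
    """
    if kappa >= 1:
        raise ValueError("kappa must be below 1")
    # Rearranged kappa formula: Pc = (Po - kappa) / (1 - kappa).
    pc = (po - kappa) / (1 - kappa)
    # With b == c: Pc = p**2 + (1 - p)**2, so p = (1 +/- sqrt(2*Pc - 1)) / 2.
    discriminant = 2 * pc - 1
    if discriminant < 0:
        return None  # kappa lies above the top of this curve
    root = math.sqrt(discriminant)
    low, high = (1 - root) / 2, (1 + root) / 2
    if low < (1 - po) / 2:
        return None  # would require a negative number of Yes/Yes subjects
    return low, high


for po in (0.97, 0.79):
    print(po, pindex_for_kappa(po, 0.4))
```

Under this assumption a kappa of 0.4 is reached at a Pindex of roughly 0.03 or 0.97 when Po is 0.97, but already at roughly 0.23 or 0.77 when Po is 0.79, which corresponds to the outer and inner curves described above.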

The same is partly true for reproducibility studies that find kappa coefficients ≥0.6 without mentioning data about Po and Pindex. Although the authors conclude, based on the scheme of Landis and Koch (Table 1), that the diagnostic procedure has a very good reproducibility and subsequently advise using it in daily practice, they were just lucky that the prevalence of the index condition Pindex was not too high or too low. The Pindex is always calculated after completing the study and is therefore not known in advance.

8. Interpretation of kappa coefficient: bias

Bias can be present when observers produce different patterns of ratings or outcomes [12]. No systematic pattern of scoring trends should be present by any observer. If a solid training phase is incorporated in the study protocol, bias should not be a problem [13]. In the IAMMM protocol a well-defined training phase is incorporated.

In conclusion, published reproducibility studies that do not mention the values of Po and Pindex, and in which the authors concluded an absence of clinical value because of a low observed kappa coefficient judged against the standards for strength of agreement provided by Landis and Koch [14], have to be interpreted with caution.

How to deal with the relation between kappa coefficient and Po and Pindex in reproducibility studies will be elaborated later (“The problem of the relation between the prevalence of the index condition and the kappa coefficient”, section “Influencing the prevalence of index condition in advance”).

V. Developing reproducibility studies: general aspects

1. Nature of the diagnostic procedure to be evaluated in a study

The first step, before starting a reproducibility study of one or more diagnostic procedures in M/M Medicine, is to be clear about the nature of the diagnostic procedure(s) to be evaluated. In reproducibility studies and in daily medical practice, it is essential to realise the difference between a diagnosis and a syndrome, and their relation to diagnostic procedures.

Diagnosis.

In a genuine diagnosis, by definition the aetiology and prognosis of the disease are known, for instance bacterial meningitis.

Syndrome.

A syndrome is a combination of signs and symptoms that appear together with a high frequency in a certain population, for instance a sacroiliac syndrome or low back pain. The aetiology, however, is unknown or diverse.

Diagnostic procedure.

In (M/M) medicine, diagnostic procedures are the constituent parts of a whole diagnostic arsenal that finally can lead to a particular diagnosis or syndrome.

A diagnostic procedure is a procedure, performed by a clinician, to identify and/or objectify in a qualitative (subjective) manner a clinical symptom of the subject. In both genuine diagnoses and syndromes, diagnostic procedures are needed. We can rarely rely on one single diagnostic procedure to make a diagnosis or define a syndrome.

For example, the single finding of the absence of an Achilles tendon reflex does not constitute a lumbar radicular syndrome. The additional combination of findings of radiating pain, sensory deficit, motor deficit and a positive Lasègue is necessary to conclude that a lumbar radicular syndrome is present. Since we are dealing with a syndrome, the aetiology can be either an intervertebral disc prolapse or a tumour in the intervertebral foramen, both with lumbar nerve root involvement. In our daily practice we are dealing with many non-specific clinical conditions, for instance low back pain. Since the aetiology is unknown in 85% of low back pain cases, we have to rely principally on diagnostic procedures to form syndromes of low back pain.

Also, in our educational systems, many diagnostic procedures are taught to the students as a “diagnostic” procedure. An example is the diagnostic procedure for restricted passive cervical rotation. The students just learn how to perform the whole procedure of passive cervical rotation (setting of the hand, applied force etc.). Such a restriction can have many reasons and it therefore gives no information about a particular diagnosis or syndrome as such.

Therefore, a combination of diagnostic procedures has to be performed, which all together point in the same direction towards a particular clinical syndrome or diagnosis. In summary, before starting a reproducibility study of a diagnostic procedure(s) in M/M Medicine observers have to agree about its nature and have to realise that:

- a. A single diagnostic procedure is never related to a particular diagnosis or syndrome.

In a reproducibility study of a single diagnostic procedure, just the reproducibility of the execution of the whole performance of the diagnostic procedure and the judgement of the observers is evaluated (for instance a positive or negative judged Patrick Test).

- b. Different diagnostic procedures are related to a particular syndrome.

In a reproducibility study of a set of diagnostic procedures, just the reproducibility of the combination of the different diagnostic procedures in relation to a “Syndrome” is evaluated (for instance the absence [no] or presence [yes] of a sacroiliac syndrome). In this case the different diagnostic procedures must be mutually independent for the observers (see section “Combinations of a few different diagnostic procedures: mutual dependency”).

- c. Several diagnostic procedures are related to a particular diagnosis.

In a reproducibility study of a set of diagnostic procedures, the reproducibility of the combination(s) of the different diagnostic procedures in relation to a diagnosis is evaluated (for instance the absence [no] or presence [yes] of international criteria for rheumatoid arthritis of a knee). In this case the different diagnostic procedures must also be mutually independent for the observers (see section “Combinations of a few different diagnostic procedures: mutual dependency”).

2. Number of diagnostic procedures evaluated in reproducibility studies

2.1 Too many diagnostic procedures

Reproducibility studies in non-specific clinical conditions, for instance low back pain, sometimes evaluate a large number of diagnostic procedures at the same time, for instance all diagnostic procedures in the lumbar region. In these kinds of reproducibility studies, many of the diagnostic procedures show low kappa coefficients in the end, and subsequently it is concluded that these diagnostic procedures have no clinical value.

As already explained in the sections “Interpretation of the kappa coefficient” and “Interpretation of kappa coefficient: dependency of the overall agreement” (under “IV. Reproducibility studies: kappa statistics”), the prevalence of the index condition Pindex and the overall agreement Po greatly influence the final kappa coefficient of a study. Since data on Po and Pindex are frequently lacking in studies evaluating many diagnostic procedures at the same time, a definite conclusion about the reproducibility of the diagnostic procedures with low kappa coefficients cannot be drawn.

The largest flaw of this kind of reproducibility study with many diagnostic procedures is the absence of a training period. As a predictable consequence, a low overall agreement Po is obtained for many diagnostic procedures, and therefore a low kappa coefficient.

2.2 Combinations of a few different diagnostic procedures: mutual dependency

As already mentioned in the section “Nature of the diagnostic procedure to be evaluated in a study”, reproducibility studies can also evaluate a combination of diagnostic procedures in relation to the existence of a particular syndrome or diagnosis. In M/M Medicine, a combination of diagnostic procedures is frequently used in relation to sacroiliac syndromes. It was also stated in paragraph 1 that the individual diagnostic procedures in this combination have to be mutually independent. How can the mutual dependency of the diagnostic procedures investigated in reproducibility studies be evaluated?

Mutual dependency of diagnostic procedures.

Kappa statistics are normally used for the agreement between observers—the inter-observer reproducibility—as illustrated in Fig. 15. In this example of a 2 × 2 contingency table we have two observers A and B.

The same kappa statistics can be used to determine the mutual dependency between diagnostic procedures used in a study.

Instead of the two observers A and B, we now use per observer (A) a set of two diagnostic procedures (Test I and Test II). The agreement and disagreement between Test I and Test II of the examined subjects is likewise estimated in a 2 × 2 contingency table (Fig. 16).

Based on the data in the boxes of Fig. 16, a kappa coefficient can be calculated. A kappa coefficient of ≥0.40 means that there is a probability that Test I and Test II (Fig. 16) are mutually dependent diagnostic procedures for observer A. Also, in this case the values of the Po and Pindex are necessary for proper interpretation of the kappa coefficient.

The reason for such a mutual dependence of tests is that when observer A judges Test I positive, he subsequently and unconsciously also judges Test II positive. In a previous reproducibility study, the problem of evaluating too many diagnostic procedures in the same reproducibility study was illustrated [15]. In this study, three observers were involved and used 6 SI-Tests to reach a final conclusion about the presence of an SI-joint dysfunction. The data of two observers A and B are used as an example. In the reproducibility study 6 SI-joint Tests (I, II, III, IV, V and VI) were used. Based on the 6 SI-joint Tests, observers A and B had to judge whether or not the examined subjects had the SI-joint dysfunction syndrome.
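The procedure described above, with two tests of one observer taking the place of two observers, can be sketched as follows. The per-subject results are hypothetical and serve only to illustrate the mechanics; the function names `two_by_two` and `kappa_from_cells` are likewise assumptions.

```python
def two_by_two(first, second):
    """Count the four cells for two equally long lists of 0/1 judgements."""
    if len(first) != len(second) or len(first) == 0:
        raise ValueError("both tests need the same, non-zero number of subjects")
    pairs = list(zip(first, second))
    a = sum(1 for x, y in pairs if x and y)          # positive / positive
    b = sum(1 for x, y in pairs if x and not y)      # positive / negative
    c = sum(1 for x, y in pairs if not x and y)      # negative / positive
    d = sum(1 for x, y in pairs if not x and not y)  # negative / negative
    return a, b, c, d


def kappa_from_cells(a, b, c, d):
    """Kappa coefficient from the four cell counts of a 2 x 2 table."""
    n = a + b + c + d
    po = (a + d) / n
    pc = ((a + b) * (a + c) + (c + d) * (b + d)) / n**2
    if pc == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (po - pc) / (1 - pc)


# HYPOTHETICAL judgements of observer A in 10 subjects (1 = positive).
test_i = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
test_ii = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]

kappa = kappa_from_cells(*two_by_two(test_i, test_ii))
possibly_dependent = kappa >= 0.40  # threshold used in the text
print(f"kappa = {kappa:.2f}, possibly mutually dependent: {possibly_dependent}")
```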

Instead of showing a separate 2 × 2 contingency table for each observer A and B of all possible combinations of two SI-Tests, the data are summarised in one single table (Fig. 17).

In the uppermost black row, the 6 SI-Tests I to VI are listed. In the far-left black column, these Tests I to VI are listed again, from top to bottom. In the second column from the left, the observers A and B are listed within each SI-Test row. In the next columns to the right, the kappa coefficients for each observer A and B per SI-Test I to VI are shown. These kappa coefficients are calculated based on the principles used in the 2 × 2 contingency table presented in Fig. 16.

Fig. 17 has to be read in the following way. If one wants to look for a mutual dependency between Test V and Test VI of observer A, the first step is to follow the black dashed/dotted line with arrow to the right, starting from observer A in the upper left square, until you reach the square under number V of the SI-Tests in the top row. Next, from this position, follow the vertical column of this Test V downwards (black dashed line with arrow downwards) until you reach the horizontal row corresponding to Test VI of observer A. The kappa coefficient you will find in this case is +0.89 (see the square in the lower right corner of the table in the figure). The kappa coefficient +0.52, depicted beneath that of +0.89, illustrates the same relation between Test V and VI but now for observer B. Both kappa coefficients, 0.89 and 0.52, are above 0.40, and when using the standards for strength of agreement provided by Landis and Koch, both kappa coefficients demonstrate a possible mutual dependency of the diagnostic procedures.
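A summary table of this kind amounts to calculating a kappa coefficient for every pair of tests within one observer. The sketch below does this for three tests with hypothetical per-subject results; it does not reproduce the data behind Fig. 17, and the names `cohen_kappa`, `results_observer_a`, `pairwise` and `flagged` are assumptions.

```python
from itertools import combinations


def cohen_kappa(first, second):
    """Kappa coefficient for two equally long lists of 0/1 judgements."""
    n = len(first)
    if n == 0 or n != len(second):
        raise ValueError("both lists need the same, non-zero length")
    agree = sum(1 for x, y in zip(first, second) if x == y)
    pos_first, pos_second = sum(first), sum(second)
    po = agree / n
    pc = (pos_first * pos_second + (n - pos_first) * (n - pos_second)) / n**2
    if pc == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (po - pc) / (1 - pc)


# HYPOTHETICAL judgements of one observer in 10 subjects (1 = positive).
results_observer_a = {
    "I": [1, 0, 1, 0, 1, 0, 1, 0, 1, 0],
    "V": [1, 1, 1, 1, 0, 0, 0, 0, 0, 0],
    "VI": [1, 1, 1, 1, 0, 0, 0, 0, 0, 1],
}

# One kappa coefficient per pair of tests, as in one observer's half of the table.
pairwise = {
    (s, t): cohen_kappa(results_observer_a[s], results_observer_a[t])
    for s, t in combinations(results_observer_a, 2)
}
# Pairs at or above the 0.40 level used in the text as a sign of possible dependency.
flagged = [pair for pair, k in pairwise.items() if k >= 0.40]

for pair, k in pairwise.items():
    print(pair, f"{k:+.2f}")
print("possibly mutually dependent:", flagged)
```

Repeating the same calculation for the second observer gives the second value in each cell of the summary table.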

2.3 Combinations of a few different diagnostic procedures: mutual dependency of diagnostic procedure and final “syndrome diagnosis”

In M/M Medicine in general and in SI-joint dysfunction syndromes in particular, reproducibility studies use combinations of diagnostic procedures to make a final judgement about the existence of a clinical sign or syndrome. We use again the example of the study mentioned in section “Combinations of a few different diagnostic procedures: mutual dependency” of this section. Observers A and B had to judge, based on six SI-Tests I to VI, whether or not a SI-joint dysfunction syndrome existed [16].

To evaluate which of the six SI-Tests (I to VI) the observers (unconsciously) have used for their final judgement of SI-joint dysfunction syndrome, kappa statistics can be applied again.

The data for estimation of the mutual dependency between a single SI-Test and the final judgement of a SI-joint dysfunction syndrome (= syndrome diagnosis) of Observer A are presented in a 2 × 2 contingency table of Fig. 18.

Instead of showing a separate 2 × 2 contingency table for each observer A and B for all SI-Tests and the final conclusion of a SI-dysfunction syndrome, the complete data are summarised in one single table (Fig. 19).

The kappa coefficients in the dashed boxes in the right column are above 0.40. When using the standards for strength of agreement provided by Landis and Koch, this indicates that both observers mainly (and unconsciously) use SI-Test V and VI for their final judgement. Observer A also probably uses SI-Test IV for his final judgement. The other SI-Tests I to IV are hardly involved in the final judgement of observer A and B.

Another flaw of reproducibility studies evaluating different diagnostic procedures for the same clinical phenomenon (for instance different SI-Tests for SI-joint dysfunction) is the fact that it is almost never clear to what “functional system” the different diagnostic procedures are related. Pain provocation SI-Tests and motion pattern SI-Tests (Vorlauf phenomenon) are located in two different functional systems: the nociceptive system and the postural system. The outcome of a diagnostic procedure has to be in the same functional system as the diagnostic procedure as such.

For instance, SI-joint provocation diagnostic procedures should have a numeric pain score as their outcome.

Reproducibility studies, using combinations of several diagnostic procedures to make a final judgement of the existence of a clinical sign or syndrome and not evaluating the mutual dependency of the diagnostic procedures and/or mutual dependency of diagnostic procedures and final judgement, have no clinical value.

The same is true for reproducibility studies that evaluate a set of diagnostic procedures for a single diagnosis and advocate the use of a minimal number of positive diagnostic procedures to confirm the final judgement, for instance 3 out of 6 diagnostic procedures.

Considering the amount of work involved in reproducibility studies of the many diagnostic procedures that have been developed over six decades in M/M Medicine, it is advisable to evaluate only one diagnostic procedure per reproducibility study. Secondly, when developing a new diagnostic procedure for M/M Medicine, it is advisable to perform a reproducibility study before publishing the new diagnostic procedure.

2.4 Large number of different diagnostic procedures in a “diagnostic protocol”

In some reproducibility studies, the observer(s) have to classify a subject within a particular system using a diagnostic protocol with a large number of diagnostic procedures. A well-known example is the McKenzie System, which distinguishes several different syndromes, for instance for low back pain [17,18,19]. However, frequently the single diagnostic procedures were not evaluated with respect to their reproducibility. Although observers may agree to a large extent about their final judgement when classifying a subject with the diagnostic protocol, it is unclear which diagnostic procedure(s) or combination of diagnostic procedures the observers used for their conclusion. Reproducibility studies of this kind, using a diagnostic protocol with a large number of diagnostic procedures, should incorporate only diagnostic procedures with proven reproducibility. Besides the mutual dependency of the diagnostic procedures used, their dependency with the final judgement has to be evaluated in the statistical analysis of the reproducibility study, as illustrated in sections “Combinations of a few diagnostic procedures: mutual dependency” and “Combinations of a few different diagnostic procedures: mutual dependency of diagnostic procedure and final ‘syndrome diagnosis’” (under “V. Developing reproducibility studies: general aspects”).

3. Hypothesis of the diagnostic procedure in a reproducibility study

The hypothesis of a diagnostic procedure as such can influence the final result of a reproducibility study. More precisely, there is a relation between the extent of agreement (read: kappa coefficient) and the hypothesis assumed by the observers participating in the study. In general, the hypothesis is what the observers assume their diagnostic procedure is really supposed to test. In the case of a simple hypothesis, such as range of motion, there is no problem. The problem arises when observers simply adopt the hypothesis of a diagnostic procedure from their textbooks or from what they were taught in their M/M Medicine courses. A well-known example is the mobility of the sacroiliac joint (SI-joint). In M/M Medicine, a vast number of SI-joint Tests have been developed, all supposedly testing the mobility of the SI-joint. Looking carefully and critically at all these different SI-joint Tests, we have to question whether they all evaluate the same aspect of SI-joint mobility, especially because they differ substantially in their performance.

Although it has been proven in cadaver studies that mobility of the SI-joint exists [20,21,22], it is impossible, even for the most experienced observer, to test the mobility of the SI-joint manually. Nevertheless, in many reproducibility studies involving SI-joint Tests, this incorrect hypothesis is still the starting point. In a reproducibility study, an incorrect hypothesis as such can influence the observer agreement and consequently the final kappa coefficient of the study. Because it is essential to understand the effect of the hypothesis, two examples from previously performed reproducibility studies are presented to illustrate this phenomenon. In a former reproducibility study, 3 observers (A, B, C) wanted to evaluate the reproducibility of hypo-mobility of the SI-joint, based on 6 SI-Tests (I to VI) [15, 23]. Their hypothesis was that all these diagnostic procedures could demonstrate the presence or absence of mobility of an SI-joint. The three well-experienced observers (all were M/M Medicine course leaders) adopted the hypotheses of the 6 SI-Tests from the literature. In Fig. 20 the kappa results are listed per pair of observers (A↔B, A↔C, B↔C), per SI-Test (I to VI), and for the final judgement of the absence or presence of SI-joint hypo-mobility (SI-dysfunction syndrome diagnosis).

Note that all kappa coefficients between pairs of observers are below 0.60, both for the individual SI-Tests I to VI and for the final judgement of the absence or presence of SI-joint hypo-mobility.

In a second reproducibility study [24], two observers (A, B) from the previous study mentioned above wanted to evaluate the reproducibility of SI-joint dysfunction based on 3 SI-joint Tests (Test I, Test II, Test III from the above-mentioned first study). The observers first renounced their previous hypothesis for the three SI-Tests, namely that all three diagnostic procedures could determine the extent of SI-mobility. Secondly, by looking very precisely at all aspects of the performance of the diagnostic procedures and their judgement, observers A and B concluded by mutual deliberation that all three SI-Tests measured increased muscle tone of different muscle groups related to the lumbo-sacral-hip complex. Because no structural abnormalities were found, an SI-joint dysfunction was assumed. The observers argued that increased muscle tone led to motion restriction and resistance at the end of the passively performed procedure. Based on these 3 SI-Tests, the observers had to judge whether or not SI-joint dysfunction existed. In Fig. 21, the 2 × 2 contingency table of this study is presented together with the kappa coefficient, the prevalence of the index condition and the overall agreement.

Note that the kappa coefficient has risen to 0.70 just by changing the hypothesis of three SI-Tests (I, II, III) used in this reproducibility study. In the first study (see Fig. 20) the kappa coefficients of SI-Tests I, II and III were 0.11, −0.08 and −0.05 respectively.

Whatever diagnostic procedure is selected for a reproducibility study, the whole diagnostic procedure and its final judgement have to be analysed step by step, so that the observers agree about what they think the diagnostic procedure really tests.

Based on this agreement, the observers can define a more plausible hypothesis for the diagnostic procedure, which can completely contradict the hypothesis stated in the literature. Therefore, before analysing the diagnostic procedure, sometimes the originally described diagnostic procedure in the literature has to be renounced.

4. Characteristics and number of observers to be involved in a study

4.1 Number of observers

In published reproducibility studies, the number of observers participating in the study varies from 2 to sometimes 10. With a view to better clinical applicability of a diagnostic procedure, some authors advocate the use of more than two observers in a reproducibility study. These authors simply argue that the more observers agree about a diagnostic procedure, the better the reproducibility properties of that diagnostic procedure are.

However, this assumption is based on a serious logical error. Reproducibility studies are primarily meant to provide us with information about all the aspects of the reproducibility properties of a diagnostic procedure. This means that the number of observers in essence has no relation to the reproducibility properties of a diagnostic procedure as such in a reproducibility study. Before starting a reproducibility study, the two observers have to agree about all the details of the performance of the diagnostic procedure and its final judgement.

As will be explained in the reproducibility protocol format (see “VII. Protocol format reproducibility study”) this agreement is acquired by introducing a training phase in the protocol format of the study. If in a reproducibility study several observers who have not passed the training phase of the protocol are used, the final low kappa coefficients reflect more the personal interpretation or the comprehension of the non-trained observers instead of the reproducibility properties of the evaluated diagnostic procedure.

Therefore, only two observers are needed in a reproducibility study if only the reproducibility properties of a diagnostic procedure have to be evaluated.

If a reproducibility study is meant to evaluate the effect of education on several participating observers by implementing several training phases in the study protocol, more than two observers can be used to participate in the study [25].

4.2 Characteristics of observers

In many reproducibility studies, observers with different levels of skill are involved. These levels are used as a predictive or explanatory factor for the level of the kappa coefficients found by the different observers involved in the study. The same objections apply to using observers with different levels of skill as to using more than 2 observers in a study.

Reproducibility studies are primarily meant to provide us with information about all the aspects of the reproducibility properties of a diagnostic procedure. This means that the level of skill of the observers in essence has no relationship with the reproducibility properties of a diagnostic procedure as such. Before starting a reproducibility study, the observers have to agree in the training phase, independently of their personal skills, about all the details of the performance of the diagnostic procedure and its final judgement.

As will be explained in the reproducibility protocol format (see “VII. Protocol format reproducibility study”), this agreement is acquired by introducing a training phase in the protocol format of the study when only the reproducibility properties of a diagnostic procedure have to be evaluated. If a reproducibility study uses several observers who have not passed a training phase of the protocol, the kappa coefficient finally obtained reflects, for instance, the personal interpretation of the well-experienced observer and the less experienced student's comprehension of the evaluated diagnostic procedure, rather than the reproducibility properties of that diagnostic procedure.

Over the years of their profession, well-experienced practitioners in M/M Medicine have unconsciously developed their own personal interpretation of the performance and the judgement of a diagnostic procedure. As a consequence, their diagnostic procedure may differ from the diagnostic procedure originally described in the literature. In students, a lack of experience with the diagnostic procedure may play a role and influence the final kappa coefficient. Our protocol format (see “VII. Protocol format reproducibility study”, section “Training period”) emphasises implementing a training phase for each observer, irrespective of the level of skill. Only then is standardisation of the performance and judgement of a diagnostic procedure guaranteed.

5. Number of subjects to be involved in a reproducibility study

In previous editions of this protocol, a total of 40 subjects in the study phase was arbitrarily chosen as a “statistical minimum” for these kinds of studies. From a practical point of view, the round number of 40 was chosen to make these reproducibility studies relatively easy and cheap to perform. In general, a statistician advised that 40 subjects were sufficient for simple reproducibility studies with a dichotomous outcome and kappa statistics. Nowadays, sample size calculations based on statistical power are advised to estimate study sample sizes. However, such calculations are only possible when a null hypothesis can be formulated, as in randomised controlled trials (RCT). Because kappa statistics are not generally recommended for null hypothesis testing, sample size calculations based on power are not strictly relevant in reproducibility studies with a dichotomous outcome [13]. More important are the size and stability of the estimates, as determined by the width of the confidence intervals. Kappa statistics were designed for descriptive purposes and as a basis for statistical inference, but they are typically not used as a null hypothesis-testing statistic [13, 26]. Other approaches have been developed, but these were mainly meant for multiple observers with a dichotomous outcome variable [26].

It is very important to realise, and strongly related to the problem of the sample size of a reproducibility study, that the kappa coefficient of a diagnostic procedure is not an absolute measure as such. Its value always depends on the prevalence of the index condition Pindex and, to a lesser degree, on the overall agreement Po (see “IV. Reproducibility studies: kappa statistics”, sections “Interpretation of kappa coefficient: dependency of the overall agreement” and “Interpretation of kappa coefficient: dependency of the prevalence of the index condition Pindex”). This means that the same kappa coefficient can be found at different levels of Po and Pindex. Furthermore, in a reproducibility study, the kappa coefficient and Pindex are only related to the positively judged diagnostic procedures. In Fig. 22, the squares with data a (yes/yes), b (yes/no) and c (no/yes) are exclusively decisive for the final kappa coefficient. The data d (no/no), representing the negatively judged diagnostic procedures the observers also agree about, are not included in the kappa coefficient as a measure of the reproducibility of a diagnostic procedure. Only the overall agreement Po concerns both the positively and the negatively judged diagnostic procedures the observers agree about, which are depicted in squares a (yes/yes) and d (no/no).
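The remark above that the width of the confidence interval matters more than statistical power can be made concrete with a small sketch. It assumes the simple large-sample approximation for the standard error of kappa, SE = sqrt(Po(1 − Po) / (n(1 − Pe)²)), which is not given in this protocol and is used here only for illustration. The function name `kappa_ci_half_width`, the symbol Pe (agreement expected by chance) and the example values Po = 0.80 and Pe = 0.50 are likewise assumptions.

```python
import math


def kappa_ci_half_width(po: float, pe: float, n: int, z: float = 1.96) -> float:
    """Approximate half-width of the confidence interval around kappa.

    Assumption: simple large-sample standard error
    SE = sqrt(po * (1 - po) / (n * (1 - pe)**2)); z = 1.96 gives ~95 %.
    """
    se = math.sqrt(po * (1.0 - po) / (n * (1.0 - pe) ** 2))
    return z * se


# Illustrative values only: Po = 0.80 and Pe = 0.50 correspond to kappa = 0.60.
for n in (40, 80, 160):
    half_width = kappa_ci_half_width(po=0.80, pe=0.50, n=n)
    print(f"n = {n:3d}: kappa = 0.60 +/- {half_width:.2f}")
```

Under these assumptions the half-width is roughly 0.25 with 40 subjects and roughly 0.12 with 160 subjects, so halving the interval width requires about four times as many subjects.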

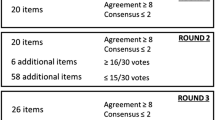
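As an illustration of how these measures follow from the cells of a 2 × 2 table, the sketch below computes Po, Pindex and kappa from the counts a, b, c and d as labelled in Fig. 22. It uses the standard Cohen's kappa definition, kappa = (Po − Pe) / (1 − Pe). The formula Pindex = (a + (b + c)/2) / n is an assumption here, since this section does not restate it, and the function name `agreement_measures` and both example tables are hypothetical.

```python
def agreement_measures(a: int, b: int, c: int, d: int) -> dict:
    """Po, Pindex and Cohen's kappa for a 2 x 2 table of two observers.

    Cells: a = yes/yes, b = yes/no, c = no/yes, d = no/no.
    """
    n = a + b + c + d
    po = (a + d) / n                      # overall agreement
    yes_1 = (a + b) / n                   # proportion "yes" of first observer
    yes_2 = (a + c) / n                   # proportion "yes" of second observer
    pe = yes_1 * yes_2 + (1 - yes_1) * (1 - yes_2)  # agreement expected by chance
    if pe == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    kappa = (po - pe) / (1 - pe)
    p_index = (a + (b + c) / 2) / n       # assumed definition of Pindex
    return {"po": po, "p_index": p_index, "kappa": kappa}


# Two hypothetical tables of 40 subjects with the same overall agreement.
balanced = agreement_measures(a=16, b=4, c=4, d=16)
skewed = agreement_measures(a=30, b=4, c=4, d=2)
for name, m in (("balanced", balanced), ("skewed", skewed)):
    print(f"{name}: Po = {m['po']:.2f}, Pindex = {m['p_index']:.2f}, "
          f"kappa = {m['kappa']:.2f}")
```

Both hypothetical tables have Po = 0.80, yet the kappa coefficient is 0.60 at Pindex = 0.50 and about 0.22 at Pindex = 0.85, which shows why a kappa coefficient should be reported together with Po and Pindex.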

The overall agreement Po as such is an absolute measure and better reflects the daily practice of a clinician dealing with diagnostic procedures. As a clinician, one wants to know how reproducible the diagnostic procedure is, both for a positive and for a negative final judgement. The overall agreement Po is the most appropriate measure for conveying the relevant information in a 2 × 2 table and is the most informative for clinicians [27]. However, when using dichotomous outcomes, we have to realise that the overall agreement Po is not corrected for chance. In the previous IAMMM protocols a minimum level of Po was chosen to guarantee kappa coefficients ≥0.6 in the final study [28]. If the Po of a reproducibility study is 0.79, no kappa coefficient ≥0.6 can be obtained.
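The last statement can be checked numerically. The sketch below enumerates every 2 × 2 table with a fixed overall agreement and reports the largest Cohen's kappa that occurs. The sample size of 100 is a hypothetical choice, made only so that Po = 0.79 corresponds to whole counts, and the function names are assumptions for illustration.

```python
def cohen_kappa(a: int, b: int, c: int, d: int) -> float:
    """Cohen's kappa for a 2 x 2 table (a = yes/yes, b = yes/no, c = no/yes, d = no/no)."""
    n = a + b + c + d
    po = (a + d) / n
    yes_1, yes_2 = (a + b) / n, (a + c) / n
    pe = yes_1 * yes_2 + (1 - yes_1) * (1 - yes_2)
    return (po - pe) / (1 - pe)


def max_kappa_for_po(n_agree: int, n_total: int) -> float:
    """Largest kappa over all tables with n_agree agreements out of n_total.

    Requires n_agree < n_total, so that chance agreement never equals 1.
    """
    n_disagree = n_total - n_agree
    best = float("-inf")
    for a in range(n_agree + 1):          # split agreements over a and d
        d = n_agree - a
        for b in range(n_disagree + 1):   # split disagreements over b and c
            c = n_disagree - b
            best = max(best, cohen_kappa(a, b, c, d))
    return best


print(f"Po = 0.79: maximum kappa = {max_kappa_for_po(79, 100):.3f}")
print(f"Po = 0.80: maximum kappa = {max_kappa_for_po(80, 100):.3f}")
```

With 100 hypothetical subjects, the maximum kappa at Po = 0.79 stays just below 0.60, whereas at Po = 0.80 a kappa coefficient of 0.60 or slightly higher becomes attainable. For a balanced table with b = c and Pindex = 0.50, chance agreement is 0.50 and kappa reduces to 2Po − 1, which gives 0.58 at Po = 0.79 and 0.60 at Po = 0.80.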