Abstract

This paper introduces a new gaze-based Graphic User Interface (GUI) for Augmentative and Alternative Communication (AAC). In the state of the art, prediction methods to accelerate the production of textual, iconic and pictorial communication only by gaze control are still needed. The proposed GUI translates gaze inputs into words, phrases or symbols by the following methods and techniques: (i) a gaze-based information visualization technique, (ii) a prediction technique combining concurrent and retrospective methods, and (iii) an alternative prediction method based either on the recognition or morphing of spatial features. The system is designed for extending the communication function of individuals with severe motor disabilities, with the aim to allow end-users to independently hold a conversation without needing a human interpreter.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

- Augmentative and alternative communication

- Graphical user interfaces for disabled users

- Gaze based interaction

- Assistive technologies

1 Introduction

Many assistive technologies have been proposed to provide disabled people with means of communicating that enhance speech and language production, called Augmentative and Alternative Communication (AAC) methods. AAC methods include aids for language, speech and/or writing difficulties, and they are suited for different use cases, environments and contexts. AAC systems are not strictly related to a health condition, but they are addressed to a wide variety of functional needs of people of all ages and health conditions, such as learning difficulties, cerebral palsy, cognitive disabilities, head injury, multiple sclerosis, spinal cord injury, autism and more [1].

The term AAC refers to both low-tech and high-tech aided communication systems. Low-tech AAC systems are based on non-electronic devices that may use body gestures, expression or signs as communication media, for example, tangible boards and gaze communication boards such as the E-Tran (eye transfer) communication frame, which is a transparent board showing pictures, symbols or letters that can be selected by using gaze pointing and which must be placed between two people –one communicator and one translator. Hi-tech AAC systems are based on electronic devices such selection switches or gaze-based pointing boards [2].

Unless low-tech AAC systems are very inexpensive and simple to use, they often require a human interpreter that translates the user’s inputs into verbal commands and requests. Differently from low-tech assistive systems, hi-tech AAC systems can be easily suited for input by minimal movements (such as eye movements) and may increase users’ independence and autonomy from a human interpreter, thus increasing the quality of life to disabled persons. However, individuals with severe motor disabilities (e.g., locked-in syndrome, cerebral palsy, motor neuron disease, or spinal cord injury) are still prevented from enjoying the full benefits of AAC systems due to usability issues [3–6] and the constant need for support and assistance [7–10], often leading to the assistive technology non-use and abandonment [11].

As the COGAIN (COmmunication by GAze INteraction) network points out, most severe motor disabilities could take advantage of gaze-based communication technologies [12] since the eye movement control is usually the least affected by peripheral injuries or disorders, being directly controlled by the brain [13]. The muscles of the eyes are able to produce the fastest movements of the body, therefore they can be used as an efficient method to control user interfaces as alternative to a mouse pointer, touch-screen or voice-user interfaces. Moreover, eye gaze is an innate form of pointing since the first months of life, and natural eye movements are related to low levels of fatigue [14].

The term “gaze control” commonly refers to systems measuring the points of regard and wherein eye movements are used to control a device. Gaze control systems differ from each other by the user requirement that they aim to address, and the expertise that is asked to use gaze as an input device. Usually, gaze control is performed by pointing and dwelling on a graphic user-interface. When involuntary movements prevent eye fixations, blinks or simple eye gestures are used as switches.

In the state of the art, the most proposed gaze-based communication method is direct gaze typing, which uses direct gaze pointing to choose single letters on a keyboard displayed on a screen. In direct gaze typing, once a user selects a letter, the system returns a visual or an acoustic related feedback and the typed letter is then shown in the text field. Direct gaze typing communication can be very slow since human speech has high-speed entry rates (150–250 words per minute–wpm) compared to gaze typing on on-screen keyboards (10–15 wpm) [15]. Methods to speed-up the production of phrases and sentences are therefore needed. Different systems based on methods predicting words or/and phrases are proposed in the field to provide more efficient gaze text entry. However, the current prediction techniques force users to frequently shift gaze from the on-screen keyboard to the predicted word/phrases list, thus reducing the text entry efficiency and increasing cognitive load [15].

The aim of this paper is to propose a graphic user interface (GUI) that translates gaze inputs into communication words, phrases and symbols by new methods and techniques overcoming the limitations of currently used prediction methods for direct gaze speech communication. The GUI is an AAC system for both face-to-face and distance communication. The paper focuses on describing the following methods and techniques for writing and communicating by gaze control: (i) a gaze based information visualization technique, (ii) a prediction technique combining concurrent and retrospective methods, and (iii) an alternative prediction method based on the recognition of spatial features.

2 Design Methodology

2.1 Objectives

The AAC system here proposed is a gaze based GUI aiming to (i) avoid that the user’s gaze frequently shifts from the keyboard field to a separated text field (usually above the on-screen keyboard in traditional AAC systems) (ii) increase both efficiency and ease of use; and (iii) facilitate the selection of words or icons when a very high numbers of items have to be shown (e.g., a dictionary). Evaluating the usability and the user experience of the GUI with end-users is planned for future work.

2.2 Methods and Techniques

The GUI belongs to AAC systems that use gaze tracking for text entry for both face-to-face and distance communication. The GUI also provides users with both pictorial and iconic stimuli for people who are not able to read or write.

The system is designed by the following methods and techniques:

-

(i)

A gaze-based branched decision tree visualization technique, which arrays successive rounds of alphabetic letters to the right-hand side of the last choice, and depicts the choice, thus creating a directional path of all the choices made, until a word is formed.

-

(ii)

A retrospective and concurrent word prediction technique. The system may operate in two ways: (1) prospectively, to make it easier for the user to select the most likely next decision among the others and (2) retrospectively, to predict what a user would have meant to type, given a certain gaze input.

-

(iii)

A prediction method called Directed Discovery, which is based on the recognition of the spatial structures constituting items.

3 Results

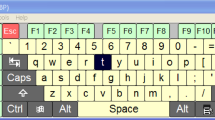

A gaze-based keyboard has been developed. The keyboard GUI navigation is based on three modules: (i) a branched decision-tree module, (ii) a word prediction module, and (iii) a direct discovery module.

3.1 Branched Decision Tree Module

The branched decision tree module prevents gaze shifting from an on-screen keyboard to predicted lists of words, and provides users with a navigable trail back through the history of choices made, so the user can easily look back, thus effectively undoing decisions made in error.

A branched decision tree is a bifurcation tree in which the next rounds of choices are arranged to one side of the last choice. The tree arrays the next round of choices to one side of the last choice, creating a directional depiction of the choices that have been made (Fig. 1).

The directionality allows simple navigation back up the tree, by looking towards the original choice. The directionality also provides momentum, because when making choices the user’s eyes always move in one direction (e.g., left to right), allowing the user to chase the solution. If a bifurcation tree has more choices than fit into the screen width, then the screen can scroll to the right.

3.2 Word Prediction Module

In branched decision trees, not all choices are equally likely to be selected. One example of an unequal likelihood decision tree is a gaze-based keyboard that presents a range of letters at each round, with every round selecting one letter until a word is formed (Fig. 2).

When typing a word, given that certain letters have been selected before, there is a higher likelihood that some other choices will be selected next. To make it easier for the user’s eyes to select the most likely next decision, but not preclude other less likely decisions, more likely choices may be emphasized. In the proposed GUI, emphasis is given by changing the visual properties of graphic elements (e.g., color, contrast) or drawing lines from the middle to the choices that are weighted by likelihood (Fig. 3).

Rather than only prospectively predict what a user will decide, the system is also able to retrospectively predict what a user did decide given an input. Given a path traversed by the eyes, it is possible to calculate all the possible meanings (including a degree of error) and choose the most likely choice intended. For example, if the user quickly sweeps their eyes through a gaze-based branch keyboard and selects an impossible combination of letters, then similar trajectories can be analyzed to see if they produce likely words, and all of these selections presented to the user at the final round to choose from (Fig. 4).

The system combines post-predictions with forward-predictions to present complete decisions as choices before reaching the final round (Fig. 5). For example in a keyboard, before a word is complete, one choice may be a complete phrase of words rather than a single letter.

3.3 Direct Discovery Module

A new selection method called Directed Discovery has been proposed to accommodate situations where selection from pre-existing objects is not appropriate, such as when there are so many choices that selection by sight would be inefficient (e.g., selecting words from a dictionary), when pictorial work is being created (e.g., drawing), or when abstract non-textual elements such as iconic objects are shown (e.g., peace).

Because gaze is particularly suited to make visual decisions, the Directed Discovery module shows to users’ eyes a range of graphic possibilities. However, differently from the word prediction module, the direct discovery method involves the user in directly creating the range of possibilities that (s)he will decide on, from sub-sequential rounds of shapes, and deciding which features change until the last choice is selected. The method is based on the theory that visual overt attention is guided by both task-related needs (top-down process) [16] and the visual saliency of certain features of an object (bottom-up processes), e.g., edge, orientation or termination of edges [17].

Different techniques using the Directed Discovery method have been created to allows users to make gaze-based discovery of non-pre-existing choices: (1) the Evolution technique; (2) the Directed Morph technique; (3) the Sculpture technique. Each technique uses blob-like graphic elements as nodes of the branched decision tree which are sub-sequentially linked to other children nodes, and final choices as leaves of the tree, i.e., elements with no children.

(1) Evolution technique. The technique randomly mutates the graphic element and lets the user’s visual overt attention evolve the product towards the desired result, through cycles of mutation and selection. These cycles of selection of the fittest would evolve the element towards the desired result, even if that result had never been seen before. Eye fixations last only fractions of a second (usually 200 ms), so preference selection may happen quickly, allowing many iterations of evolution to occur in a short time. In the Evolution technique, a text entry system is proposed whereby blob-like graphic elements are gradually mutated into the shape of the word required. At some point, the blob will unambiguously match a single word (Fig. 6).

Selecting a word from its outline makes text entry more similar to the way humans recognize words when reading, i.e. by the shape of the word not by the individual letters as current keyboards do [18]. Humans perceive words by their first, middle and last letters, which spatial relation gives them a peculiar shape, commonly called Bouma shape [19, 20]. Word recognition is based on visual gestalten, i.e. structured spatial wholes, which help the perceiver to rapidly recognize words among many others. The Evolution technique allows users to recognize words by the gradual mutation or the directed morph of a range of Bouma shapes, until one of them unambiguously matches to a single word.

(2) Directed Morph technique. In some circumstances the Evolution technique can take too long to produce the result needed. An alternative technique called Directed Morph has been designed to allow non-random mutation to occur, based on prediction of the desired result through cycles of mutation and selection over discrete rounds. These predictions may present choices that are completely resolved decisions (e.g., “this blob looks like the word ‘hello’, so the word ‘hello’ will present as a choice”), or it may result in presenting families of choices (Fig. 7), based on some defining characteristics of predictions (e.g., “if a blob looks like the Eiffel tower, then thin struts may be added to it”). The predictions may be offered alongside other non-predicted choices, so the user is not restricted by incorrect predictions.

Prediction can occur more effectively if the prediction system has knowledge of context. A categorical search tree is offered to users to work within a known sphere of knowledge chosen from a master list of conceptual or semantic domains (e.g. emotions, activities, tasks, etc.) rather than only perceptual categories. The prediction system may use the knowledge of the context to predict the shape/object that is being selected. In one example of Directed Morph, a text entry system is proposed that whereby blob-like graphic elements are gradually resolved into words (Fig. 8). Using prediction and context, a range of possible words may be offered alongside other mutated blobs, allowing the correct word to be selected using fewer choices.

(3) Sculpture technique. A different type of Directed Discovery GUI is not based on separate choices over discrete rounds, but constructs the solution continuously and in-place. The Sculpture technique is particularly suited for people who are not able to read or write and need to use pictorial and iconic stimuli to communicate. Instead of the sites for modification being randomly selected, they can be chosen by the user’s gaze. One simple form of sculpting is for the user’s eyes as form guidance, to locate an area of discrepancy. If a particular feature is missing or incorrect, it will attract the user’s visual overt attention and that area can be selectively mutated (Fig. 9).

Rather than use mutation, a more controlled form of sculpture is possible by parametric modification of the element. With parametric modification, the attributes of the object are modified (e.g., offset, curve diameter), until they no longer provoke the user’s eye(s) fixation (Fig. 10). For example, if the object was an animal, and the user was attending to the head, the parametric search might change the size of the head until it no longer provoked fixation. Because parametric sculpture is not based on generating options for selection, there is no need to present multiple choices to the user. Changes may be presented in-place, with the object modified according to the user’s sculpting eye fixation.

Most parts of shapes have more than one attribute, so the user needs to be able to select which attribute to modify. One technique is to modify each attribute in turn, while observing the user’s attention, to discover which attribute causes the user’s fixation to increase or decrease, indicating that the shape is closer to that expected (Fig. 11).

Modifying one attribute at a time would be very slow when creating complex graphic elements, and the modification causes changes that may attract the user’s attention thus causing incorrect sculpting. A more efficient technique consists in modifying many attributes at once, over the entire object, and tracking which of the modifications is increasing or decreasing user’s visual overt attention. By a process of combinations, the sensitive parameters and their preferred directions can be identified while the element evolves its way towards the wanted result (Fig. 12).

The parametric modifications to the shape do not need to be in the spatial domain. Frequency-domain changes may be performed on the shape, so large parts vary at once. Frequency domain modification allows a complex image to be constructed, first with low-frequency fundamental shapes, then later with details (Fig. 13).

Prediction can be used with sculpture, but because sculpture shapes one graphic element rather than display a range of choices, predictive features are presented in a different way: The presentation of the object is optimized to weight the user’s visual attention towards the predicted result (Fig. 14). This weighting may be performed in a variety of ways, including modifying the brightness, color or position of parts of the displayed object.

4 Conclusion

One challenge of assistive technologies for communication is reaching effective word prediction methods for increasing the autonomy of people with severe motor disabilities. The main objective of the proposed Augmentative and Alternative Communication system is to empower the sensorimotor users’ abilities and allow end-users to independently communicate without needing a human interpreter. The system proposed follows new information visualization methods and techniques to prevent gaze shifting from a display keyboard to lists of graphic elements and facilitates the selection of items among complex data sets. The system is specifically designed for users whose residual abilities and functions include: (1) eye motor abilities, (2) language comprehension, decoding and production, (3) symbolic communication abilities. In future experimental work, the usability and the user experience of end-users will be evaluated.

References

Millar, S., Scott, J.: What is augmentative and alternative communication? An introduction. Augmentative communication in practice 2. University of Edinburgh CALL Centre, Edinburgh (1998)

Lancioni, G.E., Singh, N.N., O’Reilly, M.F., Sigafoos, J., Oliva, D., Basili, G.: New rehabilitation opportunities for persons with multiple disabilities through the use of microswitch technology. In: Federici, S., Scherer, M.J. (eds.) Assistive Technology Assessment Handbook, pp. 399–419. CRC Press, New York (2012)

Borsci, S., Kurosu, M., Federici, S., Mele, M.L.: Computer Systems Experiences Of Users With And Without Disabilities: An Evaluation Guide For Professionals. CRC Press, Boca Raton (2013)

Borsci, S., Kurosu, M., Federici, S., Mele, M.L.: Systemic User Experience. In: Federici, S., Scherer, M.J. (eds.) Assistive Technology Assessment Handbook, pp. 337–359. CRC Press, Boca Raton (2012)

Federici, S., Scherer, M.J.: The Assistive Technology Assessment Model and Basic Definitions. In: Federici, S., Scherer, M.J. (eds.) Assistive Technology Assessment Handbook, pp. 1–10. CRC Press, Boca Raton (2012)

Federici, S., Corradi, F., Mele, M.L., Miesenberger, K.: From cognitive ergonomist to psychotechnologist: a new professional profile in a multidisciplinary team in a centre for technical aids. In: Gelderblom, G.J., Soede, M., Adriaens, L., Miesenberger, K. (eds.) Everyday Technology for Independence and Care: AAATE 2011, vol. 29, pp. 1178–1184. IOS Press, Amsterdam (2011)

Mele, M.L., Federici, S.: Gaze and eye-tracking solutions for psychological research. Cogn. Process. 13(1), 261–265 (2012)

Mele, M.L., Federici, S.: A psychotechnological review on eye-tracking systems: towards user experience. Disabil. Rehabil. Assist. Technol. 7(4), 261–281 (2012)

Federici, S., Corradi, F., Meloni, F., Borsci, S., Mele, M.L., Dandini de Sylva, S., Scherer, M.J.: A person-centered assistive technology service delivery model: a framework for device selection and assignment. Life Span Disabil. 17(2), 175–198 (2014)

Federci, S., Borsci, S., Mele, M.L.: Environmental evaluation of a rehabilitation aid interaction under the framework of the ideal model of assistive technology assessment process. In: Kurosu, M. (ed.) HCII/HCI 2013, Part I. LNCS, vol. 8004, pp. 203–210. Springer, Heidelberg (2013)

Federici, S., Borsci, S.: Providing assistive technology in Italy: the perceived delivery process quality as affecting abandonment. Disabil. Rehabil. Assist. Technol. 1–10 (2014)

Bates, R., Donegan, M., Istance, H.O., Hansen, J.P., Räihä, K.J.: Introducing COGAIN: communication by gaze interaction. Universal Access Inf. 6(2), 159–166 (2007)

Leigh, R.J., Zee, D.S.: The Neurology of Eye Movements, vol. 90. Oxford University Press, New York (1999)

Saito, S.: Does fatigue exist in a quantitative measurement of eye movements? Ergonomics 35(5/6), 607–615 (1992)

Majaranta, P.: Communication and text entry by gaze. In: Majaranta, P., Aoki, H., Donegan, M., Hansen, D.W., Hansen, J.P., Hyrskykari, A., Räihä, K.J. (eds.) Gaze Interaction and Applications of Eye Tracking: Advances in Assistive Technologies, pp. 63–77. IGI Global, Hershey (2012)

Yarbus, A.L.: Eye Movements and Vision. Plenum Press, New York (1967)

Itti, L., Koch, C., Niebur, E.: A model of saliency based visual attention for rapid scene analysis. IEEE Trans. Pattern Anal. Mach. Intell. 20(11), 1254–1259 (1998)

Kintsch, W.: Models for Free Recall and Recognition. Models of Human Memory, vol. 124. Academic Press, New York (1970)

Bouma, H.: Visual interference in the parafoveal recognition of initial and final letters of words. Vision. Res. 13, 762–782 (1973)

Bouwhuis, D., Bouma, H.: Visual word recognition of three letter words as derived from the recognition of the constituent letters. Percept. Psychophys. 25, 12–22 (1979)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Mele, M.L., Millar, D., Rijnders, C.E. (2015). Beyond Direct Gaze Typing: A Predictive Graphic User Interface for Writing and Communicating by Gaze. In: Kurosu, M. (eds) Human-Computer Interaction: Interaction Technologies. HCI 2015. Lecture Notes in Computer Science(), vol 9170. Springer, Cham. https://doi.org/10.1007/978-3-319-20916-6_7

Download citation

DOI: https://doi.org/10.1007/978-3-319-20916-6_7

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-20915-9

Online ISBN: 978-3-319-20916-6

eBook Packages: Computer ScienceComputer Science (R0)