Abstract

Given a three-point fourth-order boundary value problem

such that

where \(p,q,r,s,f \in C[a,b]\), we combine the variational iteration method with a fixed point iteration process to construct an iterative scheme, called the variational-fixed point iteration method, that approximates the solution of three-point boundary value problems. From a practical point of view, the success of variational or weighted residual methods of approximation depends on a suitable selection of the basis functions. The method is self-correcting and leads to fast convergence. Numerical experiments show the effectiveness and accuracy of the proposed method.

Introduction

The numerical solution of boundary value problems is of great importance because of its wide application in scientific and technological research [1]. Many researchers have developed numerical methods, especially iterative methods, to approximate the solutions of different types of differential equations, see [2–5]. In recent years, there has been growing interest in iterative approximation techniques such as the variational iteration method and fixed point iteration [6]. The variational iteration method has been used over the years to obtain approximate solutions of some boundary value problems, see [7, 8]. Fixed point iteration, on the other hand, is a method of computing the fixed point of an iterated function; it is a well-known method of approximation, of which the variational iteration method can be regarded as a version, see [9, 10]. Many researchers have shown these methods to be powerful tools for solving boundary value problems [11]. However, there are noticeable shortcomings in their implementation, especially the use of an arbitrary function as a starting value: an inappropriate choice of starting function may affect the rate of convergence, see [11, 12].

In this paper, the proposed method is an elegant combination of the variational iteration method and the fixed point iteration method, with the finite element method used to determine the starting function.

Analysis of variational-fixed point iterative scheme

The variational-fixed point iteration combines the variational iteration method and the fixed point iterative process, with the finite element method supplying the starting function.

To illustrate the basic technique of the variational iteration method, we consider the following general differential equation

\[ Lu(x) + Nu(x) = g(x), \tag{1} \]

where L is a linear operator, N is a nonlinear operator and g(x) is the forcing term. According to the variational iteration method, see [13, 14], a correction functional of (1) can be constructed as follows

\[ u_{n+1}(x) = u_{n}(x) + \int_{0}^{x} \lambda(t)\left[ Lu_{n}(t) + N\tilde{u}_{n}(t) - g(t) \right] dt, \tag{2} \]

where \(\lambda\) is the Lagrange multiplier [15, 16] which can be determined by variational theory, thus

its stationary conditions can be obtained using integration by parts. The second term on the right is called the correction, and \(\tilde{u}_{n}\) is considered as a restricted variation, i.e. \(\delta \tilde{u}_{n}=0\). Thus, the Lagrange multiplier [17, 19] can be identified as

The solution is regarded as the fixed point of the correction functional [11] under a suitable choice of the initial approximation [21] \(u_{0}(x)\) at \(n=0\). We use the finite element method to determine the starting function, which avoids an arbitrary choice. A single iteration yields \(u_{1}\); when the process is repeated, the term to be integrated becomes larger and more cumbersome to handle, and the result of each iteration step diverges from the exact solution. For this reason we introduce a fixed point iterative process to overcome the difficulty. Thus we have the following theorem:
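The correction functional described above can be sketched on a deliberately simple model problem. The block below is a minimal illustration, not the paper's fourth-order scheme: it assumes the first-order problem \(u' + u = 0\), \(u(0)=1\), with the Lagrange multiplier \(\lambda = -1\) (the \(u\) term treated as a restricted variation) and the starting function \(u_{0}(x)=1\). Polynomials are stored as coefficient lists, and all helper names are hypothetical.

```python
from fractions import Fraction

# Polynomials are lists of coefficients: p[k] multiplies x**k.

def poly_add(p, q):
    """Coefficient-wise sum of two polynomials."""
    n = max(len(p), len(q))
    p = p + [Fraction(0)] * (n - len(p))
    q = q + [Fraction(0)] * (n - len(q))
    return [a + b for a, b in zip(p, q)]

def poly_diff(p):
    """Derivative of a polynomial."""
    return [k * c for k, c in enumerate(p)][1:] or [Fraction(0)]

def poly_int0(p):
    """Integral of a polynomial from 0 to x."""
    return [Fraction(0)] + [c / (k + 1) for k, c in enumerate(p)]

def vim_step(u):
    """One correction step for u' + u = 0 with the assumed multiplier -1:
    u_{n+1}(x) = u_n(x) - integral_0^x (u_n'(t) + u_n(t)) dt."""
    residual = poly_add(poly_diff(u), u)
    correction = poly_int0(residual)
    return poly_add(u, [-c for c in correction])

def vim(u0, steps):
    """Apply the correction functional `steps` times starting from u0."""
    u = u0
    for _ in range(steps):
        u = vim_step(u)
    return u

u3 = vim([Fraction(1)], 3)
print(u3)  # coefficients of 1 - x + x^2/2 - x^3/6
```

Each step adds one more Taylor term of \(e^{-x}\), which is the exact solution of this model problem. For higher-order problems the integrand grows with every step, which is the difficulty the text goes on to address.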

Theorem 1

Let (E, d) be a complete metric space and T be a self map on E. Further, let \(y_{0} \in E\) and let \(y_{n+1}=f(T,y_{n})\) denote an iteration procedure which gives a sequence \(\{y_{n}\}\). Then the Mann iteration process is defined for arbitrary \(y_{0}\) by

\[ y_{n+1} = (1-\alpha_{n})\,y_{n} + \alpha_{n}\,Ty_{n}, \quad n \ge 0, \]

where \(\{\alpha_{n}\}\) is a real sequence satisfying \(\alpha _{0}=1,\) \(0 \le \alpha _{n} \le 1\) for \(n \ge 0\) and \({\sum \nolimits _{n=0}^{\infty } \alpha _{n} = \infty }.\)
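As a small illustration of the iteration procedure in Theorem 1, the sketch below assumes the standard Mann form \(y_{n+1}=(1-\alpha_{n})y_{n}+\alpha_{n}Ty_{n}\) and applies it to a scalar contraction. The map `T`, the sequence `alpha` and the function name `mann_iterate` are hypothetical choices made only for this example.

```python
def mann_iterate(T, y0, alpha, steps):
    """Run y_{n+1} = (1 - alpha(n)) * y_n + alpha(n) * T(y_n) for n = 0..steps-1."""
    y = y0
    for n in range(steps):
        a = alpha(n)
        y = (1.0 - a) * y + a * T(y)
    return y

def T(y):
    # Hypothetical contraction on the real line; its fixed point is y = 2.
    return 0.5 * y + 1.0

def alpha(n):
    # alpha_0 = 1, 0 <= alpha_n <= 1, and the series sum(alpha_n) diverges.
    return 1.0 if n == 0 else 0.5

y = mann_iterate(T, 0.0, alpha, 60)
print(abs(y - 2.0))  # distance to the fixed point after 60 steps
```

With \(\alpha_{n}=1\) for every n the procedure reduces to plain (Banach/Picard) iteration \(y_{n+1}=Ty_{n}\); smaller \(\alpha_{n}\) damp each update.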

Proof

Let

such that

where \(p,q,r,s,f\in C[a,b]\), then the scheme

is obtained by combining the Mann and Banach fixed point iterations [6, 20], to yield

and converges for \(0\le \lambda _{n}\le 1\). Now let

Therefore, for any y(x) solution of the integral equation on [a, b]

where G(x, t) is the Green's function of the associated boundary value problem and v(x) is a solution of \(y^{(iv)}=0\) that satisfies the boundary conditions. Now if we let \(T:C^{1}[a,b]\rightarrow C^{1}[a,b]\) be defined by

then any solution y(x) of (6) is a fixed point of T, and T can be referred to as a fixed point operator. For the convergence of (3) and (5) we let

From Eq. (8) it follows that

Therefore Eqs. (9) and (10) become

Also, combining Eqs. (7) and (11) yields

Therefore schemes (3) and (5) are convergent. This scheme is used to approximate boundary value problems iteratively, starting from an arbitrary initial approximation \(y_{0}\) at \(n=0\). Since the variational iteration method and fixed point iteration methods are similar [10], we let \(y_{0}=u_{1}\) to avoid assuming an arbitrary function \(y_{0}\); the process is then carried out iteratively until convergence is obtained or the iteration is terminated. The finite element methods considered include the Galerkin method, the collocation method, the Rayleigh-Ritz method, etc. The Galerkin method is an approximate method for solving boundary value problems suggested by Galerkin [18], based on the requirement that the basis functions \(\phi _{0},\phi _{1},\phi _{2},\ldots ,\phi _{n}\) be orthogonal to the residual

\(i=0,1,2,3,\ldots ,n\). This gives rise to the following system of linear algebraic equations for the coefficients of the approximate solution

of the boundary value problem

such that

Therefore,

The weight functions are chosen using the concepts of inner product and orthogonality. The inner product of two functions on a given domain is

which is used to determine the starting function of the variational iteration method instead of an arbitrary choice. \(\square\)
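The fixed point operator T built from a Green's function in the proof above can be illustrated numerically on a much simpler problem. The sketch below is an analogue under stated assumptions, not the paper's three-point fourth-order case: it takes the second-order two-point problem \(y''=y-(1+\pi^{2})\sin (\pi x)\), \(y(0)=y(1)=0\), whose exact solution is \(\sin (\pi x)\), uses the known Green's function of \(y''\) with these conditions (so \(v(x)=0\)), and approximates the integral with the trapezoidal rule. All function names are hypothetical.

```python
import math

def green(x, t):
    """Green's function of y'' = f on [0, 1] with y(0) = y(1) = 0."""
    return t * (x - 1.0) if t <= x else x * (t - 1.0)

def apply_T(y, grid, f):
    """(Ty)(x_i) = integral_0^1 G(x_i, t) f(t, y(t)) dt, trapezoidal rule."""
    h = grid[1] - grid[0]
    rhs = [f(t, yt) for t, yt in zip(grid, y)]
    out = []
    for x in grid:
        vals = [green(x, t) * r for t, r in zip(grid, rhs)]
        out.append(h * (sum(vals) - 0.5 * (vals[0] + vals[-1])))
    return out

def picard(f, n=100, iters=30):
    """Iterate y <- Ty on a uniform grid, starting from y = 0."""
    grid = [i / n for i in range(n + 1)]
    y = [0.0] * (n + 1)
    for _ in range(iters):
        y = apply_T(y, grid, f)
    return grid, y

def f(t, y):
    # Right-hand side of the assumed model problem.
    return y - (1.0 + math.pi ** 2) * math.sin(math.pi * t)

grid, y = picard(f)
err = max(abs(yi - math.sin(math.pi * x)) for x, yi in zip(grid, y))
print(err)  # maximum grid error against sin(pi x)
```

Here T is a contraction because \(\max_{x}\int_{0}^{1}|G(x,t)|\,dt = 1/8 < 1\), so the iterates converge from any starting function; the remaining error comes from the quadrature.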

Numerical examples

In this section, two experiments are considered to demonstrate the present method:

Example 1

Consider

subject to the boundary conditions

The Galerkin method of approximation is used first, to determine the initial approximation of the variational iteration method; its trial function

is called the basis function and is the approximate solution we seek, where \(U_{0}(x)=x\), \(U_{1}(x)=x-x^{4}\), \(U_{2}(x)=x-x^{5}\).

We differentiate Eq. (14) successively to obtain the second and fourth derivatives and then substitute them in Eq. (13) to get the residual

The weight functions are chosen from the basis functions, using the concepts of inner product and orthogonality

This gives a system of linear equations, which is solved to obtain all the coefficients \(C_{i}\) as follows:

we obtain

Also

we get

Solving Eqs. (16) and (17) simultaneously, we obtain \(C_{1}=308/4331, C_{2}=42/4331\). Substituting these constants in (14) gives the approximate solution we seek.
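The Galerkin steps just described (trial function, residual, orthogonality conditions, linear system for the coefficients) can be sketched in exact arithmetic. The block below does not reproduce Example 1, whose equation is not restated here; it assumes the textbook model problem \(y''+y+x=0\), \(y(0)=y(1)=0\), with the hypothetical basis \(\phi _{1}=x(1-x)\), \(\phi _{2}=x^{2}(1-x)\) and trial function \(y=c_{1}\phi _{1}+c_{2}\phi _{2}\).

```python
from fractions import Fraction

# Polynomials are lists of coefficients: p[k] multiplies x**k.

def padd(p, q):
    n = max(len(p), len(q))
    return [(p[k] if k < len(p) else 0) + (q[k] if k < len(q) else 0)
            for k in range(n)]

def pmul(p, q):
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

def pdiff(p):
    return [k * c for k, c in enumerate(p)][1:] or [Fraction(0)]

def inner(p, q):
    """Inner product <p, q> = integral_0^1 p(x) q(x) dx."""
    return sum(Fraction(c) / (k + 1) for k, c in enumerate(pmul(p, q)))

def L(p):
    """Linear part of the assumed model equation: y'' + y."""
    return padd(pdiff(pdiff(p)), p)

phi = [[Fraction(0), Fraction(1), Fraction(-1)],               # x(1 - x)
       [Fraction(0), Fraction(0), Fraction(1), Fraction(-1)]]  # x^2(1 - x)
x_term = [Fraction(0), Fraction(1)]                            # the "+ x" term

# Orthogonality of the residual to each basis function:
# sum_j c_j <phi_i, L phi_j> = -<phi_i, x>.
A = [[inner(pi, L(pj)) for pj in phi] for pi in phi]
b = [-inner(pi, x_term) for pi in phi]

# Solve the 2x2 system by Cramer's rule.
det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
c1 = (b[0] * A[1][1] - A[0][1] * b[1]) / det
c2 = (A[0][0] * b[1] - b[0] * A[1][0]) / det
print(c1, c2)  # 71/369 7/41
```

Working with `Fraction` keeps the coefficients as exact rationals, in the same style as the values of \(C_{1}\) and \(C_{2}\) quoted above.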

The second step is to use the variational iteration method to determine the starting function of the fixed point iterative procedure; we construct the correction functional of (13) as follows:

at \(n=0\)

We let

We differentiate (20), i.e. \(t_{0}(x)\), successively to obtain its second and fourth derivatives and substitute them in Eq. (19) to have

When the process is repeated for further iterations, the function to be integrated becomes larger and more complex, and the iterates diverge from the analytical solution. For this reason, we let \(y_{0}=U_{1}(x)\), where \(U_{1}(x)\) is the function obtained after a single iteration, taken as the initial value for the fixed point iterative technique. The fixed point iterative procedure in scheme (12) can then be used as follows

at \(n=0\)

But

We differentiate Eq. (24) twice to get \(y_{0}^{\prime \prime }\) and then substitute it into Eq. (23) to obtain

To obtain \(y_{1}(x)\), we integrate (25) four times successively and impose the boundary conditions to get

We repeat the process at \(n=2,3, \ldots\) until the iteration is terminated or it converges to the analytical solution; after a few iterations we get

Example 2

Consider

subject to the boundary conditions

We let

which is the basis function, where \(U_{0}(x)=13x\), \(U_{1}(x)=x-x^{4}\), \(U_{2}(x)=x-x^{5}\).

We differentiate Eq. (27) successively to obtain the second and fourth derivatives and then substitute them in the given differential equation (26) to get the residual

The weight functions are chosen from the basis functions, using the concepts of inner product and orthogonality

This gives a system of linear equations, which is solved to obtain all the coefficients \(C_{i}\) as follows:

we obtain

Also

we get

Solving Eqs. (29) and (30) simultaneously, we obtain \(C_{1}=-635/4331, C_{2}=504/4331\). We then substitute these constants in (27) to get the approximate solution we seek

and

Next we apply the variational iteration method by constructing a correction functional of (26)

at \(n=0\)

We let

We differentiate (33), i.e. \(t_{0}(x)\), successively to obtain its second and fourth derivatives and substitute them in Eq. (32) to have

we simplify to get

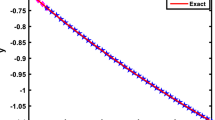

As the number of iterations increases, the function to be integrated becomes larger and more cumbersome, and the iterates diverge from the analytical solution at each iteration step. For this reason, we apply the fixed point iterative technique, using \(y_{0}=U_{1}(x)\), the result of a single iteration, as the starting value to avoid the use of an arbitrary function (Fig. 1).
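One step of the fixed point technique used in both examples, integrating a fourth-order right-hand side four times and then imposing the boundary conditions, can be sketched as follows. This is a minimal illustration under assumptions: the right-hand side is a polynomial \(g(x)\), and the three-point conditions \(y(0)=0\), \(y''(0)=0\), \(y(1/2)=0\), \(y(1)=0\) are hypothetical stand-ins, not the conditions of Example 1 or 2. The function names are likewise hypothetical.

```python
from fractions import Fraction

def integrate0(p):
    """Integral from 0 to x of a polynomial given by its coefficients."""
    return [Fraction(0)] + [Fraction(c) / (k + 1) for k, c in enumerate(p)]

def derivative(p, order):
    for _ in range(order):
        p = [k * c for k, c in enumerate(p)][1:] or [Fraction(0)]
    return p

def evaluate(p, x):
    return sum(c * x ** k for k, c in enumerate(p))

def solve(A, b):
    """Gauss-Jordan elimination in exact rational arithmetic."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        piv = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[piv] = M[piv], M[col]
        M[col] = [v / M[col][col] for v in M[col]]
        for r in range(n):
            if r != col:
                M[r] = [vr - M[r][col] * vc for vr, vc in zip(M[r], M[col])]
    return [M[r][n] for r in range(n)]

def solve_fourth_order(g, conditions):
    """Solve y'''' = g(x) with four conditions (deriv_order, point, value)."""
    particular = g
    for _ in range(4):                      # four successive integrations
        particular = integrate0(particular)
    # The constants of integration form a cubic c0 + c1 x + c2 x^2 + c3 x^3.
    monomials = [[Fraction(0)] * k + [Fraction(1)] for k in range(4)]
    A = [[evaluate(derivative(m, d), x) for m in monomials]
         for d, x, _ in conditions]
    b = [v - evaluate(derivative(particular, d), x) for d, x, v in conditions]
    c = solve(A, b) + [Fraction(0)] * (len(particular) - 4)
    return [ci + pi for ci, pi in zip(c, particular)]

half = Fraction(1, 2)
conds = [(0, Fraction(0), Fraction(0)), (2, Fraction(0), Fraction(0)),
         (0, half, Fraction(0)), (0, Fraction(1), Fraction(0))]
y1 = solve_fourth_order([Fraction(24)], conds)
print(y1)  # coefficients of x/6 - 7x^3/6 + x^4
```

In the scheme described in the text, \(g\) would be rebuilt from the previous iterate \(y_{n}\) at every step, so a routine of this kind would be called once per iteration.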

at \(n=0\)

But

We differentiate (36) twice to get \(y_{0}^{\prime \prime }\) and then substitute it into Eq. (35) to obtain

To obtain \(y_{1}(x)\), we integrate (37) four times successively and impose the boundary conditions to get

We repeat the process at \(n=2,3, \ldots\) until the iteration is terminated or convergence is achieved; after a few iterations we get

Discussion

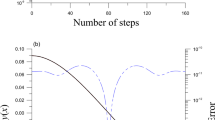

The accuracy and convergence of the method are of great significance in numerical experiments of this type. Accuracy measures how close the numerical solution is to the theoretical solution, while convergence measures how successive iterates approach the exact solution as the number of iterations increases. To assess the success of our method, the scheme was tested on numerical examples, whose results are presented in Tables 1 and 2. These tables compare the exact solution with the approximate solutions obtained by the Galerkin method, the variational iteration method and the variational-fixed point iteration method. With only a few iterations the order of the error is quite encouraging, which indicates a fast rate of convergence. As the iterations proceed, the error decreases and convergence is assured (Fig. 2).
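The two notions used in this discussion, accuracy as closeness to the exact solution and convergence as decreasing error over successive approximations, can be measured with a few lines of code. The sketch below is illustrative only: it does not use the data of Tables 1 and 2, and it substitutes Taylor polynomials of \(e^{x}\) on \([0,1]\) as hypothetical stand-ins for successive iterates. The helper names are assumptions.

```python
import math

def max_abs_error(approx, exact, a, b, n=100):
    """Largest |approx(x) - exact(x)| over n + 1 equally spaced points in [a, b]."""
    pts = [a + (b - a) * i / n for i in range(n + 1)]
    return max(abs(approx(x) - exact(x)) for x in pts)

def taylor_exp(order):
    """Taylor polynomial of exp(x) about 0, standing in for an iterate."""
    return lambda x: sum(x ** k / math.factorial(k) for k in range(order + 1))

# Error of three successively better approximations.
errors = [max_abs_error(taylor_exp(m), math.exp, 0.0, 1.0) for m in (2, 4, 6)]
for m, e in zip((2, 4, 6), errors):
    print(m, e)
```

A strictly decreasing error sequence of this kind is what the text refers to when it says the error decreases as the iterations proceed.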

Conclusion

In this paper we have shown the performance of the variational-fixed point iterative scheme for the solution of three-point boundary value problems with the help of some experiments, which indicate that it is a powerful and efficient technique with good convergence properties when compared with the existing methods. We conclude that the method has an advantage over the existing methods.

References

1. Wazwaz, A.M.: The variational iteration method for analytic treatment for linear and nonlinear ordinary differential equations. Appl. Math. Comput. 212, 120–134 (2009)

2. Mohyud-Din, S.T., Yildirim, A., Hossein, M.M.: Variational iteration method for initial and boundary value problems using He’s polynomials. Int. J. Differ. Equ. 2010, Article ID 426213, 28 pp. doi:10.1155/2010/426213 (2010)

3. Turkyilmazoglu, M.: An optimal variational iteration method. Appl. Math. Lett. 24, 762–765 (2011)

4. Porshokouhi, M.G., Ghambari, B.: He’s variational iteration method for solving differential equation of fifth order. Gen. Math. Notes 1(2), 153–158 (2010)

5. Kılıçman, A., Adeboye, K.R., Wadai, M.: A variational-fixed point iterative technique for the solution of second order linear differential equations. Malays. J. Sci. 34(2) (2015) (in press)

6. Bildik, N., Bakir, Y., Mutlu, A.: The new modified Ishikawa iteration method for the approximate solution of different types of differential equations. Fixed Point Theory Appl. 52 (2013)

7. Biaza, J., Ghazuvini, H.: He’s variational iteration method for fourth-order parabolic equations. Comput. Math. Appl. 54, 790–797 (2007)

8. Ghorbani, A., Nadjafi, J.S.: An effective modification of He’s variational iteration method. Nonlinear Anal. Real World Appl. 10, 2828–2833 (2009)

9. Altintan, D., Ugur, O.: Solution of initial and boundary value problems by the variational iteration method. J. Comput. Appl. Math. 259, 790–797 (2014)

10. Khuri, S.A., Sayfy, A.: Variational iteration method: Green’s functions and fixed point iteration perspective. Appl. Math. Lett. 32, 28–34 (2014)

11. Lu, J.: Variational iteration method for two-point boundary value problems. J. Comput. Appl. Math. 207, 92–95 (2007)

12. He, J.H.: Variational iteration method: some recent results and new interpretation. J. Comput. Appl. Math. 207, 3–17 (2007)

13. Noor, M.A., Mohyud-Din, S.T.: Variational iteration method for solving higher order boundary value problems. Appl. Math. Comput. 189, 1929–1942 (2007)

14. He, J.H.: Variational iteration method: a kind of nonlinear analytical technique: some examples. Int. J. Nonlinear Mech. 34, 699–708 (1999)

15. He, J.H.: Variational iteration method for the autonomous ordinary differential system. Appl. Math. Comput. 114(2–3), 115–123 (2009)

16. Inokuti, M., Sekine, H., Mura, T.: General use of the Lagrange multiplier in nonlinear mathematical physics. In: Nemat-Nasser, S. (ed.) Variational Method in the Mechanics of Solids, pp. 156–162. Pergamon Press, Oxford (1978)

17. Wu, G.C.: Challenge in the variational iteration method: a new approach to identification of the Lagrange multipliers. J. King Saud Univ. Sci. 25(2), 175–178 (2013)

18. Burden, R.L., Faires, J.D.: Numerical Analysis. 120–134 (2009)

19. Shang, X., Han, D.: Application of the variational iteration method for solving nth-order integro-differential equations. J. Comput. Appl. Math. 234, 1442–1447 (2010)

20. Herceg, D., Krejic, N.: Convergence results for fixed point iteration in R. Comput. Math. Appl. 31(2), 7–10 (1996)

21. Momani, S., Abuasad, S., Odibat, Z.: Variational iteration method for solving nonlinear boundary value problems. Appl. Math. Comput. 183, 1351–1358 (2006)

Ethics declarations

Conflict of interest

The authors declare that they have no competing interests.

Author’s contributions

Both authors jointly worked on deriving the results and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Kilicman, A., Wadai, M. On the solutions of three-point boundary value problems using variational-fixed point iteration method. Math Sci 10, 33–40 (2016). https://doi.org/10.1007/s40096-016-0175-z

DOI: https://doi.org/10.1007/s40096-016-0175-z