Abstract

Iron deficiency, with or without anemia, is extremely frequent worldwide, representing a major public health problem. Iron replacement therapy dates back to the seventeenth century, but progressed relatively slowly until recently. Both oral and intravenous traditional iron formulations are known to be far from ideal, mainly because of tolerability and safety issues, respectively. At the beginning of this century, the discovery of the hepcidin/ferroportin axis represented a turning point in the understanding of the pathophysiology of iron metabolism disorders, ushering in a new era. In the meantime, advances in pharmaceutical technology are producing newer iron formulations aimed at minimizing the problems inherent in traditional approaches. The pharmacokinetics of oral and parenteral iron are substantially different, and the differences have become even clearer in light of the master role of hepcidin in regulating systemic iron homeostasis. Here we review how iron therapy is changing because of these important advances in both pathophysiology and pharmacology.


Background

Iron deficiency anemia: prevalence and etiology

Iron deficiency (ID), with or without anemia (iron deficiency anemia or IDA), represents a major global health problem affecting more than 2 billion people worldwide [1, 2], mainly because of poverty and malnutrition in developing countries. Individuals with increased requirements for the micronutrient, such as preschool children, adolescents during the growth spurt, and women of childbearing age, are at the highest risk [3]. Nonetheless, IDA is also frequent in western countries, with a prevalence ranging from 4.5 to 18% of the population; there, elderly people with multimorbidity and polypharmacy represent an additional high-risk subgroup [4].

IDA can be due to a wide range of causes (summarized in Table 1), which can be roughly grouped into three major categories: imbalance between iron intake and iron needs, blood loss (either occult or overt), and malabsorption. The coexistence of multiple causes or predisposing factors is not uncommon, particularly in patients with severe and/or recurring IDA [1, 5] and in the elderly [4], and different mechanisms can overlap in complex ways in the individual patient. For example, gastrointestinal angiodysplasia is a relatively frequent cause of occult bleeding in the elderly [6], which can be difficult to diagnose when localized in the small bowel unless wireless capsule endoscopy is performed. Angiodysplasia is often associated (in 20–25% of cases) with calcific aortic stenosis, giving rise to the so-called Heyde’s syndrome [7]. This syndrome includes an acquired coagulopathy that further favors bleeding from angiodysplasia, because high molecular weight von Willebrand factor multimers are consumed during flow through the stenotic valve [7].

Treatment of IDA is based on two cornerstones. First, the underlying cause(s) must be recognized and managed whenever possible. Second, iron must be replenished by selecting the most appropriate compound and route of administration for each individual patient.

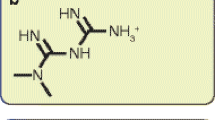

Pathophysiological advances in iron metabolism

Iron, a micronutrient essential for life, is particularly important for adequate production of red blood cells (RBCs), which deliver oxygen to all the body’s tissues. RBCs are by far the most numerous cells of the human body. An adult man is made of approximately 30 trillion (3 × 10^13) cells, excluding the microbiome [8]. RBCs account for approximately 84% (24.9 trillion) of total cells, and are produced at a rate of approximately 200 billion per day, i.e. about 2.4 million per second. Such an impressive activity requires a daily supply of 20–25 mg of iron to erythroid precursors in the bone marrow [9]. The body iron content (~ 4 g in the adult male, ~ 3 g in the female) must be kept constant to avoid either deficiency or overload, the latter being detrimental because it facilitates the production of toxic reactive oxygen species [10]. In recent years, enormous progress has been made in understanding the mechanisms regulating iron homeostasis at both the cellular and systemic levels, so that our era has been called “the golden age of iron” [11]. The turning points were the discoveries of hepcidin [12,13,14], ferroportin [15,16,17], and their interaction [18] at the beginning of this century (history reviewed in detail elsewhere [19]). Hepcidin is a small cysteine-rich cationic peptide of only 25 amino acids [20]. It is synthesized primarily by hepatocytes, which accounts for the first part of its name (“hep-”). The rest (“-cidin”) reflects the fact that it was originally discovered, partly by chance [19], during research focusing on defensins, i.e. naturally occurring peptides with antimicrobial activity [21]. Indeed, hepcidin retains some degree of antimicrobial activity, but this is exerted only indirectly, i.e. by withholding iron from invading pathogens (see below). Hepcidin critically regulates systemic iron homeostasis by binding to its receptor ferroportin, the only known channel for exporting iron out of cells.
Ferroportin, a multidomain transmembrane protein, is highly expressed in cells critical for iron handling, such as: (1) duodenal enterocytes, involved in absorption of dietary iron; (2) splenic red pulp macrophages, involved in iron recycling from senescent erythrocytes; and (3) hepatocytes, involved in iron storage. Hepcidin binding triggers ferroportin internalization and degradation [18], thereby decreasing iron fluxes into the plasma through inhibition of both iron absorption and recycling. Of note, systemic iron homeostasis is highly conservative and “ecologic” (Fig. 1). Under physiological conditions, RBCs contain the largest proportion of body iron (approximately 2 g), and the 20–25 mg of iron needed for the daily production of new RBCs derives almost entirely from continuous recycling of the element through phagocytosis of senescent erythrocytes. Only a minimal amount of iron (1–2 mg, less than 0.05% of total body iron) is lost every day through skin and mucosal exfoliation, plus menses in fertile women. Such losses are obligatory, and there is no physiological way to regulate iron excretion. Hence, the total amount of body iron is kept constant by regulating intestinal absorption to precisely match the losses, i.e. by absorbing just 1–2 mg/day of iron out of the 10–15 mg contained in an average western diet.
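The daily iron economy described above can be checked with simple arithmetic. The Python sketch below only re-derives figures from the approximate numbers quoted in the text; the function names are illustrative. One simplification is assumed: to obtain a lower bound for the recycled share, all absorbed iron is treated as if it went straight to the marrow, although in steady state it actually replaces daily losses.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def rbc_per_second(rbc_per_day: float) -> float:
    """Convert a daily red-cell production rate into cells per second."""
    return rbc_per_day / SECONDS_PER_DAY


def percent(part: float, whole: float) -> float:
    """Express part as a percentage of whole."""
    return 100.0 * part / whole


def recycled_share(daily_need_mg: float, absorbed_mg: float) -> float:
    """Lower bound (%) of the marrow's daily iron need supplied by recycling.

    Assumes, as a worst case, that every absorbed milligram reaches the marrow.
    """
    return percent(daily_need_mg - absorbed_mg, daily_need_mg)


if __name__ == "__main__":
    # ~200 billion new RBCs per day
    print(f"RBCs produced per second: {rbc_per_second(200e9):,.0f}")
    # extremes: 20 mg need with 2 mg absorbed; 25 mg need with 1 mg absorbed
    print(f"recycled share: {recycled_share(20, 2):.0f}%-{recycled_share(25, 1):.0f}%")
    # 1-2 mg absorbed out of 10-15 mg of dietary iron
    print(f"dietary iron absorbed: {percent(1, 15):.0f}%-{percent(2, 10):.0f}%")
```

Dividing 200 billion by the 86,400 seconds in a day gives roughly 2.3 million cells per second, of the same order as the rounded figure above. Even in the worst case, recycling must cover about 90–96% of the marrow’s daily need, and absorbing 1–2 mg out of 10–15 mg of dietary iron corresponds to roughly 7–20%.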

Fig. 1 Essentials of systemic iron metabolism. Systemic iron metabolism is highly conservative of total body iron content (3–4 g), through the continuous recycling of iron from senescent erythrocytes by splenic macrophages, which supplies the 20–25 mg/day of iron needed for bone marrow hematopoiesis (thick red arrows). Both iron deficiency and iron overload are detrimental and have to be avoided. Total body iron homeostasis is maintained by accurately matching unavoidable daily losses with intestinal absorption of dietary iron (1–2 mg/day) (thin blue arrows). The master regulator is hepcidin, which neutralizes ferroportin (black dotted arrows), i.e. the only known cell membrane iron exporter, mainly expressed by macrophages and on the basolateral membrane of absorbing intestinal cells. Hepcidin production is stimulated by high iron concentration in tissues (via BMP6) and in the circulation (via saturated transferrin), as well as by pro-inflammatory cytokines. Conversely, it is suppressed by iron deficiency, hypoxia, and increased erythropoiesis (see also the text).

The hepcidin/ferroportin axis is finely tuned to balance erythropoietic needs and iron absorption. The regulation of hepcidin and ferroportin expression at the molecular level is quite complex, and its description is beyond the scope of this article (for comprehensive reviews see [22,23,24]). From a clinical standpoint, hepcidin production is modulated by a number of physiological and pathological conditions that can exert opposite influences [25]. The three major determinants are body iron stores, erythropoietic activity, and inflammation [24] (Fig. 1). Hepcidin synthesis by hepatocytes is stimulated when body iron stores are replete, mainly through paracrine release of Bone Morphogenetic Protein 6 (BMP6) [26, 27]. Indeed, BMP6 is produced by liver sinusoidal cells [28] in response to increased transferrin saturation [22], and stimulates the BMP/Small Mothers Against Decapentaplegic (SMAD) signaling pathway, which is critically involved in the transcriptional regulation of hepcidin [26]. Conversely, hepcidin is markedly suppressed in iron deficiency [29, 30] to ensure maximal absorption of iron from the gut. Hepcidin is also negatively regulated by erythropoietic activity in the bone marrow. For example, after acute blood loss hepcidin is suppressed to meet the increased iron needs of erythroid precursors for rapid production of new RBCs. In murine models, a hormone named erythroferrone (ERFE) has been identified as the hepcidin-suppressing agent produced by erythroblasts [9, 31]. In humans, the ERFE orthologue encoded by the gene FAM132B also seems to be involved in hepcidin suppression under conditions of increased erythropoiesis [32, 33], although in combination with other, still poorly characterized factors [23]. Finally, inflammation strongly stimulates hepcidin synthesis through several interleukins (IL), mainly IL-6 [34] and IL-1β [35].
In acute inflammatory conditions, hepcidin release from hepatocytes increases rapidly (within a few hours) and dramatically (by 10- to 40-fold or more) [36, 37]. The ensuing rapid hypoferremia is a protective factor in several acute infections, as it withholds iron from invading microorganisms that avidly require the element for their growth [38,39,40]. On the other hand, hepcidin-induced iron sequestration in macrophages leads to iron-restricted erythropoiesis, a major driver of the “anemia of inflammation” and, in the long term, of the “anemia of chronic diseases” [41, 42]. It is now increasingly clear that the hepcidin/ferroportin axis also critically influences the response to iron treatment.
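The opposing influences on hepcidin described above can be summarized as a table of signed effects. The Python sketch below is a deliberately naive, purely qualitative tally, not a physiological model: the determinant names and the equal +1/-1 weights are assumptions made for illustration. It mainly shows why a combination such as iron deficiency plus inflammation cannot be resolved by reasoning about directions alone.

```python
from enum import Enum


class Effect(Enum):
    """Direction of a signal's effect on hepatic hepcidin synthesis."""
    STIMULATES = 1
    SUPPRESSES = -1


# Determinants named in the text and in the Fig. 1 legend. The +1/-1 values
# encode direction only (an illustrative assumption): real signals differ in
# strength and do not simply add up.
DETERMINANTS = {
    "replete_iron_stores": Effect.STIMULATES,          # via BMP6/SMAD signaling
    "high_transferrin_saturation": Effect.STIMULATES,
    "inflammation": Effect.STIMULATES,                 # mainly IL-6 and IL-1beta
    "iron_deficiency": Effect.SUPPRESSES,
    "hypoxia": Effect.SUPPRESSES,
    "increased_erythropoiesis": Effect.SUPPRESSES,     # e.g. via erythroferrone
}


def net_direction(active_signals: set) -> str:
    """Naive signed tally of active signals: 'up', 'down' or 'indeterminate'."""
    unknown = active_signals - set(DETERMINANTS)
    if unknown:
        raise ValueError(f"unknown determinants: {sorted(unknown)}")
    score = sum(DETERMINANTS[name].value for name in active_signals)
    if score > 0:
        return "up"
    if score < 0:
        return "down"
    return "indeterminate"


if __name__ == "__main__":
    print(net_direction({"inflammation"}))                     # acute inflammation
    print(net_direction({"iron_deficiency", "hypoxia"}))       # uncomplicated IDA
    print(net_direction({"iron_deficiency", "inflammation"}))  # ID plus inflammation
```

A tie is reported as "indeterminate" rather than guessed, because the relative strength of competing signals in a given patient can only be established by measuring hepcidin.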

Historical considerations

Iron therapy dates back to the seventeenth century, when Thomas Sydenham (1624–1689) first proposed the use of oral iron salts for the treatment of “chlorosis”, although the disorder was then believed to be a hysterical condition rather than a manifestation of IDA [43]. The first iron compound to be given by the intravenous (IV) route (iron saccharide) entered clinical practice around the middle of the past century [44]. Unfortunately, both oral and IV traditional iron formulations are known to be far from ideal, mainly because of tolerability and safety issues, respectively. From a pharmacological point of view, iron replacement therapy progressed relatively slowly until recently. At the beginning of this century, concomitantly with the pathophysiological advances mentioned above, improvements in pharmaceutical technology allowed the production of newer iron formulations, particularly for IV administration, aimed at minimizing the problems inherent in traditional compounds. Notably, the pharmacokinetics of oral iron are completely different from those of IV iron (Fig. 2). Oral iron is incorporated into plasma transferrin after release from the basolateral membrane of intestinal cells, provided that no condition leading to malabsorption (e.g. celiac disease, autoimmune or Helicobacter pylori (HP)-related chronic gastritis) is present [5]. By contrast, IV iron compounds are first taken up by macrophages, which then release the iron into the bloodstream. As the two treatments are not interchangeable and have different indications, we will examine them separately.

Fig. 2 Different pharmacokinetics of oral and IV iron, revisited in the hepcidin era. The pharmacokinetics of oral iron require the integrity of the mucosa of the stomach (acidity is needed to solubilize iron) and of the duodenum/proximal jejunum (where most iron is absorbed). This integrity can be compromised by several conditions leading to malabsorption (see Table 1). The maximum absorption capacity during oral iron treatment is estimated at approximately 25–30 mg/day, i.e. more than tenfold the typical daily absorption of dietary iron in steady-state conditions (1–2 mg). Unabsorbed iron is mainly responsible for gastrointestinal adverse effects (AEs). IV iron has completely different pharmacokinetics that circumvent these problems. The iron-carbohydrate complexes (see Fig. 3 for details) are rapidly taken up by macrophages, and the iron atoms of the core are then slowly released into the circulation through ferroportin. Both oral and IV iron require ferroportin to be released into the plasma. Hepcidin production is typically suppressed in uncomplicated IDA, allowing maximal iron absorption. However, slightly elevated (or even inappropriately normal) hepcidin levels appear sufficient to inhibit intestinal ferroportin. This can be due to a genetic disorder (IRIDA), to concomitant low-grade inflammation (e.g. in chronic heart failure), or even to transient stimulation after a first dose of oral iron. This constitutes the basis for the current recommendation of giving oral iron on an alternate-day schedule instead of the classical daily schedule (see the text for details). By contrast, macrophage ferroportin, whose expression is much higher than at the intestinal level, requires much higher hepcidin levels (e.g. as during acute inflammation) to be substantially suppressed.

Oral iron therapy

Easy, cheap and often effective (not always)

Oral iron is the mainstay of IDA treatment, being easy, cheap, and effective in the majority of mild to moderate cases, i.e. when hemoglobin is ≥ 11.0 g/dl (mild) or 8.0–10.9 g/dl (moderate), according to the WHO (http://www.who.int/vmnis/indicators/haemoglobin/en). By contrast, in severe IDA (hemoglobin < 8.0 g/dl), whatever the cause, there is increasing agreement on the use of the new IV iron compounds (see below) as first-line therapy, because of their superior efficacy and rapidity [45]. Severely symptomatic patients, e.g. those with hemodynamic instability or signs of myocardial ischemia, need RBC transfusion, although such a clinical presentation is uncommon in IDA.
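The severity bands above translate directly into a lookup. The Python sketch below is a minimal illustration assuming non-pregnant adults: the lower limits of normal it uses (12.0 g/dl in women, 13.0 g/dl in men) are the standard WHO values for this group but are not stated in the text, and the function names are hypothetical. Using ≥ comparisons also classifies values such as 10.95 g/dl, which fall between the “≤ 10.9” and “≥ 11” bands, as moderate.

```python
# WHO hemoglobin cut-offs (g/dl) defining anemia in non-pregnant adults
# (15 years and older). Children and pregnant women use other thresholds
# and are deliberately not handled here.
ANEMIA_CUTOFF_G_DL = {"female": 12.0, "male": 13.0}


def anemia_severity(hb_g_dl: float, sex: str) -> str:
    """Classify anemia severity with the WHO bands quoted in the text."""
    if sex not in ANEMIA_CUTOFF_G_DL:
        raise ValueError("sex must be 'female' or 'male'")
    if hb_g_dl >= ANEMIA_CUTOFF_G_DL[sex]:
        return "none"
    if hb_g_dl >= 11.0:
        return "mild"
    if hb_g_dl >= 8.0:
        return "moderate"
    return "severe"


def first_line_approach(severity: str, severely_symptomatic: bool = False) -> str:
    """Illustrative mapping of severity to first-line treatment, following the text."""
    if severely_symptomatic:
        # hemodynamic instability or signs of myocardial ischemia
        return "RBC transfusion"
    if severity == "severe":
        return "IV iron"
    if severity in ("mild", "moderate"):
        return "oral iron"
    return "not anemic"


if __name__ == "__main__":
    for hb, sex in [(11.5, "female"), (9.4, "male"), (7.2, "female")]:
        severity = anemia_severity(hb, sex)
        print(hb, sex, severity, first_line_approach(severity))
```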

A complex market scenario

Countless iron-containing oral preparations are currently available on the market, in a variety of pharmaceutical forms including pills, effervescent tablets, elixirs, and so on. Their chemistry is also heterogeneous, including either trivalent (Fe3+, or ferric) or divalent (Fe2+, or ferrous) iron, in the form of iron salts or iron polysaccharide complexes [46]. Many preparations are sold over the counter and are often aggressively advertised as the “ideal” or “most natural” way to correct ID. Pharmacological iron is absorbed through the same pathway as the non-heme dietary iron found in plant foods, which is far less efficient than the absorption of the heme iron found in meat [47, 48]. Non-heme dietary iron is largely ferric and, as such, highly insoluble. To be absorbed, it needs to be reduced by a brush-border ferrireductase (duodenal cytochrome b, or DCYTB); the resulting divalent iron then enters the luminal surface of enterocytes through a specialized transporter (Divalent Metal Transporter 1, or DMT1). By contrast, the absorption of heme iron is less well understood [48], and attempts to produce heme iron polypeptides have resulted in greater costs and insufficient clinical evaluation [49]. Thus, for the moment divalent iron salts appear to be the most appropriate form of oral iron replacement therapy, the most used being ferrous gluconate, ferrous fumarate, and ferrous sulfate (FS) (Table 2). In particular, FS is the universally available compound considered by all guidelines [50, 51].

The traditional prescription. Be aware of elemental iron. Replace stores, not only hemoglobin

What really matters in the different divalent oral iron preparations is their content of elemental iron. As a general rule, oral iron preparations do not contain more than 30% elemental iron, but a source of confusion is that this proportion can vary by manufacturer, as well as between countries. For example, typical FS tablets of nominal 325 mg salt contain 65 mg of elemental iron in the US, and 105 mg in Europe. Other iron salt tablets, e.g. ferrous gluconate, usually contain less elemental iron by weight (for example 28 mg/256 mg, 38–48 mg/325 mg) (Table 2). As the classically recommended daily dose for IDA treatment is 100–200 mg of elemental iron, physicians should always check this content before prescribing any preparation, including liquid ones such as syrups, elixirs, and drops. The most popular prescription for IDA is 2–3 tablets per day of FS, preferably taken on an empty stomach to maximize absorption [52]. Such relatively high doses are mainly based on traditional practice, and recent recalculations suggest that they are likely excessive (see below). Notably, only a minor fraction (no more than 10–20%) of a high dose of oral iron is actually absorbed [52,53,54]. As absorption of ferrous salts is favored by a mildly acidic medium, ascorbic acid 250–500 mg/day is often prescribed concomitantly, although formal demonstration of a measurable advantage is lacking [55]. Conversely, antacids, including proton pump inhibitors (PPI), are a likely cofactor of insufficient response to oral iron, particularly in certain populations such as elderly patients on polypharmacy [4]. An optimal response to oral iron is generally defined as a hemoglobin increase of 2 g/dl after 3 weeks. However, the final goal of treatment must be not only the normalization of hemoglobin, but also the repletion of iron stores, with an ideal target of ferritin > 100 μg/l [49, 51].
This often requires treatment for at least 3 months [50], if not longer. Stopping treatment too early is a common error in clinical practice. In our experience at a referral center for iron disorders, this is particularly frequent in young premenopausal women, resulting in significant morbidity and risk of recurrence. By contrast, a hemoglobin increase of less than 1 g/dl after 3 weeks despite adequate compliance defines “refractoriness” to oral iron. This should prompt appropriate investigations to exclude malabsorption, e.g. due to celiac disease, Helicobacter pylori infection, or autoimmune gastritis [5].
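The prescription checks described in this subsection (elemental iron per tablet, the classic 100–200 mg/day range, the 3-week hemoglobin response, and the ferritin target) can be written down as a few small functions. This Python sketch uses only figures quoted above; the function names are hypothetical, and the “intermediate” label for a rise between 1 and 2 g/dl is an assumption, since the text defines only the optimal and refractory thresholds.

```python
def elemental_fraction(elemental_mg: float, salt_mg: float) -> float:
    """Fraction of a tablet's salt weight that is elemental iron."""
    return elemental_mg / salt_mg


def daily_elemental_mg(tablets_per_day: int, elemental_mg_per_tablet: float) -> float:
    """Total elemental iron prescribed per day."""
    return tablets_per_day * elemental_mg_per_tablet


def within_classic_range(daily_mg: float, low: float = 100.0, high: float = 200.0) -> bool:
    """True if the daily dose lies within the classic 100-200 mg elemental iron range."""
    return low <= daily_mg <= high


def response_at_3_weeks(hb_rise_g_dl: float) -> str:
    """Classify the hemoglobin rise after 3 weeks of adherent oral iron therapy."""
    if hb_rise_g_dl >= 2.0:
        return "optimal"
    if hb_rise_g_dl < 1.0:
        return "refractory"    # should prompt a work-up for malabsorption
    return "intermediate"      # band not named in the text (assumption)


def stores_replete(ferritin_ug_l: float, target_ug_l: float = 100.0) -> bool:
    """Treatment goal: ferritin above the ideal target, not only normal hemoglobin."""
    return ferritin_ug_l > target_ug_l


if __name__ == "__main__":
    # Nominal 325 mg FS tablet: 65 mg elemental iron (US) vs 105 mg (Europe)
    for label, per_tablet in [("US", 65.0), ("Europe", 105.0)]:
        for n in (2, 3):
            dose = daily_elemental_mg(n, per_tablet)
            print(label, n, "tablets:", dose, "mg/day; in range:", within_classic_range(dose))
    print(f"US elemental fraction: {elemental_fraction(65, 325):.0%}")
```

With the US tablet, 2–3 tablets give 130–195 mg/day, within the classic range; the same number of European tablets (105 mg each) gives 210–315 mg/day, which is why the elemental content should be checked before prescribing.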

A far from ideal treatment

Unfortunately, oral iron is frequently associated with adverse effects (AEs), mainly gastrointestinal disturbances including metallic taste, nausea, vomiting, heartburn, epigastric pain, constipation, and diarrhea. They are likely due to direct toxicity of unabsorbed iron on the intestinal mucosa. Two recent meta-analyses including several thousand patients receiving ferrous iron salts reported gastrointestinal AEs in 30 to 70% of cases [56, 57]. The ensuing reduction in adherence, combined with the need for prolonged treatment (see above), results in undertreatment of a significant proportion of IDA patients in daily clinical practice. In recent years, there has been increasing awareness of a previously overlooked, potentially negative effect of oral iron, i.e. changes in the gut microbiome [58,59,60]. This is due to unabsorbed iron reaching the colon, and appears particularly detrimental in low-income populations. Studies in Kenyan infants consuming iron-fortified meals have documented a decrease in beneficial commensal bacteria (i.e. bifidobacteria and lactobacilli, which require little or no iron), and an increase in enterobacteria, including iron-requiring enteropathogenic Escherichia coli strains [61]. Notably, previous studies with iron fortification formulas in African children have raised serious concerns about the safety of indiscriminate iron supplementation in areas where micronutrient deficiencies or infections are highly prevalent [62, 63].

Oral iron therapy in the hepcidin era

Optimal efficacy of oral iron requires not only the integrity of the gastrointestinal mucosa, but also appropriate suppression of hepcidin to allow full activity of the ferroportin expressed on the basolateral surface of enterocytes (Fig. 2). As mentioned before, in typical IDA serum hepcidin levels are extremely low, or even undetectable [29, 30]. Nevertheless, serum hepcidin levels reflect the balance of multiple opposing influences [25], and exceptions to this rule can be due to genetic or acquired conditions. Subjects homozygous for mutations in TMPRSS6, encoding the hepcidin inhibitor matriptase-2, are affected by a rare genetic form of anemia named Iron Refractory Iron Deficiency Anemia (IRIDA, OMIM #206200) [64]. This condition should be suspected in patients presenting with IDA early in life and poor or no response to oral iron without an apparent cause [65, 66]. Indeed, the biochemical hallmark of IRIDA is the presence of high (or inappropriately normal) serum hepcidin levels [25], which are pathogenetically relevant. Relatively high or inappropriately normal hepcidin levels can also be found in ID patients with a concomitant inflammatory disorder [67, 68]. The best studied condition in this sense is chronic heart failure (CHF), where low-grade chronic inflammation is known to play an important role [69]. ID is quite common in CHF, involving at least 30% of patients [70, 71]. Multiple factors contribute to ID in CHF, including decreased iron intake because of anorexia, malabsorption due to edema of the intestinal mucosa, and, possibly, occult bleeding favored by concomitant use of antithrombotic drugs. ID in turn results in decreased utilization of O2 by iron-dependent mitochondrial enzymes in cardiomyocytes [72], and an increased risk of hospitalization or even death [70, 73].
Of note, IV iron therapy has been shown to be beneficial in CHF patients with ID (see also below) [71, 74, 75], while a recent trial with oral iron yielded negative results [76]. This refractoriness to oral iron was linked to relatively high (instead of suppressed) hepcidin levels in CHF patients with ID [76]. Thus, CHF is a paradigmatic condition in which recent pathophysiological insights on hepcidin appear to influence the choice of the most appropriate route of iron administration. The same concept was previously suggested by two retrospective studies, in cancer patients [77] and in unselected IDA patients [78], where baseline hepcidin levels predicted subsequent responsiveness to oral iron. After initial technical difficulties, hepcidin assays are continuously improving, and several of them, showing good accuracy and reproducibility, have been internationally validated [25]. However, lack of harmonization (i.e. comparability between absolute values obtained in different laboratories) has so far prevented the definition of universal ranges for widespread clinical use [25, 79]. This problem is now nearly solved through the use of a commutable reference material, which will soon allow worldwide standardization and results traceable to SI units (Swinkels D. W., personal communication). Thus, in the near future, baseline hepcidin measurement in IDA could actually help in tailoring iron therapy, by selecting the optimal route of administration in a given individual [25]. This would be particularly useful in certain “difficult” populations, such as the elderly [4] and children in developing countries [80].
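The prospect of hepcidin-guided therapy can be made concrete as a small decision function. The Python sketch below is hypothetical and is not a validated algorithm: it merely combines the factors discussed so far (severity, malabsorption, hepcidin status), and it takes hepcidin as a yes/no flag precisely because universal reference ranges are not yet available.

```python
from typing import Optional, Tuple


def choose_route(severity: str,
                 malabsorption: bool,
                 hepcidin_suppressed: Optional[bool]) -> Tuple[str, str]:
    """Hypothetical route-selection sketch; returns (route, reason).

    hepcidin_suppressed is None when hepcidin was not measured. It is a
    categorical flag because no universal hepcidin cut-off exists yet.
    """
    if severity == "severe":
        return "IV", "severe IDA: IV iron as first-line therapy"
    if malabsorption:
        return "IV", "malabsorption prevents effective oral absorption"
    if hepcidin_suppressed is False:
        # e.g. CHF or IRIDA: intestinal ferroportin is likely inhibited
        return "IV", "non-suppressed hepcidin predicts poor response to oral iron"
    if hepcidin_suppressed is None:
        return "oral", "hepcidin not measured: oral trial, reassess at 3 weeks"
    return "oral", "suppressed hepcidin: intestinal ferroportin fully active"


if __name__ == "__main__":
    # A CHF patient with ID, moderate anemia and non-suppressed hepcidin
    print(choose_route("moderate", malabsorption=False, hepcidin_suppressed=False))
```

Returning a reason alongside the route keeps the logic auditable, which matters for a sketch whose rules are drawn from narrative text rather than from a guideline.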

An already established major advance in oral iron therapy deriving from the discovery of hepcidin concerns the administration schedule. An elegant pilot study by Moretti and colleagues in non-anemic premenopausal women with ID suggested that giving oral iron on an alternate-day schedule might be as effective as the classical daily schedule based on divided doses [81]. The classical schedule was associated with a rapid increase in hepcidin production that limited the absorption of a second dose given too early. By contrast, the alternate-day regimen allowed sufficient time for hepcidin to return to baseline, hence maximizing fractional iron absorption. Moreover, it minimized gastrointestinal AEs. These results have recently been confirmed by two prospective randomized controlled trials with similar design, again showing better absorption in non-anemic young women taking iron on alternate days [82]. Whether or not these results also apply to anemic patients with ID remains formally unproven, but it appears reasonable to assume that this will be the case. Indeed, some authorities now consider such results sufficient to recommend, although with prudence (grade 2C), the alternate-day regimen as the preferable way of giving oral iron replacement therapy in IDA [83].
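The rationale for the alternate-day schedule is a matter of timing: a dose given while hepcidin is still raised by the previous one is poorly absorbed. The Python sketch below only counts, for a few schedules, how many doses would fall inside such a window. The 24-hour window is a hypothetical parameter chosen for illustration, not a value from the cited studies.

```python
def dose_times_h(period_days: int, interval_h: int) -> list:
    """Dose times (hours from the first dose) over a treatment period."""
    return list(range(0, period_days * 24, interval_h))


def doses_within_window(times_h: list, window_h: int = 24) -> int:
    """Count doses taken within window_h hours after the previous dose.

    window_h is a hypothetical stand-in for the period during which
    hepcidin is assumed to remain raised after a dose.
    """
    return sum(1 for prev, cur in zip(times_h, times_h[1:]) if cur - prev <= window_h)


if __name__ == "__main__":
    schedules = {"twice daily": 12, "daily": 24, "alternate day": 48}
    for name, interval in schedules.items():
        times = dose_times_h(14, interval)
        print(f"{name:>13}: {len(times)} doses, "
              f"{doses_within_window(times)} within the assumed window")
```

The sketch counts doses rather than estimating milligrams absorbed on purpose: the text gives no quantitative relation between hepcidin level and fractional absorption, so any absorbed amount would be invented.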

New preparations

Another area of active work is the search for new oral preparations as effective as standard FS but better tolerated. One of the most innovative preparations is “sucrosomial” iron (SI), consisting of ferric pyrophosphate covered by a matrix of phospholipids plus sucrose esters of fatty acids [84]. In vitro experiments on human intestinal Caco-2 cells suggest that SI could be taken up through a DMT1-independent mechanism [84], possibly through endocytosis, similarly to what happens with nanoparticles [85, 86] (see below). Whether this also occurs in vivo, and whether SI also uses unique mechanisms to enter the bloodstream, remain to be proven. Nevertheless, some preliminary clinical studies with SI look promising. For example, a small randomized, open-label trial in non-dialysis chronic kidney disease (CKD) patients with IDA showed that low-dose (30 mg/day) oral SI for 3 months was non-inferior to IV iron gluconate with regard to hemoglobin recovery [87]. On the other hand, IV iron remained superior with respect to replenishment of iron stores, while IDA recurred rapidly (1 month after discontinuation) in patients treated with oral SI. This study had several limitations, including the small sample size and the IV compound used as comparator (iron gluconate), which has now clearly been surpassed by the newer IV iron formulations (see below). Nevertheless, the relatively good response obtained after 3 weeks with oral SI is intriguing, particularly considering the low dose (30 mg/day), as well as the peculiar IDA population studied (stage 3–5 CKD). Indeed, patients with late-stage CKD often have elevated hepcidin levels [88], so that some authors have recently described hepcidin as a sort of new “uremic toxin” [89].
To explain this result, one would have to assume that absorption of oral SI is: (1) poorly affected by hepcidin inhibition; and (2) nearly maximal, by contrast with traditional iron compounds, since it is well known, for example, that no more than 30 mg of iron is absorbed when 100 mg of elemental iron as FS is administered (see also above). Of note, the tolerability of oral SI was excellent. These findings deserve further mechanistic studies on this novel compound, as well as confirmation by larger clinical trials in other IDA populations. Nevertheless, they raise a key general question regarding the optimal dose of oral iron, whatever the compound. When hepcidin is suppressed, as in common uncomplicated IDA, iron absorption is supposed to be maximal, but with a limit of 25–30 mg/day. Fractional absorption can vary substantially between compounds, but what is clear is that most AEs are related to the unabsorbed fraction. A small study in elderly patients (> 80 years) with IDA, conducted more than 10 years ago, showed that two low-dose schedules (15 or 50 mg/day of elemental iron in the form of liquid ferrous gluconate) were as effective as the high “traditional” dose (150 mg/day of elemental iron in the form of ferrous calcium citrate tablets) [90]. Low doses were also associated with significantly fewer AEs [90]. Overall, the new studies cited above, including those on the alternate-day regimen, suggest that it is time to reconsider our traditional way of giving oral iron. Large-scale studies in patients with mild to moderate uncomplicated IDA should definitively assess the efficacy and tolerability of alternate-day low-dose oral iron.
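The dose argument above can be illustrated with a deliberately crude model in which a constant fraction of each dose is absorbed, up to a daily ceiling. This Python sketch is an illustration under stated assumptions, not a pharmacokinetic model: in reality fractional absorption is not constant but tends to fall as the dose rises, and the 20% fraction and 25 mg/day ceiling are simply values picked from the ranges quoted above.

```python
def absorbed_mg(dose_mg: float, fractional_absorption: float, cap_mg: float) -> float:
    """Iron absorbed from one daily dose: a constant fraction, capped at cap_mg."""
    if not 0.0 <= fractional_absorption <= 1.0:
        raise ValueError("fractional_absorption must be between 0 and 1")
    return min(dose_mg * fractional_absorption, cap_mg)


def unabsorbed_mg(dose_mg: float, fractional_absorption: float, cap_mg: float) -> float:
    """Iron left in the gut lumen, the fraction linked to most AEs."""
    return dose_mg - absorbed_mg(dose_mg, fractional_absorption, cap_mg)


if __name__ == "__main__":
    # Daily elemental iron doses from the study in elderly patients cited above.
    # FRACTION and CAP are illustrative assumptions: the upper end of the 10-20%
    # range and the lower end of the 25-30 mg/day ceiling quoted in the text.
    FRACTION, CAP = 0.20, 25.0
    for dose in (15, 50, 150):
        print(f"{dose:>4} mg: absorbed ~{absorbed_mg(dose, FRACTION, CAP):.0f} mg, "
              f"unabsorbed ~{unabsorbed_mg(dose, FRACTION, CAP):.0f} mg")
```

Under these assumptions, going from 50 to 150 mg/day more than triples the unabsorbed iron (from about 40 to about 125 mg) while adding at most 15 mg of absorbed iron, which is the intuition behind low-dose regimens.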

A further active field of research, particularly to address IDA in developing countries, is the enrichment of food with iron nanoformulations [91, 92]. Early attempts using classical iron salts were disappointing, partly because of changes in the color and taste of food. Nanoparticles (NPs), including ferritin or ferritin-mimicking molecules [93], appear promising and devoid of unwanted effects. Notably, a very recent and elegant study has demonstrated that iron-containing NPs cross the cell membrane by DMT1-independent mechanisms, such as endocytosis, or even by a non-endocytotic pathway allowing direct access to the cytoplasm [94].

IV iron

Historical considerations

The first attempts to administer iron parenterally date back to the first half of the past century [95], but injections were unacceptably painful when given intramuscularly, and caused serious hemodynamic toxicity attributed to rapid release of labile free iron. This led to the development of carbohydrate shells surrounding an iron core, in order to limit the unwanted rapid release of the element [96]. The first preparation to be used was iron saccharide in 1947, followed by high molecular weight dextran (HMWD) iron (Fig. 3a). Despite documented success in correcting IDA [97, 98], rare cases of severe hypersensitivity reactions were reported, some of them fatal. This led to extreme caution in prescribing IV iron, which was deemed appropriate only for conditions where oral iron could not be used [98]. In fact, the medical community long harbored a generalized prejudice against IV iron, whatever the preparation used. Only relatively recently was it realized that severe and potentially lethal reactions were almost exclusively due to HMWD-iron [99], which, in the meantime, had no longer been produced since 1992 [100] and had been replaced by other preparations (Fig. 3a). Indeed, a retrospective analysis of > 30 million doses of IV iron reported absolute rates of life-threatening AEs of 0.6, 0.9, and 11.8 per million with iron sucrose, ferric gluconate, and HMWD-iron, respectively [99].

Fig. 3 IV iron preparations: historical and chemical perspective. (a) The widespread use of IV iron has historically been hampered by unacceptable risks with early preparations, particularly anaphylactic reactions with high molecular weight dextran (HMWD) iron. (b) All IV iron preparations consist of a polynuclear iron core surrounded by a carbohydrate shell that acts as a stabilizer, preventing uncontrolled release of toxic free iron. What differs among compounds is the carbohydrate moiety, which is unique for each compound and influences both immunogenicity and strength of stabilization (see also the text).

The chemistry of different IV iron preparations and its relation with adverse effects

All IV iron preparations share a common structure, being colloidal solutions of compounds made of a polynuclear core containing Fe3+ hydroxide particles surrounded by a carbohydrate shell (Fig. 3b). The most important differences between preparations lie in the chemistry of the carbohydrate moiety forming the shell, as well as in the type and strength of its bonds with the iron core (Fig. 3b). These features are major determinants of the stability of the iron/carbohydrate complex, which in turn is the factor limiting the maximum dose of iron that can be given in a single infusion. As depicted in Fig. 2, the pharmacokinetics of IV iron are substantially different from those of oral iron. Once injected into the bloodstream (hence circumventing problems of intestinal absorption), IV iron is mainly taken up by macrophages, which subsequently release the element through ferroportin [101]. However, less stable complexes can release variable amounts of ferric iron directly into the circulation before macrophage uptake. This leads to the presence of toxic labile free iron once the binding capacity of transferrin is saturated. As a general rule, the more stable the complex, the lower the frequency of infusion reactions, which are usually mild, consisting of rash, palpitations, dizziness, myalgias, and chest discomfort in variable combinations, but without hypotension or respiratory symptoms [49]. Such minor infusion reactions occur in roughly 1 in 200 administrations [102], and resolve quickly by simply stopping the infusion, without the need for any other treatment. Of note, there is increasing consensus on avoiding antihistamines in such situations, particularly diphenhydramine, as it can cause hypotension and other unwanted side effects, leading to paradoxical aggravation rather than amelioration of the clinical picture [49, 103].
More serious “anaphylactoid” reactions, including hemodynamic and respiratory changes, can occur with virtually all IV iron preparations, including the newer ones (see below), but appear to be exceedingly rare. Unlike serious AEs with older compounds such as iron-HMWD, for which an IgE-mediated mechanism could be demonstrated in some cases [104, 105], such reactions are now increasingly attributed to complement activation-related pseudo-allergy (CARPA) [106]. This mechanism is thought to be triggered by iron nanoparticles [106]; thus, once again, the stability of the carbohydrate shell and the physico-chemical properties of the various compounds are likely critical. Reassuringly, a recent systematic review and meta-analysis of 103 trials, including more than 10,000 patients treated with various IV iron preparations and more than 7000 patients treated with different comparators (oral iron, placebo), found serious AEs with IV iron to be extremely rare (< 1:200,000 doses) and no more frequent than with comparators [107]. No fatal reactions or true anaphylaxis were reported. Only minor infusion reactions were consistently reported, with a relative risk (RR) of 2.47 for any IV iron preparation [107]. These results are particularly relevant when put in perspective with the frequency of AEs leading to major morbidity with RBC transfusions, the only alternative to IV iron in certain circumstances. That frequency, estimated at roughly 1:21,000 [108], appears higher than the rate of serious AEs with IV iron.
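The comparisons above mix rates expressed as "one event per N administrations". As a minimal sketch, the snippet below puts them on a common per-million scale, using the ~1:200 minor infusion-reaction rate [102] and the ~1:21,000 transfusion rate [108] quoted in the text, plus an assumed upper bound of 1 serious AE per 200,000 IV iron doses as our reading of the meta-analysis figure [107]. The names `per_million` and `RATES` are hypothetical, and the comparison is crude because the denominators (drug doses vs. transfusion events) may not be strictly comparable.

```python
"""Put 'one event per N administrations' rates on a common per-million scale.

Figures are the approximate rates quoted in the text; the serious IV iron AE
value is an assumed upper bound (< 1:200,000 doses), not a point estimate.
"""


def per_million(one_in_n: float) -> float:
    """Convert a '1 in N' rate into expected events per 1,000,000 administrations."""
    if one_in_n <= 0:
        raise ValueError("N must be positive")
    return 1_000_000 / one_in_n


# Approximate 'one in N' rates (denominators may differ between sources).
RATES = {
    "minor IV iron infusion reaction [102]": 200,
    "serious IV iron AE, assumed upper bound [107]": 200_000,
    "major morbidity with RBC transfusion [108]": 21_000,
}

if __name__ == "__main__":
    for label, n in RATES.items():
        print(f"{label}: {per_million(n):.1f} per million")
    transfusion = per_million(RATES["major morbidity with RBC transfusion [108]"])
    serious_iv = per_million(RATES["serious IV iron AE, assumed upper bound [107]"])
    # Because the IV iron figure is an upper bound, the true ratio is at least this large.
    print(f"transfusion vs serious IV iron AE: at least ~{transfusion / serious_iv:.1f}x")
```

Under these assumptions, minor infusion reactions correspond to about 5000 events per million, serious IV iron AEs to fewer than 5 per million, and transfusion-related major morbidity to about 47.6 per million, so the transfusion rate is at least roughly 9.5 times the upper bound for serious IV iron AEs.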

The newer IV iron preparations

In the last decade, three new preparations have entered the clinical arena; they can be collectively grouped as “third-generation” IV iron compounds (Fig. 3). These preparations share features that make them superior to older products, and they are substantially changing the way IDA is managed in clinical practice. Their favorable features, compared with traditional preparations, are illustrated in Table 3. The most important is the higher stability of the carbohydrate shell, which allows the total replacement dose (usually 1–1.5 g, depending on the degree of anemia and body weight, according to the classical Ganzoni formula [109]) to be given in just one or two infusions. Such a simple schedule is clearly more convenient for patients than classical multiple-infusion schemes, i.e., up to 7–10 infusions on consecutive days with ferric gluconate. The higher cost per vial appears to be offset by savings in personnel and in-hospital organization [110]. Moreover, the higher stability also results in a very good safety profile, owing to a lower probability of free iron release. An extensive review of single-dose IV iron can be found elsewhere [111].
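Ganzoni's formula [109] is only named in the text, so the sketch below assumes its commonly cited adult form: total iron deficit (mg) = body weight (kg) × (target Hb − actual Hb) (g/dL) × 2.4 + iron stores (mg), with a target Hb of 15 g/dL and 500 mg of stores for body weight ≥ 35 kg. The factor 2.4 reflects roughly 3.4 mg of iron per gram of hemoglobin and a blood volume of about 7% of body weight (0.07 × 10 × 3.4 ≈ 2.4). The per-infusion caps are assumptions for illustration: 1000 mg matches the FCM dose mentioned in this section, while 125 mg per session for ferric gluconate is an assumed typical dose. Function names are hypothetical, and this is not a dosing tool.

```python
import math

# Assumed adult defaults for Ganzoni's formula (body weight >= 35 kg).
TARGET_HB_G_DL = 15.0
IRON_STORES_MG = 500.0
HB_TO_IRON_FACTOR = 2.4  # ~0.07 L blood/kg x 10 dL/L x 3.4 mg Fe per g Hb


def ganzoni_deficit_mg(weight_kg: float, actual_hb_g_dl: float,
                       target_hb_g_dl: float = TARGET_HB_G_DL,
                       stores_mg: float = IRON_STORES_MG) -> float:
    """Estimate the total iron deficit in mg (illustrative only)."""
    if weight_kg <= 0:
        raise ValueError("weight_kg must be positive")
    hb_gap = max(target_hb_g_dl - actual_hb_g_dl, 0.0)  # no negative Hb deficit
    return weight_kg * hb_gap * HB_TO_IRON_FACTOR + stores_mg


def infusions_needed(total_mg: float, max_mg_per_infusion: float) -> int:
    """Number of infusions when each one is capped at max_mg_per_infusion."""
    if max_mg_per_infusion <= 0:
        raise ValueError("max_mg_per_infusion must be positive")
    return math.ceil(total_mg / max_mg_per_infusion)


if __name__ == "__main__":
    dose = ganzoni_deficit_mg(weight_kg=60, actual_hb_g_dl=11)
    print(f"estimated deficit: {dose:.0f} mg")  # 60 x 4 x 2.4 + 500 = 1076 mg
    # Hypothetical caps: 1000 mg (FCM-like) vs 125 mg (assumed ferric gluconate dose).
    print("FCM-like schedule:", infusions_needed(dose, 1000), "infusions")
    print("ferric-gluconate-like schedule:", infusions_needed(dose, 125), "infusions")
```

For a hypothetical 60 kg patient with Hb 11 g/dL, the sketch gives 1076 mg, i.e., two infusions with a 1000 mg cap versus nine with a 125 mg cap, consistent with the contrast between one or two infusions of a third-generation compound and the 7–10 ferric gluconate infusions mentioned above.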

Iron isomaltoside is, for now, available only in a few European countries, whereas ferumoxytol and ferric carboxymaltose (FCM) are becoming increasingly popular worldwide.

A peculiarity of ferumoxytol is its core of superparamagnetic iron oxide nanoparticles (NPs), surrounded by low molecular weight semisynthetic carbohydrates. This compound was originally developed as a contrast agent for magnetic resonance imaging (MRI), and it is still sometimes used for this purpose [112]. Thus, although its administration for IDA does not permanently compromise MRI interpretation, the radiologist should be notified if a patient undergoes MRI within 3 months of administration [83].

FCM is characterized by tight binding of elemental iron to the carbohydrate polymer shell. This is consistent with studies of labile free iron release from different preparations, which showed the lowest levels with FCM [113]. Because of its high stability, FCM can be administered quickly at high doses, up to 1000 mg of elemental iron in 15 min [114]. Hypophosphatemia is not infrequent in the days following FCM infusion [115], through a mechanism that appears specific to its unique carbohydrate moiety [116]. Indeed, FCM is able to induce the synthesis of fibroblast growth factor 23 (FGF-23), an osteocyte-derived hormone that regulates phosphate and vitamin D homeostasis [117], ultimately leading to increased renal phosphate excretion [115]. However, FCM-induced hypophosphatemia does not appear to be clinically meaningful, as it is mild, transient, and without symptoms or sequelae. Serum phosphate therefore does not need to be checked or monitored, with the possible exception of patients with IDA in the context of severe malnutrition, whose baseline phosphate levels may already be low. Overall, FCM has been used successfully and safely in a number of clinical settings, including CHF [74, 75], CKD [118], inflammatory bowel disease [119, 120], heavy uterine bleeding [121], and pregnancy in the second and third trimesters [122, 123].

Expanding spectrum of clinical use of IV iron

The availability of novel IV iron preparations that are safe and easy to use is gradually dispelling prejudices and misconceptions about this therapeutic approach [45]. Whereas IV iron was historically regarded as “an extreme solution for severe IDA when other options were impracticable” [98], there are now a number of conditions in which its use is well established or increasingly considered a first-line approach [111] (Table 4). Unlike with oral iron, compliance is assured, and IDA is corrected more rapidly without the need for prolonged administration. From a pathophysiological point of view, the use of IV iron in CHF is of particular interest. As noted above, CHF patients are predisposed to ID or even IDA, but a precise laboratory definition of ID in this setting is difficult because of interference from subclinical inflammation, which tends to raise serum ferritin above the threshold levels (< 15–30 μg/L) typical of uncomplicated IDA. Anker and colleagues first proposed broad, pragmatic criteria for defining ID in CHF, i.e., serum ferritin < 100 μg/L, or < 300 μg/L if transferrin saturation is concomitantly < 20% [74]. Notwithstanding skepticism in the hematological community about this “extensive” definition, IV iron supplementation with FCM in CHF patients improved both iron stores and cardiac function [74]. These results have been consistently replicated [75, 124], so that the most recent authoritative guidelines suggest systematically checking for ID in CHF and treating it with IV iron when present [125–127]. Notably, a subanalysis of the seminal FAIR-HF trial [74] focusing on anemic CHF patients showed a consistent Hb increase after IV iron, implicitly validating the above-mentioned “extensive” criteria for ID [128].
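The CHF criteria described above can be expressed as simple decision rules. The sketch below is purely illustrative and not a validated clinical tool: it encodes the definition attributed to Anker and colleagues [74] and, for comparison, a ferritin-only cut-off for uncomplicated IDA, taking the upper end (30 μg/L) of the 15–30 μg/L range quoted in the text as an assumption. The names `IronPanel`, `id_by_classic_ferritin` and `id_by_chf_criteria` are hypothetical.

```python
from dataclasses import dataclass

# Illustrative thresholds only (not a validated clinical tool).
CLASSIC_FERRITIN_CUTOFF_UG_L = 30.0  # assumed upper end of the 15-30 ug/L range
CHF_FERRITIN_LOW_UG_L = 100.0        # ferritin < 100 ug/L -> ID
CHF_FERRITIN_HIGH_UG_L = 300.0       # ferritin < 300 ug/L ...
CHF_TSAT_CUTOFF_PERCENT = 20.0       # ... together with TSAT < 20% -> ID


@dataclass
class IronPanel:
    """Hypothetical container for two routine iron parameters."""
    ferritin_ug_l: float
    tsat_percent: float


def id_by_classic_ferritin(panel: IronPanel) -> bool:
    """Ferritin-only rule typical of uncomplicated IDA."""
    return panel.ferritin_ug_l < CLASSIC_FERRITIN_CUTOFF_UG_L


def id_by_chf_criteria(panel: IronPanel) -> bool:
    """Broader CHF rule: ferritin < 100, or ferritin < 300 with TSAT < 20%."""
    if panel.ferritin_ug_l < CHF_FERRITIN_LOW_UG_L:
        return True
    return (panel.ferritin_ug_l < CHF_FERRITIN_HIGH_UG_L
            and panel.tsat_percent < CHF_TSAT_CUTOFF_PERCENT)


if __name__ == "__main__":
    patient = IronPanel(ferritin_ug_l=150.0, tsat_percent=15.0)
    print("classic ferritin rule:", id_by_classic_ferritin(patient))  # False
    print("CHF criteria:", id_by_chf_criteria(patient))               # True
```

For a hypothetical CHF patient with ferritin 150 μg/L and transferrin saturation 15%, the ferritin-only rule misses ID while the broader criteria flag it, illustrating how inflammation-raised ferritin can mask ID under the classical threshold.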
As mentioned above, the beneficial effect of iron in CHF is seen only with IV preparations (so far, FCM is the only compound tested in this condition), while a recent trial of oral iron was unsuccessful because of increased hepcidin levels [76]. As depicted in Fig. 2, IV iron also requires functional ferroportin to be delivered to circulating transferrin and ultimately to erythroid precursors in the bone marrow. However, it enters the circulation mainly through macrophage ferroportin, whereas oral iron uses intestinal ferroportin (Fig. 2). According to the most recent basic studies [129, 130], it is increasingly recognized that there are substantial quantitative differences between the overall expression of ferroportin at the intestinal level (which normally handles a small amount of iron, ~1–2 mg/day) and at the macrophage level (which normally handles much more iron, ~20–25 mg/day). In other words, the amount of hepcidin needed to block iron absorption is likely much lower than that required to inhibit the ferroportin expressed by the countless iron-recycling macrophages. Hence, the clinically confirmed success of IV iron in CHF represents an interesting paradigm in modern iron replacement therapy. CHF recapitulates the features of a condition in which ID is common and difficult to define by classical biochemical iron parameters, yet effectively treatable with iron, although only by the IV route, because the hepcidin increase driven by low-grade inflammation is sufficient to inhibit intestinal (but not macrophage) ferroportin.

Whether this applies to other chronic conditions in which inflammation and ID often coexist remains to be determined, and active clinical research is needed [131]. A further example could be chronic obstructive pulmonary disease (COPD), in which a previously unrecognized detrimental role of ID merits adequate investigation in the future [132].

Conclusions

The recent advances in the pathophysiology of IDA, together with the availability of new iron preparations and growing awareness of the implications of ID in a variety of clinical fields, are making iron replacement therapy more feasible and fascinating than ever. We are hopefully approaching a new era in which we can combat more effectively the most important nutritional deficiency worldwide.

References

Camaschella C. Iron-deficiency anemia. N Engl J Med. 2015;372:1832–43.

Kassebaum NJ, GBD 2013 Anemia Collaborators. The global burden of anemia. Hematol Oncol Clin North Am. 2016;30:247–308.

Stevens GA, Finucane MM, De-Regil LM, et al. Global, regional, and national trends in haemoglobin concentration and prevalence of total and severe anaemia in children and pregnant and non-pregnant women for 1995–2011: a systematic analysis of population-representative data. Lancet Glob Health. 2013;1:e16–25.

Busti F, Campostrini N, Martinelli N, et al. Iron deficiency in the elderly population, revisited in the hepcidin era. Front Pharmacol. 2014;5:83.

Hershko C, Camaschella C. How I treat unexplained refractory iron deficiency anemia. Blood. 2014;123:326–33.

Sami SS, Al-Araji SA, Ragunath K. Review article: gastrointestinal angiodysplasia—pathogenesis, diagnosis and management. Aliment Pharmacol Ther. 2014;39:15–34.

Loscalzo J. From clinical observation to mechanism—Heyde’s syndrome. N Engl J Med. 2012;367:1954–6.

Sender R, Fuchs S, Milo R. Revised estimates for the number of human and bacteria cells in the body. PLoS Biol. 2016;14:e1002533.

Kautz L, Nemeth E. Molecular liaisons between erythropoiesis and iron metabolism. Blood. 2014;124:479–82.

Pietrangelo A. Mechanisms of iron hepatotoxicity. J Hepatol. 2016;65:226–7.

Andrews NC. Forging a field: the golden age of iron biology. Blood. 2008;112:219–30.

Park CH, Valore EV, Waring AJ, et al. Hepcidin, a urinary antimicrobial peptide synthesized in the liver. J Biol Chem. 2001;276:7806–10.

Pigeon C, Ilyin G, Courselaud B, et al. A new mouse liver-specific gene, encoding a protein homologous to human antimicrobial peptide hepcidin, is overexpressed during iron overload. J Biol Chem. 2001;276:7811–9.

Nicolas G, Bennoun M, Devaux I, et al. Lack of hepcidin gene expression and severe tissue iron overload in upstream stimulatory factor 2 (USF2) knockout mice. Proc Natl Acad Sci USA. 2001;98:8780–5.

Abboud S, Haile DJ. A novel mammalian iron-regulated protein involved in intracellular iron metabolism. J Biol Chem. 2000;275:19906–12.

Donovan A, Brownlie A, Zhou Y, et al. Positional cloning of zebrafish ferroportin1 identifies a conserved vertebrate iron exporter. Nature. 2000;403:776–81.

McKie AT, Marciani P, Rolfs A, et al. A novel duodenal iron-regulated transporter, IREG1, implicated in the basolateral transfer of iron to the circulation. Mol Cell. 2000;5:299–309.

Nemeth E, Tuttle MS, Powelson J, et al. Hepcidin regulates cellular iron efflux by binding to ferroportin and inducing its internalization. Science. 2004;306:2090–3.

Ganz T. Hepcidin and iron regulation, 10 years later. Blood. 2011;117:4425–33.

Jordan JB, Poppe L, Haniu M, et al. Hepcidin revisited, disulfide connectivity, dynamics, and structure. J Biol Chem. 2009;284:24155–67.

Lehrer RI. Primate defensins. Nat Rev Microbiol. 2004;2:727–38.

Pietrangelo A. Genetics, genetic testing, and management of hemochromatosis: 15 years since hepcidin. Gastroenterology. 2015;149:1240–51.e4.

Muckenthaler MU, Rivella S, Hentze MW, et al. A red carpet for iron metabolism. Cell. 2017;168:344–61.

Ganz T. Systemic iron homeostasis. Physiol Rev. 2013;93:1721–41.

Girelli D, Nemeth E, Swinkels DW. Hepcidin in the diagnosis of iron disorders. Blood. 2016;127:2809–13.

Andriopoulos B, Corradini E, Xia Y, et al. BMP6 is a key endogenous regulator of hepcidin expression and iron metabolism. Nat Genet. 2009;41:482–7.

Piubelli C, Castagna A, Marchi G, et al. Identification of new BMP6 pro-peptide mutations in patients with iron overload. Am J Hematol. 2017;92:562–8.

Canali S, Zumbrennen-Bullough KB, Core AB, et al. Endothelial cells produce bone morphogenetic protein 6 required for iron homeostasis in mice. Blood. 2017;129:405–14.

Ganz T, Olbina G, Girelli D, et al. Immunoassay for human serum hepcidin. Blood. 2008;112:4292–7.

Bozzini C, Campostrini N, Trombini P, et al. Measurement of urinary hepcidin levels by SELDI-TOF-MS in HFE-hemochromatosis. Blood Cells Mol Dis. 2008;40:347–52.

Kautz L, Jung G, Valore EV, et al. Identification of erythroferrone as an erythroid regulator of iron metabolism. Nat Genet. 2014;46:678–84.

Russo R, Andolfo I, Manna F, et al. Increased levels of ERFE-encoding FAM132B in patients with congenital dyserythropoietic anemia type II. Blood. 2016;128:1899–902.

Ganz T, Jung G, Naeim A, et al. Immunoassay for human serum erythroferrone. Blood. 2017;130:1243–6.

Verga Falzacappa MV, Vujic Spasic M, Kessler R, et al. STAT3 mediates hepatic hepcidin expression and its inflammatory stimulation. Blood. 2007;109:353–8.

Shanmugam NK, Chen K, Cherayil BJ. Commensal bacteria-induced interleukin 1beta (IL-1beta) secreted by macrophages up-regulates hepcidin expression in hepatocytes by activating the bone morphogenetic protein signaling pathway. J Biol Chem. 2015;290:30637–47.

Nemeth E, Rivera S, Gabayan V, et al. IL-6 mediates hypoferremia of inflammation by inducing the synthesis of the iron regulatory hormone hepcidin. J Clin Invest. 2004;113:1271–6.

Kemna E, Pickkers P, Nemeth E, et al. Time-course analysis of hepcidin, serum iron, and plasma cytokine levels in humans injected with LPS. Blood. 2005;106:1864–6.

Ganz T. Iron in innate immunity: starve the invaders. Curr Opin Immunol. 2009;21:63–7.

Arezes J, Jung G, Gabayan V, et al. Hepcidin-induced hypoferremia is a critical host defense mechanism against the siderophilic bacterium Vibrio vulnificus. Cell Host Microbe. 2015;17:47–57.

Zeng C, Chen Q, Zhang K, et al. Hepatic hepcidin protects against polymicrobial sepsis in mice by regulating host iron status. Anesthesiology. 2015;122:374–86.

Weiss G, Goodnough LT. Anemia of chronic disease. N Engl J Med. 2005;352:1011–23.

Weiss G. Anemia of chronic disorders: new diagnostic tools and new treatment strategies. Semin Hematol. 2015;52:313–20.

Stockman R. The treatment of chlorosis by iron and some other drugs. Br Med J. 1893;1:942–4.

Nissim JA. Intravenous administration of iron. Lancet. 1947;2:49–51.

Munoz M, Gomez-Ramirez S, Besser M, et al. Current misconceptions in diagnosis and management of iron deficiency. Blood Transfus. 2017;15:422–37.

Santiago P. Ferrous versus ferric oral iron formulations for the treatment of iron deficiency: a clinical overview. Sci World J. 2012;2012:846824.

Bothwell TH, Pirzio-Biroli G, Finch CA. Iron absorption. I. Factors influencing absorption. J Lab Clin Med. 1958;51:24–36.

Gulec S, Anderson GJ, Collins JF. Mechanistic and regulatory aspects of intestinal iron absorption. Am J Physiol Gastrointest Liver Physiol. 2014;307:G397–409.

Auerbach M, Adamson JW. How we diagnose and treat iron deficiency anemia. Am J Hematol. 2016;91:31–8.

Goddard AF, James MW, McIntyre AS, et al. Guidelines for the management of iron deficiency anaemia. Gut. 2011;60:1309–16.

Peyrin-Biroulet L, Williet N, Cacoub P. Guidelines on the diagnosis and treatment of iron deficiency across indications: a systematic review. Am J Clin Nutr. 2015;102:1585–94.

Schrier SL. So you know how to treat iron deficiency anemia. Blood. 2015;126:1971.

Zimmermann MB, Hurrell RF. Nutritional iron deficiency. Lancet. 2007;370:511–20.

Tondeur MC, Schauer CS, Christofides AL, et al. Determination of iron absorption from intrinsically labeled microencapsulated ferrous fumarate (sprinkles) in infants with different iron and hematologic status by using a dual-stable-isotope method. Am J Clin Nutr. 2004;80:1436–44.

Hallberg L, Brune M, Rossander-Hulthen L. Is there a physiological role of vitamin C in iron absorption? Ann N Y Acad Sci. 1987;498:324–32.

Cancelo-Hidalgo MJ, Castelo-Branco C, Palacios S, et al. Tolerability of different oral iron supplements: a systematic review. Curr Med Res Opin. 2013;29:291–303.

Tolkien Z, Stecher L, Mander AP, et al. Ferrous sulfate supplementation causes significant gastrointestinal side-effects in adults: a systematic review and meta-analysis. PLoS One. 2015;10:e0117383.

Kortman GA, Raffatellu M, Swinkels DW, et al. Nutritional iron turned inside out: intestinal stress from a gut microbial perspective. FEMS Microbiol Rev. 2014;38:1202–34.

Zimmermann MB, Chassard C, Rohner F, et al. The effects of iron fortification on the gut microbiota in African children: a randomized controlled trial in Cote d’Ivoire. Am J Clin Nutr. 2010;92:1406–15.

Paganini D, Zimmermann MB. Effects of iron fortification and supplementation on the gut microbiome and diarrhea in infants and children: a review. Am J Clin Nutr. 2017. http://doi.org/10.3945/ajcn.117.156067

Jaeggi T, Kortman GA, Moretti D, et al. Iron fortification adversely affects the gut microbiome, increases pathogen abundance and induces intestinal inflammation in Kenyan infants. Gut. 2015;64:731–42.

Sazawal S, Black RE, Ramsan M, et al. Effects of routine prophylactic supplementation with iron and folic acid on admission to hospital and mortality in preschool children in a high malaria transmission setting: community-based, randomised, placebo-controlled trial. Lancet. 2006;367:133–43.

Zlotkin S, Newton S, Aimone AM, et al. Effect of iron fortification on malaria incidence in infants and young children in Ghana: a randomized trial. JAMA. 2013;310:938–47.

Finberg KE, Heeney MM, Campagna DR, et al. Mutations in TMPRSS6 cause iron-refractory iron deficiency anemia (IRIDA). Nat Genet. 2008;40:569–71.

De Falco L, Silvestri L, Kannengiesser C, et al. Functional and clinical impact of novel TMPRSS6 variants in iron-refractory iron-deficiency anemia patients and genotype-phenotype studies. Hum Mutat. 2014;35:1321–9.

Donker AE, Raymakers RA, Vlasveld LT, et al. Practice guidelines for the diagnosis and management of microcytic anemias due to genetic disorders of iron metabolism or heme synthesis. Blood. 2014;123:3873–86.

van Santen S, van Dongen-Lases EC, de Vegt F, et al. Hepcidin and hemoglobin content parameters in the diagnosis of iron deficiency in rheumatoid arthritis patients with anemia. Arthritis Rheum. 2011;63:3672–80.

Bergamaschi G, Di Sabatino A, Albertini R, et al. Serum hepcidin in inflammatory bowel diseases: biological and clinical significance. Inflamm Bowel Dis. 2013;19:2166–72.

Dick SA, Epelman S. Chronic heart failure and inflammation: what do we really know? Circ Res. 2016;119:159–76.

Jankowska EA, Rozentryt P, Witkowska A, et al. Iron deficiency: an ominous sign in patients with systolic chronic heart failure. Eur Heart J. 2010;31:1872–80.

von Haehling S, Jankowska EA, van Veldhuisen DJ, et al. Iron deficiency and cardiovascular disease. Nat Rev Cardiol. 2015;12:659–69.

Haas JD, Brownlie T. Iron deficiency and reduced work capacity: a critical review of the research to determine a causal relationship. J Nutr. 2001;131:676S–88S (discussion 688S–690S).

Okonko DO, Mandal AK, Missouris CG, et al. Disordered iron homeostasis in chronic heart failure: prevalence, predictors, and relation to anemia, exercise capacity, and survival. J Am Coll Cardiol. 2011;58:1241–51.

Anker SD, Comin Colet J, Filippatos G, et al. Ferric carboxymaltose in patients with heart failure and iron deficiency. N Engl J Med. 2009;361:2436–48.

Ponikowski P, van Veldhuisen DJ, Comin-Colet J, et al. Beneficial effects of long-term intravenous iron therapy with ferric carboxymaltose in patients with symptomatic heart failure and iron deficiency. Eur Heart J. 2015;36:657–68.

Lewis GD, Malhotra R, Hernandez AF, et al. Effect of oral iron repletion on exercise capacity in patients with heart failure with reduced ejection fraction and iron deficiency: the IRONOUT HF Randomized Clinical Trial. JAMA. 2017;317:1958–66.

Steensma DP, Sasu BJ, Sloan JA, et al. Serum hepcidin levels predict response to intravenous iron and darbepoetin in chemotherapy-associated anemia. Blood. 2015;125:3669–71.

Bregman DB, Morris D, Koch TA, et al. Hepcidin levels predict nonresponsiveness to oral iron therapy in patients with iron deficiency anemia. Am J Hematol. 2013;88:97–101.

Kroot JJ, van Herwaarden AE, Tjalsma H, et al. Second round robin for plasma hepcidin methods: first steps toward harmonization. Am J Hematol. 2012;87:977–83.

Prentice AM, Doherty CP, Abrams SA, et al. Hepcidin is the major predictor of erythrocyte iron incorporation in anemic African children. Blood. 2012;119:1922–8.

Moretti D, Goede JS, Zeder C, et al. Oral iron supplements increase hepcidin and decrease iron absorption from daily or twice-daily doses in iron-depleted young women. Blood. 2015;126:1981–9.

Stoffel NU, Cercamondi CI, Brittenham G, et al. Iron absorption from oral iron supplements given on consecutive versus alternate days and as single morning doses versus twice-daily split dosing in iron-depleted women: two open-label, randomised controlled trials. Lancet Haematol. 2017;4:e524–33.

Schrier SL, Auerbach M. Treatment of iron deficiency in adults. Wolters Kluwer: UpToDate; 2017.

Fabiano A, Brilli E, Fogli S, et al. Sucrosomial® iron absorption studied by in vitro and ex vivo models. Eur J Pharm Sci. 2017;111:425–31.

Pereira DI, Mergler BI, Faria N, et al. Caco-2 cell acquisition of dietary iron(III) invokes a nanoparticulate endocytic pathway. PLoS One. 2013;8:e81250.

Jahn MR, Nawroth T, Futterer S, et al. Iron oxide/hydroxide nanoparticles with negatively charged shells show increased uptake in Caco-2 cells. Mol Pharm. 2012;9:1628–37.

Pisani A, Riccio E, Sabbatini M, et al. Effect of oral liposomal iron versus intravenous iron for treatment of iron deficiency anaemia in CKD patients: a randomized trial. Nephrol Dial Transplant. 2015;30:645–52.

Valenti L, Messa P, Pelusi S, et al. Hepcidin levels in chronic hemodialysis patients: a critical evaluation. Clin Chem Lab Med. 2014;52:613–9.

Lee SW, Kim JM, Lim HJ, et al. Serum hepcidin may be a novel uremic toxin, which might be related to erythropoietin resistance. Sci Rep. 2017;7:4260.

Rimon E, Kagansky N, Kagansky M, et al. Are we giving too much iron? Low-dose iron therapy is effective in octogenarians. Am J Med. 2005;118:1142–7.

Hilty FM, Arnold M, Hilbe M, et al. Iron from nanocompounds containing iron and zinc is highly bioavailable in rats without tissue accumulation. Nat Nanotechnol. 2010;5:374–80.

Hosny KM, Banjar ZM, Hariri AH, et al. Solid lipid nanoparticles loaded with iron to overcome barriers for treatment of iron deficiency anemia. Drug Des Devel Ther. 2015;9:313–20.

Latunde-Dada GO, Pereira DI, Tempest B, et al. A nanoparticulate ferritin-core mimetic is well taken up by HuTu 80 duodenal cells and its absorption in mice is regulated by body iron. J Nutr. 2014;144:1896–902.

Zanella D, Bossi E, Gornati R, et al. Iron oxide nanoparticles can cross plasma membranes. Sci Rep. 2017;7:11413.

Heath CW, Strauss MB, Castle WB. Quantitative aspects of iron deficiency in hypochromic anemia: (the parenteral administration of iron). J Clin Invest. 1932;11:1293–312.

Baird IM, Podmore DA. Intramuscular iron therapy in iron-deficiency anaemia. Lancet. 1954;267:942–6.

Marchasin S, Wallerstein RO. The treatment of iron-deficiency anemia with intravenous iron dextran. Blood. 1964;23:354–8.

Hamstra RD, Block MH, Schocket AL. Intravenous iron dextran in clinical medicine. JAMA. 1980;243:1726–31.

Chertow GM, Mason PD, Vaage-Nilsen O, et al. Update on adverse drug events associated with parenteral iron. Nephrol Dial Transplant. 2006;21:378–82.

Auerbach M, Ballard H. Clinical use of intravenous iron: administration, efficacy, and safety. Hematol Am Soc Hematol Educ Program. 2010;2010:338–47.

Funk F, Ryle P, Canclini C, et al. The new generation of intravenous iron: chemistry, pharmacology, and toxicology of ferric carboxymaltose. Arzneimittelforschung. 2010;60:345–53.

Fishbane S. Review of issues relating to iron and infection. Am J Kidney Dis. 1999;34:S47–52.

Rampton D, Folkersen J, Fishbane S, et al. Hypersensitivity reactions to intravenous iron: guidance for risk minimization and management. Haematologica. 2014;99:1671–6.

Burns DL, Pomposelli JJ. Toxicity of parenteral iron dextran therapy. Kidney Int Suppl. 1999;69:S119–24.

Novey HS, Pahl M, Haydik I, et al. Immunologic studies of anaphylaxis to iron dextran in patients on renal dialysis. Ann Allergy. 1994;72:224–8.

Szebeni J, Fishbane S, Hedenus M, et al. Hypersensitivity to intravenous iron: classification, terminology, mechanisms and management. Br J Pharmacol. 2015;172:5025–36.

Avni T, Bieber A, Grossman A, et al. The safety of intravenous iron preparations: systematic review and meta-analysis. Mayo Clin Proc. 2015;90:12–23.

Bolton-Maggs PH, Cohen H. Serious hazards of transfusion (SHOT) haemovigilance and progress is improving transfusion safety. Br J Haematol. 2013;163:303–14.

Ganzoni AM. Disorders of hemoglobin synthesis (exclusive of iron deficiency). Schweiz Med Wochenschr. 1975;105:1081–7.

Calvet X, Ruiz MA, Dosal A, et al. Cost-minimization analysis favours intravenous ferric carboxymaltose over ferric sucrose for the ambulatory treatment of severe iron deficiency. PLoS One. 2012;7:e45604.

Auerbach M, Deloughery T. Single-dose intravenous iron for iron deficiency: a new paradigm. Hematol Am Soc Hematol Educ Program. 2016;2016:57–66.

Nguyen KL, Moriarty JM, Plotnik AN, et al. Ferumoxytol-enhanced MR angiography for vascular access mapping before transcatheter aortic valve replacement in patients with renal impairment: a step toward patient-specific care. Radiology. 2017:162899. http://doi.org/10.1148/radiol.2017162899

Neiser S, Rentsch D, Dippon U, et al. Physico-chemical properties of the new generation IV iron preparations ferumoxytol, iron isomaltoside 1000 and ferric carboxymaltose. Biometals. 2015;28:615–35.

Keating GM. Ferric carboxymaltose: a review of its use in iron deficiency. Drugs. 2015;75:101–27.

Bregman DB, Goodnough LT. Experience with intravenous ferric carboxymaltose in patients with iron deficiency anemia. Ther Adv Hematol. 2014;5:48–60.

Wolf M, Koch TA, Bregman DB. Effects of iron deficiency anemia and its treatment on fibroblast growth factor 23 and phosphate homeostasis in women. J Bone Miner Res. 2013;28:1793–803.

Courbebaisse M, Lanske B. Biology of fibroblast growth factor 23: from physiology to pathology. Cold Spring Harb Perspect Med. 2017. http://doi.org/10.1101/cshperspect.a031260

Macdougall IC. Intravenous iron therapy in non-dialysis CKD patients. Nephrol Dial Transplant. 2014;29:717–20.

Evstatiev R, Marteau P, Iqbal T, et al. FERGIcor, a randomized controlled trial on ferric carboxymaltose for iron deficiency anemia in inflammatory bowel disease. Gastroenterology. 2011;141:846–53.e1–2.

Kulnigg S, Stoinov S, Simanenkov V, et al. A novel intravenous iron formulation for treatment of anemia in inflammatory bowel disease: the ferric carboxymaltose (FERINJECT) randomized controlled trial. Am J Gastroenterol. 2008;103:1182–92.

Van Wyck DB, Mangione A, Morrison J, et al. Large-dose intravenous ferric carboxymaltose injection for iron deficiency anemia in heavy uterine bleeding: a randomized, controlled trial. Transfusion. 2009;49:2719–28.

Van Wyck DB, Martens MG, Seid MH, et al. Intravenous ferric carboxymaltose compared with oral iron in the treatment of postpartum anemia: a randomized controlled trial. Obstet Gynecol. 2007;110:267–78.

Breymann C, Gliga F, Bejenariu C, et al. Comparative efficacy and safety of intravenous ferric carboxymaltose in the treatment of postpartum iron deficiency anemia. Int J Gynaecol Obstet. 2008;101:67–73.

van Veldhuisen DJ, Ponikowski P, van der Meer P, et al. Effect of ferric carboxymaltose on exercise capacity in patients with chronic heart failure and iron deficiency. Circulation. 2017;136:1374–83.

Ponikowski P, Voors AA, Anker SD, et al. ESC guidelines for the diagnosis and treatment of acute and chronic heart failure: the task force for the diagnosis and treatment of acute and chronic heart failure of the European Society of Cardiology (ESC) Developed with the special contribution of the Heart Failure Association (HFA) of the ESC. Eur Heart J. 2016;37:2129–200.

Yancy CW, Jessup M, Bozkurt B, et al. ACC/AHA/HFSA focused update of the 2013 ACCF/AHA guideline for the management of heart failure: a report of the American College of Cardiology/American Heart Association Task Force on Clinical Practice Guidelines and the Heart Failure Society of America. J Am Coll Cardiol. 2017;70:776–803.

Ezekowitz JA, O’Meara E, McDonald MA, et al. Comprehensive update of the Canadian cardiovascular society guidelines for the management of heart failure. Can J Cardiol. 2017;33:1342–433.

Filippatos G, Farmakis D, Colet JC, et al. Intravenous ferric carboxymaltose in iron-deficient chronic heart failure patients with and without anaemia: a subanalysis of the FAIR-HF trial. Eur J Heart Fail. 2013;15:1267–76.

Drakesmith H, Nemeth E, Ganz T. Ironing out ferroportin. Cell Metab. 2015;22:777–87.

Sabelli M, Montosi G, Garuti C, et al. Human macrophage ferroportin biology and the basis for the ferroportin disease. Hepatology. 2017;65:1512–25.

Girelli D, Marchi G, Busti F. Iron replacement therapy: entering the new era without misconceptions, but more research is needed. Blood Transfus. 2017;15:379–81.

Cloonan SM, Mumby S, Adcock IM, et al. The “iron”-y of iron overload and iron deficiency in chronic obstructive pulmonary disease. Am J Respir Crit Care Med. 2017;196:1103–12.

Acknowledgements

D. G. has received funding for research on iron metabolism by Fondazione Cariverona (2014.0851), and the Veneto Region (PRIHTA no. 2014-00000451).


Cite this article

Girelli, D., Ugolini, S., Busti, F. et al. Modern iron replacement therapy: clinical and pathophysiological insights. Int J Hematol 107, 16–30 (2018). https://doi.org/10.1007/s12185-017-2373-3
