Abstract

Objectives

Recent legislation in Pennsylvania mandates that forecasts of "future dangerousness" be provided to judges when sentences are given. Similar requirements already exist in other jurisdictions. Research has shown that machine learning can lead to usefully accurate forecasts of criminal behavior in such settings. Some settings, however, lack the IT infrastructure needed to support machine learning. The intent of this paper is to provide a prototype procedure for making forecasts of future dangerousness that could be used to inform sentencing decisions when machine learning is not practical. We consider how classification trees can be improved so that they may provide an acceptable second choice.

Methods

We apply a version of classification trees available in R, with some technical enhancements to improve tree stability. Our approach is illustrated with real data that could be used to inform sentencing decisions.

Results

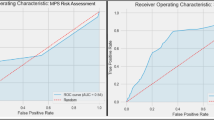

Modest-sized trees grown from large samples can forecast well and in a stable fashion, especially if the small fraction of indecisive classifications is identified and accounted for in a systematic manner. But machine learning is still to be preferred when practical.

Conclusions

Our enhanced version of classification trees may well provide a viable alternative to machine learning when machine learning is beyond local IT capabilities.


Notes

There can also be very different approaches to how a classification model is developed and evaluated. For example, proper concern about overfitting is sometimes not coupled with the use of held-out test data when a classification tree is constructed. Out-of-sample performance is today’s gold standard.

Any predictor can be used as many times as needed, but with different splitting values. For instance, the first split for age might be at 21 years, and a later split might be at 32 years.

The procedure is formally a Bayes classifier if the terminal node proportions are treated as probabilities.

This formalization applies equally well when there are more than two outcome classes. Also, the 1/0 coding is convenient, but any other binary labels will work. Because a class is categorical, the coding does not even need to be numerical. One might use, for instance, “F” for fail and “NF” for not fail.

There is nothing special about the 5 to 1 cost ratio; for the methodological issues raised in the paper, almost any reasonable cost ratio would suffice. The cost ratio simply cannot be so extreme that the same class is assigned to essentially all cases. In that instance, the role of either false positives or false negatives would be obscured.
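As a sketch of how a cost ratio enters a Bayes classifier (the standard expected-cost decision rule, not necessarily the exact notation used in the body of the paper), let $p$ be the proportion of “Fail” cases in a terminal node, $C_{FN}$ the cost of a false negative, and $C_{FP}$ the cost of a false positive. Treating $p$ as a probability, the node is assigned “Fail” when the expected cost of forecasting “No Fail” exceeds the expected cost of forecasting “Fail”:

$$
p\,C_{FN} > (1 - p)\,C_{FP}
\quad\Longleftrightarrow\quad
p > \frac{C_{FP}}{C_{FP} + C_{FN}}.
$$

With the 5 to 1 cost ratio ($C_{FN} = 5$, $C_{FP} = 1$), the threshold is $p > 1/6 \approx 0.167$, so a node can be forecast “Fail” even when failures are a clear minority of its cases.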

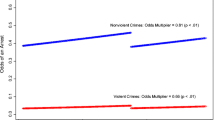

Cost ratios have ranged from 20 to 1 down to 3 to 1, with false negatives always the more costly error. Anecdotally, we have found that criminal justice officials and representatives from a variety of citizen groups broadly agree that, at least for crimes of violence, false negatives are substantially more costly than false positives.

In this context, a random forest is an ensemble of classification trees that differ from one another by user-controlled chance processes. In one such process, when each tree is grown, a random sample of observations is “held out” and serves as test data for that tree. There is no need for the researcher to assemble test data before the statistical analysis begins.
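In Breiman’s (2001a) formulation, the held-out cases for each tree are the “out-of-bag” observations left out of a bootstrap sample. The Python sketch below shows only that resampling step; growing the trees and the other chance process (random selection of predictors at each split) are omitted, and the function name `bootstrap_split` is hypothetical.

```python
import random


def bootstrap_split(n, rng):
    """Draw n case indices with replacement (the in-bag sample used to grow one tree).
    Cases never drawn are out-of-bag and can serve as that tree's test data."""
    in_bag = [rng.randrange(n) for _ in range(n)]
    drawn = set(in_bag)
    out_of_bag = [i for i in range(n) if i not in drawn]
    return in_bag, out_of_bag


rng = random.Random(2013)  # fixed seed so the split is reproducible
n = 10_000
in_bag, oob = bootstrap_split(n, rng)
# Roughly (1 - 1/n)**n, about 37%, of the cases are held out for each tree.
print(len(oob) / n)
```

Because every tree gets a fresh bootstrap sample, every case is out-of-bag for some trees, which is why no separate test set has to be assembled in advance.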

Overall model error is reported at the lower right corner of the table. However, when the loss function is asymmetric, the overall proportion correct (or in error) is misleading, because it implicitly assumes symmetric costs.
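A small worked example of why overall error misleads under asymmetric costs. The Python sketch below uses the 5 to 1 cost ratio from these notes; the data and the two forecasting rules are hypothetical, and the function names are illustrative only. Rule A forecasts “No Fail” for everyone, which is easy to do well on when failures are rare.

```python
def error_counts(actual, predicted, positive="Fail"):
    """Return (false negatives, false positives), treating `positive` as the failure class."""
    fn = sum(a == positive and p != positive for a, p in zip(actual, predicted))
    fp = sum(a != positive and p == positive for a, p in zip(actual, predicted))
    return fn, fp


def overall_error(actual, predicted):
    """Unweighted proportion misclassified: implicitly treats all errors as equally costly."""
    return sum(a != p for a, p in zip(actual, predicted)) / len(actual)


def average_cost(actual, predicted, fn_cost=5.0, fp_cost=1.0):
    """Mean cost per case when false negatives cost fn_cost and false positives cost fp_cost."""
    fn, fp = error_counts(actual, predicted)
    return (fn * fn_cost + fp * fp_cost) / len(actual)


# Hypothetical data: 10 failures among 100 cases.
actual = ["Fail"] * 10 + ["No Fail"] * 90
rule_a = ["No Fail"] * 100  # forecast no failure for anyone
rule_b = ["Fail"] * 8 + ["No Fail"] * 2 + ["Fail"] * 12 + ["No Fail"] * 78

for name, pred in [("A", rule_a), ("B", rule_b)]:
    print(name, overall_error(actual, pred), average_cost(actual, pred))
```

Rule A has the lower overall error (0.10 vs. 0.14) but the higher average cost (0.50 vs. 0.22), so the two criteria rank the rules in opposite order.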

One important reason stems from the large imbalance in the outcome distribution favoring the absence of violent crime. The absence of violent crime is far more common and, therefore, easier to predict correctly. Random forests earns its keep by forecasting rare events.

We used the same tuning parameter values so that the trees were comparable. We were trying to isolate the impact of new data for a tree of a given complexity. Had we altered the tuning parameter values, changes in how cases were classified could result from either the new data or differing tuning parameters. The cost ratio was also unchanged.

One reason Kuhnert and Mengersen suggest additional measures of stability is that they apparently do not find sufficiently strong justification for using a Bayes classifier. In our context, where the relative costs of false negatives and false positives are elicited from stakeholders, their concerns seem far less troubling.

Even using the same data, it is likely that a machine learning procedure would do better. Recall, however, that in this paper we are assuming that machine learning is not a practical option.

References

Banks S, Robbins PC, Silver E, Vesselinov R, Steadman HJ, Monahan J, Mulvey EP, Appelbaum PS, Grisso RT, Roth LH (2004) A multiple-models approach to violence risk assessment among people with mental disorder. Crim Justice Behav 31:324–340

Barnes GC, Ahlman L, Gill C, Sherman LW, Kurtz E, Malvestuto R (2010) Low intensity community supervision for low-risk offenders: a randomized, controlled trial. J Exp Criminol 6:159–189

Berk RA (2008a) Forecasting methods in crime and justice. In: Hagan J, Scheppele KL, Tyler TR (eds) Annual review of law and social science, vol 4. Annual Reviews, Palo Alto, pp 173–192

Berk RA (2008b) Statistical learning from a regression perspective. Springer, New York

Berk RA (2009) The role of race in forecasts of violent crime. Race Soc Probl 1(4):231–242

Berk RA (2011) Asymmetric loss functions for forecasting in criminal justice settings. J Quant Criminol 27:107–123

Berk RA (2012) Criminal justice forecasts of risk: a machine learning approach. Springer, New York

Berk RA (2013) Algorithmic criminology. Secur Inform 2(5) (forthcoming)

Berk RA, Sorenson SB, He Y (2005) Developing a practical forecasting screener for domestic violence incidents. Eval Rev 29(4):358–382

Berk RA, Kriegler B, Baek J-H (2006) Forecasting dangerous inmate misconduct: an application of ensemble statistical procedures. J Quant Criminol 22(2):135–145

Berk RA, Sherman L, Barnes G, Kurtz E, Ahlman L (2009) Forecasting murder within a population of probationers and parolees: a high stakes application of statistical learning. J R Stat Soc Ser A 172(part I):191–211

Borden HG (1928) Factors predicting parole success. J Am Inst Crim Law Criminol 19:328–336

Breiman L (2001a) Random forests. Mach Learn 45:5–32

Breiman L (2001b) Statistical modeling: two cultures (with discussion). Stat Sci 16:199–231

Breiman L, Friedman JH, Olshen RA, Stone CJ (1984) Classification and regression trees. Wadsworth Press, Monterey

Burgess EW (1928) Factors determining success or failure on parole. In: Bruce AA, Harno AJ, Burgess EW, Landesco EW (eds) The working of the indeterminate sentence law and the parole system in Illinois. State Board of Parole, Springfield, pp 205–249

Bushway S (2011) Albany Law Rev 74(3)

Casey PM, Warren RK, Elek JK (2011) Using offender risk and needs assessment information at sentencing: guidance from a national working group. National Center for State Courts. http://www.ncsconline.org/

Chipman HA, George EI, McCulloch RE (2010) BART: Bayesian additive regression trees. Ann Appl Stat 4(1):266–298

Farrington DP, Tarling R (2003) Prediction in criminology. SUNY Press, Albany

Feeley M, Simon J (1994) Actuarial justice: the emerging new criminal law. In: Nelken D (ed) The futures of criminology. Sage, London, pp 173–201

Friedman JH (2002) Stochastic gradient boosting. Comput Stat Data Anal 38:367–378

Gottfredson SD, Moriarty LJ (2006) Statistical risk assessment: old problems and new applications. Crime Delinq 52(1):178–200

Harcourt BW (2007) Against prediction: profiling, policing, and punishing in an actuarial age. University of Chicago Press, Chicago

Hastie R, Dawes RM (2001) Rational choice in an uncertain world. Sage, Thousand Oaks

Hastie T, Tibshirani R, Friedman J (2009) The elements of statistical learning, 2nd edn. Springer, New York

Holmes S (2003) Bootstrapping phylogenetic trees: theory and methods. Stat Sci 18(2):241–255

Hyatt JM, Chanenson SL, Bergstrom MH (2011) Reform in motion: the promise and perils of incorporating risk assessments and cost-benefit analysis into Pennsylvania sentencing. Duquesne Law Rev 49(4):707–749

Kleiman M, Ostrom BJ, Cheesman FL (2007) Using risk assessment to inform sentencing decisions for nonviolent offenders in Virginia. Crime Delinq 53(1):1–27

Kuhnert PM, Mengersen K (2003) Reliability measures for local nodes assessment in classification trees. J Comput Graph Stat 12(2):398–426

Messinger SL, Berk RA (1987) Dangerous people: a review of the NAS report on career criminals. Criminology 25(3):767–781

Monahan J (1981) Predicting violent behavior: an assessment of clinical techniques. Sage, Newbury Park

Pew Center on the States, Public Safety Performance Project (2011) Risk/needs assessment 101: science reveals new tools to manage offenders. The Pew Center on the States. http://www.pewcenteronthestates.org/publicsafety

Silver E, Chow-Martin L (2002) A multiple models approach to assessing recidivism risk: implications for judicial decision making. Crim Justice Behav 29:538–569

Skeem JL, Monahan J (2011) Current directions in violence risk assessment. Curr Dir Psychol Sci 20(1):38–42

Tóth N (2008) Handling classification uncertainty with decision trees in biomedical diagnostic systems. PhD thesis, Department of Measurement and Information Systems, Budapest University of Technology and Economics

Turner S, Hess J, Jannetta J (2009) Development of the California Risk Assessment Instrument. Center for Evidence Based Corrections, The University of California, Irvine


Appendix: Outline of Software for Stability Assessments


The steps that follow summarize the R code used to discard unreliable forecasts.

1. Construct a classification tree using a loss matrix with the desired costs.
2. For each case, find the terminal node in which it was classified.
3. Create a table that shows, for each terminal node, how many observations in that node were labelled “Fail” vs. “No Fail.”
4. To see how close the vote in each node was, reweight the “Fails” in each terminal node by the cost assigned to false negatives (e.g., assign a cost of 5.0 to each failure). One can then check the majority vote (using the weighted fails) to see which classification won.
5. Let F be the weighted sum of all of the “Fails” (e.g., 400 fails × 5.0 cost = 2,000) and NF be the total number of non-failures, each with a weight of 1.0. Let p = F/(F + NF), the proportion of the weighted total number of votes that are “Fail” in a given terminal node. The same calculation can be done symmetrically with (1 − p).
6. Set a desired margin c. A node is too close to call if |p − 0.5| < c. How close is too close will require some trial and error.
7. Store which nodes are too close to call and which are not. Use the output from the tree, with the additional label that some nodes are now too close to call.
8. One can exclude these too-close observations and run a cross-check against the bootstrapped trees to see how the stability improves.
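A minimal Python sketch of steps 3 through 8, assuming the tree has already been fit (step 1) and each case’s terminal node recorded (step 2), for example from the `where` component of an `rpart` fit in R. This is not the authors’ R code: the function names, the toy data, the agreement measure used for step 8, and the representation of bootstrap output as lists of predicted classes are all hypothetical.

```python
from collections import Counter, defaultdict

FAIL, NO_FAIL = "Fail", "No Fail"  # assumes these are the only two labels


def node_vote_table(nodes, labels):
    """Step 3: count observed 'Fail' and 'No Fail' cases in each terminal node."""
    table = defaultdict(Counter)
    for node, label in zip(nodes, labels):
        table[node][label] += 1
    return table


def weighted_fail_share(table, fail_cost=5.0, no_fail_cost=1.0):
    """Steps 4-5: p = F / (F + NF) per node, where each 'Fail' counts fail_cost times."""
    shares = {}
    for node, counts in table.items():
        f = counts[FAIL] * fail_cost
        nf = counts[NO_FAIL] * no_fail_cost
        shares[node] = f / (f + nf)
    return shares


def flag_close_calls(shares, margin):
    """Steps 6-7: map node -> (weighted-majority class, too_close_to_call)."""
    flags = {}
    for node, p in shares.items():
        label = FAIL if p > 0.5 else NO_FAIL  # an exact tie defaults to 'No Fail'
        flags[node] = (label, abs(p - 0.5) < margin)
    return flags


def label_cases(nodes, flags):
    """Step 7: attach each case's node-level class and close-call flag."""
    return [flags[node] for node in nodes]


def agreement(reference, bootstrap_runs, keep=None):
    """Step 8: share of (case, run) pairs in which a bootstrap tree reproduces
    the reference class, optionally restricted to cases where keep[i] is True."""
    idx = [i for i in range(len(reference)) if keep is None or keep[i]]
    pairs = [(run[i], reference[i]) for run in bootstrap_runs for i in idx]
    return sum(a == b for a, b in pairs) / len(pairs)


if __name__ == "__main__":
    # Hypothetical toy data: terminal-node id and observed outcome for 12 cases.
    nodes = [1, 1, 1, 1, 2, 2, 2, 2, 2, 3, 3, 3]
    labels = [FAIL] + [NO_FAIL] * 3 + [FAIL] + [NO_FAIL] * 4 + [NO_FAIL] * 3
    shares = weighted_fail_share(node_vote_table(nodes, labels))
    flags = flag_close_calls(shares, margin=0.1)
    for node in sorted(flags):
        # Node 2 (p = 5/9, about 0.556) is the only one flagged as too close to call.
        print(node, round(shares[node], 3), flags[node])
```

Comparing `agreement(reference, runs)` with `agreement(reference, runs, keep=...)`, where `keep` excludes the flagged cases, gives one simple way to see whether dropping close calls improves stability.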


Cite this article

Berk, R., Bleich, J. Forecasts of Violence to Inform Sentencing Decisions. J Quant Criminol 30, 79–96 (2014). https://doi.org/10.1007/s10940-013-9195-0
