Abstract

Discovering the most interesting patterns is the key problem in the field of pattern mining. While ranking or selecting patterns is well studied for itemsets, it is surprisingly under-researched for other, more complex, pattern types. In this paper we propose a new quality measure for episodes. An episode is essentially a set of events with possible restrictions on the order of events. We say that an episode is significant if its occurrence is abnormally compact, that is, only a few gap events occur between the actual episode events, when compared to the expected length according to the independence model. We can apply this measure as a post-pruning step by first discovering frequent episodes and then ranking them according to this measure. In order to compute the score we need to compute the mean and the variance according to the independence model. As a main technical contribution we introduce a technique that allows us to compute these values. Such a task is surprisingly complex, and in order to solve it we develop intricate finite state machines that allow us to compute the needed statistics. We also show that asymptotically our score can be interpreted as a \(P\) value. In our experiments we demonstrate that despite its intricacy our ranking is fast: we can rank tens of thousands of episodes in seconds. Our experiments with text data demonstrate that our measure ranks interpretable episodes high.

Notes

The book was obtained from http://www.gutenberg.org/etext/15.

The abstracts were obtained from http://kdd.ics.uci.edu/databases/nsfabs/nsfawards.html.

The addresses were obtained from http://www.bartleby.com/124/pres68.

The abstracts were obtained from http://jmlr.csail.mit.edu/.

An episode is closed if there is no superepisode with the same support.

References

Achar A, Laxman S, Viswanathan R, Sastry PS (2012) Discovering injective episodes with general partial orders. Data Min Knowl Discov 25(1):67–108

Billingsley P (1995) Probability and measure, 3rd edn. Wiley, New York

Calders T, Dexters N, Goethals B (2007) Mining frequent itemsets in a stream. In: Proceedings of the 7th IEEE international conference on data mining (ICDM 2007), pp 83–92

Casas-Garriga G (2003) Discovering unbounded episodes in sequential data. In: Knowledge discovery in databases: PKDD 2003, 7th European conference on principles and practice of knowledge discovery in databases, pp 83–94

Cule B, Goethals B, Robardet C (2009) A new constraint for mining sets in sequences. In: Proceedings of the SIAM international conference on data mining (SDM 2009), pp 317–328

Gwadera R, Atallah MJ, Szpankowski W (2005a) Markov models for identification of significant episodes. In: Proceedings of the SIAM international conference on data mining (SDM 2005), pp 404–414

Gwadera R, Atallah MJ, Szpankowski W (2005b) Reliable detection of episodes in event sequences. Knowl Inf Syst 7(4):415–437

Hirao M, Inenaga S, Shinohara A, Takeda M, Arikawa S (2001) A practical algorithm to find the best episode patterns. In: Discovery science, pp 435–440

Mannila H, Toivonen H, Verkamo AI (1997) Discovery of frequent episodes in event sequences. Data Min Knowl Discov 1(3):259–289. doi:10.1023/A:1009748302351

Méger N, Rigotti C (2004) Constraint-based mining of episode rules and optimal window sizes. In: Knowledge discovery in databases: PKDD 2004, 8th European conference on principles and practice of knowledge discovery in databases, pp 313–324

Pei J, Wang H, Liu J, Wang K, Wang J, Yu PS (2006) Discovering frequent closed partial orders from strings. IEEE Trans Knowl Data Eng 18(11):1467–1481

Tatti N (2009) Significance of episodes based on minimal windows. In: Proceedings of the 9th IEEE international conference on data mining (ICDM 2009), pp 513–522

Tatti N, Cule B (2011) Mining closed episodes with simultaneous events. In: Proceedings of the 17th ACM SIGKDD conference on knowledge discovery and data mining (KDD 2011), pp 1172–1180

Tatti N, Cule B (2012) Mining closed strict episodes. Data Min Knowl Discov 25(1):34–66

Tatti N, Vreeken J (2012) The long and the short of it: summarising event sequences with serial episodes. In: The 18th ACM SIGKDD international conference on knowledge discovery and data mining, 2012, pp 462–470

Troníček Z (2001) Episode matching. In: Combinatorial pattern matching, pp 143–146

van der Vaart AW (1998) Asymptotic statistics. Cambridge series in statistical and probabilistic mathematics. Cambridge University Press, Cambridge

Webb GI (2007) Discovering significant patterns. Mach Learn 68(1):1–33

Webb GI (2010) Self-sufficient itemsets: an approach to screening potentially interesting associations between items. TKDD 4(1):1–20

Acknowledgments

Nikolaj Tatti was partly supported by a Post-Doctoral Fellowship of the Research Foundation – Flanders (FWO).

Additional information

Responsible editor: Eamonn Keogh.

Appendix: Proofs

Proof

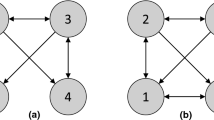

(Proof of Proposition 2) We will prove this by induction. Let \(i\) be the source state of \(M\). The proposition holds trivially when \(X = \left\{ i\right\} \), a source state. Assume now that the proposition holds for all parent states of \(X\).

Assume that \(s\) covers \(X\). Let \(t\) be a subsequence of \(s\) that leads \({{sm}}\mathopen {}\left( M\right) \) from the source state \(\left\{ i\right\} \) to \(X\). Let \(s_e\) be the last symbol of \(s\) occurring in \(t\). Then a parent state \(Y = \left\{ y_1 ,\ldots , y_L\right\} = {{par}}\mathopen {}\left( X; s_e\right) \) is covered by \(s[1, e - 1]\). By the induction assumption at least one \(y_k\) is covered by \(s[1, e - 1]\). If there is \(x_j \in X\) such that \(x_j = y_k\), then \(x_j\) is covered by \(s\), otherwise there is \(x_j\) that has \(y_k\) as a parent state. The edge connecting \(x_j\) and \(y_k\) is labelled with \(s_e\). Hence \(s\) covers \(x_j\) also.

To prove the other direction assume that \(s\) covers \(x_j\). Let \(t\) be a subsequence that leads \(M\) from \(i\) to \(x_j\). Let \(s_e\) be the last symbol occurring in \(t\). Let \(y\) be the parent state of \(x_j\) connected by an edge labelled with \(s_e\). Since \(s_e \in {{in}}\mathopen {}\left( X\right) \), we must have \(Y\) as a parent state of \(X\) such that \(y \in Y\). By the induction assumption, \(s[1, e - 1]\) covers \(Y\). Hence \(s\) covers \(X\). \(\square \)

In order to prove Proposition 3 we need the following lemma.

Lemma 2

Let \(G\) be an episode and assume a sequence \(s = \left( s_1 ,\ldots , s_L\right) \) that covers \(G\). Let \(\mathcal{H } = \left\{ G - v; v \in {{sinks}}\mathopen {}\left( G\right) , {{lab}}\mathopen {}\left( v\right) = s_L\right\} \). If \(\mathcal{H }\) is empty, then \(s[1, L - 1]\) covers \(G\). Otherwise, there is an episode \(H \in \mathcal{H }\) that is covered by \(s[1, L - 1]\).

Proof

Let \(f\) be a valid mapping of \(V(G)\) to indices of \(s\) corresponding to the coverage. If \(\mathcal{H }\) is empty, then \(L\) is not in the range of \(f\), and hence \(s[1, L - 1]\) covers \(G\). If \(\mathcal{H }\) is not empty but \(L\) is not in the range of \(f\), then \(s[1, L - 1]\) covers \(G\) and therefore also every episode in \(\mathcal{H }\), since each of them is a subepisode of \(G\).

Assume now that \(L\) is in the range of \(f\), that is, \(f(v) = L\) for some node \(v\), which must then be a sink with label \(s_L\). Episode \(G - v\) is in \(\mathcal{H }\). Moreover, \(f\) restricted to \(G - v\) provides the mapping needed for \(s[1, L - 1]\) to cover \(G - v\). \(\square \)
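Lemma 2 suggests a simple recursive coverage test that reads the sequence from its last symbol backwards. The following is only an illustrative sketch, not the state machine construction of the paper: the function name `covers`, the dictionary `labels` (node to symbol) and the set `edges` (pairs `(u, v)` meaning that `u` must occur before `v`) are hypothetical names chosen for this example.

```python
from functools import lru_cache


def covers(labels, edges, seq):
    """Return True if seq covers the episode given by labels and edges.

    labels: dict mapping node -> symbol (hypothetical representation).
    edges:  set of (u, v) pairs, u must be mapped before v.
    seq:    indexable sequence of symbols (string or tuple).
    """
    succ = {v: set() for v in labels}
    for u, v in edges:
        succ[u].add(v)

    @lru_cache(maxsize=None)
    def rec(nodes, length):
        # nodes: frozenset of nodes still to be matched; length: prefix length.
        if not nodes:
            return True
        if length == 0:
            return False
        last = seq[length - 1]
        # Sinks of the remaining episode whose label equals the last symbol.
        sinks = [v for v in nodes
                 if not (succ[v] & nodes) and labels[v] == last]
        if not sinks:
            # The family H of Lemma 2 is empty: drop the last symbol.
            return rec(nodes, length - 1)
        # Otherwise some G - v must be covered by the shorter prefix.
        return any(rec(nodes - {v}, length - 1) for v in sinks)

    return rec(frozenset(labels), len(seq))
```

For example, with `labels = {1: "a", 2: "b", 3: "c"}` and `edges = {(1, 2)}`, the sequence `"acb"` covers the episode whereas `"bca"` does not, because `a` must precede `b`.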

Proof

(Proof of Proposition 3) If \({{g}}\mathopen {}\left( X, s\right) = \left\{ i\right\} \), then it is trivial to see that \(s\) covers \(X\).

Assume that \(s\) covers \(X\). We will prove this direction by induction over \(L\), the length of \(s\). The proposition holds for \(L = 0\). Assume that \(L > 0\) and that the proposition holds for all sequences of length \(L - 1\).

Let \(Y = {{g}}\mathopen {}\left( X, s_L\right) \). Note that \({{g}}\mathopen {}\left( X, s\right) = {{g}}\mathopen {}\left( Y, s[1, L - 1]\right) \). Hence, to prove the proposition we need to show that \(s[1, L - 1]\) covers \(Y\).

If \(Y = \left\{ i\right\} \), then \(s[1, L - 1]\) covers \(Y\). Hence, we can assume that \(Y \ne \left\{ i\right\} \), that is, \(Y = {{sub}}\mathopen {}\left( X; s_L\right) \cup {{stay}}\mathopen {}\left( X; s_L\right) \).

Proposition 2 implies that one of the states of \(M_G\), say \(x \in X\), is covered by \(s\). Proposition 1 states that the corresponding episode, say \(H\), is covered by \(s\).

Assume that \(x \in Y\). This is possible only if \(x \in {{stay}}\mathopen {}\left( X; s_L\right) \), that is, there is no sink node in \(H\) labelled as \(s_L\). Lemma 2 implies that \(s[1, L - 1]\) covers \(H\), and Propositions 1 and 2 imply that \(s[1, L - 1]\) covers \(Y\).

Assume that \(x \notin Y\). Then \({{sub}}\mathopen {}\left( X; s_L\right) \subseteq Y\) contains all states of \(M_G\) corresponding to the episodes of the form \(H - v\), where \(v\) is a sink node of \(H\) with a label \(s_L\). According to Lemma 2, \(s[1, L - 1]\) covers one of these episodes, and Propositions 1 and 2 imply that \(s[1, L - 1]\) covers \(Y\). \(\square \)

Proof

(Proof of Proposition 4) We will prove the proposition by induction over \(L\), the length of \(s\). The proposition holds when \(L = 0\). Assume that \(L > 0\) and that the proposition holds for sequences of length \(L - 1\).

Let \(\beta = (y_1, y_2) = {{g}}\mathopen {}\left( \alpha , s_L\right) \). Then, by definition of \(M^*\), \(y_i = {{g}}\mathopen {}\left( x_i, s_L\right) \). Write \(t = s[1, L - 1]\). Since

\[ {{g}}\mathopen {}\left( \alpha , s\right) = {{g}}\mathopen {}\left( \beta , t\right) \quad \text{and} \quad {{g}}\mathopen {}\left( x_i, s\right) = {{g}}\mathopen {}\left( y_i, t\right) , \]

and, because of the induction assumption, \({{g}}\mathopen {}\left( \beta , t\right) = ({{g}}\mathopen {}\left( y_1, t\right) , {{g}}\mathopen {}\left( y_2, t\right) )\), we have \({{g}}\mathopen {}\left( \alpha , s\right) = ({{g}}\mathopen {}\left( x_1, s\right) , {{g}}\mathopen {}\left( x_2, s\right) )\). \(\square \)
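The componentwise behaviour stated in Proposition 4 can be illustrated with a generic product of two deterministic machines. The sketch below is an assumption-laden toy, not the machine \(M^*\) of the paper: `run_backward`, `product`, `parity_a` and `seen_b` are hypothetical names, and the two toy step functions merely stand in for \(M_1\) and \(M_2\). The sequence is consumed from its last symbol, mirroring the recursion in which \(s_L\) is processed first.

```python
def run_backward(step, state, seq):
    """Apply step to state for each symbol of seq, last symbol first."""
    for sym in reversed(seq):
        state = step(state, sym)
    return state


def product(step1, step2):
    """Joint machine: a pair of states advances componentwise."""
    def step(pair, sym):
        return (step1(pair[0], sym), step2(pair[1], sym))
    return step


# Two toy machines used only for illustration.
def parity_a(state, sym):
    # Tracks the parity of the number of 'a' symbols seen so far.
    return state ^ 1 if sym == "a" else state


def seen_b(state, sym):
    # Becomes True once a 'b' has been seen and stays True.
    return state or sym == "b"


joint = product(parity_a, seen_b)
result = run_backward(joint, (0, False), "abca")
```

Running the joint machine gives the same pair as running each machine separately on the same sequence, which is the content of the proposition for this toy setting.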

Proof

(Proof of Proposition 5) Assume that \(s\) is a minimal window for \(G\). Since \(s\) covers \(S\) in \(M\), \({{g}}\mathopen {}\left( S, s; M\right) = I\). This implies that \({{g}}\mathopen {}\left( S, s; M_1\right) = I\) or \({{g}}\mathopen {}\left( S, s; M_1\right) = J\). The latter case implies that \(s[2, L]\) covers \(S\) in \(M\), which is a contradiction. Hence, \({{g}}\mathopen {}\left( S, s; M_1\right) = I\). Let \(Z = {{g}}\mathopen {}\left( T, s; M_2\right) \). If \(Z = I\), then \(s[1, L - 1]\) covers \(S\) in \(M\), which is a contradiction. Hence \(Z \ne I\). Proposition 4 implies that \({{g}}\mathopen {}\left( \alpha , s\right) = (I, Z)\).

Assume that \({{g}}\mathopen {}\left( \alpha , s\right) = (I, Y)\) such that \(Y \ne I\). Proposition 4 implies that \({{g}}\mathopen {}\left( S, s; M_1\right) = I\) and \({{g}}\mathopen {}\left( T, s; M_2\right) \ne I\). The former implication leads to \({{g}}\mathopen {}\left( S, s; M\right) = I\) which implies that \(s\) covers \(G\).

If \(s[2, L]\) covers \(G\), then \({{g}}\mathopen {}\left( S, s[2, L]; M\right) = I\) and so \({{g}}\mathopen {}\left( S, s; M_1\right) = J\), which is a contradiction. Hence \(s[2, L]\) does not cover \(G\). The latter implication leads to \({{g}}\mathopen {}\left( S, s[1, L - 1]; M\right) \ne I\) which implies that \(s[1, L - 1]\) does not cover \(G\). This proves the proposition. \(\square \)
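The characterisation used in this proof (a window is minimal if it covers the episode but neither \(s[2, L]\) nor \(s[1, L - 1]\) does) can be checked directly by brute force. The sketch below is restricted to serial episodes as a simplifying assumption, and the function names are hypothetical; it does not use the state machines \(M_1\) and \(M_2\).

```python
def covers_serial(episode, window):
    """True if the symbols of episode occur in window in the given order."""
    it = iter(window)
    # 'sym in it' advances the iterator, so matches must appear in order.
    return all(sym in it for sym in episode)


def is_minimal_window(episode, window):
    """Window covers the episode, but dropping either end symbol breaks coverage."""
    return (covers_serial(episode, window)
            and not covers_serial(episode, window[1:])
            and not covers_serial(episode, window[:-1]))


def minimal_windows(episode, seq):
    """All half-open index pairs (i, j) such that seq[i:j] is a minimal window."""
    return [(i, j)
            for i in range(len(seq))
            for j in range(i + 1, len(seq) + 1)
            if is_minimal_window(episode, seq[i:j])]
```

For the serial episode `("a", "b")` and the sequence `"aabxab"`, the call `minimal_windows(("a", "b"), "aabxab")` returns the two windows `(1, 3)` and `(4, 6)`. This enumeration is quadratic in the sequence length and is meant only to make the definition concrete.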

Proof

(Proof of Proposition 6) If \(L = 0\), then \({{g}}\mathopen {}\left( x, s\right) = x\) which immediately implies the proposition. Assume that \(L > 0\). Note that \({{g}}\mathopen {}\left( x, s\right) = {{g}}\mathopen {}\left( {{g}}\mathopen {}\left( x, s_L\right) , s[1, L - 1]\right) \).

Since individual symbols in \(s\) are independent, it follows that

This proves the proposition. \(\square \)
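The same idea of conditioning on the last symbol and using independence yields a short dynamic program for the probability that a random sequence covers an episode. The sketch below assumes a serial episode and i.i.d. symbols; `cover_probability` and its parameters are hypothetical names, and the code is not the general computation of \({{pg}}\) for arbitrary episodes.

```python
def cover_probability(episode, p, length):
    """P(an i.i.d. sequence of `length` symbols covers the serial episode).

    episode: tuple of symbols that must occur in this order.
    p:       dict mapping symbol -> occurrence probability.
    """
    m = len(episode)
    # prob[k]: probability that a sequence of the current length covers
    # the first k events. With length 0 only the empty prefix is covered.
    prob = [1.0] + [0.0] * m
    for _ in range(length):
        new = [1.0] * (m + 1)
        for k in range(1, m + 1):
            hit = p[episode[k - 1]]  # last symbol equals the k-th event
            # Either the last symbol matches event k and the rest must cover
            # the first k - 1 events, or it does not and the rest must still
            # cover all k events.
            new[k] = hit * prob[k - 1] + (1.0 - hit) * prob[k]
        prob = new
    return prob[m]
```

For instance, with two equally likely symbols, `cover_probability(("a", "b"), {"a": 0.5, "b": 0.5}, 3)` gives 0.5, since four of the eight sequences of length 3 contain `a` followed later by `b`.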

Proof

(Proof of Lemma 1) Define \(q = \sqrt{1 - \min _{a \in \Sigma } p(a)}\). Note that \(q < 1\). We claim that for each \(x\) there is a constant \(C_x\) such that \({{pg}}\mathopen {}\left( x, Y, L\right) \le C_xq^L = O(q^{L})\), which in turn proves the lemma. To prove the claim we use induction over parenthood of \(x\) and \(L\).

Since the source node is not in \(Y\), the first step follows immediately. Assume that the result holds for all parent states of \(x\). Define

Since \(C_x \ge 1\), the case of \(L = 0\) holds. Assume that the induction assumption holds for \(C_y\) and for \(C_x\) up to \(L - 1\). Let \(r = 1 - \sum _{a \in {{in}}\mathopen {}\left( x\right) } p(a)\). Note that \(r \le q^2\). According to Proposition 6 we have

This proves that \({{pg}}\mathopen {}\left( x, Y, L\right) \) decays at an exponential rate. \(\square \)

Proof

(Proof of Proposition 8) The proposition follows by a straightforward manipulation of Eq. 1. First note that

Equation 1 implies that

Combining Eqs. 5 and 6 and solving \({{m}}\mathopen {}\left( x, f, Y\right) \) gives us the result. \(\square \)

To prove the asymptotic normality we will use the following theorem.

Theorem 1

(Theorem 27.4 in Billingsley 1995) Assume that \(U_k\) is a stationary sequence with \(\mathrm{E }\left[ U_k\right] = 0\), \(\mathrm{E }\left[ U_k^{12}\right] < \infty \), and is \(\alpha \)-mixing with \(\alpha (n) = O(n^{-5})\), where \(\alpha (n)\) is the strong mixing coefficient,

\[ \alpha (n) = \sup _{A, B} \left| p(A, B) - p(A)p(B)\right| , \]

where \(A\) is an event depending only on \(U_{-\infty }, \ldots , U_k\) and \(B\) is an event depending only on \(U_{k + n}, \ldots ,U_{\infty }\). Let \(S_k = U_1 + \cdots + U_k\). Then \(\sigma ^2 = \lim _k 1/k \mathrm{E }\left[ S_k^2\right] \) exists, \(S_k / \sqrt{k}\) converges to \(N(0, \sigma ^2)\), and \(\sigma ^2 = \mathrm{E }\left[ U_1^2\right] + 2\sum _{k = 2}^\infty \mathrm{E }\left[ U_1U_k\right] \).

Proof

(Proof of Proposition 10) Let us write \(T_k = (Z_k, X_k) - (q, p)\) and \(S_L = 1/\sqrt{L}\sum _{k = 1}^L T_k\). Assume that we are given a vector \(r = (r_1, r_2)\) and write \(U_k = r^TT_k\). We will first prove that \(r^TS_L\) converges to a normal distribution using Theorem 1.

First note that \(\mathrm{E }\left[ U_k\right] = 0\) and that

Since every moment of \(Z_k\) and \(X_k\) is finite, \(\mathrm{E }\left[ U_k^{12}\right] \) is also finite. We will prove now that \(U_k\) is \(\alpha \)-mixing.

Fix \(k\) and \(N\). Let \(W\) be the event that \(s[k + 1, N]\) covers \(G\). If \(W\) is true, then \(X_l\) and \(Z_l\) (and hence \(U_l\)) for \(l \le k\) depend only on \(s[l, N]\), that is, either there is a minimal window \(s[l, N^{\prime }]\), where \(N^{\prime } < N\), or \(X_l = Z_l = 0\).

Let \(A\) be an event depending only on \(U_{-\infty }, \ldots , U_k\) and \(B\) be an event depending only on \(U_{N + 1}, \ldots ,U_{\infty }\). Then \(p(A,B \mid W) = p(A \mid W)p(B \mid W)\). We can rephrase this and bound \(\alpha (n) \le p(s[1, n - 1] \text{ does not cover } G)\). To bound the right side, let \(M = {{sm}}\mathopen {}\left( M_G\right) \), let \(v\) be its sink state and let \(V\) be all states save the source state. Then the probability is equal to

Since \(V\) does not contain the source node, the moment \({{m}}\mathopen {}\left( v, n \rightarrow n^5, V\right) \) is finite. Consequently, \(n^5{{pg}}\mathopen {}\left( v, V, n\right) \rightarrow 0\), which implies that \(\alpha (n) = O(n^{-5})\). Thus Theorem 1 implies that \(r^TS_L\) converges to a normal distribution with the variance \(\sigma ^2 = r_1^2C_{11} + 2r_1r_2C_{12} + r_2^2C_{22} = r^TCr\). Lévy’s continuity theorem (Theorem 2.13 Vaart 1998) now implies that the characteristic function of \(r^TS_L\) converges to the characteristic function of the normal distribution \(N(0, \sigma ^2)\),

\[ \mathrm{E }\left[ \exp \left( it\, r^TS_L\right) \right] \rightarrow \exp \left( -\frac{1}{2}t^2\, r^TCr\right) . \]

The left side is the characteristic function of \(S_L\) (with \(tr\) as a parameter). Similarly, the right side is the characteristic function of \(N(0, C)\). Lévy’s continuity theorem now implies that \(S_L\) converges to \(N(0, C)\). \(\square \)

Proof

(Proof of Proposition 11) Function \(f(x, y) = x/y\) is differentiable at \((q, p)\). Since \(1/\sqrt{L}\sum _{k = 1}^L \left( (Z_k, X_k) - (q, p)\right) \) converges to a normal distribution, we can apply Theorem 3.1 in Vaart (1998) so that

\[ \sqrt{L}\left( \frac{\sum _{k = 1}^L Z_k}{\sum _{k = 1}^L X_k} - \frac{q}{p}\right) \]

converges to \(N(0, \sigma ^2)\), where \(\sigma ^2 = \nabla f(q, p)^T C \nabla f(q, p)\). The gradient of \(f\) is equal to \(\nabla f(q, p) = (1/p, -q/p^2) = (1/p, -\mu /p)\), where \(\mu = q/p\). The proposition follows. \(\square \)
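The variance formula \(\sigma ^2 = \nabla f(q, p)^T C \nabla f(q, p)\) is a plain quadratic form and is easy to evaluate once \(q\), \(p\) and the \(2 \times 2\) covariance matrix \(C\) are known. The following is a minimal sketch with hypothetical names (`ratio_variance`, and `C` given as a nested list); it assumes that \(C\) has already been computed by other means.

```python
def ratio_variance(q, p, C):
    """Delta-method variance for f(x, y) = x / y evaluated at (q, p).

    C is a 2 x 2 covariance matrix given as nested lists or tuples.
    """
    mu = q / p
    grad = (1.0 / p, -mu / p)  # gradient of x / y at (q, p)
    # Quadratic form grad^T C grad.
    return sum(grad[i] * C[i][j] * grad[j]
               for i in range(2) for j in range(2))
```

With \(q = 1\), \(p = 2\) and \(C\) equal to the identity matrix, the gradient is \((0.5, -0.25)\) and the function returns \(0.25 + 0.0625 = 0.3125\).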

Proof

(Proof of Proposition 12) To prove all four cases simultaneously, let us write \(A\) to be either \(X_1\) or \(Z_1\) and \(B_k\) to be either \(X_k\) or \(Z_k\). Let \(a = \mathrm{E }\left[ A\right] \) and \(b = \mathrm{E }\left[ B_k\right] \). First note that \(\mathrm{E }\left[ (A - a)(B_k - b)\right] = \mathrm{E }\left[ A(B_k - b)\right] \), which allows us to ignore \(a\) inside the mean.

Assume that we have \(0 < n < k\). Then, given that \(Y_1 = n\), \(A\) and \(X_1\) depend only on the first \(n\) symbols of the sequence. Since \(B_k\) does not depend on the first \(k - 1\) symbols, this implies that

which in turn implies that \(\mathrm{E }\left[ A (B_k - b) \mid Y_1 = n\right] = 0\).

Note that \(A = 0\) whenever \(Y_1 = 0\). Consequently, we have

where the second last equality holds because \(\sum _{k = 2}^{Y_1} 1 = Y_1 - X_1\) and the last equality follows since \(X_k = X_k^2\) and \(Z_k = X_kZ_k\) for any \(k\). \(\square \)

Cite this article

Tatti, N. Discovering episodes with compact minimal windows. Data Min Knowl Disc 28, 1046–1077 (2014). https://doi.org/10.1007/s10618-013-0327-9

DOI: https://doi.org/10.1007/s10618-013-0327-9