Abstract

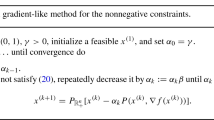

We consider concave minimization problems over nonconvex sets. Optimization problems with this structure arise in sparse principal component analysis. We analyze both a gradient projection algorithm and an approximate Newton algorithm in which the Hessian approximation is a multiple of the identity. Convergence results are established. In numerical experiments arising in sparse principal component analysis, the gradient projection algorithm performs very similarly to the truncated power method and the generalized power method. In some cases, the approximate Newton algorithm with a Barzilai–Borwein Hessian approximation and a nonmonotone line search can be substantially faster than the other algorithms and can converge to a better solution.
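To make the setting concrete, here is a minimal NumPy sketch assuming the standard sparse PCA formulation: minimize the concave function \(f(x) = -x^T \Sigma x\), with \(\Sigma\) positive semidefinite, over the nonconvex set \(\Omega = \{x : \|x\|_2 = 1, \|x\|_0 \le s\}\). The function names, the fixed step `alpha`, and the absolute-value, clamped Barzilai–Borwein quotient are illustrative assumptions rather than the paper's code, and the nonmonotone line search is omitted.

```python
import numpy as np


def project_sparse_sphere(y, s):
    """Euclidean projection of y onto {x : ||x||_2 = 1, ||x||_0 <= s}.

    Keeps the s entries of largest magnitude and rescales them to unit norm.
    Assumes those s entries are not all zero.
    """
    x = np.zeros_like(y, dtype=float)
    keep = np.argsort(np.abs(y))[-s:]  # indices of the s largest |y_i|
    x[keep] = y[keep]
    return x / np.linalg.norm(x)


def sparse_pca_gp(Sigma, s, x0, alpha=1.0, bb=False, iters=200,
                  lam_min=1e-10, lam_max=1e10):
    """Gradient projection for min f(x) = -x' Sigma x over the sparse unit sphere.

    bb=False: fixed step alpha.
    bb=True:  step 1/lam, where lam * I approximates the Hessian via a
              Barzilai-Borwein quotient. Taking the absolute value and clamping
              lam are assumptions of this sketch (s'y < 0 for a concave f).
    Returns the final iterate and the objective value at every iterate.
    """
    f = lambda x: -x @ Sigma @ x       # concave when Sigma is PSD
    grad = lambda x: -2.0 * Sigma @ x
    x = project_sparse_sphere(np.asarray(x0, dtype=float), s)
    g = grad(x)
    step = alpha
    values = [f(x)]
    for _ in range(iters):
        # x - step * g = (I + 2 step Sigma) x is never zero for PSD Sigma.
        x_new = project_sparse_sphere(x - step * g, s)
        g_new = grad(x_new)
        if bb:
            dx, dg = x_new - x, g_new - g
            sy, ss = abs(dx @ dg), dx @ dx
            if ss > 0:  # keep the previous step once the iterates stop moving
                lam = min(max(sy / ss, lam_min), lam_max)
                step = 1.0 / lam
        x, g = x_new, g_new
        values.append(f(x))
    return x, values
```

Two observations follow from this sketch. Because \(x_k \in \Omega\) and \(x_{k+1}\) is the closest point of \(\Omega\) to \(x_k - \alpha g_k\), we get \(g_k^T(x_{k+1} - x_k) \le -\|x_{k+1} - x_k\|^2/(2\alpha)\); concavity then gives \(f(x_{k+1}) \le f(x_k) - \|x_{k+1} - x_k\|^2/(2\alpha)\), so the objective decreases for every positive step. Also, since the projection is invariant under positive scaling, \(P(x + 2\alpha\Sigma x) \to P(\Sigma x)\) as \(\alpha \to \infty\), which is a truncated power step; this is one way to see why the two methods can behave alike.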


References

Alizadeh, A.A., et al.: Distinct types of diffuse large B-cell lymphoma identified by gene expression profiling. Nature 403, 503–511 (2000)

Barzilai, J., Borwein, J.M.: Two point step size gradient methods. IMA J. Numer. Anal. 8, 141–148 (1988)

Beck, A., Teboulle, M.: Mirror descent and nonlinear projected subgradient methods for convex optimization. Oper. Res. Lett. 31, 167–175 (2003)

Bertsekas, D.P.: Projected Newton methods for optimization problems with simple constraints. SIAM J. Control Optim. 20, 221–246 (1982)

Bhaskara, A., Charikar, M., Chlamtac, E., Feige, U., Vijayaraghavan, A.: Detecting high log-densities: an \(O(n^{1/4})\) approximation for densest k-subgraph. In: Proceedings of the Forty-Second ACM Symposium on Theory of Computing, ACM, pp. 201–210 (2010)

Boldi, P., Rosa, M., Santini, M., Vigna, S.: Layered label propagation: A multiresolution coordinate-free ordering for compressing social networks. In: Proceedings of the 20th International Conference on World Wide Web, ACM Press (2011)

Boldi, P., Vigna, S.: The WebGraph framework I: Compression techniques. In: Proceedings of the Thirteenth International World Wide Web Conference (WWW 2004), Manhattan, USA, ACM Press, pp. 595–601 (2004)

Cadima, J., Jolliffe, I.T.: Loading and correlations in the interpretation of principal components. J. Appl. Stat. 22, 203–214 (1995)

Candes, E., Wakin, M.: An introduction to compressive sampling. IEEE Signal Process. Mag. 25, 21–30 (2008)

Chen, S.S., Donoho, D.L., Saunders, M.A.: Atomic decomposition by basis pursuit. SIAM J. Sci. Comput. 20, 33–61 (1998)

Clarkson, K.L.: Coresets, sparse greedy approximation, and the Frank-Wolfe algorithm. ACM Trans. Algorithms (TALG) 6, 63 (2010)

d’Aspremont, A., Bach, F., Ghaoui, L.E.: Optimal solutions for sparse principal component analysis. J. Mach. Learn. Res. 9, 1269–1294 (2008)

Donoho, D.L.: Compressed sensing. IEEE Trans. Inform. Theory 52, 1289–1306 (2006)

Efron, B., Hastie, T., Johnstone, I., Tibshirani, R.: Least angle regression. Ann. Stat. 32, 407–499 (2004)

Frank, M., Wolfe, P.: An algorithm for quadratic programming. Nav. Res. Logist. Q. 3, 95–110 (1956)

Grippo, L., Lampariello, F., Lucidi, S.: A nonmonotone line search technique for Newton’s method. SIAM J. Numer. Anal. 23, 707–716 (1986)

Hager, W.W., Zhang, H.: A new active set algorithm for box constrained optimization. SIAM J. Optim. 17, 526–557 (2006)

Hazan, E., Kale, S.: Projection-free online learning. In: Langford, J., Pineau, J. (eds.) Proceedings of the 29th International Conference on Machine Learning, Omnipress, pp. 521–528 (2012)

Jaggi, M.: Revisiting Frank-Wolfe: Projection-free sparse convex optimization. In: Dasgupta, S., McAllester, D. (eds.) Proceedings of the 30th International Conference on Machine Learning, vol. 28, pp. 427–435 (2013)

Jeffers, J.: Two case studies in the application of principal components. Appl. Stat. 16, 225–236 (1967)

Jenatton, R., Obozinski, G., Bach, F.: Structured sparse principal component analysis. In: International Conference on Artificial Intelligence and Statistics (AISTATS) (2010)

Jolliffe, I.T., Trendafilov, N.T., Uddin, M.: A modified principal component technique based on the LASSO. J. Comput. Graph. Stat. 12, 531–547 (2003)

Journée, M., Nesterov, Y., Richtárik, P., Sepulchre, R.: Generalized power method for sparse principal component analysis. J. Mach. Learn. Res. 11, 517–553 (2010)

Khuller, S., Saha, B.: On finding dense subgraphs. In: Albers, S., Marchetti-Spaccamela, A., Matias, Y., Nikoletseas, S., Thomas, W. (eds.) Automata, Languages and Programming, pp. 597–608. Springer, New York (2009)

Lacoste-Julien, S., Jaggi, M., Schmidt, M., Pletscher, P.: Block-coordinate Frank-Wolfe optimization for structural SVMs. In: Dasgupta, S., McAllester, D. (eds.) Proceedings of the 30th International Conference on Machine Learning, vol. 28, pp. 53–61 (2013)

Luss, R., Teboulle, M.: Convex approximations to sparse PCA via Lagrangian duality. Oper. Res. Lett. 39, 57–61 (2011)

Luss, R., Teboulle, M.: Conditional gradient algorithms for rank-one matrix approximations with a sparsity constraint. SIAM Rev. 55, 65–98 (2013)

Ramaswamy, S., Tamayo, P., Rifkin, R., Mukherjee, S., Yeang, C.-H., Angelo, M., Ladd, C., Reich, M., Latulippe, E., Mesirov, J.P., et al.: Multiclass cancer diagnosis using tumor gene expression signatures. Proc. Natl. Acad. Sci. USA 98, 15149–15154 (2001)

Rockafellar, R.T.: Convex Analysis. Princeton University Press, Princeton (1970)

Sriperumbudur, B.K., Torres, D.A., Lanckriet, G.R.: A majorization-minimization approach to the sparse generalized eigenvalue problem. Mach. Learn. 85, 3–39 (2011)

Takeda, A., Niranjan, M., Gotoh, J.-Y., Kawahara, Y.: Simultaneous pursuit of out-of-sample performance and sparsity in index tracking portfolios. Comput. Manag. Sci. 10, 21–49 (2013)

van den Berg, E., Friedlander, M.P.: Probing the Pareto frontier for basis pursuit solutions. SIAM J. Sci. Comput. 31, 890–912 (2009)

Wright, S.J., Nowak, R.D., Figueiredo, M.A.T.: Sparse reconstruction by separable approximation. IEEE Trans. Signal Process. 57, 2479–2493 (2009)

Ye, Y., Zhang, J.: Approximation of dense-n/2-subgraph and the complement of min-bisection. J. Glob. Optim. 25, 55–73 (2003)

Yuan, X.-T., Zhang, T.: Truncated power method for sparse eigenvalue problems. J. Mach. Learn. Res. 14, 899–925 (2013)

Zou, H., Hastie, T., Tibshirani, R.: Sparse principal component analysis. J. Comput. Graph. Stat. 15, 265–286 (2006)

Additional information

The authors gratefully acknowledge support by the National Science Foundation under Grants 1115568 and 1522629 and by the Office of Naval Research under Grants N00014-11-1-0068 and N00014-15-1-2048.

About this article

Cite this article

Hager, W.W., Phan, D.T. & Zhu, J. Projection algorithms for nonconvex minimization with application to sparse principal component analysis. J Glob Optim 65, 657–676 (2016). https://doi.org/10.1007/s10898-016-0402-z

DOI: https://doi.org/10.1007/s10898-016-0402-z