Abstract

While it is generally accepted that the observed reduction of the Northern Hemisphere spring snow cover extent (SCE) is linked to warming of the climate system caused by human-induced greenhouse gas emissions, it has been difficult to robustly quantify the anthropogenic contribution to the observed change. This study addresses the challenge by undertaking a formal detection and attribution analysis of SCE changes based on several observational datasets with different structural characteristics, in order to account for the substantial observational uncertainty. The datasets considered include a blended in situ-satellite dataset extending from 1923 to 2012 (Brown), the National Oceanic and Atmospheric Administration (NOAA) snow chart Climate Data Record for 1968–2012, the Global Land Data Assimilation System version 2.0 (GLDAS-2 Noah) reanalysis for 1951–2010, and the NOAA 20th-century reanalysis, version 2 (20CR2) covering 1948–2012. We analyse observed early spring (March-April) and late spring (May-June) NH SCE changes in these datasets using climate simulations of the responses to anthropogenic and natural forcings combined (ALL) and to natural forcings alone (NAT) from the Coupled Model Intercomparison Project Phase 5 (CMIP5). The ALL-forcing response is detected in all of the observed records, indicating that observed changes are inconsistent with internal variability. The analysis also shows that the ALL-forcing simulations substantially underestimate the observed changes as recorded in the Brown and NOAA datasets, but that they are more consistent with changes seen in the GLDAS and 20CR2 reanalyses. A two-signal analysis of the GLDAS data is able to detect the influence of the anthropogenic component of the observed SCE changes separately from the effect of natural forcing.
Despite dataset and modelling uncertainty, these results, together with the understanding of the causes of observed warming over the past century, provide substantial evidence of a human contribution to the observed decline in Northern Hemisphere spring snow cover extent.

1 Introduction

Northern Hemisphere (NH) snow cover extent (SCE) has declined over the past 90 years, with the greatest reduction (~3.3 million km²) observed during the 1980s (Vaughan et al. 2013). Studies have generally focused on the spring season, when higher snow albedo feedbacks occur, including March-April (Brown and Robinson 2011; Brutel-Vuilmet et al. 2013; Rupp et al. 2013), May-June (Brown et al. 2010; Callaghan et al. 2011), April-June (Derksen and Brown 2012; Déry and Brown 2007), and March-May (Robinson and Frei 2000).

Although SCE reductions would substantially affect water supply and hydroclimatic extremes (Najafi and Moradkhani 2015; Yang et al. 2003), only a few studies have addressed the role of anthropogenic forcing agents such as greenhouse gases in decreasing SCE trends. The IPCC Fourth Assessment Report (Hegerl et al. 2007a) noted the consistency between the decline in global SCE and warming, but did not formally attribute the observed SCE changes to human influence on the climate system. Recently, Rupp et al. (2013) detected the response to a combination of anthropogenic and natural forcings in observed SCE by comparing observed March and April SCE changes over the period 1922–2005, based on the dataset of Brown and Robinson (2011; see also Brown 2000), with an ensemble average of CMIP5 (Coupled Model Intercomparison Project Phase 5) simulations that were driven with historical natural and anthropogenic forcings combined (ALL). They showed that while SCE in the models declined, as expected, the model-simulated trends were only half as strong as observed. They did not investigate whether the response to anthropogenic forcings (ANT) can be separated from the response to natural forcing (NAT) in observations, and also did not consider observational uncertainty, for example, as represented by differences between datasets (Brown et al. 2010; Mudryk et al. 2015). Earlier, Pierce et al. (2008), in a regional study of the western US, had detected anthropogenic influence on the mass of the April 1st snow pack, measured as snow water equivalent (SWE), normalized by the amount of precipitation that had been received during the preceding 6 months (i.e., the antecedent October-March period).

This study undertakes a detailed assessment of the observed and simulated SCE decline over the Northern Hemisphere, North America, and Eurasia using multiple observed datasets. We compare simulated SCE changes with observed changes in several datasets that are based on in situ measurements, remotely sensed records, a combination of the two, and reanalyses of various types from which it is possible to estimate observationally constrained historical SCE changes. The differences between these products underscore that there are large observational uncertainties in estimates of SCE (Vaughan et al. 2013). We therefore use multiple observational products to ensure the robustness of SCE detection and attribution results and to account for observational uncertainty. It should be noted that some reanalysis datasets with relatively recent start dates, such as the Modern-Era Retrospective Analysis for Research and Applications (MERRA; Rienecker et al. 2011) and the European Centre for Medium-Range Weather Forecasts Interim Reanalysis (ERA-Interim; Dee et al. 2011), both starting in 1979, are likely too short to detect anthropogenic signals at sub-hemispheric scales and thus are not included in this study. We consider observed March-April and May-June SCE changes in several datasets starting before 1979 to determine whether changes are the consequence of external forcing.

2 Data and methods

Four observational datasets based on satellite measurements, blended in-situ and satellite measurements, a global land surface data assimilation system and an atmospheric reanalysis are considered.

The satellite-based weekly SCE dataset of Robinson et al. (2012) for 1968–2012 is based on NOAA’s National Climatic Data Center (NCDC) Climate Data Record (CDR). It is the longest available satellite record of NH SCE and has been used in numerous studies (Brown and Derksen 2013; Brown and Robinson 2011; Derksen and Brown 2012; Déry and Brown 2007; Dye 2002; Flanner et al. 2009). Both the satellite instruments and retrieval methods that underpin this record have evolved over time. From October 1966 to June 1999, SCE was estimated by visually assessing satellite shortwave imagery. Weekly SCE maps were generated on an 89 × 89 line grid overlaid on a NH stereographic map, with grid cell areas ranging from 16,000 km² to 42,000 km² (Robinson et al. 1993). Each grid cell was flagged as snow covered if more than 50 % of its area had visible snow cover; otherwise it was categorized as snow free. The National Ice Center daily Interactive Multisensor Snow and Ice Mapping System (IMS) replaced the manual approach in June 1999 (Ramsay 1998). IMS relies on visible satellite imagery such as MODIS, and utilizes station observations and passive microwave data to improve snow monitoring during cloudy or nighttime conditions. The IMS resolution increased from 24 km to 4 km in 2004. From June 1999 onwards, the CDR reports pseudo-weekly charts based on the IMS data on the same 89 × 89 mesh that was used for the visually assessed product. The snow cover mapping improvements over time, along with other factors such as cloud cover changes, may have induced non-climatic temporal changes in estimated SCE, as discussed in previous studies (Brown and Derksen 2013; Wang et al. 2005).

Using the weekly snow charts from the CDR we obtained annual March-April and May-June mean snow cover fractions (SCF) for each grid cell by calculating the fraction of the period when snow cover was present. The area of each grid cell was then multiplied by its SCF to obtain the corresponding snow cover extent.
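The calculation described above can be sketched as follows. This is a minimal illustration, not the processing code used for the CDR: the function names, the 0/1 encoding of the weekly charts and the two-cell example values are all hypothetical.

```python
def snow_cover_fraction(weekly_flags):
    """Return the fraction of weekly charts in which a cell is snow covered.

    weekly_flags: sequence of 0/1 (or False/True) values, one per weekly
    chart falling in the season (e.g. March-April of a single year).
    """
    flags = list(weekly_flags)
    if not flags:
        raise ValueError("at least one weekly chart is required")
    return sum(1 for flag in flags if flag) / len(flags)


def snow_cover_extent(cell_areas_km2, flags_per_cell):
    """Sum area x SCF over all grid cells to get the seasonal SCE in km^2."""
    if len(cell_areas_km2) != len(flags_per_cell):
        raise ValueError("one flag series is required per grid cell")
    return sum(
        area * snow_cover_fraction(flags)
        for area, flags in zip(cell_areas_km2, flags_per_cell)
    )


# Hypothetical two-cell example with eight weekly charts in the season.
areas = [20000.0, 30000.0]
flags = [
    [1, 1, 1, 1, 1, 1, 0, 0],  # snow covered in 6 of 8 weeks
    [1, 1, 0, 0, 0, 0, 0, 0],  # snow covered in 2 of 8 weeks
]
sce = snow_cover_extent(areas, flags)
```

Weighting each cell area by its SCF, rather than counting whole cells, lets partially snow-covered seasons contribute proportionally to the seasonal mean extent.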

The blended in situ-satellite dataset considered in this study is that of Brown and Robinson (2011), who provide estimates of NH March-April SCE over 1923–2012 based on historical station records and the NOAA weekly satellite snow cover data described above. Pre-satellite SCE data in this dataset are based on monthly snow cover and daily snow depth observations available in the former Soviet Union, the People’s Republic of China, Canada and the United States, and on reconstructed monthly snow cover duration from daily temperature and precipitation station data.

A third dataset considered in this study is based on monthly snow water equivalent (SWE) obtained from the Global Land Data Assimilation System version 2.0 (i.e. GLDAS-2 Noah) reanalysis for the period 1951–2010 (Rodell et al. 2004). GLDAS-2 Noah estimates the observed land surface state, including SWE, soil moisture and surface fluxes such as evaporation, by using a global meteorological forcing dataset from Princeton University (Sheffield et al. 2006) to drive the Noah global land surface model. The analysed SWE that is produced by this system, which is available at a 1° spatial resolution, is updated daily by assimilating remotely sensed MODIS snow cover records. The GLDAS data assimilation adjusts the surface snow cover in accordance with MODIS observations by either removing snow from a grid cell if MODIS indicates no snow cover, or adding a small amount of snow (10 mm SWE) if MODIS indicates a grid cell is snow covered while the land surface model simulates snow free conditions (Rodell et al. 2004). We classify grid cells with more than 5 kg/m2 (~5 mm) snow water equivalent as being snow covered (Brutel-Vuilmet et al. 2013). Monthly SCE is estimated by calculating the area with non-zero SCF.
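A rough sketch of the threshold classification and area summation is given below. It assumes a regular latitude-longitude grid on a spherical Earth of radius 6371 km and ignores land fraction within cells; these choices, along with the function names and example cells, are illustrative assumptions rather than details taken from the GLDAS processing.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed mean radius of a spherical Earth
SWE_THRESHOLD = 5.0       # kg/m^2, the snow-cover criterion quoted in the text


def cell_area_km2(lat_south, lat_north, dlon_deg):
    """Area of a latitude-longitude cell on a sphere, in km^2."""
    band = math.sin(math.radians(lat_north)) - math.sin(math.radians(lat_south))
    return EARTH_RADIUS_KM ** 2 * math.radians(dlon_deg) * band


def is_snow_covered(swe):
    """Classify a cell as snow covered when SWE exceeds the threshold."""
    return swe > SWE_THRESHOLD


def monthly_sce_km2(cells, resolution_deg=1.0):
    """Total area of snow-covered cells.

    cells: iterable of (lat_south, swe) pairs, where lat_south is the
    southern edge of a resolution_deg x resolution_deg cell.
    """
    total = 0.0
    for lat_south, swe in cells:
        if is_snow_covered(swe):
            total += cell_area_km2(
                lat_south, lat_south + resolution_deg, resolution_deg
            )
    return total


# Hypothetical cells: only the first exceeds the 5 kg/m^2 criterion.
example_cells = [(60.0, 42.0), (60.0, 3.0), (45.0, 5.0)]
example_sce = monthly_sce_km2(example_cells)
```

Because each monthly cell is classified as either snow covered or snow free, the monthly SCF in this sketch is binary, and the extent is simply the summed area of the cells that pass the threshold.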

Finally, we consider a dataset based on the 20th century reanalysis version 2 (20CR2) ensemble mean for the period 1948–2012 (Compo et al. 2011). The 20CR2 is a 56 member ensemble reanalysis of the atmosphere and its underlying land surface. It uses observed sea surface temperature and sea ice as lower boundary conditions over oceans and assimilates only surface pressure data to constrain the atmosphere. This data is available at 2° spatial resolution. Snow cover fraction calculated from 20CR2 has been shown to represent well the onset of Eurasian snow cover (Peings et al. 2013).

All available data products considered in this paper have limitations. All of the observational products may be affected by inhomogeneities that result from evolving observing technology (e.g., changing coverage of in situ networks, the transition from in situ to remote sensing, and changing remote sensing technology and resolution). In addition, GLDAS may be affected by limitations in the land surface model that is the heart of the analysis system. The 20CR2 reanalysis shares these limitations and is further limited by having assimilated only surface pressure observations; it thus lacks constraints from MODIS, surface temperature and precipitation observations. This presumably provides a weaker constraint on the meteorological drivers of the 20CR2 land surface component than would have been the case if a more comprehensive suite of meteorological variables had been assimilated. Moreover, none of the products are completely independent, although GLDAS and 20CR2 are structurally very different from the Brown and NOAA datasets, and from each other.

For each dataset, SCE anomalies are calculated relative to a 1971–2000 climatology and are used throughout the rest of the study. Figure 1 shows the changes in the annual means of the observational records. Since there are negligible differences between the NOAA and Brown datasets in March-April during 1968–2012, only the latter is considered and shown in the figure, as it covers a longer time period. Here, GLDAS is shown over the period 1948–2010; however, the detection and attribution analyses of GLDAS SCE changes that will be described below are based on non-overlapping 5-year averages covering the period 1951–2010. According to Fig. 1, Brown and NOAA show larger variability than the GLDAS and 20CR2 reanalysis datasets. Similar trends are seen in all datasets in March-April. In contrast, May-June changes are considerably more uncertain, as NOAA shows a substantially larger declining SCE trend than GLDAS and 20CR2. Brown et al. (2010), in a study of Arctic SCE, showed that June SCE from the NOAA products before the introduction of the Interactive Multisensor Snow and Ice Mapping System (IMS) and from IMS-24 km were outliers compared to a range of other independent datasets. They found that these datasets had lower correlations and higher RMSEs relative to the multi-dataset average than other data sources.

We compare SCE changes in the observed datasets with changes simulated by models participating in CMIP5 (Taylor et al. 2012). We use SWE from CMIP5 simulations with historical natural forcings only (NAT, 8 models, 32 simulations) that end in 2012, and with historical anthropogenic and natural forcings combined (ALL, 33 models, 121 simulations). CMIP5 historical ALL simulations that end in 2005 were extended to 2012 using the corresponding Representative Concentration Pathway (RCP 4.5) simulations. All available simulations are first used to characterize model-simulated SCE changes. However, we use only the CMIP5 models for which both ALL and NAT simulations were available in our detection and attribution analysis (Table S1). This helps us avoid confounding differences in the responses to these two combinations of forcings with differences that result from using different selections of models in the two cases. We also use SWE from 17,000 years of pre-industrial control simulations obtained from 37 CMIP5 models to estimate internal climate variability. Model simulated SCF and SCE were determined as for GLDAS, using the same 5 kg/m2 SWE criterion for the presence of snow cover in a grid cell. For each simulation (ALL, NAT and control segments of equivalent duration), model simulated SCE anomalies were calculated relative to the 1971–2000 climatology of that simulation, similar to the observations.
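The anomaly calculation applied to both observations and simulations can be illustrated with a short sketch. The function name and the synthetic linearly declining series are hypothetical and serve only to show the arithmetic.

```python
def anomalies(years, values, base_start=1971, base_end=2000):
    """Subtract the mean over the base period from every value."""
    if len(years) != len(values):
        raise ValueError("years and values must have the same length")
    base = [v for y, v in zip(years, values) if base_start <= y <= base_end]
    if not base:
        raise ValueError("no data fall inside the base period")
    climatology = sum(base) / len(base)
    return [v - climatology for v in values]


# Hypothetical annual SCE series (10^6 km^2) with a steady decline.
years = list(range(1951, 2011))
sce = [30.0 - 0.05 * (year - 1951) for year in years]
sce_anom = anomalies(years, sce)
```

Computing the climatology separately for each simulation, as described above, removes differences in mean snow cover between models so that only the changes over time are compared.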

Detection and attribution analyses were performed to separately compare observed and simulated long-term March-April and May-June SCE changes for the entire Northern Hemisphere and for North America and Eurasia individually, based on non-overlapping 5-year means. We use an optimal fingerprinting approach, which is based on a generalized linear regression model (Allen and Stott 2003; Hasselmann 1997; Ribes and Terray 2013):

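\[ SC{E}_{obs} = {\beta}_1 SC{E}_{ALL} + {\beta}_2 SC{E}_{NAT} + \varepsilon \]

where \( SC{E}_{obs} \) represents the observed SCE anomalies, \( SC{E}_{ALL} \) and \( SC{E}_{NAT} \) represent the responses to ALL and NAT forcings, \( {\beta}_1 \) and \( {\beta}_2 \) are the corresponding scaling factors, and \( \varepsilon \) is the residual variability in SCE that is generated internally in the climate system.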

The response to ALL forcings (the SCE ALL fingerprint), required to fit the regression model to observations, is estimated by averaging SCE anomalies across the ALL simulations from the 8 models that performed both ALL and NAT simulations, with equal weights assigned to each ensemble member. The SCE NAT fingerprint is estimated similarly from the corresponding collection of NAT simulations. The covariance structure of the internal climate variability, which is required to fit the regression model and to quantify uncertainty in the estimated scaling factors, is estimated using the unforced control simulations. Two full-rank covariance matrix estimates are constructed using the regularization approach of Ribes et al. (2013), each using half of the available control simulations, for use in regression model fitting and uncertainty quantification. The regularized optimal fingerprinting method has been shown to produce accurate covariance matrix estimates (Ribes et al. 2013). Furthermore, because the covariance matrix estimates are of full rank and well estimated, EOF projection is not required to reduce the covariance matrix size; the method is therefore more objective, as it avoids the subjective choice of a truncation value.

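The regression model is fitted to observations using the total least squares approach to account for uncertainties in the fingerprints from internal variability that remains after multi-model averaging. That is, the fitting procedure represents \( SC{E}_{ALL} \) and \( SC{E}_{NAT} \) as

\[ SC{E}_{ALL} = SC{E}_{ALL}^{*} + {\varepsilon}_{SC{E}_{ALL}} \]

and

\[ SC{E}_{NAT} = SC{E}_{NAT}^{*} + {\varepsilon}_{SC{E}_{NAT}} \]

where \( {\varepsilon}_{SC{E}_{ALL}} \) and \( {\varepsilon}_{SC{E}_{NAT}} \) represent the remaining effects of internal variability after averaging across a finite number of forced simulations, and \( SC{E}_{ALL}^{*} \) and \( SC{E}_{NAT}^{*} \) are the expected responses that would be obtained if infinitely large ensembles of simulations were available. Scaling factors for \( SC{E}_{ANT} \) (representing the SCE response to anthropogenic forcing) and \( SC{E}_{NAT} \) are obtained by decomposing \( SC{E}_{ALL} \) into \( SC{E}_{ALL} = SC{E}_{ANT} + SC{E}_{NAT} \), and substituting as follows:

\[ SC{E}_{obs} = {\beta}_1\left( SC{E}_{ANT} + SC{E}_{NAT}\right) + {\beta}_2 SC{E}_{NAT} + \varepsilon = {\beta}_{ANT} SC{E}_{ANT} + {\beta}_{NAT} SC{E}_{NAT} + \varepsilon , \]

so that \( {\beta}_{ANT} = {\beta}_1 \) and \( {\beta}_{NAT} = {\beta}_1 + {\beta}_2 \).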

Estimation of β 1 and β 2, and thus β ANT and β NAT, involves the first covariance matrix estimate, while the determination of the scaling factor confidence intervals involves the second, independent estimate (e.g., see Allen and Stott 2003). Because scaling factors are estimated using the Total Least Squares (TLS) algorithm, confidence intervals may be asymmetric. A scaling factor whose 5 %-95 % confidence range lies entirely above zero indicates that the corresponding response signal is detected in the observations. A residual consistency test was conducted to compare model simulated internal variability with that estimated from observations (regression residuals).
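To illustrate the idea behind total least squares, the following sketch estimates a single scaling factor for a one-signal regression through the origin. It is a simplified stand-in for the regularized optimal fingerprinting procedure, not a reproduction of it: it assumes the series have already been prewhitened with the covariance estimate, it treats the fingerprint as an average of `n_members` simulations whose noise variance is therefore `1/n_members` of that in the observations, and it does not compute confidence intervals. The function name and example values are hypothetical.

```python
import math


def tls_scaling_factor(signal, obs, n_members=1):
    """One-signal total least squares estimate of beta in obs ~ beta * signal.

    Assumes both series are already prewhitened. The signal is multiplied by
    sqrt(n_members) so that its noise variance matches that of obs, and the
    estimate is rescaled afterwards.
    """
    if len(signal) != len(obs) or not signal:
        raise ValueError("signal and obs must be non-empty and of equal length")
    scale = math.sqrt(n_members)
    x = [scale * s for s in signal]
    sxx = sum(v * v for v in x)
    syy = sum(v * v for v in obs)
    sxy = sum(a * b for a, b in zip(x, obs))
    if sxy == 0.0:
        raise ValueError("signal and obs are orthogonal; beta is undefined")
    # Closed-form minimiser of the summed squared orthogonal distances
    # to a line through the origin.
    diff = syy - sxx
    beta_scaled = (diff + math.sqrt(diff * diff + 4.0 * sxy * sxy)) / (2.0 * sxy)
    return beta_scaled * scale


# Hypothetical noise-free example: observations are exactly twice the signal.
signal = [0.4, 0.2, 0.0, -0.3, -0.6]
obs = [2.0 * s for s in signal]
beta = tls_scaling_factor(signal, obs, n_members=4)
```

Unlike ordinary least squares, this estimator allows for noise in the fingerprint as well as in the observations, which is why the resulting confidence intervals can be asymmetric. A scaling factor near 2, as in this toy example, would correspond to models simulating about half of the observed change.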

3 Results

3.1 Observed and simulated changes

The declining trends in the observed annual average NH SCE are statistically significant at the 2.5 % significance level (in a 1-sided test) based on a linear model fitted using generalised least squares and considering an autoregressive process of order 1 (Table 1). The estimated decline in May-June snow cover over 1968–2012 based on NOAA observations is 0.94 × 10⁶ km² per decade, consistent with May and June trends previously reported for 1967–2012 (0.90 × 10⁶ km² and 1.31 × 10⁶ km² per decade respectively, Vaughan et al. 2013). The March-April SCE decline over the extended 1923–2012 period is estimated to be 0.32 × 10⁶ km² per decade based on the Brown dataset, with a more rapid rate of decline of ~0.8 × 10⁶ km² per decade after 1970. The GLDAS and 20CR2 reanalyses show March-April rates of decline that are comparable to those seen in the Brown dataset for the same period, but exhibit lower rates of SCE decrease in May-June compared to March-April.

Turning now to the models, more than half of the full ensemble of 121 ALL simulations show statistically significant decreases in NH SCE in March-April while more than 80 % show a significant decrease in May-June (Table 1). However, CMIP5 ALL simulations generally underestimate the magnitude of the observed March-April changes as previously reported (Brutel-Vuilmet et al. 2013; Rupp et al. 2013). This is also the case for May-June when comparing with the NOAA and Brown datasets (Derksen and Brown 2012). In contrast the ALL simulations show May-June changes that are similar to those seen in the GLDAS and 20CR2 reanalyses.

As expected, almost none of the historical natural forcing simulations show significant NH SCE negative trends during either March-April or May-June. The overall multi-model average NAT trend, based on the full ensemble of 32 runs from 8 models, is close to zero, with the largest simulated rate of May-June SCE change (−0.07 × 10⁶ km² per decade) having a 90 % confidence range of [−0.21, 0.16] × 10⁶ km² per decade. While no ALL simulation has significant increases, one of the NAT ensemble runs (corresponding to GISS-E2-H) shows a significant increasing trend. This is not unexpected in one-sided trend tests conducted at the 2.5 % significance level, as we expect false rejection to occur in such a test at the rate of 0.8 rejections per 32 tests when there is no underlying trend. The results corresponding to each GCM are shown in Table S2.
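The expected number of false rejections quoted above follows from simple arithmetic, shown in the sketch below. The probability of at least one false rejection additionally assumes that the 32 tests are independent, which is an assumption made here for illustration and is not stated in the text.

```python
alpha = 0.025   # one-sided significance level of each trend test
n_tests = 32    # number of NAT simulations tested

# Expected number of false rejections when no simulation has a true trend.
expected_false_rejections = alpha * n_tests

# Probability of at least one false rejection, assuming independent tests.
prob_at_least_one = 1.0 - (1.0 - alpha) ** n_tests

print(f"expected false rejections: {expected_false_rejections:.1f}")
print(f"P(at least one): {prob_at_least_one:.2f}")
```

Under these assumptions, a single spurious significant trend among the 32 NAT runs is more likely than not, so the one increasing trend found is unsurprising.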

The contributions from the Eurasian (EUR) and North American (NA) sectors to observed NH SCE change are also quantified in Table 1. Brown and Robinson (2011) showed that most of the NH SCE change is driven by the Eurasian sector in March, but that significant trends are evident in April in both Eurasia and North America. In addition, they found larger uncertainties in the observed Eurasian spring SCE than for North America. It should be noted that the larger contribution of the Eurasian SCE trend arises mainly because Eurasia has a larger snow-affected area than North America. For example, based on NOAA observations for 1968–2012, Eurasia contributes 60 % of the climatological March-April snow covered area in the NH while North America contributes 35 %. In May-June the Eurasian and North American sectors contribute 50 % and 40 % of the climatological NH SCE respectively. Based on Table 1, in March-April the Eurasian sector accounts for 94 %, 56 % and 40 % of the NH SCE decline in Brown (1923–2012), GLDAS (1951–2010) and 20CR2 (1948–2012), respectively. The declining trend in the North American sector SCE is statistically significant except for Brown in March-April.

Considering the model simulations, more than ~40 % of individual ALL simulations (Table 1) show significant decreases in Eurasian March-April SCE, while a slightly smaller number (more than ~30 % of simulations) do so in North America. Over ~60 % of individual ALL simulations show significant declines in May-June Eurasian as well as North American SCE. Overall, only a very small fraction of the NAT simulations show significant declining trends at continental scales.

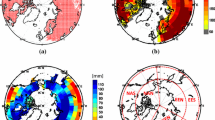

We next analyze the spatial distribution of snow cover fraction changes. To facilitate comparison, we interpolated both observational and model simulated SCF to a common 5° × 5° spatial resolution. Figure 2 shows the changes in the observed March-April and May-June average SCF (in %/decade) for NOAA (1968–2012), GLDAS (1951–2010) and 20CR2 (1948–2012). White areas are those with snow cover fraction changes that are close to zero. Overall, major SCF decline occurs in the mid-latitudes in March-April, with more intense decline apparent at higher latitudes in May-June. Based on NOAA, most areas show decreasing SCF trends. Exceptions are seen in northwest and southeast Eurasia in March-April and May-June, and in northwest and northeast North America in March-April. The declines are more marked in May-June, particularly at higher latitudes. This is consistent with previous studies (Déry and Brown 2007), except for parts of western China (Dahe et al. 2006) and northern Canada, which experienced increasing trends. GLDAS also shows overall decreasing SCF trends except in northwest and southeast Eurasia as well as northeast North America in March-April. As in NOAA, the decline is shifted towards higher latitudes in May-June, particularly in northeast Eurasia and northwest North America, although GLDAS differs in that it shows either no changes in other regions or increasing trends, especially in central north Eurasia and northeast North America. The 20CR2 reanalysis shows decreasing SCF trends except in northwest Eurasia, northwest North America, and southwest and northeast parts of China in March-April. Similarly to NOAA and GLDAS, the decline is shifted towards higher latitudes in May-June, particularly in northeast Eurasia, as well as northeast and northwest North America. As in GLDAS, 20CR2 also has a few regions with increasing SCF trends.

Fig. 2 Maps of linear trends in snow cover fraction (%/decade) in March-April and May-June based on NOAA, GLDAS and 20CR2 observations along with CMIP5 multi-model ensemble averages of ALL (121 ensemble members) and NAT (32 ensemble members) simulations corresponding to NOAA. All datasets were regridded to a common 5° × 5° latitude-longitude resolution prior to calculating trends

The 121-member multi-model ALL ensemble average exhibits declining trends over all snow-covered regions. Similarly to NOAA, the rate of decline is stronger in the mid-latitudes during March-April and at high-latitudes during May-June. However, the ensemble average generally shows smaller SCF reductions than observed. The ALL forcings response captures the observed spatial variations in trend such as for the western USA during May-June where both NOAA and ALL simulations show SCF decline. According to NOAA, SCF trends are positive over approximately 12 % and 5 % of the NH land area in March-April and May-June respectively, while the ALL simulations have positive trends over [2.2 %-7.5 %] and [2 %-9.1 %] of the NH land area (5 %-95 % range of individual simulations) respectively for March-April and May-June.

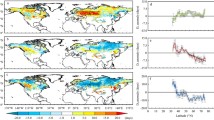

In order to increase the signal-to-noise ratio for our detection and attribution analysis, SCE anomalies are averaged across large spatial domains including the Northern Hemisphere, Eurasia and North America, and over non-overlapping 5-year intervals. Greenland is excluded from the spatial averages as it contains essentially perennial snow cover. The resulting time series of 5-year mean March-April and May-June SCE are shown in Fig. 3.
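The temporal averaging step can be sketched as below. The function name is hypothetical, and dropping an incomplete trailing block is an assumption of this sketch rather than a detail given in the text.

```python
def block_means(values, block=5):
    """Average a series over consecutive non-overlapping blocks.

    Any incomplete block at the end of the series is dropped (an assumption
    of this sketch).
    """
    if block <= 0:
        raise ValueError("block length must be positive")
    n_blocks = len(values) // block
    return [
        sum(values[i * block:(i + 1) * block]) / block
        for i in range(n_blocks)
    ]


# Hypothetical 60-year annual series (e.g. 1951-2010) gives 12 five-year means.
annual = [float(year) for year in range(1951, 2011)]
five_year = block_means(annual)
```

Averaging over 5-year blocks damps interannual variability and also shortens the series, which keeps the dimension of the covariance matrix manageable relative to the number of control-run segments available to estimate it.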

Fig. 3 Observed 5-year mean SCE anomalies (black) based on the Brown (March-April), NOAA (May-June), and 20CR2 and GLDAS (both March-April and May-June) datasets, compared with the mean simulated responses to anthropogenic and natural forcings combined (ALL, red) and natural forcings only (NAT, blue) for March-April and May-June. Red shading, blue dashed lines and green dashed lines represent the 5 %-95 % ranges of the ALL, NAT and control (CTL) simulations respectively

Consistent with the Brown dataset, the ALL simulations show no substantial SCE trend prior to the 1980s, although with a lower range of variation compared with the observed variability. The simulated rate of decline after the 1980s is lower than observed, with Eurasian SCE observations tending to fall slightly below the 5th percentile of the ALL simulated SCE anomalies during the recent period. The ALL simulations nevertheless demonstrate substantially better consistency with the Brown observations than the NAT simulations. A similar conclusion would be drawn considering the NOAA May-June satellite observations for 1968–2012.

The GLDAS reanalysis exhibits lower variability than Brown and has a declining trend from 1951 to 2010. Unlike the estimated ALL forcings signal, the multi-model estimated NAT signal does not follow the GLDAS declining trend, and thus we would again conclude that, despite observational uncertainty, the ALL simulations demonstrate substantially better consistency with observations than the NAT simulations. The 20CR2 reanalysis shows a downward trend in NH SCE from 1948 to 2012 in March-April and from the late 1960s to present in May-June. The variability in 20CR2 SCE is higher than the range of variations simulated by climate models from 1948 to the early 1970s in North America. The SCE changes vary between the Eurasian and North American sectors, with the former showing a more substantial decline in all datasets except for 20CR2.

Overall, simulated SCE changes under ALL forcing appear to be largely consistent with observed changes in March-April from the 1970s onwards, with a tendency for observations to show larger SCE anomalies than simulated by the models prior to the 1970s, suggesting that models under-simulate climatological March-April SCE prior to the most recent period of rapid warming that began in the mid-1970s. Long-term consistency between models and observations is more difficult to judge for late spring (May-June) SCE because the longest available datasets begin in 1948 or later, and because observational uncertainty is greater at this time of year (Brown et al. 2010).

3.2 Detection and attribution analysis

The observed trends are regressed onto the simulated responses to ALL and NAT forcing to determine whether the effects of anthropogenic forcings (ANT), natural forcings (NAT) as well as anthropogenic and natural forcing signals combined (ALL) are reflected in the observations. For this analysis, forced responses are estimated using 32 CMIP5 simulations from the 8 models (Table S1) that performed both ALL and NAT experiments (as shown in Figures S1-S2).

We first consider whether the response to ALL forcings is detectable in observations by means of a one-signal regression analysis. The resulting regression scaling factor estimates for March-April and May-June based on the Brown, NOAA, GLDAS and 20CR2 datasets are displayed in Fig. 4a-b. Best estimates are shown as triangles and 90 % confidence intervals are shown as bars. An asterisk “*” below a bar indicates that the residual consistency test is not passed and that the model simulated variability is too low compared to the residual variability from observations. Similarly, a “#” sign shows that the test has failed and that the model simulated variability is too high. Underestimation of internal variability by the models is a particular concern because it implies that scaling factor uncertainty could be underestimated, possibly leading to overconfident detection and attribution. The response to ALL is detected in all cases for both March-April and May-June. However, consistent with Fig. 3 and the discussion above, CMIP5 simulations significantly underestimate the long-term changes in Eurasian and NH SCE compared to the Brown observations, with scaling factors of ~2. Rupp et al. (2013) also found similar degrees of underestimation by GCMs in their analysis of both NH absolute and relative SCE anomalies during 1922–2005. There is also evidence that models simulate lower internal SCE variability in these two domains compared to the 90-year Brown dataset. Similar findings are obtained for May-June based on the shorter NOAA record. In contrast, the model-simulated ALL forcing signal is consistent with the changes in the GLDAS data for March-April, as indicated by scaling factors that are consistent with unity, and exhibits somewhat larger May-June changes than GLDAS, as indicated by scaling factors that are somewhat less than unity. Internal variability appears to be well represented in both cases.
Results based on 20CR2 are similar to GLDAS except for North America where scaling factors of ~4 and ~2 are found in March-April and May-June respectively. This is not unexpected, particularly for March-April, given the apparent inhomogeneity that is present in the 20CR2 SCE anomaly time series for North America prior to ~1970 (Fig. 3). These results are consistent with previous studies that compared observed SCE trends in March-April and June with the ensemble of CMIP5 simulations (Derksen and Brown 2012; Rupp et al. 2013).

Fig. 4 Scaling factors and their corresponding 5 %-95 % confidence intervals for (a) anthropogenic and natural forcings combined (ALL) in a 1-signal analysis during March-April, (b) ALL during May-June, and (c) anthropogenic forcings (ANT) and natural forcings (NAT) in a 2-signal analysis based on GLDAS; signals are based on multi-model ensemble averages of 32 simulations from eight models

Given the consistency of the simulated ALL response with observed changes in the GLDAS dataset, a further two-signal analysis was performed to attempt to separate the contributions from natural and anthropogenic forcings to the GLDAS SCE changes (Fig. 4c). The response to ANT is detected in the GLDAS March-April SCE changes, albeit with large uncertainty and scaling factors that are significantly greater than unity, suggesting that the models underestimate the observed changes both on the continental scale and for the NH as a whole. The response to ANT forcing is also detected in the May-June GLDAS data in all areas (NH, EUR and NA) with scaling factors that are modestly less than unity. NAT is not detectable in any of the three areas. Repeating the analysis using the full ALL ensemble provides similar results, as might be expected since uncertainty in the analysis is dominated by the weak NAT signal (Ribes et al. 2015).

As mentioned previously, we used control simulations from 37 models (~17,000 years in total) in order to reduce uncertainty in the estimates of the covariance matrix. As a sensitivity test, we repeated the analysis of NH SCE changes using covariance matrices estimated from ~4600 years of pre-industrial control simulations obtained from the same eight models that provided the ALL and NAT simulations (see Table S1). Results are robust to the choice of control simulations (Fig. S3).
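As a rough illustration of how control simulations feed into a covariance estimate, the sketch below cuts a long control series into non-overlapping segments and computes their sample covariance. The segment length, the absence of any regularisation, and the function names are assumptions of this sketch; the section does not specify the procedure actually used.

```python
def split_into_segments(series, length):
    """Cut a control series into non-overlapping segments of equal length,
    dropping any incomplete segment at the end."""
    if length <= 0:
        raise ValueError("segment length must be positive")
    count = len(series) // length
    return [series[i * length:(i + 1) * length] for i in range(count)]


def sample_covariance(segments):
    """Unbiased sample covariance matrix across segments (list of lists)."""
    k = len(segments)
    if k < 2:
        raise ValueError("need at least two segments")
    m = len(segments[0])
    means = [sum(seg[j] for seg in segments) / k for j in range(m)]
    return [
        [
            sum((seg[i] - means[i]) * (seg[j] - means[j]) for seg in segments)
            / (k - 1)
            for j in range(m)
        ]
        for i in range(m)
    ]


# Toy "control run" of 12 values, cut into segments of length 3.
control = [float(v) for v in range(12)]
segments = split_into_segments(control, 3)
cov = sample_covariance(segments)
print(len(segments), len(cov), len(cov[0]))
```

More control years yield more segments and therefore a less noisy covariance estimate, which is the motivation for pooling control runs from many models and for checking the sensitivity of the results to that choice.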

4 Discussion and conclusions

A formal detection and attribution analysis of SCE changes was performed using several different observational datasets: Brown from 1923 to 2012, NOAA for 1968–2012, GLDAS-2 Noah for 1951–2010, and 20CR2 covering 1948–2012. We analysed observed early spring (March-April) and late spring (May-June) NH SCE changes in these datasets using climate simulations of the responses to anthropogenic and natural forcings combined (ALL) and to natural forcings alone (NAT) from CMIP5. Using a one-signal analysis based on the ALL-forcing response, we showed that, while there are substantial differences between observational datasets, all exhibit changes that are inconsistent with internal variability. A two-signal analysis of the GLDAS data was able to separately detect the influence of the anthropogenic component of the observed SCE changes, but was not able to reliably detect the effects of natural forcing.

On balance, these results, together with the understanding of the causes of observed warming over the past century (Bindoff et al. 2013; Hegerl et al. 2007b; Najafi et al. 2015), provide substantial evidence of a human contribution to the observed decline in Northern Hemisphere spring snow cover extent. Nevertheless, quantification of that contribution remains difficult due to considerable observational and modelling uncertainty. The fact that the NH SCE changes recorded in the NOAA and Brown datasets are much larger than those in long reanalyses that provide SWE data reduces the confidence with which it is possible to quantify the change in NH SCE that is due to human influence on the climate system.

Two of the datasets (i.e. GLDAS and 20CR2) use SCE inferred from SWE analyses that are observationally constrained by other climate elements. Those datasets are thus limited by the fidelity of the land surface models that are used in their production, amongst other factors. Land surface modelling limitations would also affect the simulation of SCE in CMIP5 models, whose performance would be further affected by biases in the mean state and variability of their climates (Flato et al. 2013). Rupp et al. (2013) found a positive association between the scaling factors and climatological SCE biases in CMIP5 models. They also found that approximately 30 % of the scaling factor variance in ALL simulations, when considering SCE response signals from different models, is associated with these biases. Climate biases could affect SCE variability and the strength of simulated SCE changes. In particular, CMIP5 models tend to be biased cold in winter in the Northern Hemisphere (Flato et al. 2013). This would suggest the possibility of reduced sensitivity of SCE to warming in the models, or spatially displaced sensitivity, since one would expect the strongest SCE response to warming to occur in places where the mean climate warms past the 0 °C threshold over the periods studied in the March-April or May-June “seasons”. Despite this, our assessment is that the largest impediment to a more robust attribution assessment is the large observational uncertainty in SCE reflected in large differences between datasets. A systematic attempt to validate these datasets and identify those with the most realistic long-term SCE changes would allow stronger conclusions to be drawn.

References

Allen M, Stott P (2003) Estimating signal amplitudes in optimal fingerprinting, part I: theory. Clim Dyn 21:477–491

Bindoff N, Stott P, AchutaRao K, Allen M, Gillett N, Gutzler D, Hansingo K, Hegerl G, Hu Y, Jain S (2013) Detection and attribution of climate change: from global to regional. Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change:12

Brown R, Derksen C (2013) Is Eurasian October snow cover extent increasing? Environ Res Lett 8:024006

Brown R, Robinson D (2011) Northern Hemisphere spring snow cover variability and change over 1922–2010 including an assessment of uncertainty. Cryosphere 5:219–229

Brown R, Derksen C, Wang L (2010) A multi-data set analysis of variability and change in Arctic spring snow cover extent, 1967–2008. J Geophys Res Atmos 115

Brutel-Vuilmet C, Ménégoz M, Krinner G (2013) An analysis of present and future seasonal Northern Hemisphere land snow cover simulated by CMIP5 coupled climate models. Cryosphere 7:67–80

Callaghan TV, Johansson M, Brown RD, Groisman PY, Labba N, Radionov V, Barry RG, Bulygina ON, Essery RL, Frolov D (2011) The changing face of Arctic snow cover: A synthesis of observed and projected changes. Ambio 40:17–31

Compo GP, Whitaker JS, Sardeshmukh PD, Matsui N, Allan RJ, Yin X, Gleason BE, Vose R, Rutledge G, Bessemoulin P (2011) The twentieth century reanalysis project. Q J R Meteorol Soc 137:1–28

Dahe Q, Shiyin L, Peiji L (2006) Snow cover distribution, variability, and response to climate change in Western China. J Clim 19:1820–1833

Dee D, Uppala S, Simmons A, Berrisford P, Poli P, Kobayashi S, Andrae U, Balmaseda M, Balsamo G, Bauer P (2011) The ERA-interim reanalysis: configuration and performance of the data assimilation system. Q J R Meteorol Soc 137:553–597

Derksen C, Brown R (2012) Spring snow cover extent reductions in the 2008–2012 period exceeding climate model projections. Geophys Res Lett 39

Déry SJ, Brown RD (2007) Recent Northern Hemisphere snow cover extent trends and implications for the snow-albedo feedback. Geophys Res Lett 34

Dye DG (2002) Variability and trends in the annual snow-cover cycle in Northern Hemisphere land areas, 1972–2000. Hydrol Process 16:3065–3077

Flanner MG, Zender CS, Hess P, Mahowald NM, Painter TH, Ramanathan V, Rasch P (2009) Springtime warming and reduced snow cover from carbonaceous particles. Atmos Chem Phys 9:2481–2497

Flato G, Marotzke J, Abiodun B, Braconnot P, Chou S, Collins W, Cox P, Driouech F, Emori S, Eyring V (2013) Evaluation of climate models. Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, pp. 741–866

Hasselmann K (1997) Multi-pattern fingerprint method for detection and attribution of climate change. Clim Dyn 13:601–611

Hegerl G, Zwiers F, Braconnot P, Gillett N, Luo Y, Marengo J, Nicholls N, Penner J, Stott P (2007a) Understanding and attributing climate change. In: Climate Change 2007: The Physical Science Basis. Contribution of Working Group I to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change [Solomon, S., D. Qin, M. Manning, Z. Chen, M. Marquis, K. B. Averyt, M. Tignor and H. L. Miller (eds.)]. Cambridge University Press, Cambridge, United Kingdom and New York, NY, USA

Hegerl GC, Crowley TJ, Allen M, Hyde WT, Pollack HN, Smerdon J, Zorita E (2007b) Detection of human influence on a new, validated 1500-year temperature reconstruction. J Clim 20:650–666

Mudryk L, Derksen C, Kushner P, Brown R (2015) Characterization of Northern Hemisphere snow water equivalent datasets, 1981–2010. J Clim

Najafi MR, Moradkhani H (2015) Multi-model ensemble analysis of runoff extremes for climate change impact assessments. J Hydrol 525:352–361

Najafi MR, Zwiers FW, Gillett N (2015) Attribution of Arctic temperature change to greenhouse gas and aerosol influences. Nat Clim Chang 5:246–249

Peings Y, Brun E, Mauvais V, Douville H (2013) How stationary is the relationship between Siberian snow and Arctic oscillation over the 20th century? Geophys Res Lett 40:183–188

Pierce DW, Barnett TP, Hidalgo HG, Das T, Bonfils C, Santer BD, Bala G, Dettinger MD, Cayan DR, Mirin A (2008) Attribution of declining Western US snowpack to human effects. J Clim 21:6425–6444

Ramsay BH (1998) The interactive multisensor snow and ice mapping system. Hydrol Process 12:1537–1546

Ribes A, Terray L (2013) Application of regularised optimal fingerprinting to attribution. part II: application to global near-surface temperature. Clim Dyn 41:2837–2853

Ribes A, Planton S, Terray L (2013) Application of regularised optimal fingerprinting to attribution. part I: method, properties and idealised analysis. Clim Dyn 41:2817–2836

Rienecker MM, Suarez MJ, Gelaro R, Todling R, Bacmeister J, Liu E, Bosilovich MG, Schubert SD, Takacs L, Kim G-K (2011) MERRA: NASA's modern-era retrospective analysis for research and applications. J Clim 24:3624–3648

Robinson D, Frei A (2000) Seasonal variability of Northern Hemisphere snow extent using visible satellite data. Prof Geogr 52:307–315

Robinson DA, Dewey KF, Heim RR Jr (1993) Global snow cover monitoring: An update. Bull Am Meteorol Soc 74:1689–1696

Robinson DA, Estilow TW, NOAA CDR Program (2012) NOAA climate data record (CDR) of Northern Hemisphere (NH) snow cover extent (SCE), version 1. NOAA National Climatic Data Center

Rodell M, Houser P, Jambor U, Gottschalck J, Mitchell K, Meng C, Arsenault K, Cosgrove B, Radakovich J, Bosilovich M (2004) The global land data assimilation system. Bull Am Meteorol Soc 85:381–394

Rupp DE, Mote PW, Bindoff NL, Stott PA, Robinson DA (2013) Detection and attribution of observed changes in Northern Hemisphere spring snow cover. J Clim 26:6904–6914

Sheffield J, Goteti G, Wood EF (2006) Development of a 50-year high-resolution global dataset of meteorological forcings for land surface modeling. J Clim 19:3088–3111

Taylor KE, Stouffer RJ, Meehl GA (2012) An overview of CMIP5 and the experiment design. Bull Am Meteorol Soc 93:485–498

Vaughan DG, Comiso JC, Allison I, Carrasco J, Kaser G, Kwok R, Mote P, Murray T, Paul F, Ren J (2013) Observations: cryosphere. Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change [Stocker, T.F., D. Qin, G.-K. Plattner, M. Tignor, S.K. Allen, J. Boschung, A. Nauels, Y. Xia, V. Bex and P.M. Midgley (eds.)]. Cambridge University Press, Cambridge

Wang L, Sharp M, Brown R, Derksen C, Rivard B (2005) Evaluation of spring snow covered area depletion in the Canadian Arctic from NOAA snow charts. Remote Sens Environ 95:453–463

Yang D, Robinson D, Zhao Y, Estilow T, Ye B (2003) Streamflow response to seasonal snow cover extent changes in large Siberian watersheds. J Geophys Res Atmos 108

Acknowledgments

We acknowledge the Program for Climate Model Diagnosis and Intercomparison and the World Climate Research Programme’s Working Group on Coupled Modelling for their roles in making the WCRP CMIP5 multi-model datasets available. This work is supported by the NSERC Canadian Sea Ice and Snow Evolution (CanSISE) Network. We also thank Ross Brown for providing the observed SCE datasets and for his helpful comments.

Electronic supplementary material

Supplemental Figure 1

Supplemental Figure 2

Supplemental Figure 3

Supplementary Table 1

Supplementary Table 2

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Cite this article

Najafi, M.R., Zwiers, F.W. & Gillett, N.P. Attribution of the spring snow cover extent decline in the Northern Hemisphere, Eurasia and North America to anthropogenic influence. Climatic Change 136, 571–586 (2016). https://doi.org/10.1007/s10584-016-1632-2
