Abstract

We consider parameter estimation for the drift and diffusion coefficients of multidimensional stochastic delay equations with small noise. The delay structure is written in integral form with respect to a delay measure. Our contrast function is based on a local-Gauss approximation to the transition probability density of the process. We show consistency and asymptotic normality of the minimum-contrast estimator when the small dispersion coefficient \(\varepsilon \rightarrow 0\) and the sample size \(n\rightarrow \infty \) simultaneously.

1 Introduction

Let \((\Omega ,{\mathscr {F}},\{{\mathscr {F}}_t\},P)\) be a stochastic basis satisfying the usual conditions. We consider a family of d-dimensional stochastic functional differential equations (SFDEs): for \(\varepsilon \in (0,1]\) and \(\delta >0\),

where \(\theta _0=(\alpha _0,\beta _0)\in \mathring{\Theta }\) with \(\Theta =\overline{\Theta }_{\alpha }\times \overline{\Theta }_{\beta }\) for open bounded convex subsets \(\Theta _{\alpha }\) and \(\Theta _{\beta }\) of \({\mathbb {R}}^p \) and \({\mathbb {R}}^q\), respectively; \(b=(b_1,\dots ,b_d):{\mathbb {R}}^d\times {\mathbb {R}}^d\times \Theta \rightarrow {\mathbb {R}}^d\) and \(\sigma =(\sigma _{ij})_{d\times r}:{\mathbb {R}}^d\times {\mathbb {R}}^d\times \overline{\Theta }_{\beta }\rightarrow {\mathbb {R}}^{d}\otimes {\mathbb {R}}^r\) are known functions; \(W_t=(W_t^1, \dots , W_t^r)\) is an r-dimensional Wiener process. Moreover, \(\phi ^\varepsilon (t)\) is an \({\mathscr {F}}_0\) measurable \({\mathbb {R}}^d\)-valued random variable for each \(t \in [-\delta ,0]\) and \(\varepsilon \in (0,1]\). Letting C(A; B) be the space of continuous functions from A to B, we also define a functional \(H:C([0,\delta ];{\mathbb {R}}^d)\rightarrow {\mathbb {R}}^d\) as follows: for a continuous function \(F_{t-\cdot }: u\mapsto F_{t-u}\) in \(u\in [0,\delta ]\),

where \(\mu \) is a finite measure on \([0,\delta ]\). We call Eq. (1.1) a stochastic functional differential equation (SFDE) since the functional H provides a delay structure in the SDE. In particular, when the measure \(\mu \) is a Dirac measure, the equation is simply called a stochastic delay differential equation (SDDE).
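For reference, a form of Eq. (1.1) and of the functional H that is consistent with the notation (N1) and (N7) of Sect. 2 and with the integrals appearing in the proof of Lemma 3 can be sketched as follows. This is a reconstruction under those assumptions, not a verbatim statement of the model:

$$\begin{aligned} \left\{ \begin{array}{ll} \textrm{d}X_t^\varepsilon = b\left( X_t^\varepsilon ,H(X_{t-\cdot }^\varepsilon ),\theta _0\right) \,\textrm{d}t+\varepsilon \,\sigma \left( X_t^\varepsilon ,H(X_{t-\cdot }^\varepsilon ),\beta _0\right) \,\textrm{d}W_t, &{} t\in [0,1];\\ X_t^\varepsilon = \phi ^\varepsilon (t), &{} t\in [-\delta ,0], \end{array} \right. \qquad H(F_{t-\cdot })=\int _0^\delta F_{t-s}\,\mu (\textrm{d}s). \end{aligned}$$

With \(\mu \) the Dirac measure at \(\delta \), this sketch gives \(H(F_{t-\cdot })=F_{t-\delta }\), which matches the point-delay example of Sect. 4.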

We assume that the process \(\{X_t^\varepsilon \}\) is observed at regularly spaced time points \(\{t_k=k/n\,|\,k=0,\dots ,n\}\cup \{-i/n\,|\,i=1,\dots ,\lfloor n\delta \rfloor \}\) with the floor function \(\lfloor \cdot \rfloor \). Our goal is to construct an estimator of \(\theta _0\) from discrete observations \(\{X_{t_k}^\varepsilon \}\cup \{X_{-i/n}^\varepsilon \}\), and to investigate the asymptotic behavior when \(\varepsilon \rightarrow 0\) as well as \(n\rightarrow \infty \).
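The observation scheme above can be sketched in a few lines of Python. The function name `observation_times` is hypothetical, and \(\delta \) is passed as an exact fraction (an implementation choice made here so that \(\lfloor n\delta \rfloor \) is not affected by floating-point rounding).

```python
from fractions import Fraction
from math import floor


def observation_times(n, delta):
    """Return the sorted observation times {-i/n : i = 1..floor(n*delta)} and {k/n : k = 0..n}.

    `delta` should be a Fraction so that floor(n * delta) is computed exactly.
    """
    lag = floor(n * delta)  # number of observed points in the pre-history [-delta, 0)
    history = [Fraction(-i, n) for i in range(lag, 0, -1)]
    forward = [Fraction(k, n) for k in range(n + 1)]
    return history + forward


times = observation_times(10, Fraction(1, 10))
print(len(times), times[0], times[-1])
```

When \(n\delta \) is not an integer, the earliest observed time is \(-\lfloor n\delta \rfloor /n\), which is slightly later than \(-\delta \).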

There are many applications of (deterministic) delay differential equations (DDEs) in biology, epidemiology, physics, finance and insurance. For example, Mackey and Glass (1977) consider a homogeneous population of mature circulating cells (white blood cells, red blood cells, or platelets), and Hu and Wang (2002) study the dynamics of controlled mechanical systems, among others. Accordingly, the stochastic versions (SDDEs) of those differential equations have also been well investigated. Volterra (1959) considers predator–prey models; Guttorp and Kulperger (1984) take the effect of random elements into account and change Volterra’s model from DDEs to SDDEs; Fu (2019) considers a stochastic SIR model with delay for an epidemic; and De la Sen and Ibeas (2021) consider an SE(Is)(Ih)AR model for COVID-19. From a theoretical point of view, Mohammed (1984) and Arriojas (1997) have generalized those models to SFDEs. Furthermore, the delay structure can appear in finance and insurance applications, e.g., Wang (2023) and the references therein, where the drift coefficient can include the diffusion parameter \(\beta \); see, e.g., Example 1 in Wang (2023). Therefore our general setting in SFDE (1.1), where the drift parameter \(\theta \) includes \(\beta \), makes sense in applications.

Turning to statistical inference for SDDEs and SFDEs, there have been many works so far. Gushchin and Küchler (1999) and Küchler and Kutoyants (2000) study the asymptotic behavior of maximum likelihood type estimators; Küchler and Sørensen (2013) consider the pseudo-likelihood estimator for SDDEs; Küchler and Vasiliev (2005) propose a sequential procedure with a given accuracy in the \(L_2\) sense. Moreover, Reiss (2004, 2005) investigates nonparametric inference for affine SDDEs; Ren and Wu (2019) consider least squares estimates for path-dependent McKean-Vlasov SDEs from discrete observations.

Although all of those works are set in the ergodic context, we are interested in the small noise case, \(\varepsilon \rightarrow 0\), which is useful for justifying the validity of the estimators since, in most applications of SFDEs, ergodicity often cannot be expected. There are several pioneering works by Kutoyants (1988, 1994) on small noise SFDEs under continuous observations, and the statistical problem should be treated separately according to the type of parameter. For example, the parameters \(\theta \) in (1.1) are usually regular, but if the delay functional H includes unknown parameters, which are non-regular, the estimation problem becomes non-standard; see Kutoyants (1988). Therefore, we consider the former case only and suppose that the functional H is known in this paper.

In this paper, we consider a local-Gauss type contrast function and show the asymptotic normality of the minimum contrast estimators.

The paper is organized as follows. In Sect. 2, we introduce notation and assumptions, and in Sect. 3 we state our main results. In Sect. 4, we provide some numerical studies to support our results. All the mathematical proofs are given in Sect. 5.

2 Notation and assumptions

2.1 Notation

(N1) \(X_t^0\) is the solution of the ordinary differential equations under the true value of the drift parameter for \(t\in [0,1]\): \(X_0^0=\phi (0)=x_0\) and

$$\begin{aligned} \left\{ \begin{array}{ll} \textrm{d}X_t^0 = b\left( X_t^0,H(X_{t-\cdot }^0),\theta _0\right) \,\textrm{d}t, &{} t\in [0,1];\\ X_t^0 = \phi (t), &{} t\in [-\delta ,0), \end{array} \right. \end{aligned}$$where \(\phi \in C([-\delta , 0];{\mathbb {R}}^d)\) and \(x_0\) is constant. As for the existence and uniqueness of the solution, see the proof of Theorem 3.7 and Remark 3.8 by Smith (2011).

(N2) For a matrix A, the (i, j)th element is denoted by \(A^{ij}\), and

$$\begin{aligned} |A|^2=\textrm{tr}\left( AA^\top \right) , \end{aligned}$$where \(A^\top \) is the transpose of A and \(\textrm{tr}\left( AA^\top \right) \) is the trace of \(AA^\top \).

(N3) For a multi-index \(m=(m_1,\dots ,m_k)\), a derivative operator in \(z\in {\mathbb {R}}^k\) is given by

$$\begin{aligned} \partial _z^m:=\partial _{z_1}^{m_1}\cdots \partial _{z_k}^{m_k},\qquad \partial _{z_i}^{m_i}:=\left( \partial /\partial z_i\right) ^{m_i}. \end{aligned}$$

(N4) Let \(C^{j,k,l}({\mathbb {R}}^d\times {\mathbb {R}}^d\times \Theta ;{\mathbb {R}}^N)\) be the space of all functions f such that \(f(x,y,\theta )\) is an \({\mathbb {R}}^N\)-valued function on \({\mathbb {R}}^d\times {\mathbb {R}}^d\times \Theta \) which is j, k and l times continuously differentiable with respect to x, y and \(\theta \), respectively.

(N5) \(C_{\uparrow }^{j,k,l}({\mathbb {R}}^d\times {\mathbb {R}}^d\times \Theta ;{\mathbb {R}}^N)\) is the class of functions \(f\in C^{j,k,l}({\mathbb {R}}^d\times {\mathbb {R}}^d\times \Theta ;{\mathbb {R}}^N)\) satisfying

$$\begin{aligned} \sup _{\theta \in \Theta }|\partial ^\mu _\theta \partial ^\nu _y\partial ^\xi _x f(x,y,\theta )|\le C(1+|x|+|y|)^\lambda , \end{aligned}$$for universal positive constants C and \(\lambda \), where for \(M=\textrm{dim}(\Theta )\), \(\mu =(\mu _1,\dots ,\mu _M)\), \(\nu =(\nu _1,\dots ,\nu _d)\) and \(\xi =(\xi _1,\dots ,\xi _d)\) are multi-indices with \(0\le \sum _{i=1}^M\mu _i\le l\), \(0\le \sum _{i=1}^d\nu _i\le k\) and \(0\le \sum _{i=1}^d\xi _i\le j\), respectively.

(N6) Denote by \(G({{\gamma }})\) the set of all permutations on \(\{1,\dots ,{{\gamma }}\}\).

(N7) For elements \(\left\{ b^i\right\} \) and \(\left\{ [\sigma \sigma ^\top ]^{ij}\right\} \), we write

$$\begin{aligned} b^i_{t,H}(\theta ):=b^i\left( X_t^\varepsilon ,H\left( X_{t-\cdot }^\varepsilon \right) ,\theta \right) ,\quad [\sigma \sigma ^\top ]_{t,H}^{ij}(\beta ):=[\sigma \sigma ^\top ]^{ij}\left( X_t^\varepsilon ,H\left( X_{t-\cdot }^\varepsilon \right) ,\beta \right) . \end{aligned}$$

(N8) Denote by \(\Delta _kX^\varepsilon := X_{t_k}^\varepsilon -X_{t_{k-1}}^\varepsilon \) and

$$\begin{aligned} B\left( X_t^0, \theta _0, \theta \right) := b\left( X_t^0,H\left( X_{t-\cdot }^0\right) ,\theta _0\right) -b\left( X_t^0,H\left( X_{t-\cdot }^0\right) ,\theta \right) . \end{aligned}$$

2.2 Assumptions

We make the following assumptions:

(A1) There exists a constant \(K>0\) such that

$$\begin{aligned} |b(x,y,\theta )-b({\tilde{x}},{\tilde{y}},\theta )|+|\sigma (x,y,{\beta })-\sigma ({\tilde{x}},{\tilde{y}},{\beta })|&\le K\left( |x-{\tilde{x}}|+|y-{\tilde{y}}|\right) ,\\ |b(x,y,\theta )|+|\sigma (x,y,{\beta })|&\le K\left( 1+|x|+|y|\right) , \end{aligned}$$for each \(x,{\tilde{x}},y,{\tilde{y}}\in {\mathbb {R}}^d\), \(\theta \in \Theta \) and \(\beta \in \overline{\Theta }_{\beta }\).

(A2) For any \({{\gamma }}\ge 1\),

$$\begin{aligned} \sup _{\varepsilon \in (0,1]}E\left[ \sup _{t\in [-\delta ,0]}|\phi ^\varepsilon (t)|^{{\gamma }} \right] <\infty , \end{aligned}$$and there exist constants \(K_1,K_2>0\) such that

$$\begin{aligned} E\left[ |\phi ^\varepsilon (t)-\phi ^\varepsilon (s)|^{{\gamma }} \right] \le K_1|t-s|^{{\gamma }} + K_2\varepsilon ^{{\gamma }} |t-s|^{{{\gamma }}/2}. \end{aligned}$$Moreover, as \(\varepsilon \rightarrow 0\),

$$\begin{aligned} E\left[ \sup _{-\delta \le t\le 0}|\phi ^{\varepsilon }(t)-\phi (t)|^{{\gamma }} \right] =O(\varepsilon ^{{\gamma }} ). \end{aligned}$$

(A3) \(b\left( \cdot ,\cdot ,\cdot \right) \in C_{\uparrow }^{2,1,3}\left( {\mathbb {R}}^d\times {\mathbb {R}}^d\times \Theta ;{\mathbb {R}}^d\right) \), \(\sigma (\cdot ,\cdot ,\cdot )\in C_{\uparrow }^{2,1,3}\left( {\mathbb {R}}^d\times {\mathbb {R}}^d\times \overline{\Theta }_{\beta };{\mathbb {R}}^d\otimes {\mathbb {R}}^r\right) \).

(A4) The matrix \([\sigma \sigma ^\top ]\left( x,y,\beta \right) \) is positive definite for each \(x,y\in {\mathbb {R}}^d\) and \(\beta \in \overline{\Theta }_{\beta }\), and

$$\begin{aligned} \inf _{x,y\in {\mathbb {R}}^d,\beta \in \overline{\Theta }_{\beta }}\det [\sigma \sigma ^\top ]\left( x,y,\beta \right) >0. \end{aligned}$$Moreover, \([\sigma \sigma ^\top ]^{-1}(\cdot ,\cdot ,\cdot )\in C_{\uparrow }^{1,1,3}\left( {\mathbb {R}}^d\times {\mathbb {R}}^d\times \overline{\Theta }_{\beta };{\mathbb {R}}^d\otimes {\mathbb {R}}^d\right) \).

(A5) If \(\theta \ne \theta _0\) then \(b(X_t^0,H(X_{t-\cdot }^0),\theta )\ne b(X_t^0,H(X_{t-\cdot }^0),\theta _0)\); if \(\beta \ne \beta _0\) then \([\sigma \sigma ^\top ](X_t^0,H(X_{t-\cdot }^0),\beta )\ne [\sigma \sigma ^\top ](X_t^0,H(X_{t-\cdot }^0),\beta _0)\), for at least one value of t, respectively.

(A6) The matrix

$$\begin{aligned} I(\theta _0)= \left( \begin{array}{cc} \left( I_b^{ij}(\theta _0)\right) _{1\le i,j\le p} &{} 0\\ 0 &{} \left( I_\sigma ^{ij}(\theta _0)\right) _{1\le i,j\le q}\\ \end{array} \right) , \end{aligned}$$is positive definite, where

$$\begin{aligned} I_b^{ij}(\theta _0)&=\int _0^1\bigg (\frac{\partial }{\partial \alpha _i}b\left( X_{s}^0,H\left( X_{s-\cdot }^0\right) ,\theta _0\right) \bigg )^{\top }[\sigma \sigma ^\top ]^{-1}\left( X_s^0,H\left( X_{s-\cdot }^0\right) ,\beta _0\right) \\&\quad \bigg (\frac{\partial }{\partial \alpha _j}b\left( X_{s}^0,H\left( X_{s-\cdot }^0\right) ,\theta _0\right) \bigg )\,\textrm{d}s,\\ I_\sigma ^{ij}(\theta _0)&=\frac{1}{2}\int _0^1\textrm{tr}\Bigg [\bigg (\frac{\partial }{\partial \beta _i}[\sigma \sigma ^{\top }]\bigg )[\sigma \sigma ^\top ]^{-1}\bigg (\frac{\partial }{\partial \beta _j} [\sigma \sigma ^\top ]\bigg )[\sigma \sigma ^\top ]^{-1}\left( X_s^0,H\left( X_{s-\cdot }^0\right) ,\beta _0\right) \Bigg ]\,\textrm{d}s. \end{aligned}$$
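As a quick check on the normalisation of \(I_\sigma \), consider the scalar case \(d=r=q=1\) with a constant diffusion coefficient \(\sigma (x,y,\beta )=\beta \) (an illustrative assumption, not the model of Sect. 4). Then \([\sigma \sigma ^\top ]=\beta ^2\) and \(\partial _\beta [\sigma \sigma ^\top ]=2\beta \), so that

$$\begin{aligned} I_\sigma (\theta _0)=\frac{1}{2}\int _0^1 (2\beta _0)\,\beta _0^{-2}\,(2\beta _0)\,\beta _0^{-2}\,\textrm{d}s=\frac{2}{\beta _0^2}. \end{aligned}$$

This coincides with the Fisher information for the standard deviation of a centred Gaussian observation, and it does not depend on the limiting path \(X^0\) because the diffusion coefficient is constant in this example.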

Remark 1

Although the assumption (A4) seems a bit restrictive, it is the same assumption as [A3’] in Gloter and Sørensen (2009).

3 Main theorems

For estimation of \(\theta \in \Theta \) in (1.1), we consider the following local-Gauss type contrast function:

where

and \(\delta _n:= \lfloor n\delta \rfloor /n\).
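To make the structure of such a contrast concrete, the sketch below evaluates a local-Gauss type contrast for a scalar process with a point delay. It assumes the usual small-noise form \(\sum _{k}\{\log [\sigma \sigma ^\top ]_{k-1}(\beta )+\varepsilon ^{-2}n\,P_k(\theta )^2/[\sigma \sigma ^\top ]_{k-1}(\beta )\}\) with \(P_k(\theta )=\Delta _kX^\varepsilon -n^{-1}b(X_{t_{k-1}}^\varepsilon ,X_{t_{k-1}-\delta _n}^\varepsilon ,\theta )\); this form, the function name `contrast`, and the argument layout are assumptions made for illustration.

```python
import numpy as np


def contrast(path, lag, n, eps, drift, diff_sq, alpha, beta):
    """Local-Gauss type contrast for a scalar path with a point delay (illustrative sketch).

    path    : observations X_{-lag/n}, ..., X_0, ..., X_1 (length lag + n + 1)
    lag     : floor(n * delta), the number of pre-history steps
    drift   : hypothetical drift b(x, y, alpha, beta)
    diff_sq : hypothetical squared diffusion coefficient (x, y, beta) -> sigma^2
    """
    x_prev = path[lag:-1]        # X_{t_{k-1}}, k = 1..n
    y_prev = path[:n]            # delayed values X_{t_{k-1} - delta_n}
    dx = np.diff(path[lag:])     # increments Delta_k X
    p = dx - drift(x_prev, y_prev, alpha, beta) / n
    v = diff_sq(x_prev, y_prev, beta)
    return float(np.sum(np.log(v) + n * p**2 / (eps**2 * v)))
```

A minimum contrast estimate can then be obtained by passing this function to any numerical optimiser over the parameter set; the choice of optimiser is left open here.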

Definition 1

A minimum contrast estimator \(\widehat{\theta }_{n,\varepsilon }=(\widehat{\alpha }_{n,\varepsilon },\widehat{\beta }_{n,\varepsilon })\) is defined as

The consistency of our estimator \(\widehat{\theta }_{n,\varepsilon }\) is given as follows.

Theorem 1

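Suppose that assumptions (A1)–(A5) hold. Then we have

$$\begin{aligned} \widehat{\theta }_{n,\varepsilon }\xrightarrow {P}\theta _0 \end{aligned}$$

if \((\sqrt{n}\varepsilon )^{-1}\rightarrow 0\) as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \).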

The next theorem gives the asymptotic normal distribution of \(\widehat{\theta }_{n,\varepsilon }\).

Theorem 2

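Suppose that assumptions (A1)–(A6) hold. Then we have

$$\begin{aligned} \left( \varepsilon ^{-1}(\widehat{\alpha }_{n,\varepsilon }-\alpha _0),\sqrt{n}(\widehat{\beta }_{n,\varepsilon }-\beta _0)\right) \xrightarrow {d}N\left( 0,I(\theta _0)^{-1}\right) \end{aligned}$$

if \((\sqrt{n}\varepsilon )^{-1}\rightarrow 0\) as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \).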

4 Simulations

We consider the following two-dimensional SFDE:

for \(t\in [0,1]\),

for \(t\in [-\delta ,0]\), where \(\delta =1/10\), \(\left( X_{-\delta }^{(1)},X_{-\delta }^{(2)}\right) =(1,2)\) and \(H(X_{t-\cdot })=X_{t-\delta }\). In this example, the estimator is given explicitly as follows:

where

and \(H_n(X_{t-\cdot })=X_{t-\delta _n}\).

In the experiments, we generate discrete samples \(\{X_{t_{k}}\}_{k=1}^n\) and \(\{X_{-i/n}\}_{i=1}^{n\delta _n}\) by the Euler-Maruyama method (see Buckwar (2006)). We report means and standard deviations of the estimators over 1000 replications for several values of \((n,\varepsilon )\) in Tables 1, 2 and 3, which illustrate the consistency of our estimator.
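The simulation procedure can be illustrated on a deliberately simpler model than the one used in this section. The sketch below takes the hypothetical scalar SDDE \(\textrm{d}X_t=-\alpha X_{t-\delta }\,\textrm{d}t+\varepsilon \beta \,\textrm{d}W_t\) with a constant initial segment, simulates it by the Euler-Maruyama method on the observation grid, and computes the estimators that minimise a local-Gauss type contrast for this model in closed form. The model, the function names and the chosen parameter values are assumptions for illustration only.

```python
import numpy as np


def simulate(alpha, beta, eps, n, lag, rng):
    """Euler-Maruyama path of dX = -alpha * X_{t-delta} dt + eps * beta dW on [0, 1].

    Step size 1/n, delay delta = lag/n, constant initial segment X_t = 1 on [-delta, 0].
    Returns X at times -lag/n, ..., 0, ..., 1.
    """
    path = np.ones(lag + n + 1)
    dw = rng.normal(0.0, np.sqrt(1.0 / n), size=n)
    for k in range(n):
        j = lag + k  # index of X_{t_k}; path[k] holds the delayed value X_{t_k - delta}
        path[j + 1] = path[j] - alpha * path[k] / n + eps * beta * dw[k]
    return path


def estimate(path, eps, n, lag):
    """Closed-form minimisers for the hypothetical model above."""
    y = path[:n]                  # delayed values X_{t_{k-1} - delta_n}
    dx = np.diff(path[lag:])      # increments Delta_k X
    alpha_hat = -n * np.sum(dx * y) / np.sum(y**2)  # least squares in alpha
    resid = dx + alpha_hat * y / n                  # P_k evaluated at alpha_hat
    beta_hat = np.sqrt(np.sum(resid**2)) / eps      # beta_hat^2 = eps^{-2} * sum of P_k^2
    return alpha_hat, beta_hat


rng = np.random.default_rng(0)
path = simulate(alpha=1.0, beta=2.0, eps=0.01, n=1000, lag=100, rng=rng)
print(estimate(path, eps=0.01, n=1000, lag=100))
```

Repeating the last three lines over many seeds and several pairs \((n,\varepsilon )\) gives Monte Carlo means and standard deviations of the kind reported in the tables, though for this toy model rather than for the two-dimensional example.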

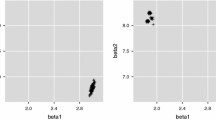

We also show normal Q-Q plots in the ideal case where \((n,\varepsilon ) = (10000, 0.01)\) in Figs. 1, 2, 3 and 4, which illustrate the asymptotic normality of each marginal of \(\widehat{\theta }_{n,\varepsilon }\). Moreover, Fig. 5 shows that the quadratic form of the normalized estimator approximately follows the \(\chi ^2(4)\)-distribution, which illustrates the (joint) asymptotic normality of \(\widehat{\theta }_{n,\varepsilon }\).

Chi-square(4) Q-Q plot with \(\theta =(1.0, 2.0, 3.0, 4.0), \varepsilon =0.01, n=10000\) for 1000 simulated samples of \(\big (\varepsilon ^{-1}(\widehat{\alpha }_{n,\varepsilon }-\alpha ), \sqrt{n}(\widehat{\beta }_{n,\varepsilon }-\beta )\big )^{\top }I(\theta _0)\big (\varepsilon ^{-1}(\widehat{\alpha }_{n,\varepsilon }-\alpha ), \sqrt{n}(\widehat{\beta }_{n,\varepsilon }-\beta )\big )\)
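The statistic described in this caption can be computed as in the following sketch. The function name `normalized_quadratic_form` is hypothetical, and the matrix `info` standing for \(I(\theta _0)\) must be supplied by the user, for example from a numerical evaluation of the integrals in (A6).

```python
import numpy as np


def normalized_quadratic_form(alpha_hat, beta_hat, alpha0, beta0, eps, n, info):
    """Quadratic form z^T I(theta_0) z with z = (eps^{-1}(alpha_hat - alpha0), sqrt(n)(beta_hat - beta0))."""
    za = (np.atleast_1d(np.asarray(alpha_hat, dtype=float)) - np.atleast_1d(alpha0)) / eps
    zb = np.sqrt(n) * (np.atleast_1d(np.asarray(beta_hat, dtype=float)) - np.atleast_1d(beta0))
    z = np.concatenate([za, zb])
    return float(z @ np.asarray(info, dtype=float) @ z)
```

Sorting the values of this statistic over the replications and plotting them against the corresponding \(\chi ^2(p+q)\) quantiles yields a Q-Q plot of the type shown in Fig. 5.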

5 Proofs

We first establish some preliminary lemmas. The proof of Lemma 1 follows the idea of Lemma 2.2.1 in Nualart (2006).

First, we will give a result for the existence of a strong solution for (1.1). Although different conditions for more general types of diffusions are seen in, e.g., Liptser and Shiryayev (2001), Theorem 4.6, we will concentrate on a more specific case, where the conditions become simpler for practical use.

Lemma 1

Suppose that (A1) and (A2) hold true. Then there exists a strong solution \(\left\{ X_t^\varepsilon \right\} \) for \(\varepsilon \in (0,1]\). Moreover, for \({{\gamma }}\ge 2\), it holds true:

Proof

Let

and for \(n\ge 0\),

First, we show that there exists a strong solution \(\left\{ X_t^\varepsilon \right\} \). From Lemma 2.2.1 of Nualart (2006), it suffices to show that

and

for any \({{\gamma }}\ge 2\). By a recursive argument, we can show that the inequality (5.1) holds. By using the Burkholder-Davis-Gundy inequality and (A1),

where \(C_{{\gamma }}\) and \(C'_{{\gamma }}\) are constants depending only on \({{\gamma }}\). For (5.2), by applying the Burkholder-Davis-Gundy inequality and (A1) again, we have

Consequently, we have the inequality (5.2) by (5.1).

Finally, we shall prove that the solution of (1.1) is unique. We assume that \({\tilde{X}}_t^\varepsilon \) is another solution of (1.1). Then, it follows by (A1) that

Hence, it follows from Gronwall’s inequality that

The proof is completed. \(\square \)

For Lemma 2, we shall use the notations:

(N9) Denote by \(Y_t^{n,\varepsilon }:=X_{\lfloor nt \rfloor /n}^\varepsilon \) and \(Y_{t-\cdot }^{n,\varepsilon }:=X_{\lfloor nt \rfloor /n-\cdot }^\varepsilon \) for the stochastic process \(X^\varepsilon \) defined by (1.1).

(N10) For \(X_{t-\cdot }^\varepsilon \in C\left( [0,\delta ];{\mathbb {R}}^d\right) \), denote by \(\left\| X_{t-\cdot }^\varepsilon \right\| _\infty :=\sup _{0\le u \le \delta }\left| X_{t-u}^\varepsilon \right| .\)

The proof of Lemma 2 follows the ideas of Long et al. (2013).

Lemma 2

Suppose that (A1) and (A2) hold true. Then, it follows for \({{\gamma }}\ge 1\),

as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \).

Proof

Since it is easy to see that

it suffices to show that \(E\left[ \sup _{0\le t\le 1}\left\| Y_{t-\cdot }^{n,\varepsilon }-X_{t-\cdot }^{0}\right\| _{\infty }^{{\gamma }} \right] =O\left( \varepsilon ^{{\gamma }} \right) +O\left( 1/n^{{\gamma }} \right) \) as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \). We shall prove only the case where \(\delta \ge 1\) because the proof for \(\delta \le 1\) is almost the same. It follows from (A1) and the Burkholder-Davis-Gundy inequality that

where \(C_{{\gamma }}\) is a constant depending only on \({{\gamma }}\). It holds from Gronwall’s inequality and (A2) that

as \(\varepsilon \rightarrow 0\). From the continuity of \(X_t^0\), the proof is completed. \(\square \)

Remark 2

It follows from the proof of Lemma 2 and (A2) that \(\sup _{\varepsilon \in (0,1]} E\left[ \sup _{-\delta \le t\le 1}\left| X_t^\varepsilon \right| ^{{\gamma }} \right] <\infty \) for \({{\gamma }}\ge 1\).

Lemma 3

Suppose that conditions (A1) and (A2) hold. Then the following (i) and (ii) hold as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \): for any \({{\gamma }}\ge 1\),

(i) \(\displaystyle E\left[ \sup _{t\in [0,1]}\left| H\left( Y_{t-\cdot }^{n,\varepsilon }\right) -H\left( X_{t-\cdot }^0\right) \right| ^{{\gamma }} \right] =O\left( \varepsilon ^{{\gamma }} \right) +O\left( 1/n^{{\gamma }} \right) . \)

(ii) \(\displaystyle E\left[ \sup _{t\in [0,1]}\left| H_n\left( Y_{t-\cdot }^{n,\varepsilon }\right) -H\left( X_{t-\cdot }^0\right) \right| ^{{\gamma }} \right] =O\left( \varepsilon ^{{\gamma }} \right) +O\left( 1/n^{{\gamma }} \right) .\)

Proof

(i) Since \(\mu \) is a finite measure, \(|H(F)|\le \mu \left( [0,\delta ]\right) \Vert F\Vert _\infty \), and hence, from Lemma 2,

$$\begin{aligned} E\left[ \sup _{t\in [0,1]}\left| H\left( Y_{t-\cdot }^{n,\varepsilon }\right) -H\left( X_{t-\cdot }^0\right) \right| ^{{\gamma }} \right]&\le \mu \left( [0,\delta ]\right) ^{{\gamma }}E\left[ \sup _{t\in [0,1]}\left\| Y_{t-\cdot }^{n,\varepsilon }-X_{t-\cdot }^{0}\right\| _{\infty }^{{\gamma }} \right] \\&= O\left( \varepsilon ^{{\gamma }} \right) +O\left( 1/n^{{\gamma }} \right) , \end{aligned}$$as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \).

(ii) From Lemma 2, Jensen’s inequality with respect to the finite measure \(\mu \), and the continuity of \(X^0_t\), we have

$$\begin{aligned} E\left[ \sup _{t\in [0,1]}\left| H_n\left( Y_{t-\cdot }^{n,\varepsilon }\right) -H\left( X_{t-\cdot }^0\right) \right| ^{{{\gamma }}}\right]&\le \mu \left( [0,\delta ]\right) ^{{{\gamma }}-1}\int _{0}^{\delta }E\left[ \sup _{t\in [0,1]}\left| Y_{t-\lfloor ns \rfloor /n}^{n,\varepsilon }-X_{t-s}^0\right| ^{{{\gamma }}}\right] \,\mu (\textrm{d}s)\\&\le 2^{{{\gamma }}-1}\mu \left( [0,\delta ]\right) ^{{{\gamma }}-1}\int _{0}^{\delta }\Bigg \{E\left[ \sup _{t\in [0,1]}\left| Y_{t-\lfloor ns \rfloor /n}^{n,\varepsilon }-X_{\lfloor nt \rfloor /n-\lfloor ns \rfloor /n}^0\right| ^{{\gamma }} \right] \\&\quad +E\left[ \sup _{t\in [0,1]}\left| X_{\lfloor nt \rfloor /n-\lfloor ns \rfloor /n}^0-X_{t-s}^0\right| ^{{\gamma }} \right] \Bigg \}\,\mu (\textrm{d}s)\\&= O\left( \varepsilon ^{{\gamma }} \right) +O\left( 1/n^{{\gamma }} \right) , \end{aligned}$$as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \).

\(\square \)
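The discretisation step in part (ii) can be illustrated numerically for a deterministic function. The sketch below assumes a discrete delay measure \(\mu =\sum _j w_j\delta _{s_j}\) and takes \(H_n(F_{t-\cdot })=\int _0^\delta F_{t-\lfloor ns\rfloor /n}\,\mu (\textrm{d}s)\), which is the form suggested by the first inequality in the proof of (ii); the function names and the choice of atoms and weights are hypothetical.

```python
import numpy as np


def delay_functional(F, t, atoms, weights):
    """H(F_{t-.}) = sum_j w_j * F(t - s_j) for the discrete measure mu = sum_j w_j * delta_{s_j}."""
    return sum(w * F(t - s) for s, w in zip(atoms, weights))


def delay_functional_grid(F, t, atoms, weights, n):
    """H_n(F_{t-.}): each delay s_j is replaced by the grid value floor(n * s_j) / n."""
    return sum(w * F(t - np.floor(n * s) / n) for s, w in zip(atoms, weights))


atoms, weights, n = [0.03, 0.07, 0.1], [0.5, 1.0, 2.0], 50
err = abs(delay_functional_grid(np.sin, 0.4, atoms, weights, n)
          - delay_functional(np.sin, 0.4, atoms, weights))
print(err, sum(weights) / n)
```

For a function with Lipschitz constant L, the error is at most \(L\,\mu ([0,\delta ])/n\) because each delay moves by less than 1/n; this is the deterministic counterpart of the \(O(1/n^{\gamma })\) term in the lemma.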

Lemma 4

Suppose that conditions (A1) and (A2) hold. Then, it holds that

for \({{\gamma }}\ge 1\).

Proof

From (A1) and (A2), we find that

\(\square \)

For the following Lemmas, we shall use the notations:

(N11) Let \(R_{k-1}^{{\varepsilon }}\) denote a function \((0,1]\rightarrow {\mathbb {R}}\) for which there exist some constants \(C_1\) and \(C_2\) such that

$$\begin{aligned} \left| R_{k-1}^{{\varepsilon }}(a)\right| \le a{C_1}\left( 1+\Vert X_{t_{k-1}-\cdot }^{{\varepsilon }}\Vert _{\infty }\right) ^{C_2}, \end{aligned}$$for all \(a > 0\) and \(\varepsilon \in (0,1]\).

Lemma 5

Suppose that conditions (A1) and (A2) hold. For \({{\gamma }}\ge 1\) and \(t_{k-1}\le t\le t_k\), it holds that

where \(\Phi _p^\varepsilon (\cdot )\) is a function:

Proof

In the same way as Lemma 6 in Kessler (1997), we have

where \(C_{{\gamma }}\) is a constant depending only on \({{\gamma }}\). We find that

Next, we consider three cases:

(b1) \(t_{k-1}> s\); (b2) \(t_{k-1}\le s < t\); (b3) \(t\le s\).

(b1) In the same way as (5.3), we find that

(b2) In the same way as (5.3),

where \({\tilde{C}}_p\) is a constant depending only on p.

(b3) It is easy to find that

From (5.4), (5.5) and (5.6), we have

By (A1), (5.3), and Gronwall’s inequality, we obtain the conclusion. \(\square \)

Remark 3

Lemma 5 is satisfied for \(t_{k-1}\ge \delta \) and for \(t\ge \delta \).

For Lemma 6, we use some abbreviations:

(N12) Denote by \(b^i_{t,H}=b^i\left( X_t^\varepsilon ,H\left( X_{t-\cdot }^\varepsilon \right) ,\theta _0\right) \) and

$$\begin{aligned}{}[\sigma \sigma ^\top ]_{t,H}^{ij}=[\sigma \sigma ^\top ]^{ij}\left( X_t^\varepsilon ,H\left( X_{t-\cdot }^\varepsilon \right) ,\beta _0\right) \end{aligned}$$for \(\left\{ b^i\right\} \) and \(\left\{ [\sigma \sigma ^{\top }]^{ij}\right\} \).

Lemma 6

Suppose that condition (A1) holds. Then the following (i)–(iv) hold true:

(i)

$$\begin{aligned} E\left[ P_{k}^{i_{1}}(\theta _{0})\Big |{\mathscr {F}}_{t_{k-1}}\right] =\frac{1}{n}\left( b^{i_{1}}_{t_{k-1},H}-b^{i_{1}}_{t_{k-1},H_n}\right) +\int _{t_{k-1}}^{t_k}\Phi _1^\varepsilon (s)~ds+R_{k-1}^{{\varepsilon }}\left( \frac{1}{n^2}\right) +R_{k-1}^{{\varepsilon }}\left( \frac{\varepsilon }{n\sqrt{n}}\right) . \end{aligned}$$

(ii)

$$\begin{aligned} E\left[ P_{k}^{i_{1}}P_{k}^{i_{2}}\left( \theta _{0}\right) \Big |{\mathscr {F}}_{t_{k-1}}\right]&= \frac{\varepsilon ^{2}}{n}[\sigma \sigma ^\top ]_{t_{k-1},H}^{i_{1}i_{2}}+\frac{1}{n^2}\left( b_{t_{k-1},H}^{i_1}-b_{t_{k-1},H_n}^{i_1}\right) \left( b_{t_{k-1},H}^{i_2}-b_{t_{k-1},H_n}^{i_2}\right) \\&\quad +\int _{t_{k-1}}^{t_k}\left\{ \Phi _2^\varepsilon (s)+\varepsilon ^2\Phi _1^\varepsilon (s)\right\} \,\textrm{d}s\\&\quad +R_{k-1}^{{\varepsilon }}\left( \frac{1}{n}\right) \int _{t_{k-1}}^{t_k}\Phi _1^\varepsilon (s)\,\textrm{d}s+R_{k-1}^{{\varepsilon }}(1)\int _{t_{k-1}}^{t_k}\int _{t_{k-1}}^{s}\Phi _1^\varepsilon (u)\,\textrm{d}u\,\textrm{d}s\\&\quad +R_{k-1}^{{\varepsilon }}\left( \frac{1}{n^3}\right) +R_{k-1}^{{\varepsilon }}\left( \frac{\varepsilon }{n^2\sqrt{n}}\right) +R_{k-1}^{{\varepsilon }}\left( \frac{\varepsilon ^2}{n^2}\right) +R_{k-1}^{{\varepsilon }}\left( \frac{\varepsilon ^3}{n\sqrt{n}}\right) . \end{aligned}$$

(iii)

$$\begin{aligned} E\left[ P_{k}^{i_{1}}P_{k}^{i_{2}}P_{k}^{i_{3}}(\theta _{0})\Big |{\mathscr {F}}_{t_{k-1}}\right]&= \frac{1}{n^3}\left( b_{t_{k-1},H}^{i_1}-b_{t_{k-1},H_n}^{i_1}\right) \left( b_{t_{k-1},H}^{i_2}-b_{t_{k-1},H_n}^{i_2}\right) \left( b_{t_{k-1},H}^{i_3}-b_{t_{k-1},H_n}^{i_3}\right) \\&\quad +\frac{\varepsilon ^2}{2n^2}\sum _{{\tilde{\sigma }}\in G(3)}[\sigma \sigma ^\top ]^{i_{{\tilde{\sigma }}(1)}i_{{\tilde{\sigma }}(2)}}\left( b_{t_{k-1},H}^{i_{{\tilde{\sigma }}(3)}}-b_{t_{k-1},H_n}^{i_{{\tilde{\sigma }}(3)}}\right) \\&\quad +\int _{t_{k-1}}^{t_k}\left\{ \Phi _3^\varepsilon (s)+\varepsilon ^2\Phi _2^\varepsilon (s)\right\} \,\textrm{d}s\\&\quad +R_{k-1}^{{\varepsilon }}\left( \frac{1}{n}\right) \int _{t_{k-1}}^{t_k}\{\Phi _2^\varepsilon (s)+\varepsilon ^2\Phi _1^\varepsilon (s)\}\,\textrm{d}s\\&\quad +R_{k-1}^{{\varepsilon }}\left( \frac{1}{n^2}\right) b_{t_{k-1},H_n}^{i_{{\tilde{\sigma }}(2)}}\int _{t_{k-1}}^{t_k}\Phi _1^\varepsilon (s)\,\textrm{d}s\\&\quad +R_{k-1}^{{\varepsilon }}(1)\int _{t_{k-1}}^{t_k}\int _{t_{k-1}}^{s}\{\Phi _2^\varepsilon (u)+\varepsilon ^2\Phi _1^\varepsilon (u)\}\,\textrm{d}u\,\textrm{d}s\\&\quad +R_{k-1}^{{\varepsilon }}\left( \frac{1}{n}\right) \int _{t_{k-1}}^{t_k}\int _{t_{k-1}}^s\Phi _1^\varepsilon (u)\,\textrm{d}u\,\textrm{d}s\\&\quad +\sum _{{\tilde{\sigma }}\in G(3)}b_{t_{k-1},H}^{i_{{\tilde{\sigma }}(1)}}b_{t_{k-1},H}^{i_{{\tilde{\sigma }}(2)}}\int _{t_{k-1}}^{t_k}\int _{t_{k-1}}^{s}\int _{t_{k-1}}^{u}\Phi _1^\varepsilon (v)\,\textrm{d}v\,\textrm{d}u\,\textrm{d}s\\&\quad +R_{k-1}^{{\varepsilon }}\left( \frac{1}{n^4}\right) +R_{k-1}^{{\varepsilon }}\left( \frac{\varepsilon }{n^3\sqrt{n}}\right) +R_{k-1}^{{\varepsilon }}\left( \frac{\varepsilon ^2}{n^3}\right) \\&\quad +R_{k-1}^{{\varepsilon }}\left( \frac{\varepsilon ^3}{n^2\sqrt{n}}\right) +R_{k-1}^{{\varepsilon }}\left( \frac{\varepsilon ^4}{n^2}\right) . \end{aligned}$$

(iv)

$$\begin{aligned} E\left[ P_{k}^{i_{1}}P_{k}^{i_{2}}P_{k}^{i_{3}}P_{k}^{i_{4}}(\theta _{0})\Big |{\mathscr {F}}_{t_{k-1}}\right]&= \frac{\varepsilon ^4}{n^2}\Bigg ([\sigma \sigma ^\top ]_{t_{k-1},H}^{i_1i_2}[\sigma \sigma ^\top ]_{t_{k-1},H}^{i_3i_4}+[\sigma \sigma ^\top ]_{t_{k-1},H}^{i_1i_3}[\sigma \sigma ^\top ]_{t_{k-1},H}^{i_2i_4}\\&\qquad +[\sigma \sigma ^\top ]_{t_{k-1},H}^{i_1i_4}[\sigma \sigma ^\top ]_{t_{k-1},H}^{i_2i_3}\Bigg )\\&\quad +\frac{1}{n^4}\left( b_{t_{k-1},H}^{i_1}-b_{t_{k-1},H_n}^{i_1}\right) \left( b_{t_{k-1},H}^{i_2}-b_{t_{k-1},H_n}^{i_2}\right) \times \\&\qquad \left( b_{t_{k-1},H}^{i_3}-b_{t_{k-1},H_n}^{i_3}\right) \left( b_{t_{k-1},H}^{i_4}-b_{t_{k-1},H_n}^{i_4}\right) \\&\quad +\frac{\varepsilon ^2}{4n^3}\sum _{{\tilde{\sigma }}\in G(4)}[\sigma \sigma ^{\top }]^{i_{{\tilde{\sigma }}(1)}i_{{\tilde{\sigma }}(2)}}\left( b_{t_{k-1},H}^{i_{{\tilde{\sigma }}(3)}}-b_{t_{k-1},H_n}^{i_{{\tilde{\sigma }}(3)}}\right) \\&\qquad \left( b_{t_{k-1},H}^{i_{{\tilde{\sigma }}(4)}}-b_{t_{k-1},H_n}^{i_{{\tilde{\sigma }}(4)}}\right) \\&\quad +\int _{t_{k-1}}^{t_k}\left\{ \Phi _4^\varepsilon (s)+\varepsilon ^2\Phi _3^\varepsilon (s)\right\} \,\textrm{d}s\\&\quad +R_{k-1}^{{\varepsilon }}\left( \frac{1}{n}\right) \int _{t_{k-1}}^{t_k}\{\Phi _3^\varepsilon (u)+\varepsilon ^2\Phi _2^\varepsilon (s)\}\,\textrm{d}s\\&\quad +R_{k-1}^{{\varepsilon }}\left( \frac{1}{n^2}\right) \int _{t_{k-1}}^{t_k}\{\Phi _2^\varepsilon (s)+\varepsilon ^2\Phi _1^\varepsilon (s)\}\,\textrm{d}s\\&\quad +R_{k-1}^{{\varepsilon }}(1)\int _{t_{k-1}}^{s}\{\Phi _3^\varepsilon (u)+\varepsilon ^2\Phi _2^\varepsilon (u)\}\,\textrm{d}u\,\textrm{d}s\\&\quad +R_{k-1}^{{\varepsilon }}\left( \frac{1}{n}\right) \int _{t_{k-1}}^{t_k}\int _{t_{k-1}}^{s}\{\Phi _2^\varepsilon (u)+\varepsilon ^2\Phi _1^\varepsilon (u)\}\,\textrm{d}u\,\textrm{d}s\\&\quad +R_{k-1}^{{\varepsilon }}(\varepsilon ^2)\int _{t_{k-1}}^{t_k}\int _{t_{k-1}}^{s}\{\Phi _2^\varepsilon (u)+\varepsilon ^2\Phi _1^\varepsilon 
(u)\}\,\textrm{d}u\,\textrm{d}s\\&\quad +R_{k-1}^{{\varepsilon }}(1)\int _{t_{k-1}}^{t_k}\int _{t_{k-1}}^{s}\int _{t_{k-1}}^{u}\left\{ \Phi _2^\varepsilon (v)+\varepsilon ^2\Phi _1^\varepsilon (v)\right\} \,\textrm{d}v\,\textrm{d}u\,\textrm{d}s\\&\quad +R_{k-1}^{{\varepsilon }}(1)\int _{t_{k-1}}^{t_k}\int _{t_{k-1}}^s\int _{t_{k-1}}^u\int _{t_{k-1}}^v\Phi _1^\varepsilon (w)\,\textrm{d}w\,\textrm{d}v\,\textrm{d}u\,\textrm{d}s\\&\quad +R_{k-1}^{{\varepsilon }}\left( \frac{1}{n^5}\right) +R_{k-1}^{{\varepsilon }}\left( \frac{\varepsilon }{n^4\sqrt{n}}\right) +R_{k-1}^{{\varepsilon }}\left( \frac{\varepsilon ^2}{n^4}\right) \\&\quad +R_{k-1}^{{\varepsilon }}\left( \frac{\varepsilon ^3}{n^3\sqrt{n}}\right) +R_{k-1}^{{\varepsilon }}\left( \frac{\varepsilon ^4}{n^3}\right) +R_{k-1}^{{\varepsilon }}\left( \frac{\varepsilon ^5}{n^2\sqrt{n}}\right) . \end{aligned}$$

Proof

(i) It follows from the Lipschitz condition on b in (A1) that

From Lemma 5, the proof of (i) is completed. (ii) We find that

It follows from the Lipschitz condition on b in (A1) that

From Lemma 5, we have

By the same argument as for (5.7), it holds that

It follows from the proof of (i) that

Therefore,

It follows from (5.7)–(5.9) that

Therefore,

(iii) From the same argument as the proof of (ii), it holds that

and

(iv) It follows from the same argument as the proof of (ii) that

and

\(\square \)

We shall use the notation:

(N13) We denote the gradient operator of \(f(x,y,\theta )\) with respect to x by

$$\begin{aligned} \triangledown _xf(x,y,\theta )=\left( \partial _{x_1}f(x,y,\theta ),\dots ,\partial _{x_d}f(x,y,\theta )\right) ^{\top }. \end{aligned}$$

The proof of Lemma 7 follows the ideas of Long et al. (2013).

Lemma 7

Let \(f\in C_{\uparrow }^{1,1,1}({\mathbb {R}}^d\times {\mathbb {R}}^d\times \Theta )\) and suppose the conditions (A1)–(A3). Then the following (i) and (ii) hold true:

(i) As \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \),

$$\begin{aligned} \frac{1}{n}\sum _{k=1}^{n}f\left( X_{t_{k-1}}^{\varepsilon }, H_n\big (X_{t_{k-1}-\cdot }^{\varepsilon }\big ),\theta \right) \xrightarrow {P}\int _{0}^{1}f\left( X_{s}^0,H\big (X_{s-\cdot }^0\big ),\theta \right) \,\textrm{d}s, \end{aligned}$$uniformly in \(\theta \in \Theta \).

(ii) As \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \),

$$\begin{aligned} \sum _{k=1}^{n}f\left( X_{t_{k-1}}^{\varepsilon },H_n\big (X_{t_{k-1}-\cdot }^{\varepsilon }\big ),\theta \right) P_k(\theta _0)\xrightarrow {P}0, \end{aligned}$$uniformly in \(\theta \in \Theta \).

Proof

(i) From Lemma 2, Lemma 3 and Taylor’s formula, we find that

as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty .\) (ii) It is easy to see that

From the Lipschitz condition on b in (A1) it holds that

which converges to zero as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \) by Lemma 2 and Lemma 3. Let \(\tau _m^{n,\varepsilon }=\inf \{t\ge 0;|X_t^\varepsilon |\ge m\) or \(|Y_t^{n,\varepsilon }|\ge m\}\). We find that \(\tau _m^{n,\varepsilon }\rightarrow \infty \) a.s. as \(m\rightarrow \infty \) from Lemma 1. Next, we have that for any \(\eta >0\),

Let

We want to prove that \(u_{n,\varepsilon }^i(\theta )\rightarrow 0\) in probability as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \), uniformly in \(\theta \in \Theta \). To this end, it is sufficient to check the pointwise convergence and the tightness of the sequence \(\{u_{n,\varepsilon }^i(\cdot )\}\). For the pointwise convergence, by Chebyshev’s inequality, the linear growth condition on \(\sigma \) in (A1) and Itô’s isometry,

which converges to zero as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \) with fixed m. For the tightness, by using Theorem 20 in Appendix I of Ibragimov and Has’minskii (1981), it is adequate to prove the following two inequalities:

for \(\theta ,\theta _1,\theta _2\in \Theta \), where \(2l>p+q\). The proof of (5.12) is analogous to the moment estimates in (5.11), with Itô’s isometry replaced by the Burkholder-Davis-Gundy inequality, so we omit the details here. For (5.13), by using Taylor’s formula and the Burkholder-Davis-Gundy inequality, we get

Combining (5.10) and arguments above, we have that

converges to zero in probability as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \). Therefore, the proof is complete. \(\square \)

In Lemma 8, the proof ideas follow Sørensen and Uchida (2003).

Lemma 8

Let \(f\in C_{\uparrow }^{1,1,1}({\mathbb {R}}^d\times {\mathbb {R}}^d\times \Theta )\) and suppose the conditions (A1)–(A3). If \((\sqrt{n}\varepsilon )^{-1}\rightarrow 0\) as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \), then the following (i) and (ii) hold true:

(i) As \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \),

$$\begin{aligned}&\varepsilon ^{-2} \sum _{k=1}^{n} f\left( X_{t_{k-1}}^\varepsilon , H_n\big (X_{t_{k-1}-\cdot }^\varepsilon \big ), \theta \right) P_{k}^{i}(\theta _{0}) P_{k}^{j}(\theta _{0})\\&\xrightarrow {P} \int _{0}^{1} f\left( X_{s}^0, H\big (X_{s - \cdot }^0\big ), \theta \right) \left[ \sigma \sigma ^{\top }\right] ^{ij}\left( X_{s}^0, H\big (X_{s - \cdot }^0\big ),\beta _{0}\right) \,\textrm{d}s, \end{aligned}$$uniformly in \(\theta \in \Theta .\)

-

(ii)

As \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \),

$$\begin{aligned}&\varepsilon ^{-2} \sum _{k=1}^{n} f\left( X_{t_{k-1}}^\varepsilon , H_n\big (X_{t_{k-1} - \cdot }^\varepsilon \big ), \theta \right) P_{k}^{i}(\theta ) P_{k}^{j}(\theta )\\ \xrightarrow {P}&\int _{0}^{1} f\left( X_{s}^0, H\big (X_{s - \cdot }^0\big ), \theta \right) \left[ \sigma \sigma ^{\top }\right] ^{ij}\left( X_{s}^0, H\big (X_{s - \cdot }^0\big ),\beta _{0}\right) \,\textrm{d}s, \end{aligned}$$uniformly in \(\theta \in \Theta .\)
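The convergence in part (i) can be checked numerically in a toy case. The following is a minimal sketch, not the setting of the paper: it assumes \(d=r=1\), a Dirac delay measure at \(\delta \) (so \(H\) and \(H_n\) both reduce to the delayed state), \(f\equiv 1\), hypothetical coefficients `drift` and `diffusion`, and the residual \(P_k(\theta )=X_{t_k}-X_{t_{k-1}}-\frac{1}{n}b(X_{t_{k-1}},X_{t_{k-1}-\delta },\theta )\) with \(t_k=k/n\). The path is simulated by Euler-Maruyama on a finer grid than the observation grid.

```python
import numpy as np

def drift(x, y, alpha):
    # Hypothetical drift b(x, H, alpha); y plays the role of the delayed state H.
    return -alpha * x + 0.5 * y

def diffusion(x, y, beta):
    # Hypothetical diffusion coefficient sigma(x, H, beta).
    return beta * np.sqrt(1.0 + x * x)

def euler_path(eps, n_fine, lag, alpha, beta, x0, rng):
    """Euler-Maruyama path on [-delta, 1]; array index `lag` corresponds to t = 0."""
    h = 1.0 / n_fine
    x = np.full(lag + n_fine + 1, x0, dtype=float)   # constant history on [-delta, 0]
    dw = rng.normal(0.0, np.sqrt(h), n_fine)
    for k in range(n_fine):
        cur, old = x[lag + k], x[k]                  # X_t and X_{t - delta}
        x[lag + k + 1] = (cur + drift(cur, old, alpha) * h
                          + eps * diffusion(cur, old, beta) * dw[k])
    return x

def lemma8_check(eps=0.05, n=2000, m=5, delta=0.1, alpha0=1.0, beta0=1.0, seed=0):
    """Return (eps^-2 * sum_k P_k(theta_0)^2, Riemann sum of sigma^2 along X^0)."""
    rng = np.random.default_rng(seed)
    n_fine = n * m                                   # m Euler sub-steps per observation
    lag = int(round(delta * n_fine))
    x_eps = euler_path(eps, n_fine, lag, alpha0, beta0, 1.0, rng)
    x_det = euler_path(0.0, n_fine, lag, alpha0, beta0, 1.0, rng)  # eps = 0 gives X^0
    idx = lag + m * np.arange(n + 1)                 # fine-grid indices of t_0, ..., t_n
    X, Y = x_eps[idx], x_eps[idx - lag]              # observations and delayed observations
    P = X[1:] - X[:-1] - drift(X[:-1], Y[:-1], alpha0) / n
    stat = np.sum(P ** 2) / eps ** 2
    X0, Y0 = x_det[idx], x_det[idx - lag]
    limit = np.mean(diffusion(X0[:-1], Y0[:-1], beta0) ** 2)
    return stat, limit

stat, limit = lemma8_check()
print(stat, limit)
```

The two printed numbers should be close for small \(\varepsilon \) and large \(n\); the remaining gap reflects both the Monte Carlo error of the sum of squared increments and the \(O(\varepsilon )\) distance between \(X^\varepsilon \) and \(X^0\).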

Proof

(i) It follows from Lemma 6, Lemma 7(i), and Hölder’s inequality that

as \(n \rightarrow \infty \) and \(\varepsilon \rightarrow 0\). Therefore, it follows from Lemma 9 in Genon-Catalot and Jacod (1993) that

as \(n \rightarrow \infty \) and \(\varepsilon \rightarrow 0\). For the tightness of the sequence \(\{\varepsilon ^{-2}\sum _{k=1}^{n}f\left( X_{t_{k-1}},H_n(X_{t_{k-1}-\cdot }),\cdot \right) P_k^iP_k^j(\theta _0)\}\), according to Lemma 6,

(ii) Note that

where \(B^i_{k-1}(\theta _0,\theta )=b^i\left( X_{t_{k-1}},H_n(X_{t_{k-1}-\cdot }),\theta _0\right) -b^i\left( X_{t_{k-1}},H_n(X_{t_{k-1}-\cdot }),\theta \right) \). It follows from Lemmas 7 and 8(i), under P, as \(n\rightarrow \infty \) and \(\varepsilon \rightarrow 0\),

uniformly in \(\theta \in \Theta \). \(\square \)
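The algebra behind part (ii) can be sketched as follows. The sketch assumes that the residual has the form \(P_k(\theta )=X_{t_k}-X_{t_{k-1}}-\frac{1}{n}b\left( X_{t_{k-1}},H_n(X_{t_{k-1}-\cdot }),\theta \right) \) with \(t_k=k/n\); this definition is not restated in this section, so it should be read as an assumption. Under it, \(P_k^i(\theta )=P_k^i(\theta _0)+\frac{1}{n}B_{k-1}^i(\theta _0,\theta )\), and therefore

$$\begin{aligned} P_k^i(\theta )P_k^j(\theta )&=P_k^i(\theta _0)P_k^j(\theta _0)+\frac{1}{n}\left( B_{k-1}^i(\theta _0,\theta )P_k^j(\theta _0)+B_{k-1}^j(\theta _0,\theta )P_k^i(\theta _0)\right) \\&\quad +\frac{1}{n^2}B_{k-1}^i(\theta _0,\theta )B_{k-1}^j(\theta _0,\theta ). \end{aligned}$$

After multiplication by \(\varepsilon ^{-2}f\) and summation over \(k\), the first term is handled by part (i). The last term equals \((n\varepsilon ^2)^{-1}\) times an average \(\frac{1}{n}\sum _{k=1}^{n} fB_{k-1}^iB_{k-1}^j\), and \((n\varepsilon ^2)^{-1}=\left( (\sqrt{n}\varepsilon )^{-1}\right) ^2\rightarrow 0\) by the assumption of the lemma. The cross terms carry the extra factor \(1/n\) and are the part controlled through Lemma 7.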

We are now ready to prove Theorem 1. The proof mainly follows the ideas of Sørensen and Uchida (2003).

Proof of Theorem 1

Following the proof of Theorem 1 in Sørensen and Uchida (2003), the consistency follows from the two properties:

where \(B_s(\theta _0, \theta )=b\left( X_s^0,H(X_{s-\cdot }^0),\theta _0\right) -b\left( X_s^0,H(X_{s-\cdot }^0),\theta \right) \), as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty ,\) uniformly in \(\theta \in \Theta .\) First, we show (5.14). It is clear that

From Lemma 7,

as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty ,\) uniformly in \(\theta \in \Theta .\) As for (5.15), from Lemma 7(i) and Lemma 8(ii), as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty ,\) it holds that

\(\square \)
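Consistency can be illustrated on a toy model in which the minimiser of a local-Gauss contrast is available in closed form. The sketch below is an illustration under stated assumptions, not the estimator of the paper in full generality: it assumes \(d=r=1\), a Dirac delay measure at \(\delta \), the hypothetical coefficients \(b(x,y,\alpha )=-\alpha x+0.5y\) and \(\sigma \equiv \beta \), and a contrast of the form \(U(\alpha ,\beta )=\sum _k\left[ \log \beta ^2+n\varepsilon ^{-2}P_k(\alpha )^2/\beta ^2\right] \). For this contrast the minimiser in \(\alpha \) is a least-squares solution and the minimiser in \(\beta ^2\) is \(\varepsilon ^{-2}\sum _k P_k(\widehat{\alpha })^2\).

```python
import numpy as np

def simulate_observations(eps, n, m, delta, alpha, beta, x0, rng):
    """Euler-Maruyama for dX = (-alpha*X_t + 0.5*X_{t-delta}) dt + eps*beta dW on [0, 1].

    Returns (X, Y): the state at t_0, ..., t_n (t_k = k/n) and the delayed
    state X_{t_k - delta}; the history on [-delta, 0] is the constant x0.
    """
    n_fine = n * m                        # m Euler sub-steps per observation interval
    h = 1.0 / n_fine
    lag = int(round(delta * n_fine))      # delay measured in fine-grid steps
    x = np.full(lag + n_fine + 1, x0, dtype=float)
    dw = rng.normal(0.0, np.sqrt(h), n_fine)
    for k in range(n_fine):
        cur, old = x[lag + k], x[k]       # X_t and X_{t - delta}
        x[lag + k + 1] = cur + (-alpha * cur + 0.5 * old) * h + eps * beta * dw[k]
    idx = lag + m * np.arange(n + 1)
    return x[idx], x[idx - lag]

def minimum_contrast(X, Y, eps):
    """Closed-form minimiser of the assumed contrast U(alpha, beta)."""
    n = len(X) - 1
    dX, Xp, Yp = np.diff(X), X[:-1], Y[:-1]
    # P_k(alpha) = dX_k - (1/n) * (-alpha * X_{k-1} + 0.5 * Y_{k-1}) is linear in alpha,
    # so minimising sum_k P_k(alpha)^2 is an ordinary least-squares problem.
    alpha_hat = -np.sum((dX - 0.5 * Yp / n) * Xp) / (np.sum(Xp ** 2) / n)
    P = dX - (-alpha_hat * Xp + 0.5 * Yp) / n
    beta_hat = np.sqrt(np.sum(P ** 2)) / eps
    return alpha_hat, beta_hat

rng = np.random.default_rng(1)
X, Y = simulate_observations(eps=0.05, n=2000, m=5, delta=0.1,
                             alpha=1.0, beta=1.0, x0=1.0, rng=rng)
alpha_hat, beta_hat = minimum_contrast(X, Y, eps=0.05)
print(alpha_hat, beta_hat)
```

With the true values \(\alpha _0=\beta _0=1\), both estimates should land near 1. The drift estimate fluctuates on the scale \(\varepsilon \) and the diffusion estimate on the scale \(1/\sqrt{n}\), which matches the two normalisations used in the asymptotic normality result.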

Finally, we prove the asymptotic normality of \(\widehat{\theta }_{n,\varepsilon }\). For the proof, we use the following notation:

(N14) We denote

$$\begin{aligned} \Lambda _{n,\varepsilon }(\theta _0):= \left( \begin{array}{c} -\varepsilon \left( \frac{\partial }{\partial \alpha _i}U_{n,\varepsilon }(\theta )\Big |_{\theta =\theta _0}\right) _{1\le i\le p} \\ -\frac{1}{\sqrt{n}}\left( \frac{\partial }{\partial \beta _i}U_{n,\varepsilon }(\theta )\Big |_{\theta =\theta _0}\right) _{1\le i\le q} \\ \end{array} \right) . \end{aligned}$$

(N15) We denote

$$\begin{aligned} C_{n,\varepsilon }(\theta _0):= \left( \begin{array}{cc} \varepsilon ^2\left( \frac{\partial ^2}{\partial \alpha _i\partial \alpha _j}U_{n,\varepsilon }(\theta )\Big |_{\theta =\theta _0}\right) _{1\le i,j\le p} &{}\frac{\varepsilon }{\sqrt{n}}\left( \frac{\partial ^2}{\partial \alpha _i\partial \beta _j}U_{n,\varepsilon }(\theta )\Big |_{\theta =\theta _0}\right) _{1\le i\le p,1\le j\le q}\\ \frac{\varepsilon }{\sqrt{n}}\left( \frac{\partial ^2}{\partial \beta _i\partial \alpha _j}U_{n,\varepsilon }(\theta )\Big |_{\theta =\theta _0}\right) _{1\le i\le q,1\le j\le p}&{}\frac{1}{n}\left( \frac{\partial ^2}{\partial \beta _i\partial \beta _j}U_{n,\varepsilon }(\theta )\Big |_{\theta =\theta _0}\right) _{1\le i,j\le q} \\ \end{array} \right) . \end{aligned}$$
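The normalisation in (N14) and (N15) can be made concrete with a small numerical sketch. It assumes only that a contrast \(U_{n,\varepsilon }\) is available as a callable `U(theta)` with `theta = (alpha, beta)`; the function name `scaled_score_and_hessian`, the central finite-difference scheme, and the toy contrast `U_toy` are illustrative choices, not part of the paper.

```python
import numpy as np

def scaled_score_and_hessian(U, theta0, p, q, eps, n, step=1e-3):
    """Finite-difference versions of Lambda_{n,eps}(theta0) and C_{n,eps}(theta0).

    The first p coordinates of theta (alpha) are scaled by eps and the last q
    coordinates (beta) by 1/sqrt(n), as in (N14) and (N15).
    """
    theta0 = np.asarray(theta0, dtype=float)
    dim = p + q
    rate = np.concatenate([np.full(p, eps), np.full(q, 1.0 / np.sqrt(n))])
    e = np.eye(dim) * step
    # Central differences for the gradient.
    grad = np.array([(U(theta0 + e[i]) - U(theta0 - e[i])) / (2 * step)
                     for i in range(dim)])
    # Four-point central differences for the Hessian (mixed and pure derivatives).
    hess = np.empty((dim, dim))
    for i in range(dim):
        for j in range(dim):
            hess[i, j] = (U(theta0 + e[i] + e[j]) - U(theta0 + e[i] - e[j])
                          - U(theta0 - e[i] + e[j]) + U(theta0 - e[i] - e[j])) / (4 * step ** 2)
    Lam = -rate * grad                   # (-eps * dU/dalpha, -(1/sqrt(n)) * dU/dbeta)
    C = np.outer(rate, rate) * hess      # blocks scaled by eps^2, eps/sqrt(n), 1/n
    return Lam, C

# Toy quadratic contrast whose curvature grows like eps^-2 in alpha and like n in beta.
eps, n = 0.1, 100
def U_toy(theta):
    return (theta[0] - 1.0) ** 2 / eps ** 2 + n * (theta[1] - 2.0) ** 2

Lam, C = scaled_score_and_hessian(U_toy, [1.1, 2.0], p=1, q=1, eps=eps, n=n)
print(Lam)
print(C)
```

For the toy contrast the raw second derivatives are of order \(\varepsilon ^{-2}\) and \(n\), while the scaled matrix stays of order one. This is the purpose of the block-wise scaling: it keeps \(C_{n,\varepsilon }\) stable as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \).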

The proof of Theorem 2 mainly follows the ideas of Sørensen and Uchida (2003).

Proof of Theorem 2

By Theorem 1 in Sørensen and Uchida (2003), the asymptotic normality follows from the three properties:

where \(\eta _{n,\varepsilon }\rightarrow 0\), as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \), and

as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \). First, we show (5.16). Note that

It follows from Lemma 7 and Lemma 8(ii), as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty ,\) that

uniformly in \(\theta \in \Theta \). As for (5.17), the limits in (5.19), (5.20) and (5.21) are continuous with respect to \(\theta \), which yields (5.17). Finally, we prove (5.18). We set

In view of Theorems 3.2 and 3.4 in Hall and Heyde (1980), it is sufficient to show that as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \),

From Lemma 6, we obtain (5.22)–(5.27). To prove (5.28), we need several estimates:

In the same way as the proof of Lemma 6, we have

It follows from Lemma 6, (5.29)–(5.31) and Hölder’s inequality that

as \(\varepsilon \rightarrow 0\) and \(n\rightarrow \infty \). We obtain the conclusion. \(\square \)

References

Arriojas M (1997) A stochastic calculus for functional differential equations. Ph.D. thesis, Southern Illinois University at Carbondale, USA

Buckwar E (2006) One-step approximations for stochastic functional differential equations. Appl Numer Math 98:38–58

Beretta E, Takeuchi Y (1995) Global stability of an SIR epidemic model with time delay. J Math Biol 33(3):250–260

De la Sen M, Ibeas A (2021) On an SE(Is)(Ih)AR epidemic model with combined vaccination and antiviral controls for COVID-19 pandemic. Adv Differ Equ 2021(1):1–30

Fu X (2019) On invariant measures and the asymptotic behavior of a stochastic delayed SIRS epidemic model. Physica A Stat Mech Appl 523:1008–1023

Genon-Catalot V, Jacod J (1993) On the estimation of the diffusion coefficient for multidimensional diffusion processes. Ann Inst H Poincaré Probab Stat 29:119–151

Gushchin AA, Küchler U (1999) Asymptotic inference for a linear stochastic differential equation with time delay. Bernoulli 5:1059–1098

Guttorp P, Kulperger R (1984) Statistical inference for some Volterra population processes in a random environment. Can J Stat 12:289–302

Gloter A, Sørensen M (2009) Estimation for stochastic differential equations with a small diffusion coefficient. Stoch Process Appl 119:679–699

Hall P, Heyde C (1980) Martingale limit theory and its application. Academic Press, New York

Hu HY, Wang ZH (2002) Dynamics of controlled mechanical systems with delayed feedback. Springer, Berlin

Ibragimov IA, Has’minskii RZ (1981) Statistical estimation: asymptotic theory. Springer-Verlag, Berlin

Kessler M (1997) Estimation of an ergodic diffusion from discrete observations. Scand J Stat 24:211–229

Küchler U, Kutoyants YA (2000) Delay estimation for some stationary diffusion-type processes. Scand J Stat 27:405–414

Küchler U, Sørensen M (2013) Statistical inference for discrete-time samples from affine stochastic delay differential equations. Bernoulli 19(2):409–425

Küchler U, Vasiliev V (2005) Sequential identification of linear dynamic systems with memory. Stat Inference Stoch Process 8:1–24

Kutoyants YA (1988) An example of estimating a parameter of a non-differentiable drift coefficient. Theory Probab Appl 33(1):175–179

Kutoyants YA (1994) Identification of dynamical systems with small noise. Kluwer, Dordrecht

Liptser RS, Shiryayev AN (2001) Statistics of random processes, I, 2nd edn. Springer, New York

Long H, Shimizu Y, Sun W (2013) Least squares estimators for discretely observed stochastic processes driven by small Lévy noise. J Multivar Anal 116:422–439

Mackey MC, Glass L (1977) Oscillation and chaos in physiological control systems. Science 197:287–289

Smith H (2011) An introduction to delay differential equations with applications to the life sciences. Texts in Applied Mathematics, Springer, New York

Mohammed S-EA (1984) Stochastic functional differential equations. Pitman, London

Nualart D (2006) The Malliavin calculus and related topics, 2nd edn. Springer-Verlag, Berlin

Reiss M (2004) Nonparametric estimation for stochastic delay differential equations. Ph.D. thesis, Institut für Mathematik, Humboldt-Universität zu Berlin

Reiss M (2005) Adaptive estimation for affine stochastic delay differential equations. Bernoulli 11:67–102

Ren P, Wu J-L (2019) Least squares estimator for path-dependent McKean-Vlasov SDEs via discrete-time observations. Acta Math Sci 39:691–716

Sørensen M, Uchida M (2003) Small diffusion asymptotics for discretely sampled stochastic differential equations. Bernoulli 9:1051–1069

Volterra V (1959) Theory of functionals. Dover Publications, New York

Wang P (2023) Risk-sensitive maximum principle for controlled system with delay. Mathematics, MDPI 11:1058. https://doi.org/10.3390/math11041058

Funding

The study was funded by JSPS KAKENHI Grant Number 21K03358.

Ethics declarations

Conflict of interest

Author Hiroki Nemoto declares that he has no conflict of interest. Author Yasutaka Shimizu has received research grants from JSPS.

Ethical approval

This article does not contain any studies with human participants performed by authors.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nemoto, H., Shimizu, Y. Statistical inference for discretely sampled stochastic functional differential equations with small noise. Stat Inference Stoch Process 27, 427–456 (2024). https://doi.org/10.1007/s11203-023-09299-7

DOI: https://doi.org/10.1007/s11203-023-09299-7

Keywords

- Stochastic delay equation

- Functional delay

- Discrete observations

- Minimum contrast estimator

- Small noise

- Asymptotic normality