Abstract

Recently, Díaz, Hössjer and Marks (DHM) presented a Bayesian framework to measure cosmological tuning (either fine or coarse) that uses maximum entropy (maxent) distributions on unbounded sample spaces as priors for the parameters of the physical models (https://doi.org/10.1088/1475-7516/2021/07/020). The DHM framework stands in contrast to previous attempts to measure tuning, which rely on a uniform prior assumption. Since the parameters of the models often take values in spaces of infinite size, that uniformity assumption is unwarranted; this is known as the normalization problem. In this paper we explain why and how the DHM framework not only evades the normalization problem but also circumvents other objections to the tuning measurement, such as the so-called weak anthropic principle, the selection of a single maxent distribution and, importantly, the lack of invariance of maxent distributions with respect to data transformations. We also propose to treat fine-tuning as an emergence problem in order to avoid infinite loops in the prior distribution of hyperparameters (common to all Bayesian analyses), and explain that previous attempts to measure tuning using uniform priors are particular cases of the DHM framework. Finally, we prove a theorem explaining when tuning is fine or coarse for different families of distributions. The theorem is summarized in a table for ease of reference, and the tuning of three physical parameters is analyzed using the conclusions of the theorem.

Data Availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

Notes

The following argument for the nonexistence of a uniform distribution on \(\Omega = {\mathbb {R}}^+\) does not start from the assumption that this distribution has a constant density on \({\mathbb {R}}^+\) (which would immediately contradict the requirement that the density integrates to 1). Instead, to showcase that a sequence of truncated uniform distributions on (0, N] does not have a well-defined limit as \(N\rightarrow \infty\), another type of argument is presented here. It points to the fact that first imposing an upper boundary N on X, and then using the PrOIR, is arbitrary. In order to motivate that a uniform distribution does not exist on the infinite-sized positive real line \(\Omega = {\mathbb {R}}^+\), we first consider \(\Omega _N = (0,N]\), and assume without loss of generality that N is a positive integer. Then partition \(\Omega _N\) into N subintervals of length 1: \(I_1 = (0,1], I_2=(1,2], \ldots , I_N = (N-1,N]\). The uniform distribution assigns probability 1/N to each of these subintervals, since \(P_N(I_i) = \vert I_i\vert /\vert \Omega _N\vert = 1/N\), for \(i \in \{1,\ldots ,N\}\). As N approaches infinity (denoted \(N \rightarrow \infty\)), \(\Omega _N\) approaches the whole set of positive real numbers \(\Omega = {\mathbb {R}}^+\). If \(P_N\) were to approach a limiting distribution P on \(\Omega\), then P would have a continuous distribution since \(P(\{x\})\le \limsup _{N\rightarrow \infty } P_N(I_{[x]}\cup I_{[x]+1}) = 0\) for any \(x\in \Omega\), with \(I_0\) interpreted as the empty set. But since P (if it exists) has a continuous distribution it follows that \(P_N(I_i) \rightarrow 0 = P(I_i)\), for all \(i \in {\mathbb {N}}\). From this,

$$1 = P(\Omega ) = \sum\limits_{i = 1}^{\infty } P (I_{i} ) = 0, \tag{4}$$

as \(N \rightarrow \infty\). That is, in the limit, \(1=0\). This is a contradiction. (Notice that the first equality was obtained by unitarity (1) and the second by \(\sigma\)-additivity (3).) The reason for the contradiction is that the sequence of probability measures \(\{P_N\}\) does not satisfy a property called tightness [20, pp. 7–13], since the probability mass escapes to infinity. This implies that \(\{P_N\}\) does not converge to any limiting probability measure P, in particular not to a uniform distribution on \(\Omega\).
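The escape of probability mass can be illustrated numerically. The following minimal sketch (the function name and the window size K are illustrative choices, not part of the original argument) evaluates the truncated uniform distribution \(P_N\) on a fixed window (0, K] and on the unit interval \(I_1\) for increasing N. For any fixed K the mass of the window shrinks toward 0, which is what the failure of tightness means here.

```python
def truncated_uniform_prob(a, b, N):
    """P_N((a, b]) under the uniform distribution on (0, N]."""
    lo, hi = max(a, 0.0), min(b, float(N))
    return max(hi - lo, 0.0) / N

K = 1000.0                      # fixed, arbitrary window (0, K]
sizes = (10**3, 10**6, 10**9)   # increasing truncation points N

# Mass that P_N assigns to the fixed window and to I_1 = (0, 1].
window_mass = [truncated_uniform_prob(0.0, K, N) for N in sizes]
unit_mass = [truncated_uniform_prob(0.0, 1.0, N) for N in sizes]

for N, w, u in zip(sizes, window_mass, unit_mass):
    print(f"N={N:>10}: P_N((0,K])={w:.3e}, P_N(I_1)={u:.3e}")
```

No bounded window retains a fixed share of the mass as N grows, so no probability measure can arise as the limit.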

For instance, continuing with the example of the exponential distribution of mean 1, even though the interval \(I = \left( 10^{20}, \infty \right)\) has infinite length, \(P(I) = \exp \left( -10^{20}\right) \approx 0\). On the other hand, although \(I_1 = (0,1]\), for which \(\vert I_1\vert /\vert \Omega \vert = 1/\infty = 0\), the probability of \(I_1\) is \(P(I_1) = 1- e^{-1} \approx 0.63\).
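The two probabilities quoted in this example can be reproduced with a few lines. This is only a sketch; the helper name is ours, and it uses the closed-form exponential distribution function.

```python
import math

def exp_prob_interval(a, b=math.inf, mean=1.0):
    """P(a < X <= b) for X exponentially distributed with the given mean."""
    upper = 0.0 if math.isinf(b) else math.exp(-b / mean)
    return math.exp(-a / mean) - upper

# Infinite-length interval (10^20, infinity): exp(-1e20) underflows to 0.0.
p_tail = exp_prob_interval(1e20)
# Unit-length interval (0, 1]: 1 - e^{-1}.
p_unit = exp_prob_interval(0.0, 1.0)

print(p_tail, round(p_unit, 4))
```

The length of an interval is therefore a poor guide to its probability once the prior is not uniform.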

In more detail, let \(\Omega =[a,b]\). Among all continuous distributions F with \(F([a,b])=1\), the continuous version \(H_c(F)\) of the entropy (defined in (15) of Appendix 1) is maximized by the uniform density

$$\begin{aligned} f(x) = \frac{1}{b-a}, \quad a\le x \le b, \end{aligned}$$

when no moment restrictions are imposed. This follows by putting \(d=0\) in (16). On the other hand, when \(\Omega ={{\mathbb {R}}}\) is unbounded, it is necessary to impose at least one moment restriction (\(d\ge 1\)) on F in order for f to be integrable.

For instance, pressure decreasing from the Earth as a function of distance can be approximated by a limiting exponential distribution, but it does not reach the limit. See Sect. 4.1 below.

More formally, these constraints are expressed as \(E[M_i(X)]=\theta _i\) for \(i=1,\ldots ,d\). A known probability \(\theta _i\) for the event E corresponds to choosing \(M_i(x)= {\textbf{1}}(x\in E)\), whereas an ordinary moment restriction corresponds to \(M_i(x)=x^i\). Appendix 1 explains how the maxent distribution F of X is obtained from these constraints.

A random variable X is degenerate if \(P(X = x) = 1\) for some x, that is, if X is constant with probability 1. Its distribution is the maximum entropy distribution for the restriction \(M_i(y) = {\textbf{1}}\left( y\in \{x\}\right)\) with \(\theta _i = 1\). On the other hand, X is a non-degenerate random variable if it is not degenerate.

In fact, no scientific theory has ever been final!

Take, for instance, \(\Omega = \{x_1,x_2,x_3\}\). In the absence of further knowledge, the Shannon entropy H(F) in (14) in Appendix 1 is maximized by a uniform distribution F on \(\Omega\), with \(\pi _1=\pi _2 =\pi _3= 1/3\), and \(\pi _i = F(\{x_i\})\). However, let us assume that \(\pi _1= E[M_1(X)]=1/2=\theta _1\), with \(M_1(x)= {\textbf{1}}(x=x_1)\), represents information that is known to the researcher. Under this constraint H(F) is maximized by \((\pi _1,\pi _2,\pi _3)=(1/2,1/4,1/4)\). Therefore, the knowledge of the probability of the event \(\{x_1\}\), redistributes the remaining probability equally among the events \(\{x_2\}\) and \(\{x_3\}\).
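This small example can be verified by brute force. The sketch below (function names and the grid resolution are our own choices) fixes \(\pi _1 = 1/2\), scans the ways of splitting the remaining probability between \(x_2\) and \(x_3\), and keeps the split with the largest Shannon entropy.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy (in nats) of a finite probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def maxent_given_pi1(pi1, steps=1000):
    """Grid search for the entropy maximizer on three points with pi_1 fixed."""
    best = None
    for k in range(steps + 1):
        pi2 = (1 - pi1) * k / steps
        candidate = (pi1, pi2, 1 - pi1 - pi2)
        if best is None or shannon_entropy(candidate) > shannon_entropy(best):
            best = candidate
    return best

unconstrained = (1 / 3, 1 / 3, 1 / 3)
best = maxent_given_pi1(0.5)
print(best, shannon_entropy(best), shannon_entropy(unconstrained))
```

The constrained maximizer has lower entropy than the uniform distribution, reflecting the information carried by the constraint.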

In this scenario, there is no fine-tuning. For a constant of nature with value x, this corresponds to choosing \(d=1\) and \(M_1(y) = {\textbf{1}}\left( y \in \{x\}\right)\), which in this scenario corresponds to \(\theta _1 = E[M_1(X)] = P(X=x) = 1\) (see footnotes 6 and 7 and (16) in Appendix 1).

This corresponds to choosing \(d=1\) and \(M_1(x)=x\) in (8).

This corresponds to choosing \(d=2\), \(M_1(x)=x^k\) and \(M_2(x)=\ln (x)\) in (8).

In more detail, suppose \(Y_i=G_i(X)\) is a strictly increasing and differentiable transformation of X, with \(g_i(x)=G_i^\prime (x)\), for \(i=1,\ldots ,d\). Then, if F and \(F_i\) refer to the distributions of X and \(Y_i\), and \(f=F^\prime\) is the density of X, it can be shown that

$$H_{c} (F_{i} ) = H_{c} (F) + E[M_{i} (X)], \tag{9}$$

where \(M_i(x)=\log [g_i(x)]\), and \(H_c\) is the continuous entropy of (15) in Appendix 1. The maxent distribution \(F_i\) therefore corresponds to the distribution F that maximizes \(H_c(F)+\theta _i\) when \(\theta _i=E[M_i(X)]\) varies. From this it follows that the maxent distributions of \(F_1,\ldots ,F_d\) are all members of \({{\mathcal {F}}}=\{F(\cdot ;\theta ); \,\,\theta =(\theta _1,\ldots ,\theta _d)\in \Theta \}\), with \(\Theta\) ranging over all permissible constraints on \((E[M_1(X)],\ldots ,E[M_d(X)])\).
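Relation (9) can be checked numerically in a simple case. The sketch below assumes, purely for illustration, that X is exponential with mean 1 and that \(G(x)=x^2\), so that \(g(x)=2x\) and \(Y=G(X)\) follows a Weibull distribution with shape 1/2 and scale 1. The left-hand side uses the standard closed-form Weibull entropy, and the right-hand side adds a midpoint-rule estimate of \(E[\log g(X)]\) to the entropy of X, which equals 1.

```python
import math

EULER_GAMMA = 0.5772156649015329

def expected_log_g(n=500_000, upper=50.0):
    """Midpoint-rule estimate of E[log(2X)] for X ~ Exp(1), truncated at `upper`."""
    step = upper / n
    total = 0.0
    for k in range(n):
        x = (k + 0.5) * step
        total += math.exp(-x) * math.log(2.0 * x)
    return total * step

# Continuous entropy of X ~ Exp(1).
h_x = 1.0

# Y = X**2 is Weibull(shape=1/2, scale=1); its entropy in closed form is
# gamma*(1 - 1/shape) + log(scale/shape) + 1.
shape, scale = 0.5, 1.0
h_y = EULER_GAMMA * (1 - 1 / shape) + math.log(scale / shape) + 1.0

rhs = h_x + expected_log_g()
print(h_y, rhs)
```

Both sides agree up to the discretization error of the integral, and both differ from \(H_c(F)\), which illustrates why the continuous entropy is not invariant under transformations of the data.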

In our motivating example with two constants of nature X and Y, a partition \({\mathcal {P}}\) corresponds to a case when the sample space of Y is countable (\(Y\in \{y_1,y_2,\ldots \}\)) and \(G_i\) is the set of outcomes for which \(Y=y_i\).

References

Lewis, G.F., Barnes, L.A.: A Fortunate Universe: Life in a Finely Tuned Cosmos. Cambridge University Press, Cambridge (2016). https://doi.org/10.1017/9781316661413

Carr, B., Rees, M.J.: The anthropic principle and the structure of the physical world. Nature 278, 605–612 (1979). https://doi.org/10.1038/278605a0

Adams, F.C.: The degree of fine-tuning in our universe—and others. Phys. Rep. 807(15), 1–111 (2019). https://doi.org/10.1016/j.physrep.2019.02.001

McGrew, L., McGrew, T.: On the rational reconstruction of the fine-tuning argument. Philos. Christi 7(2), 423–441 (2005). https://doi.org/10.5840/pc20057235

Barnes, L.A.: Testing the multiverse: Bayes, fine-tuning and typicality. In: Chamcham, K., Silk, J., Barrow, J.D., Saunders, S. (eds.) The Philosophy of Cosmology, pp. 447–466. Cambridge University Press, Cambridge (2017). https://doi.org/10.1017/9781316535783.023

Barnes, L.A.: Fine-tuning in the context of Bayesian theory testing. Eur. J. Philos. Sci. 8(2), 253–269 (2018). https://doi.org/10.1007/s13194-017-0184-2

Barnes, L.A.: A reasonable little question: a formulation of the fine-tuning argument. Ergo 6(42), 1220–1257 (2019–2020). https://doi.org/10.3998/ergo.12405314.0006.042

Collins, R.: The teleological argument: an exploration of the fine-tuning of the universe. In: Craig, W.L., Moreland, J.P. (eds.) Blackwell Companion to Natural Theology, pp. 202–281. Wiley-Blackwell, Chichester (2012). https://doi.org/10.1002/9781444308334.ch4

Tegmark, M., Aguirre, A., Rees, M., Wilczek, F.: Dimensionless constants, cosmology, and other dark matters. Phys. Rev. D 73(2), 023505 (2006). https://doi.org/10.1103/PhysRevD.73.023505

McGrew, T., McGrew, L., Vestrup, E.: Probabilities and the fine-tuning argument: a sceptical view. Mind New Ser. 110(440), 1027–1037 (2001). https://doi.org/10.1093/mind/110.440.1027

Colyvan, M., Garfield, J.L., Priest, G.: Problems with the argument from fine tuning. Synthese 145(3), 325–338 (2005). https://doi.org/10.1007/s11229-005-6195-0

Kolmogorov, A.N.: Foundations of the Theory of Probability, 2nd edn. Dover Publications, Newburyport (2018)

Bernoulli, J.: Ars Conjectandi. Thurneysen Brothers, Basel (1713)

Wolpert, D.H., MacReady, W.G.: No free lunch theorems for search. Technical report SFI-TR-95-02-010, Santa Fe Institute (1995)

Wolpert, D.H., MacReady, W.G.: No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1(1), 67–82 (1997). https://doi.org/10.1109/4235.585893

Jaynes, E.T.: Prior probabilities. IEEE Trans. Syst. Sci. Cybern. 4(3), 227–241 (1968). https://doi.org/10.1109/TSSC.1968.300117

Jaynes, E.T.: Information theory and statistical mechanics. Phys. Rev. 106(4), 620–630 (1957). https://doi.org/10.1103/PhysRev.106.620

Jaynes, E.T.: Information theory and statistical mechanics II. Phys. Rev. 108(2), 171–190 (1957). https://doi.org/10.1103/PhysRev.108.171

Dembski, W.A.: Uniform probability. J. Theor. Probab. 3(4), 611–626 (1990). https://doi.org/10.1007/BF01046100

Billingsley, P.: Convergence of Probability Measures, 2nd edn. Wiley, Hoboken (1999)

Dembski, W.A., Marks, R.J., II.: Conservation of information in search: measuring the cost of success. IEEE Trans. Syst. Man Cybern. A: Syst. Hum. 5(5), 1051–1061 (2009). https://doi.org/10.1109/TSMCA.2009.2025027

Díaz-Pachón, D.A., Marks, R.J., II.: Generalized active information: extensions to unbounded domains. BIO-Complexity 2020(3), 1–6 (2020). https://doi.org/10.5048/BIO-C.2020.3

Park, S.Y., Bera, A.K.: Maximum entropy autoregressive conditional heteroskedasticity model. J. Econom. 150, 219–230 (2009). https://doi.org/10.1016/j.jeconom.2008.12.014

Artstein, S., Ball, K., Barthe, F., Naor, A.: Solution of Shannon’s problem on the monotonicity of entropy. J. Am. Math. Soc. 17, 975–982 (2004). https://doi.org/10.1090/S0894-0347-04-00459-X

Barron, A.R.: Entropy and the central limit theorem. Ann. Probab. 14(1), 336–342 (1986). https://doi.org/10.1214/aop/1176992632

Xue, C., Liu, J.-P., Li, Q., Wu, J.-F., Yang, S.-Q., Liu, Q., Shao, C.-G., Tu, L.-C., Hu, Z.-K., Luo, J.: Precision measurement of the Newtonian gravitational constant. Natl. Sci. Rev. 7(12), 1803–1817 (2020). https://doi.org/10.1093/nsr/nwaa165

Davies, P.: The Accidental Universe. Cambridge University Press, Cambridge (1982)

Díaz-Pachón, D.A., Hössjer, O., Marks, R.J., II.: Is cosmological tuning fine or coarse? J. Cosmol. Astropart. Phys. 2021(07), 020 (2021). https://doi.org/10.1088/1475-7516/2021/07/020

Jaynes, E.T.: Probability Theory: The Logic of Science. Cambridge University Press, Cambridge (2003). https://doi.org/10.1017/CBO9780511790423

Azhar, F., Loeb, A.: Gauging fine-tuning. Phys. Rev. D 98, 103018 (2018). https://doi.org/10.1103/PhysRevD.98.103018

Ellis, G.F.R., Meissner, K.A., Nicolai, H.: The physics of infinity. Nat. Phys. 14, 770–772 (2018). https://doi.org/10.1038/s41567-018-0238-1

Grabiner, J.V.: Who gave you the epsilon? Cauchy and the origins of rigorous calculus. Am. Math. Mon. 91, 185–194 (1983). https://doi.org/10.2307/2975545

de Finetti, B.: Philosophical Lectures on Probability. Springer, Dordrecht (2008)

Feller, W.: An Introduction to Probability Theory and Its Applications, vol. 1, 3rd edn. Wiley, Hoboken (1968)

Feller, W.: An Introduction to Probability Theory and Its Applications, vol. 2, 2nd edn. Wiley, Hoboken (1971)

Doob, J.L.: Stochastic Processes, Revised edn. Wiley-Interscience, New York (1990)

Resnick, S.I.: A Probability Path. Birkhäuser, New York (2014). https://doi.org/10.1007/978-0-8176-8409-9

Walker, S.: New approaches to Bayesian consistency. Ann. Stat. 32(5), 2028–2043 (2004). https://doi.org/10.1214/009053604000000409

Einstein, A.: Über die von der molekularkinetischen Theorie der Wärme geforderte Bewegung von in ruhenden Flüssigkeiten suspendierten Teilchen. Annalen der Physik 322(8), 549–560 (1905). https://doi.org/10.1002/andp.19053220806

Popov, S.: Two-Dimensional Random Walk: From Path Counting to Random Interlacements. Cambridge University Press, Cambridge (2021). https://doi.org/10.1017/9781108680134

Mörters, P., Peres, Y.: Brownian Motion. Cambridge University Press, Cambridge (2010). https://doi.org/10.1017/CBO9780511750489

Hössjer, O., Díaz-Pachón, D.A., Rao, J.S.: A formal framework for knowledge acquisition: going beyond machine learning. Entropy 24(10), 1469 (2022). https://doi.org/10.3390/e24101469

Carroll, L.: What the tortoise said to Achilles. Mind 104(416), 691–693 (1895). https://doi.org/10.1093/mind/104.416.691

Gödel, K.: On Formally Undecidable Propositions of Principia Mathematica and Related Systems. Basic Books, New York (1962)

Hofstadter, D.R.: Gödel, Escher, Bach: An Eternal Golden Braid. Basic Books, New York (1999)

Anderson, P.W.: More is different: broken symmetry and the nature of the hierarchical structure of science. Science 177(4047), 393–396 (1972). https://doi.org/10.1126/science.177.4047.393

Laughlin, R.B., Pines, D.: The theory of everything. Proc. Natl. Acad. Sci. U.S.A. 97(1), 28–31 (2000). https://doi.org/10.1073/pnas.97.1.28

Thorvaldsen, S., Hössjer, O.: Using statistical methods to model the fine-tuning of molecular machines and systems. J. Theor. Biol. 501, 110352 (2020). https://doi.org/10.1016/j.jtbi.2020.110352

Díaz-Pachón, D.A., Hössjer, O.: Assessing, testing and estimating the amount of fine-tuning by means of active information. Entropy 24(10), 1323 (2022). https://doi.org/10.3390/e24101323

Haug, S., Marks, R.J., II., Dembski, W.A.: Exponential contingency explosion: implications for artificial general intelligence. IEEE Trans. Syst. Man Cybern.: Syst. 52(5), 2800–2808 (2022). https://doi.org/10.1109/TSMC.2021.3056669

Koperski, J.: Should we care about fine-tuning? Br. J. Philos. Sci. 56(2), 303–319 (2005). https://doi.org/10.1093/bjps/axi118

Bostrom, N.: Anthropic Bias: Observation Selection Effects in Science and Philosophy. Routledge, London (2002)

McGrew, T.: Fine-tuning and the search for an Archimedean point. Quaestiones Disputatae 8(2), 147–154 (2018). https://doi.org/10.5840/qd2018828

Barnes, L.A.: The fine tuning of the universe for intelligent life. Publ. Astron. Soc. Aust. 29(4), 529–564 (2012). https://doi.org/10.1071/AS12015

Rees, M.J.: Just Six Numbers: The Deep Forces that Shape the Universe. Basic Books, New York (2000)

Secrest, N.J., von Hausegger, S., Rameez, M., Mohayaee, R., Sarkar, S., Colin, J.: A test of the cosmological principle with quasars. Astrophys. J. Lett. 908(2), 51 (2021). https://doi.org/10.3847/2041-8213/abdd40

Sarkar, S.: Heart of darkness. Inference (2022). https://doi.org/10.37282/991819.22.21

Conrad, K.: Probability distributions and maximal entropy (2005). http://www.math.uconn.edu/

Billingsley, P.: Probability and Measure, 3rd edn. Wiley, Hoboken (1995)

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1: Entropy and Maximum Entropy Distributions

Suppose X is a discrete random variable defined on a countable sample space \(\Omega = \{x_1,x_2,\ldots \}\) with distribution F. Then the entropy of F is

$$H(F) = -\sum _{i} \pi _i \log \pi _i, \tag{14}$$

with \(\pi _i=F(\{x_i\})\).

For a continuous random variable X, defined on a subset \(\Omega\) of \({\mathbb {R}}^+\), with density function \(f=F^\prime\), the entropy H(F) is not defined. However, for each \(\delta >0\), the distribution F of X can be approximated by a discrete distribution \(F_\delta\) such that \(F_\delta (\{x_i\}) = F(I_i)\) for \(i=1,2,\ldots\), where \(I_i=((i-1)\delta ,i\delta ]\) and \(x_i\) is the midpoint of \(I_i\). If \(\delta > 0\) is small, we have approximately

$$H(F_\delta ) \approx -\sum _{i} f(x_i)\delta \log \left[ f(x_i)\delta \right] \approx -\int _\Omega f(x)\log f(x)\,dx - \log \delta .$$

Although \(H(F_\delta ) \rightarrow \infty\) as \(\delta \rightarrow 0\), we may use

$$H_c(F) = -\int _\Omega f(x)\log f(x)\,dx \tag{15}$$

as a continuous analogue of the entropy. The motivation of \(H_c\) as a continuous analogue of the entropy is similar for other unbounded sample spaces \(\Omega\), such as \({\mathbb {R}}\), \({\mathbb {R}}^+\times {\mathbb {R}}\), and \({\mathbb {R}}^2\).
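The relationship between the discretized entropy and its continuous analogue can be illustrated numerically. The sketch below assumes an exponential distribution with mean 1 (whose continuous entropy equals 1) and bins of width \(\delta\) as described above; the function name and the truncation point of the sum are our own choices. It shows that \(H(F_\delta ) + \log \delta\) stays close to 1 while \(H(F_\delta )\) itself grows as \(\delta\) shrinks.

```python
import math

def discretized_entropy_exp(delta, mean=1.0, tail=60.0):
    """Shannon entropy of F_delta for an exponential F with bins ((i-1)delta, i*delta].

    The sum is truncated where the remaining mass is about exp(-tail).
    """
    n_bins = int(math.ceil(tail * mean / delta))
    h = 0.0
    for i in range(1, n_bins + 1):
        p = math.exp(-(i - 1) * delta / mean) - math.exp(-i * delta / mean)
        if p > 0.0:
            h -= p * math.log(p)
    return h

H_C_EXP = 1.0  # continuous entropy of the exponential distribution with mean 1

deltas = (0.1, 0.01, 0.001)
raw = {d: discretized_entropy_exp(d) for d in deltas}
corrected = {d: raw[d] + math.log(d) for d in deltas}
for d in deltas:
    print(d, raw[d], corrected[d])
```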

The goal is to find a distribution F that maximizes \(H_c(F)\) subject to a constraint \(\int _\Omega f(x)dx = 1\) and the d additional moment constraints \(E[M_i(X)]=\int _\Omega M_i(x) f(x)dx=\theta _i\) for \(i=1,\ldots ,d\). In particular, ordinary moment constraints correspond to choosing \(M_i(x)=x^i\) as polynomials of x of various orders i. The distribution F with maximal entropy \(H_c\), subject to these \(d+1\) constraints, has a density function

$$f(x) = \frac{1}{Z}\exp \left( \sum _{i=1}^{d} \lambda _i M_i(x)\right) , \quad x\in \Omega , \tag{16}$$

where \(\lambda _1,\ldots ,\lambda _d\) are Lagrange multipliers chosen to satisfy the moment constraints, whereas Z is a normalizing partition function, chosen so that f integrates to 1.
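As an illustration of this construction, consider \(\Omega = {\mathbb {R}}^+\) with the single constraint \(E(X)=\theta\) (that is, \(d=1\) and \(M_1(x)=x\)). A density proportional to \(\exp (\lambda _1 x)\) with \(\lambda _1=-1/\theta\) is the exponential density with mean \(\theta\). The sketch below compares its continuous entropy with that of two other densities on \({\mathbb {R}}^+\) having the same mean. The competitors (uniform and half-normal) are our own choice, and all entropies are standard closed-form expressions.

```python
import math

def entropy_exponential(mean):
    """Continuous entropy of the exponential distribution: 1 + log(mean)."""
    return 1.0 + math.log(mean)

def entropy_uniform_same_mean(mean):
    """Uniform on [0, 2*mean] has the required mean; its entropy is log(2*mean)."""
    return math.log(2.0 * mean)

def entropy_half_normal_same_mean(mean):
    """Half-normal with scale s has mean s*sqrt(2/pi) and entropy 0.5*log(pi*s^2/2) + 0.5."""
    s = mean * math.sqrt(math.pi / 2.0)
    return 0.5 * math.log(math.pi * s * s / 2.0) + 0.5

means = (0.5, 1.0, 3.0)
table = {
    m: (entropy_exponential(m),
        entropy_uniform_same_mean(m),
        entropy_half_normal_same_mean(m))
    for m in means
}
for m, row in table.items():
    print(m, row)
```

For every mean, the exponential density attains the largest entropy among the three, as expected of the maxent solution under a mean constraint.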

Though this is the most common way to find maximum entropy distributions, a more general approach is possible that finds maximum entropy distributions, even in some cases for which Lagrange multipliers do not work [58]. The details go beyond the scope of this paper.

Appendix 2: Conditional Probability

This section shows how the formal construction of conditional expectation avoids problems with infinity.

In the context of tuning, suppose we have two constants of nature, \(X,Y\in {\mathbb {R}}^+\). We want to find the conditional distribution \(x\mapsto F_{X \mid Y}(x) = P(X\le x \mid Y=y)\) of X given an observed value y of Y. This can be formulated as a conditional probability \(P(A \mid G) = P(A \cap G)/P(G)\), where A and G are the sets of outcomes for which \(X\le x\) and \(Y=y\) respectively. However, this formula does not work when Y has a continuous distribution, since then \(P(G)=0\). Given this limitation of the classical notion, a more general definition of conditional probability is needed in order to find the conditional distribution of X given Y. Indeed, “the whole point of [the measure-theoretic treatment of conditional probability] is the systematic development of a notion of conditional probability that covers conditioning with respect to events of probability 0. This is accomplished by conditioning with respect to collections of events—that is, with respect to \(\sigma\)-fields \({\mathcal {G}}\)” [59].

The formal definition of conditional probability is as follows: Given a probability space \((\Sigma ,{{\mathcal {H}}},P)\), a set \(A\in {{\mathcal {H}}}\), and a \(\sigma\)-field \({\mathcal {G}} \subset {\mathcal {H}}\), define the measure \(\nu (G) = P(A \cap G)\) for \(G \in {\mathcal {G}}\). The Radon-Nikodym theorem then guarantees the existence of a function f, \({\mathcal {G}}\)-measurable and integrable with respect to P, such that \(P(A \cap G) = \nu (G) = \int _G fdP\) for all \(G \in {\mathcal {G}}\). This function f is conveniently denoted \(P(A \mid {\mathcal {G}})\). The function \(P(A \mid {\mathcal {G}})\) thus has two properties that define it:

- (i) \(P(A \mid {\mathcal {G}})\) is \({\mathcal {G}}\)-measurable and integrable.

- (ii) \(P(A \mid {\mathcal {G}})\) satisfies the functional equation

$$\begin{aligned} \int _G P(A \mid {\mathcal {G}}) dP = P(A \cap G), \ \ \ \ \ \ \ G \in {\mathcal {G}}. \end{aligned}$$

The important point here is that writing f as \(P(A \mid {\mathcal {G}})\) is just a notational convenience that can be interpreted as follows: when \({\mathcal {G}}\) is generated by a partition \({\mathcal {P}} = \{G_1, G_2, \ldots \}\) of \(\Sigma\), conditioning on \({\mathcal {G}}\) can be seen as performing an experiment whose outcome will determine which event \(G_i\) of the partition occurred (see footnote 14). In general, there is a whole family of random variables satisfying properties (i) and (ii). Such random variables are equal with probability 1 (i.e., they can only be different in a set of zero probability); for this reason, each member of the family is called a version of the others. Thus, \(P(A \mid {\mathcal {G}})\) stands for any member of this family. Therefore, if \(P(G_i) = 0\) for some nonempty \(G_i \in {\mathcal {P}}\), a constant value c must be selected to make \(P(A \mid {\mathcal {G}}) = c\) on \(G_i\). Regardless of the choice of \(c\in [0,1]\), \(P(A \mid {\mathcal {G}})\) can still be considered a probability measure on \((\Sigma ,{{\mathcal {H}}})\) that assigns probabilities to all \(A\in {{\mathcal {H}}}\). That is, a version of the conditional probability is selected for any value of c.
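The role of versions can be made concrete on a finite sample space. The following toy sketch (the sample space, probabilities, partition and event are invented for illustration) builds a function that is constant on each cell of a partition, takes the classical ratio on cells of positive probability, and takes an arbitrary value c on a cell of probability 0. It then checks the functional equation in (ii) on every cell, which by additivity extends to all unions of cells.

```python
from fractions import Fraction as Fr

# Hypothetical finite sample space; outcomes 'd' and 'e' have probability 0.
P = {"a": Fr(1, 4), "b": Fr(1, 4), "c": Fr(1, 2), "d": Fr(0), "e": Fr(0)}
partition = [{"a", "b"}, {"c"}, {"d", "e"}]  # the last cell is a null set
A = {"a", "c", "d"}

def prob(event):
    """Probability of an event given as a set of outcomes."""
    return sum(P[w] for w in event)

def conditional_version(A, partition, c):
    """A version of P(A | G): constant on each cell, value c on null cells."""
    f = {}
    for G in partition:
        value = prob(A & G) / prob(G) if prob(G) > 0 else c
        for w in G:
            f[w] = value
    return f

def satisfies_functional_equation(f, A, partition):
    """Check that the integral of f over G equals P(A ∩ G) for every cell G."""
    return all(sum(f[w] * P[w] for w in G) == prob(A & G) for G in partition)

versions = {c: conditional_version(A, partition, c) for c in (Fr(0), Fr(1, 3), Fr(1))}
checks = {c: satisfies_functional_equation(f, A, partition) for c, f in versions.items()}
print(checks)
```

Every choice of c yields a valid version, and the versions differ only on the null cell.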

However, this interpretation for the notation \(P(A \mid {\mathcal {G}})\) does not hold when \({\mathcal {G}}\) is not generated by a partition of \(\Sigma\), which in our motivating example corresponds to Y having a continuous distribution. Nonetheless, even though the intuition of the conditional probability as the realization of an experiment, with an outcome in \({\mathcal {P}} = \{G_1,G_2,\ldots \}\), is gone, the mathematical formalism stands, independently of the conditioning \(\sigma\)-field. That is, there still exists a family of functions \(\{f\}\) satisfying properties (i) and (ii). As in any area of mathematics, problems would arise when dividing by zero, but the formalism makes it possible to circumvent this situation by selecting a well-defined version of the conditional probability.

Appendix 3: Upper Bounds for Fine-Tuning Probabilities

Let \(x_{\text {obs}} \ne 0\) be the observed value of a constant of nature \(X\in \Omega\) (or a ratio X of two constants of nature), where \(\Omega\) is the sample space. The prior distribution of X has a density \(f=F^\prime\) that belongs to a parametric family

$${{\mathcal {F}}} = \{f(\cdot ;\theta ); \,\,\theta \in \Theta \}, \tag{17}$$

with \(\Theta \subset {\mathbb {R}}^d\) the parameter space to which the hyperparameter \(\theta\) belongs. This framework is more general than (7), since (17) does not assume that the hyperparameters \(\theta _i\) represent moment constraints \(\theta _i=E[M_i(X)]\) of Appendix 1. Let

$$I = \left[ x_{\text {obs}} - \epsilon \vert x_{\text {obs}}\vert , \, x_{\text {obs}} + \epsilon \vert x_{\text {obs}}\vert \right] \tag{18}$$

be the life-permitting interval of X, with \(\epsilon >0\) a small number that quantifies half the relative size of I. For a fixed \(\theta\) the tuning probability is

$$\text {TP}(I;\theta ) = \int _I f(x;\theta )\,dx. \tag{19}$$

Our quantity of interest is a useful approximation of the upper bound

$$\text {TP}_{\text {max}}(I) = \max _{\theta \in \Theta } \text {TP}(I;\theta ) \tag{20}$$

of the tuning probability, assuming that the maximum in (20) is taken over all hyperparameter vectors that appear in (17).

Appendices 3.1–3.5 give explicit approximations of the maximal tuning probability (20) for five different families \({{\mathcal {F}}}\) of prior distributions. The results are then summarized in Table 1 of Appendix 3.6.

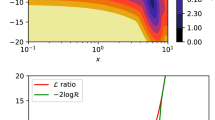

Appendix 3.1: Scale Parameter for \(\Omega = {\mathbb {R}}^+\)

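A scale parameter corresponds to \(d=1\) and \(\theta =\sigma >0\), so that \(\Theta = {\mathbb {R}}^+\). The prior density is

$$f(x;\sigma ) = \frac{1}{\sigma }\, g\left( \frac{x}{\sigma }\right) . \tag{21}$$

A typical example is the family of exponential distributions (\(g(x)=e^{-x}\)). Since

$$\text {TP}(I;\sigma ) = \int _I f(x;\sigma )\,dx \approx 2\epsilon x_{\text {obs}} f(x_{\text {obs}};\sigma ) = 2\epsilon \, \frac{x_{\text {obs}}}{\sigma }\, g\left( \frac{x_{\text {obs}}}{\sigma }\right) , \tag{22}$$

it follows that

$$\text {TP}_{\text {max}}(I) \approx 2\epsilon C_1, \quad C_1 = \max _{x>0} \{x g(x)\}. \tag{23}$$

It is easily seen that \(C_1=e^{-1}\) for the family of exponential distributions.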
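The value \(C_1=e^{-1}\) can be checked against an exact computation. The sketch below assumes the scale-family density \(f(x;\sigma )=g(x/\sigma )/\sigma\) with \(g(x)=e^{-x}\) and a life-permitting interval \([x_{\text {obs}}(1-\epsilon ), x_{\text {obs}}(1+\epsilon )]\). The function names, the grid over \(\sigma\), and the values \(x_{\text {obs}}=1\), \(\epsilon =0.01\) are our own illustrative choices. It maximizes the exact tuning probability over \(\sigma\) and compares the result with \(2\epsilon C_1 = 2\epsilon /e\).

```python
import math

X_OBS, EPS = 1.0, 0.01   # illustrative values, not taken from the paper

def tp_exponential(sigma, x_obs=X_OBS, eps=EPS):
    """Exact P(X in I) for an exponential prior with scale (mean) sigma."""
    lo, hi = x_obs * (1 - eps), x_obs * (1 + eps)
    return math.exp(-lo / sigma) - math.exp(-hi / sigma)

def tp_max_exponential(x_obs=X_OBS, eps=EPS, grid=20_000):
    """Grid search of the tuning probability over sigma in (0, 10*x_obs]."""
    return max(
        tp_exponential(10.0 * x_obs * k / grid, x_obs, eps)
        for k in range(1, grid + 1)
    )

exact = tp_max_exponential()
approx = 2 * EPS * math.exp(-1)   # 2 * eps * C_1 with C_1 = 1/e
print(exact, approx)
```

For small \(\epsilon\) the two numbers agree closely, and the maximum is attained near \(\sigma = x_{\text {obs}}\).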

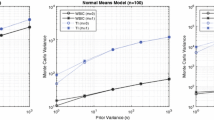

Appendix 3.2: Form and Scale Parameter for \(\Omega = {\mathbb {R}}^+\)

This scenario corresponds to \(d=2\), \(\theta =(\psi ,\sigma )\), where \(\psi >0\) is the form (shape) parameter and \(\sigma >0\) the scale parameter. Consequently

$$f(x;\psi ,\sigma ) = \frac{1}{\sigma }\, g\left( \frac{x}{\sigma };\psi \right) , \tag{24}$$

where \(g(\cdot ;\psi )\) is the density of the distribution with shape parameter \(\psi\) and scale parameter 1. Typical examples are the family of gamma distributions (\(g(x;\psi )=x^{\psi -1}e^{-x}/\Gamma (\psi )\)) or the family of Weibull distributions (\(g(x;\psi )=\psi x^{\psi -1}e^{-x^\psi }\)). The expected value and variance of X are

respectively, for some functions \(h_1\) and \(h_2\) (for instance \(h_1(\psi )=h_2(\psi )=\psi\) for the family of gamma distributions, whereas \(h_1(\psi )=\Gamma (1+1/\psi )\) and \(h_2(\psi )=\Gamma (1+2/\psi )-\Gamma ^2(1+1/\psi )\) for the family of Weibull distributions). Let \(h(\psi )=h_1^2(\psi )/h_2(\psi )\). We consider a parameter space

of hyperparameters such that the signal-to-noise ratio

of the prior distribution is at most S. We will prove that

for some constant \(C_2\) (defined below), whenever S is large and

It follows that essentially, (28) is the condition for the upper bound \(\text {TP}_{\text {max}} (I)\) of the tuning probability to be small.

Proof of (27). Equation (24) implies

from which

follows. Since \(I/x_\text {obs} = [1-\epsilon ,1+\epsilon ]\), we assume, without loss of generality, that \(x_\text {obs}=1\). With this choice of \(x_\text {obs}\),

Moreover, it can be seen that

when S is large, since the maximum of \(x g(x;\psi )\) with respect to x approximately equals \(E(X)g(E(X);\psi )\). For the gamma and Weibull families, we have that \(g(x;\psi )\) is approximately a Gaussian density for large signal-to-noise ratios \(h(\psi )\). This implies

when \(h(\psi )\) is large, where \(\phi (x)=e^{-x^2/2}/\sqrt{2\pi }\) is the density of a standard normal distribution. Inserting (32) into (31) we find (with \(\psi _1 = h^{-1}(S)\)) that

where in the last step we used that \(h_1(\psi _1)/\sqrt{h_2(\psi _1)}=\sqrt{S}\) and \(\phi (0)=1/\sqrt{2\pi }\).
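The Gaussian approximation used in this proof can be probed numerically for the gamma family. The sketch below rests on several assumptions of ours: that the resulting bound takes the form \(2\epsilon \sqrt{S}\,\phi (0) = 2\epsilon \sqrt{S/(2\pi )}\), as suggested by the last step; that the prior density is \(g(x/\sigma ;\psi )/\sigma\); and that \(x_{\text {obs}}=1\), \(S=100\) and \(\epsilon =10^{-3}\). For the gamma family \(h(\psi )=\psi\), so the largest admissible shape is \(\psi =S\), and \(x\,g(x;\psi )\) is maximized at \(x=\psi\), which corresponds to \(\sigma = x_{\text {obs}}/S\).

```python
import math

def gamma_density(x, shape):
    """Density of the gamma distribution with the given shape and scale 1."""
    return math.exp((shape - 1) * math.log(x) - x - math.lgamma(shape))

def tp_gamma(shape, sigma, x_obs=1.0, eps=1e-3, steps=200):
    """Midpoint-rule estimate of P(X in I) for a gamma prior with scale sigma."""
    lo, hi = x_obs * (1 - eps), x_obs * (1 + eps)
    h = (hi - lo) / steps
    total = sum(
        gamma_density((lo + (k + 0.5) * h) / sigma, shape) / sigma
        for k in range(steps)
    )
    return total * h

S, EPS = 100.0, 1e-3   # illustrative values, not taken from the paper

# Shape equal to S (largest signal-to-noise ratio allowed) and sigma = x_obs / S.
tp_numeric = tp_gamma(shape=S, sigma=1.0 / S, eps=EPS)
# Assumed form of the approximate bound: 2 * eps * sqrt(S) * phi(0).
tp_gaussian = 2 * EPS * math.sqrt(S / (2 * math.pi))
print(tp_numeric, tp_gaussian)
```

With these values the two numbers differ by less than one percent, and the product \(\epsilon \sqrt{S}\) is small, which is the regime in which the tuning probability itself is small.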

Appendix 3.3: Scale Parameter for \(\Omega = {\mathbb {R}}\)

This is the same kind of density as in (21), with \(d=1\) and \(\theta =\sigma >0\), so that \(\Theta = {\mathbb {R}}^+\). But g is now a symmetric density defined on the whole real line. Typical examples are the class of double exponential or Laplace distributions (\(g(x)=e^{-\vert x\vert }/2\)), the class of symmetric normal distributions (\(g(x)=\phi (x)\)) and the class of symmetric Cauchy distributions (\(g(x)=1/\left[ \pi \left( 1+x^2\right) \right]\)). It can be seen that

whereas (23) still holds.
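For the three symmetric examples just mentioned, the constant \(C_1=\max _{x>0} \{x g(x)\}\) (the definition recalled in Appendix 3.5) can be evaluated directly. The sketch below uses a plain grid search; the function names and grid settings are our own. It compares the result with the closed-form values \(1/(2e)\), \(\phi (1)\) and \(1/(2\pi )\), all of which are attained at \(x=1\).

```python
import math

def c1(g, upper=50.0, n=50_000):
    """Grid estimate of C_1 = max over x in (0, upper] of x * g(x)."""
    return max((upper * k / n) * g(upper * k / n) for k in range(1, n + 1))

def laplace(x):
    return math.exp(-abs(x)) / 2

def normal(x):
    return math.exp(-x * x / 2) / math.sqrt(2 * math.pi)

def cauchy(x):
    return 1 / (math.pi * (1 + x * x))

constants = {"laplace": c1(laplace), "normal": c1(normal), "cauchy": c1(cauchy)}
closed_form = {
    "laplace": 1 / (2 * math.e),
    "normal": normal(1.0),
    "cauchy": 1 / (2 * math.pi),
}
for name in constants:
    print(name, constants[name], closed_form[name])
```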

Appendix 3.4: Location Parameter for \(\Omega = {\mathbb {R}}\)

A location parameter corresponds to \(d=1\), \(\theta =\mu\), \(\Theta ={\mathbb {R}}\), and

Typical examples are the family of normal distributions with fixed variance \(\sigma ^2\) (\(g(x)=\phi (x/\sigma )/\sigma\)), and the family of shifted Cauchy distributions with a fixed scale \(\sigma\) (\(g(x)=1/\left[ \sigma \pi \left( 1+(x/\sigma )^2\right) \right]\)). Since

it follows that

Appendix 3.5: Location and Scale Parameters for \(\Omega = {\mathbb {R}}\)

The two-parameter location-scale family corresponds to \(d=2\), \(\theta =(\mu ,\sigma )\),

and

If the first two moments of the prior exist and \(X\sim g\) is standardized to have \(E(X)=0\) and \(\text {Var}(X)=1\), then (38) consists of all densities with a signal-to-noise ratio

that is upper bounded by a pre-chosen number S, as in (26). A typical example is the family of normal distributions (\(g(x)=\phi (x)\)). On the other hand, if g corresponds to a Cauchy distribution (\(g(x)=1/\left[ \pi \left( 1+x^2\right) \right]\)) the first two moments of \(X\sim g\) do not exist. Then \(\mu ^2/\sigma ^2\) represents a generalized signal-to-noise ratio, which according to (39) is upper bounded by S. Below we prove that

which holds whenever (28) is satisfied, which is also a condition for the upper bound of the tuning probability to be small. In the transition from (40) to (41) we assumed that g is symmetric, so that \(C_1=\max _{x>0} \{x g(x)\}\), as in Appendix 3.1.

Proof of (40). By a change of variables, it is easily seen that

from which it follows that (29) holds, and we may, without loss of generality, assume \(x_\text {obs}=1\). This gives

where in the second step we substituted \(z=x/\sigma\). We have that

where

whenever \(\mu \ne 0\), and

Insertion of (45) into (44) yields

Finally, inserting (46) into (43), we find that

which proves the desired result. In the last step of (47) we used that the maximum of (47) is obtained when \(\mu\) is such that the two functions within the minimum operator have the same value.

Appendix 3.6: Summary of Results

The upper bounds of the tuning probability are summarized in the following table:

The constants that appear in the rightmost column of the table are

Remark 1

Each proof in this section is based on the supposition \(\epsilon \ll 1\). This can be seen in (22), (30), (33), (35), and (42), since in all these equations \(\epsilon\) is assumed small enough for the prior density of X to be treated as approximately constant over the life-permitting interval \(I_X\).

Remark 2

The cases where \(\text {TP}_{\text {max}} = 1\) in Table 1 are produced because it is possible that the distribution is highly concentrated inside the life-permitting interval \(I_X\). For instance, this happens for the location-scale parameter of Appendix 3.5 when the scale parameter \(\sigma\) converges to zero (or \(S\rightarrow \infty\)).
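The concentration effect described in this remark is easy to visualize with a normal prior centered at the observed value. In the sketch below (our own example, with \(x_{\text {obs}}=1\), \(\epsilon =0.01\) and an arbitrary set of scale values) the tuning probability is \(P(\vert X-x_{\text {obs}}\vert \le \epsilon \vert x_{\text {obs}}\vert )\) for \(X\sim N(x_{\text {obs}},\sigma ^2)\), which has a closed form in terms of the error function.

```python
import math

def tp_normal_centered(sigma, x_obs=1.0, eps=0.01):
    """P(|X - x_obs| <= eps*|x_obs|) for X ~ N(x_obs, sigma^2)."""
    return math.erf(eps * abs(x_obs) / (sigma * math.sqrt(2.0)))

sigmas = (1.0, 1e-1, 1e-2, 1e-3, 1e-4)
tps = {s: tp_normal_centered(s) for s in sigmas}
for s in sigmas:
    print(f"sigma={s:g}: TP={tps[s]:.6f}")
```

The tuning probability is below one percent for \(\sigma =1\) and approaches 1 as \(\sigma\) shrinks toward zero, which is why a bound on the signal-to-noise ratio (or a lower bound on \(\sigma\)) is needed for the upper bound to be informative.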

Remark 3

Since fine-tuning requires a non-degenerate random variable X, its variance must be positive. Therefore, suppose there exists \(\sigma _0>0\) such that \(\text {Var}(X) \ge \sigma _0^2\). This assumption implies that SNR\(=E^2(X)/\text {Var}(X) \ne 0\) when \(E(X)\ne 0\) in Table 1. For this scenario a sufficient condition for fine-tuning is \(\epsilon /\sigma _0 \ll 1\), regardless of \(\text{ SNR }\). However, the requirement \(\text {Var}(X)\ge \sigma _0^2\) is not invariant with respect to scaling of X, and therefore less general than the dimensionless constraint \(\text{ SNR }\le S<\infty\) (which also excludes degenerate priors). Even when the variance does not exist (as in the Cauchy distribution), the fact that a non-degenerate random variable is under scrutiny warrants that the scale parameter \(\sigma\) must be positive (\(\sigma \ge \sigma _0\)), and then the analogous sufficient condition for fine-tuning applies.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Díaz-Pachón, D.A., Hössjer, O. & Marks, R.J. Sometimes Size Does Not Matter. Found Phys 53, 1 (2023). https://doi.org/10.1007/s10701-022-00650-1

DOI: https://doi.org/10.1007/s10701-022-00650-1